Learn American Sign Language (ASL) with AI on Intel Tiber Developer Cloud. Developer Spotlight: Intel Student Ambassadors’ Success Story Using Intel’s AI Tools.

ASL Bridgify

In the post-pandemic world of remote work and virtual experiences, being able to communicate with people who have hearing impairments matters more than ever. That makes a platform for teaching sign language, across its many dialects, important for cross-cultural communication. Yet many video conferencing, e-learning, and entertainment platforms lack this capability.

Who Invented American Sign Language ASL

The “ASL Bridgify” project was created to address this gap and transform ASL instruction. Built by a group of Intel Student Ambassadors and their colleagues at the University of California, Berkeley AI Hackathon (June 22–23, 2024), ASL Bridgify aims to improve education and communication for people with hearing impairments. The AI solution uses the optimized AI frameworks and tools available on Intel Tiber Developer Cloud.

Bridgify in ASL

Their American Sign Language (ASL) learning platform aims to change how ASL is taught through personalized learning paths, extensive AI-driven modules, and real-time feedback.

Motivation

They developed ASL Bridgify to meet the demand for an interactive ASL learning platform based on real-time pose estimation. They expect that remote work and more virtual experiences in the post-pandemic world will increase the need to communicate with people who have hearing impairments. Many video conferencing, education, and entertainment systems lack this functionality. Surprisingly, even Duolingo, the top language learning app, does not offer ASL instruction.

What Is American Sign Language ASL?

What it accomplishes

ASL Bridgify is an online learning resource that specializes in teaching ASL. It provides thorough courses that support language learning with scientifically proven methods, and it supports the learning process with a personalized AI and an intuitive user interface. Because the team recognizes that artificial intelligence can take forms other than chatbots, their AI models are connected to video to track hand movements using TensorFlow and MediaPipe.
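To make the hand-tracking idea concrete, here is a minimal sketch of one common preprocessing step between a hand tracker and a sign classifier. It assumes the tracker reports 21 (x, y, z) landmarks per hand with the wrist at index 0, as MediaPipe Hands does. The function name `normalize_landmarks` and the choice of normalization are illustrative assumptions, not the team's actual pipeline.

```python
import math
from typing import List, Sequence, Tuple

Landmark = Tuple[float, float, float]  # (x, y, z) for one tracked hand point

NUM_HAND_LANDMARKS = 21  # MediaPipe Hands reports 21 landmarks per hand
WRIST = 0                # index of the wrist in MediaPipe's hand model


def normalize_landmarks(landmarks: Sequence[Landmark]) -> List[float]:
    """Hypothetical helper: flatten landmarks into a feature vector that
    does not depend on where the hand is in the frame or how large it is."""
    if len(landmarks) != NUM_HAND_LANDMARKS:
        raise ValueError(
            f"expected {NUM_HAND_LANDMARKS} landmarks, got {len(landmarks)}"
        )

    wx, wy, wz = landmarks[WRIST]
    # Translate so the wrist sits at the origin.
    centered = [(x - wx, y - wy, z - wz) for x, y, z in landmarks]

    # Scale by the landmark farthest from the wrist so hand size cancels out.
    scale = max(math.sqrt(x * x + y * y + z * z) for x, y, z in centered)
    if scale == 0.0:
        raise ValueError("degenerate hand: all landmarks coincide")

    # Flatten to 63 numbers (21 landmarks x 3 coordinates).
    return [coord / scale for point in centered for coord in point]
```

A classifier for ASL letters could then take these 63 values as input, so the same handshape gives the same features whether the learner's hand is near or far from the camera.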

How it was built

The team used a number of technologies to build the educational platform. The frontend uses Next.js, Tailwind, and Supabase. On the backend, they trained all of their LLMs with Python libraries such as PyTorch, TensorFlow, and Keras, using Intel Developer Cloud GPUs and CPUs to speed up training. They connected the frontend and backend with Flask. They also integrated their trained models with the OpenAI API for Retrieval-Augmented Generation (RAG) and with the Google Search API.
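The article does not describe how the RAG step is wired up, so the following is only a sketch of the general pattern: retrieve the most relevant reference text, then place it in the prompt that would be sent to a language model. The lesson notes, the function names, and the simple TF-IDF scoring are all assumptions for illustration. No OpenAI or Google Search call is made here.

```python
import math
import re
from collections import Counter
from typing import Dict, List

# Hypothetical lesson notes standing in for the platform's reference material.
NOTES = [
    "Letter A: closed fist with the thumb resting against the side of the index finger.",
    "Letter B: flat hand, fingers together and pointing up, thumb folded across the palm.",
    "Letter C: fingers and thumb curved into the shape of a C.",
]


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9']+", text.lower())


def build_idf(notes: List[str]) -> Dict[str, float]:
    """Inverse document frequency; words found in every note get weight 0."""
    doc_freq: Counter = Counter()
    for note in notes:
        doc_freq.update(set(tokenize(note)))
    return {term: math.log(len(notes) / df) for term, df in doc_freq.items()}


def vectorize(text: str, idf: Dict[str, float]) -> Dict[str, float]:
    counts = Counter(tokenize(text))
    return {t: c * idf[t] for t, c in counts.items() if idf.get(t, 0.0) > 0.0}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(w * b[t] for t, w in a.items() if t in b)
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(
        sum(w * w for w in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, notes: List[str], k: int = 1) -> List[str]:
    """Return the k notes most similar to the question."""
    idf = build_idf(notes)
    query = vectorize(question, idf)
    ranked = sorted(
        notes, key=lambda n: cosine(query, vectorize(n, idf)), reverse=True
    )
    return ranked[:k]


def build_prompt(question: str, notes: List[str], k: int = 1) -> str:
    """Assemble the augmented prompt that a language model would receive."""
    context = "\n".join(f"- {note}" for note in retrieve(question, notes, k))
    return (
        "Answer using only the lesson notes below.\n\n"
        f"Notes:\n{context}\n\nQuestion: {question}"
    )
```

In a setup like the one described, a Flask route could call `build_prompt` with the learner's question and forward the result to the model API. A production system would more likely use embedding-based retrieval than this word-overlap scoring.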

Obstacles they encountered

The biggest obstacle was time. Even with Intel Developer Cloud GPU resources, training a single large language model took an enormous amount of time. This was a bottleneck because, on a single machine, they could not test any other code until the LLM finished training. Time constraints also kept them from finishing their first attempt at preprocessing words and sentences, which used hand pose to map ASL with an encoder-decoder architecture. They intend to add ASL phrases in the future.

Achievements they are proud of

Using PyTorch on Intel Developer Cloud GPUs, the team successfully trained their first large language models from scratch. They are excited to integrate this success into their frontend. They learned a great deal about AI by implementing three AI tools, each built with a different approach, including building with IPEX and calling an API. Their excitement only grows as they bring an original ASL teaching platform to the world.

About the Intel Student Ambassadors

Sapana Dhakal attends Cerritos College in California

As part of the Intel Student Ambassador Program, each ambassador uses the tools and frameworks of oneAPI and Intel Tiber Developer Cloud to build optimized AI solutions in partnership with developer communities.

About the ASL Bridgify Project

ASL Bridgify is an AI-powered program that provides quick ASL translation in addition to acting as a dynamic instructor. Through extensive AI-driven modules, it offers personalized learning experiences and real-time feedback.

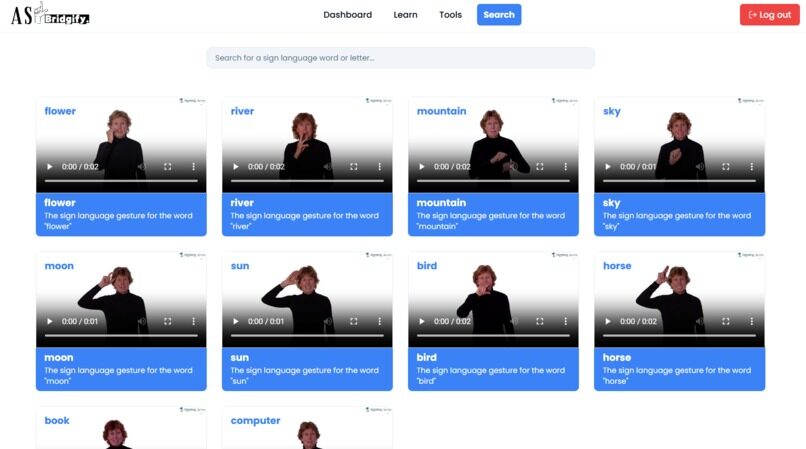

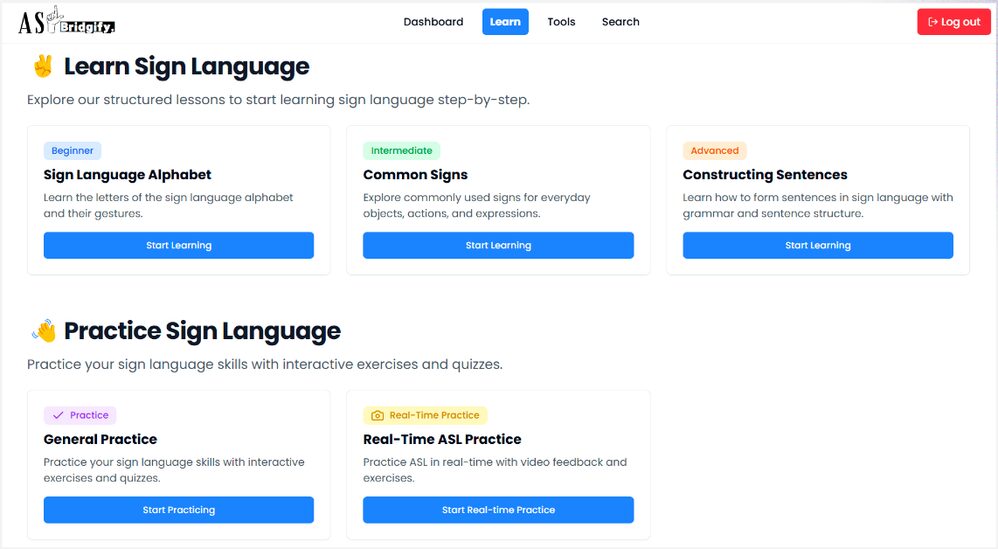

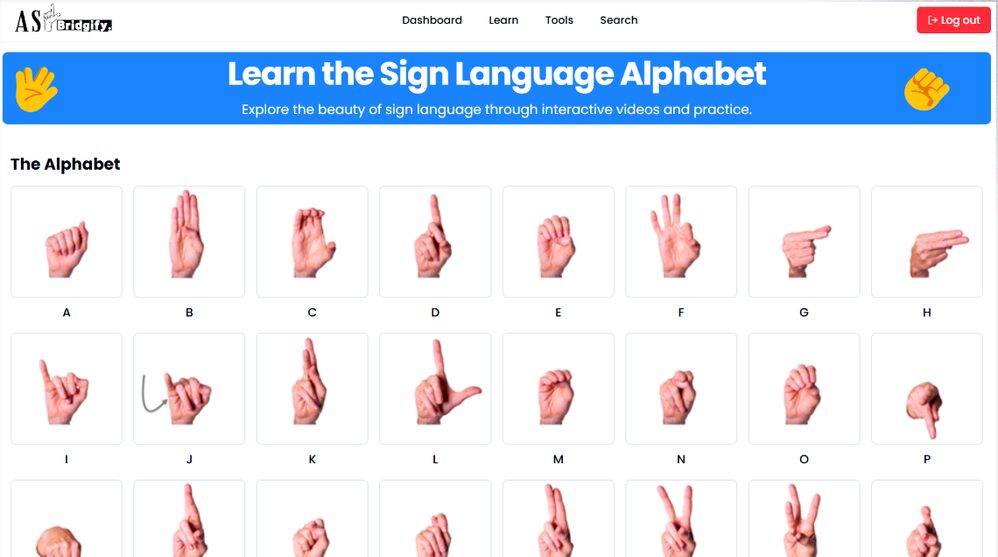

The program offers interactive practice sessions and courses categorized into varying degrees of difficulty, as seen in figure 1 below.

ASL Alphabet Chart

The educational modules appear as follows:

At the UC Berkeley AI Hackathon, ASL Bridgify took home a $25,000 prize from Academic Innovation Catalyst in the “AI for Good” category.

Intel Tools and Technologies Used

Using the pytorch-gpu environment on the Intel Tiber Developer Cloud, the ASL Bridgify team trained their first large language models (LLMs) from scratch. These models were then integrated into an extensive learning platform. To train models for ASL letter and word prediction, they used the Intel Extension for PyTorch and the Intel Extension for TensorFlow.

What Comes Next?

Discover more about ASL Bridgify and a number of other cutting-edge AI projects created at the Berkeley AI Hackathon. Become a member of Intel Tiber Developer Cloud to access the company’s most recent accelerated hardware and software, which are optimized for edge computing, HPC, and artificial intelligence applications. Explore resources for developing AI PCs and learn more about Intel AI PCs.

They also invite you to explore Intel-optimized AI Tools and AI Frameworks for faster AI/ML development on heterogeneous, cross-vendor architectures.