Azure AI Health Bot

The generative AI era is driving demand for chatbots and health copilots that help patients and medical professionals with a range of administrative and clinical tasks. These chatbots can use large language models (LLMs) to create conversational AI experiences that provide accurate and trustworthy information drawn from large bodies of medical data and literature.

In response to this growing demand, many healthcare organizations are building their own healthcare copilot experiences that use generative AI and LLMs to deliver intelligent and engaging chat experiences.

In the process, healthcare organizations have realized that, to meet the industry's specific requirements, they need a way to combine the strengths of generative AI for interactive chat with the strengths of protocol-based flows and customized workflows for delivering correct and relevant information. A hybrid approach that blends the two lets them offer their customers and end users a more tailored and comprehensive service.

In addition, to meet the healthcare industry's high standards, chat interactions in the healthcare domain must use domain-specific models and health-specific safeguards.

To meet these demands, Microsoft is introducing new generative AI safeguards tailored to the healthcare industry in a private preview of the Azure AI Health Bot service. Preview customers can also explore an integration with Microsoft Copilot Studio that helps healthcare organizations build their own copilot experiences.

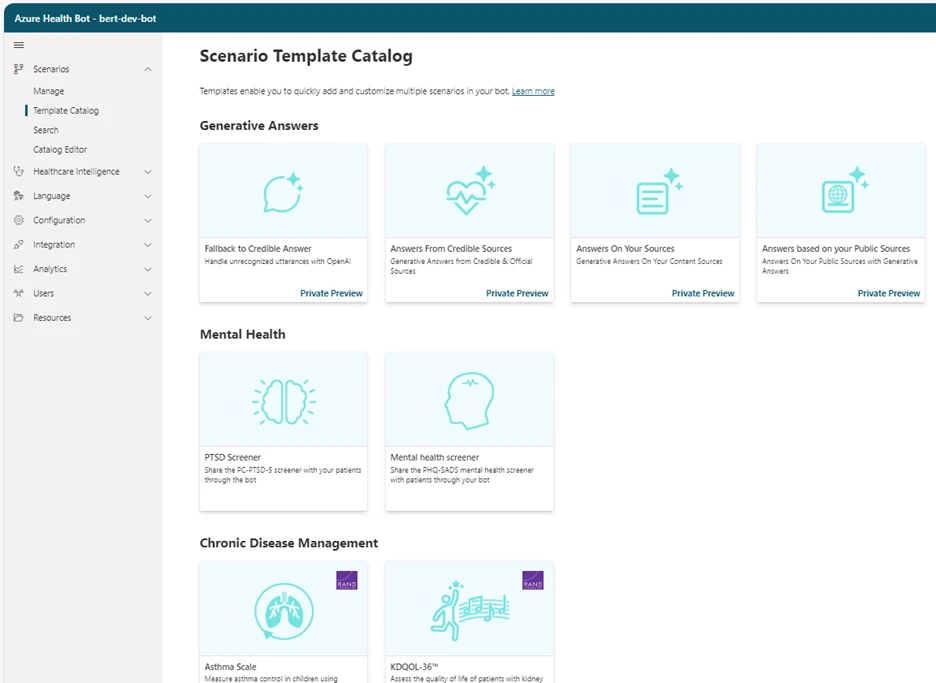

- Reusable healthcare-specific functionality: pre-packaged healthcare intelligence plugins, templates, content, and connectors, as well as pre-built capabilities, use cases, and scenarios.

- Support for the healthcare industry's specific requirements: customers can build copilots for their clinicians and patients, combine protocol-based processes with generative AI-based answers, and stay compliant with current industry standards, guidelines, and protocols.

- Healthcare-specific safeguards: health-specific safeguards and quality standards that help customers build copilots responsibly, fitted to the needs of healthcare.

Capabilities of generative AI

Microsoft released the Azure AI Health Bot preview with Azure OpenAI Service in April 2023, providing generative AI-based fallback answers.

It is now extending those capabilities beyond fallback answers, letting healthcare customers enhance their copilot experiences further with the following features, available in private preview:

- Generative answers based on the customer's own sources. During the copilot experience, these sources are integrated with skills, pre-built protocol-based flows, and custom-authored scenarios. To enable generative answers grounded in their preferred sources, customers can import their own Azure OpenAI Service endpoint and index.

- Generative answers based on the customer's own websites. These sources are searched in real time and can contain a wide range of information, such as frequently asked questions, patient treatments, health publications, medical guidelines, and appointment scheduling details. This approach helps ensure that patients get support across every aspect of their healthcare journey, not only medical advice.

- New healthcare intelligence tools will include generative answers based on credible healthcare sources.

- Seamless use of pre-built, protocol-based healthcare intelligence features such as triage and symptom checkers. A large library of pre-built protocol templates is available for use alongside generative AI-based answers.

- A credible generative AI fallback for healthcare scenarios, designed to produce correct and dependable answers. When no answer is otherwise available, this feature uses credible information to improve responses, giving users trustworthy guidance backed by clinical Retrieval-Augmented Generation (RAG). This helps deliver reliable information in healthcare settings and mitigate potential errors.
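The hybrid approach described above (protocol-based flows first, then generative answers grounded in trusted sources, then a safe fallback) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the Azure AI Health Bot API: the `PROTOCOLS` table, the `TRUSTED_SOURCES` corpus, the keyword-overlap `retrieve` function, and `generate_grounded_answer` (a stand-in for an LLM call) are all hypothetical.

```python
import re
from dataclasses import dataclass

# Hypothetical protocol-based flows keyed by trigger word (not a real Health Bot API).
PROTOCOLS = {
    "symptom": "Starting the symptom checker protocol.",
    "appointment": "Starting the appointment scheduling flow.",
}

# Hypothetical trusted corpus of (source, passage) pairs.
TRUSTED_SOURCES = [
    ("clinic-faq", "Flu vaccines are offered every autumn at all clinic locations."),
    ("clinic-faq", "Visiting hours are 9am to 5pm on weekdays."),
]

STOPWORDS = {"a", "an", "the", "is", "are", "at", "on", "to", "of", "for",
             "what", "when", "do", "you", "i", "my", "can"}


@dataclass
class Reply:
    text: str
    kind: str  # "protocol", "grounded", or "fallback"
    sources: list[str]


def tokens(text: str) -> set[str]:
    """Lowercase word tokens with common stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def retrieve(query: str, min_overlap: int = 2) -> list[tuple[str, str]]:
    """Toy retriever: keep passages sharing at least min_overlap tokens with the query."""
    q = tokens(query)
    return [(src, text) for src, text in TRUSTED_SOURCES
            if len(q & tokens(text)) >= min_overlap]


def generate_grounded_answer(query: str, passages: list[tuple[str, str]]) -> str:
    """Hypothetical stand-in for an LLM call restricted to the retrieved passages.

    A real implementation would prompt a model with both the query and the passages;
    here we simply return the passages with their source tags as citations.
    """
    cited = " ".join(f"{text} [{src}]" for src, text in passages)
    return f"According to trusted sources: {cited}"


def answer(query: str) -> Reply:
    lowered = query.lower()
    # 1. Protocol-based flows take priority for supported intents.
    for trigger, response in PROTOCOLS.items():
        if trigger in lowered:
            return Reply(response, "protocol", [])
    # 2. Otherwise, ground a generative answer in trusted sources (RAG).
    passages = retrieve(query)
    if passages:
        return Reply(generate_grounded_answer(query, passages), "grounded",
                     [src for src, _ in passages])
    # 3. No trusted evidence found: decline rather than let a model guess.
    return Reply("I can't answer that reliably. Please contact your care team.",
                 "fallback", [])


if __name__ == "__main__":
    print(answer("I need to book an appointment").kind)    # protocol
    print(answer("When are flu vaccines offered?").kind)   # grounded
    print(answer("Is ibuprofen safe with warfarin?").kind)  # fallback
```

Checking protocol flows first keeps validated content such as triage deterministic, while declining when retrieval finds no trusted evidence reflects the idea of a fallback that relies only on credible information. A production system would replace the keyword retriever with a search index and the stand-in generator with a model call.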

Integrated security

Built-in healthcare safeguards are now available in private preview for Azure AI Health Bot, which uses generative AI to create copilot experiences tailored to the particular needs and requirements of the healthcare industry. They include:

- Clinical safeguards: detection of hallucinations and omissions in generated answers, enforcement of credible sources, healthcare-adapted filters and quality checks that enable verification of the clinical evidence associated with answers, and more.

- Healthcare chat safeguards: customizable AI disclaimers built into the chat experience shown to users, collection of end-user feedback, engagement analysis through built-in, dedicated reporting, and abuse monitoring tailored to the healthcare industry, among others.

- Healthcare compliance controls: built-in permission management, integrated Data Subject Rights (DSR) support, automatic audit trails, and more.

Customer enthusiasm is rising

Many customers are already using the generative AI capabilities in preview.

With Microsoft Cloud for Healthcare, you can do more with your data

With the Azure AI Health Bot, equipped with generative AI and healthcare safeguards, health organizations can build health data services, transform patient and clinician experiences, uncover new insights through machine learning and artificial intelligence, and confidently manage protected health information (PHI). With Microsoft Cloud for Healthcare, you can prepare your data for the next wave of innovation in healthcare.