Amazon SageMaker HyperPod task governance

AWS has launched Amazon SageMaker HyperPod task governance, a new capability that lets you centrally monitor and optimise GPU and Trainium utilisation across generative AI model development tasks such as training, fine-tuning, and inference.

Customers tell us that they are investing more and more in generative AI projects but struggle to use their limited compute resources effectively. Without dynamic, centralised governance, resources are allocated inefficiently: some projects underuse their compute while others stall waiting for it. Administrators are burdened with constant replanning, data scientists and developers face delays, AI innovations ship late, and wasteful resource use causes cost overruns.

With Amazon SageMaker HyperPod task governance, you can shorten the time to market for AI innovations while avoiding cost overruns caused by underutilised compute resources. In a few steps, administrators can set quotas that govern how compute resources are allocated according to project budgets and task priorities. Data scientists and developers can then create tasks such as model training, fine-tuning, or evaluation, and SageMaker HyperPod automatically schedules and runs them within the allocated quotas.

When high-priority tasks need to run immediately, SageMaker HyperPod task governance automatically frees up compute from lower-priority tasks. It pauses the low-priority training tasks, saves checkpoints, and resumes them later when resources become available. In addition, idle compute within one team's quota can automatically be used to speed up another team's waiting tasks.
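
The preemption behaviour described above can be sketched in a few lines of Python. This is only an illustrative model, not how SageMaker HyperPod is implemented: the `Task` class, the `admit` function, the numeric priorities, and the GPU counts are all hypothetical, and the checkpoint step is represented by nothing more than a state change.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    priority: int            # hypothetical: larger number = more important
    gpus: int                # GPUs the task needs
    state: str = "Pending"   # Pending | Running | Suspended


def admit(new_task, running, capacity):
    """Try to start new_task, suspending lower-priority tasks if needed.

    Returns the list of tasks that were suspended (empty if none were,
    or if new_task could not be placed and stays Pending).
    """
    free = capacity - sum(t.gpus for t in running)
    victims = []
    # Look at running tasks from lowest priority upward.
    for task in sorted(running, key=lambda t: t.priority):
        if free >= new_task.gpus:
            break
        if task.priority >= new_task.priority:
            break  # only strictly lower-priority tasks may be preempted
        victims.append(task)
        free += task.gpus
    if free < new_task.gpus:
        return []  # not enough room even with preemption; change nothing
    for victim in victims:
        victim.state = "Suspended"  # a real system would save a checkpoint here
        running.remove(victim)
    new_task.state = "Running"
    running.append(new_task)
    return victims


running = [Task("evaluation", 1, 4, "Running"), Task("fine-tune", 2, 4, "Running")]
urgent = Task("urgent-training", 3, 4)
suspended = admit(urgent, running, capacity=8)
print([t.name for t in suspended], [t.name for t in running])
```

In this sketch the cluster is full, so the lowest-priority task is suspended to make room for the urgent one. A suspended task would be resubmitted through the same `admit` path once capacity frees up.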

Data scientists and developers can view pending tasks, manage their task queues, and adjust priorities as needed. Administrators can monitor and audit scheduled tasks and compute resource utilisation across teams and projects, and adjust allocations to optimise costs and improve resource availability across the organisation. This approach maximises resource efficiency while promoting the timely completion of critical projects.

Getting started with Amazon SageMaker HyperPod task governance

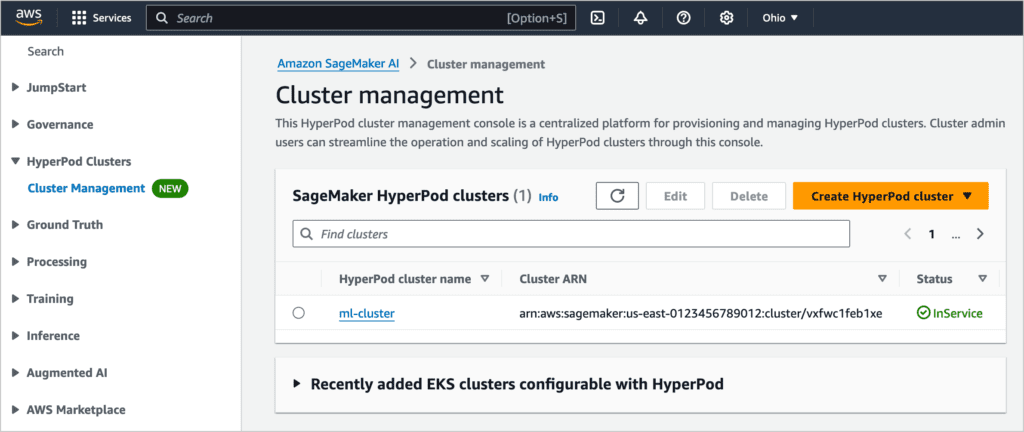

Task governance is available for HyperPod clusters orchestrated by Amazon EKS. To provision and manage clusters, open the Amazon SageMaker AI console and find Cluster Management under HyperPod Clusters. As an administrator, you can use this console to streamline the operation and scaling of your HyperPod clusters.

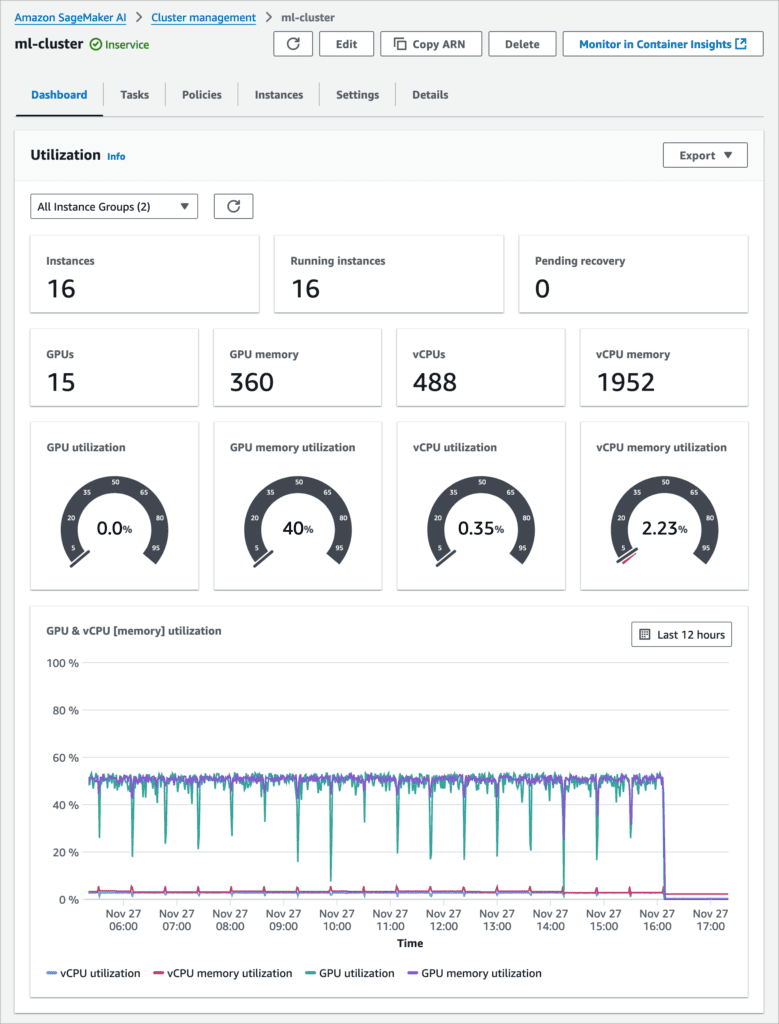

When you select a HyperPod cluster, new Dashboard, Tasks, and Policies tabs appear on the cluster detail page.

New dashboard

The new dashboard summarises cluster utilisation, team-based metrics, and task-based metrics.

First, you can view both point-in-time and trend-based metrics for critical compute resources, including GPU, vCPU, and memory utilisation, across all instance groups.

Next, you can get detailed insights into team-specific resource management, with a focus on GPU utilisation versus compute allocation across teams. You can apply customisable filters for teams and cluster instance groups to analyse metrics such as GPUs/CPUs allocated for tasks, borrowed GPUs/CPUs, and GPU/CPU utilisation.

You can also evaluate task performance and the efficiency of resource allocation using metrics such as the number of active, pending, and preempted tasks, as well as average task runtime and wait time. For comprehensive observability into your SageMaker HyperPod cluster resources and software components, you can integrate with Amazon CloudWatch Container Insights or Amazon Managed Grafana.

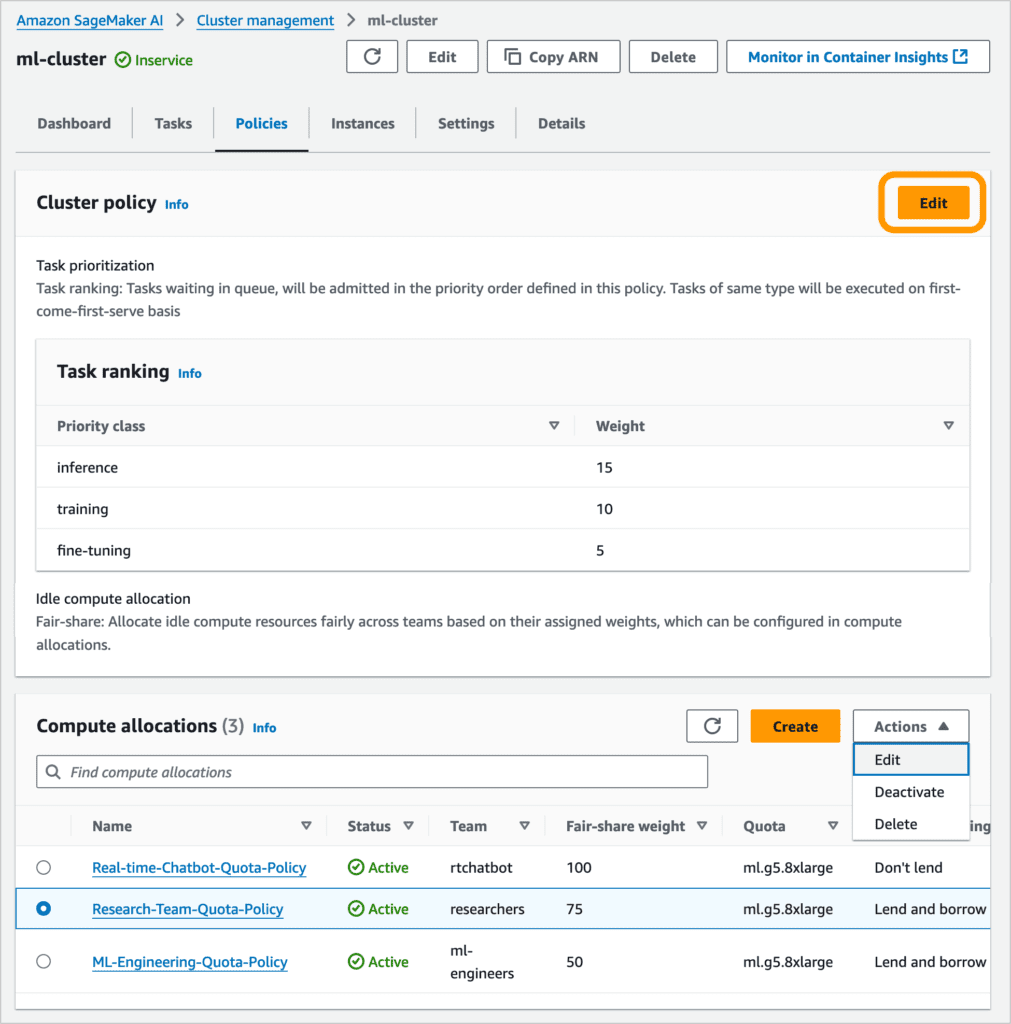

Create and manage a cluster policy

To enable task prioritisation and fair-share resource allocation, you can configure a cluster policy that prioritises critical workloads and distributes idle compute across the teams defined in compute allocations.

In the Cluster policy section, select Edit to set up priority classes and equitable distribution of borrowed compute.

For task prioritisation, you can specify how tasks waiting in the queue are admitted: first-come, first-served (the default) or task ranking. If you choose task ranking, tasks in the queue are admitted in the priority order defined in this cluster policy. Tasks of the same priority class are run on a first-come, first-served basis.
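
The two admission modes can be illustrated with a small Python sketch. The priority class names and weights below are hypothetical, as are the `QueuedTask` class and the `admission_order` function. The sketch also assumes that a larger weight means earlier admission and that ties within a priority class are broken by submission time.

```python
from dataclasses import dataclass


@dataclass
class QueuedTask:
    name: str
    priority_class: str
    submitted_at: int  # submission order; smaller = submitted earlier


# Hypothetical priority classes; a larger weight is admitted first.
PRIORITY_WEIGHTS = {
    "inference-priority": 90,
    "fine-tuning-priority": 50,
    "training-priority": 10,
}


def admission_order(queue, policy):
    """Return the queued tasks in the order they would be admitted."""
    if policy == "first-come-first-served":
        return sorted(queue, key=lambda t: t.submitted_at)
    if policy == "task-ranking":
        # Highest weight first; within a class, earliest submission first.
        return sorted(
            queue,
            key=lambda t: (-PRIORITY_WEIGHTS[t.priority_class], t.submitted_at),
        )
    raise ValueError(f"unknown policy: {policy}")


queue = [
    QueuedTask("pretrain", "training-priority", 1),
    QueuedTask("serve-a", "inference-priority", 2),
    QueuedTask("tune", "fine-tuning-priority", 3),
    QueuedTask("serve-b", "inference-priority", 4),
]
for policy in ("first-come-first-served", "task-ranking"):
    print(policy, [t.name for t in admission_order(queue, policy)])
```

Under task ranking, both inference tasks move ahead of the earlier training task, while keeping their own relative submission order.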

Additionally, you can set up the default distribution of idle compute between teams to be either fair-share or first-come, first-served. Teams can borrow idle compute in the fair-share setup according to their allotted weights, which are set up in relative compute allocations. As a result, each team can expedite their waiting tasks by receiving an equitable amount of idle compute.
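
As a worked example of weight-based sharing, the sketch below splits a pool of idle GPUs in proportion to each team's fair-share weight. It is a simplified model under stated assumptions: the team names, the weights, and the `fair_share` function are hypothetical, weights are integers, and every listed team is assumed to have waiting tasks and to be eligible to borrow.

```python
def fair_share(idle_gpus, weights):
    """Split idle_gpus across teams in proportion to their integer weights.

    Uses largest-remainder rounding so the shares are whole GPUs and
    always add up to idle_gpus.
    """
    total = sum(weights.values())
    if total == 0:
        return {team: 0 for team in weights}
    # Whole-GPU part of each team's proportional share.
    shares = {team: idle_gpus * w // total for team, w in weights.items()}
    # Fractional part left over for each team, kept as an integer remainder.
    remainders = {team: idle_gpus * w % total for team, w in weights.items()}
    leftover = idle_gpus - sum(shares.values())
    # Give the remaining GPUs to the teams with the largest remainders.
    by_remainder = sorted(weights, key=lambda t: remainders[t], reverse=True)
    for team in by_remainder[:leftover]:
        shares[team] += 1
    return shares


print(fair_share(8, {"ml-engineers": 3, "researchers": 1}))
```

With 8 idle GPUs and weights of 3 and 1, the first team may borrow 6 GPUs and the second 2.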

In the Compute allocation section of the Policies page, you can create and edit compute allocations to distribute compute resources among teams, enable settings that let teams lend and borrow idle compute, configure preemption of their own low-priority tasks, and assign fair-share weights to teams.

In the Team section, set a team name; a corresponding Kubernetes namespace is created for your data science and machine learning (ML) teams to use. You can set a fair-share weight for a more equitable distribution of unused capacity across your teams, and enable preemption based on task priority so that higher-priority tasks can preempt lower-priority ones.

In the Compute section, you can assign instance type quotas to teams. To accommodate future growth, you can also allocate quotas for instance types that are not yet available in the cluster.

You can let teams share idle compute resources by allowing them to lend their unused capacity to other teams. This borrowing model is reciprocal: a team can borrow idle compute only if it is also willing to lend its own unused resources to others. You can also set a borrow limit, which lets teams borrow compute resources beyond their allocated quota.
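
The reciprocal lending rule and the borrow limit can be expressed as a short calculation. The sketch below is a simplified model with several assumptions: the `TeamAllocation` class, the strategy names, and the team figures are hypothetical, and the borrow limit is treated as a percentage of the team's own quota.

```python
from dataclasses import dataclass


@dataclass
class TeamAllocation:
    name: str
    quota: int                 # GPUs allocated to the team
    in_use: int                # GPUs its tasks currently occupy
    strategy: str              # "dont-lend" | "lend" | "lend-and-borrow"
    borrow_limit_pct: int = 0  # assumed: percentage of the team's own quota


def max_borrowable(team, all_teams):
    """Upper bound on the GPUs a team may borrow right now."""
    # Reciprocal model: only teams that also lend are allowed to borrow.
    if team.strategy != "lend-and-borrow":
        return 0
    # Idle GPUs offered by the other teams that lend.
    idle_elsewhere = sum(
        max(t.quota - t.in_use, 0)
        for t in all_teams
        if t is not team and t.strategy in ("lend", "lend-and-borrow")
    )
    cap = team.quota * team.borrow_limit_pct // 100
    return min(idle_elsewhere, cap)


teams = [
    TeamAllocation("ml-engineers", quota=8, in_use=8, strategy="lend-and-borrow", borrow_limit_pct=50),
    TeamAllocation("researchers", quota=8, in_use=2, strategy="lend"),
    TeamAllocation("platform", quota=4, in_use=0, strategy="dont-lend"),
]
for team in teams:
    print(team.name, max_borrowable(team, teams))
```

Here the first team could use 6 idle GPUs from the lending team, but its 50 percent limit caps borrowing at 4. The other two teams cannot borrow at all, one because it only lends and the other because it does not lend.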

Run your training task in a SageMaker HyperPod cluster

As a data scientist, you can submit a training job with the HyperPod Command Line Interface (CLI) and use the quota allocated to your team. When you start a job with the HyperPod CLI, you specify the namespace that holds the allocation.

    $ hyperpod start-job --name smpv2-llama2 --namespace hyperpod-ns-ml-engineers
    Successfully created job smpv2-llama2

    $ hyperpod list-jobs --all-namespaces
    {
        "jobs": [
            {
                "Name": "smpv2-llama2",
                "Namespace": "hyperpod-ns-ml-engineers",
                "CreationTime": "2024-09-26T07:13:06Z",
                "State": "Running",
                "Priority": "fine-tuning-priority"
            },
            …
        ]
    }
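
If you want to post-process the job listing in a script, the JSON shown above can be parsed with Python's standard library. This is a minimal sketch: `sample_output` contains only the single job shown in the listing, and the `jobs_in_state` helper is a hypothetical name, not part of the HyperPod CLI.

```python
import json

# Only the one job shown in the listing above; a real cluster would return more.
sample_output = """
{
    "jobs": [
        {
            "Name": "smpv2-llama2",
            "Namespace": "hyperpod-ns-ml-engineers",
            "CreationTime": "2024-09-26T07:13:06Z",
            "State": "Running",
            "Priority": "fine-tuning-priority"
        }
    ]
}
"""


def jobs_in_state(raw_json, state):
    """Return the names of jobs whose State field equals the given state."""
    jobs = json.loads(raw_json).get("jobs", [])
    return [job["Name"] for job in jobs if job.get("State") == state]


print(jobs_in_state(sample_output, "Running"))
```

The same approach works for the other fields in the listing, for example filtering on `Namespace` or `Priority`.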

In the Tasks tab, you can see all tasks in your cluster. Each task has a different priority and capacity requirement according to its policy. If you run a task with a higher priority, the existing lower-priority task is suspended and the higher-priority task runs first.