Palo Alto Networks’ lessons on migrating from Cassandra to Bigtable

In this blog post, we look at how Palo Alto Networks, a leading global cybersecurity company, solved its scalability and performance issues by switching from Apache Cassandra to Bigtable, Google Cloud’s enterprise-grade, low-latency NoSQL database service. The switch delivered 5x lower latency and cut the company’s total cost of ownership in half. Read on to find out how they approached the migration.

Bigtable has long supported both internal systems and external customers at Google. With Bigtable, Google Cloud wants to tackle the most demanding use cases in the industry and reach more developers. Recent Bigtable features mark significant progress in that direction:

- Data Boost: The Bigtable Data Boost technology enables high-performance, workload-isolated, on-demand analytical processing of transactional data. It lets you run queries and ETL jobs and train machine learning models directly on your transactional data, as often as necessary, without interfering with your operational workloads.

- Authorized views: Multiple teams can safely share the same tables and data from your databases, which promotes collaboration and efficient data use.

- Distributed counters: This feature aggregates data at write time so that you can process high-frequency event data, such as clickstreams, directly in your database. It delivers real-time operational metrics and machine learning features continuously and at scale.

- SQL support: With more than 100 SQL functions now built into Bigtable, developers can use their existing skills to take advantage of Bigtable’s scalability and performance.

Because of these improvements and its existing features, Bigtable is the database of choice for a number of business-critical workloads, including Advanced WildFire.

From Cassandra to Bigtable at Palo Alto Networks

Advanced WildFire from Palo Alto Networks is the largest cloud-based malware protection engine in the industry, analyzing more than 1 billion samples per month to protect enterprises from sophisticated and evasive attacks. To do this, it uses more than 22 distinct Google Cloud services across 21 regions. Palo Alto Networks’ Global Verdict Service (GVS), a key component of WildFire, relies on a NoSQL database to process massive volumes of data and must be highly available to maintain service uptime. When WildFire was first built, Apache Cassandra appeared to be a good fit. But as data volumes and performance requirements grew, a number of limitations surfaced:

- Performance bottlenecks: High latency, frequent timeouts, and excessive CPU utilization, usually caused by compaction, affected performance and user experience.

- Operational difficulty: Managing a sizable Cassandra cluster required a high level of overhead and specialized knowledge, which raised management expenses and complexity.

- Challenges with replication: Low-latency replication across geographically separated regions was challenging to achieve, necessitating a sophisticated mesh architecture to reduce lag.

- Scaling challenges: Node updates required a lot of work and downtime, and scaling Cassandra horizontally proved challenging and time-consuming.

To overcome these constraints, Palo Alto Networks decided to migrate GVS from Cassandra to Bigtable. The following Bigtable guarantees drove this choice:

- High availability: Bigtable guarantees nearly continuous operation and maximum uptime with an availability SLA of 99.999%.

- Scalability: Its horizontally scalable architecture offers nearly unlimited scalability, so it can easily handle Palo Alto Networks’ constantly growing data needs.

- Performance: Bigtable provides read and write latency of only a few milliseconds, which greatly enhances user experience and application responsiveness.

- Cost-effectiveness: As a fully managed service, Bigtable lowers operating costs compared with running a large, complex Cassandra cluster.

For Palo Alto Networks, the switch to Bigtable produced outstanding outcomes:

- 5x lower latency: The Bigtable migration reduced latency fivefold, which significantly improved application responsiveness and user experience.

- 50% lower cost: Bigtable’s efficient managed-service approach allowed Palo Alto Networks to cut costs by 50%.

- Improved availability: The availability increased from 99.95% to a remarkable 99.999%, guaranteeing almost continuous uptime and reducing interruptions to services.

- Simpler architecture: Removing the intricate mesh architecture needed for Cassandra replication made the infrastructure simpler and easier to manage.

- Fewer production issues: Production problems dropped by an astounding 95% after the move, which led to smoother operations and fewer interruptions.

- Improved scalability: Bigtable offered 20 times the scale that their prior Cassandra configuration could accommodate, giving them plenty of space to expand.

Fortunately, switching from Cassandra to Bigtable can be a simple procedure. Continue reading to find out how.

The Cassandra to Bigtable migration

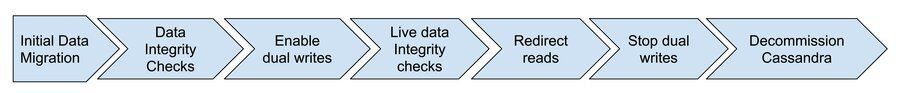

Palo Alto Networks wanted to maintain business continuity and data integrity during the Cassandra to Bigtable migration. The steps of the migration process, which took several months, are outlined below:

Initial data migration:

- Create a Bigtable instance, clusters, and tables to receive the migrated data.

- For each table, extract the data from Cassandra and load it into Bigtable using the data migration tool. Design the row keys with read requests in mind. As a general rule, a table’s Bigtable row key should match its Cassandra primary key.

- Make sure that the column families, data types, and columns in Bigtable correspond to those in Cassandra.

- Continue writing new data to the Cassandra cluster during this phase.
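As an illustration of the row key guidance above, here is a minimal sketch of deriving a Bigtable row key from a Cassandra primary key. The `#` separator, the function name, and the example column values are assumptions made for this example, not details of Palo Alto Networks’ schema.

```python
from typing import Sequence

# Assumed delimiter; choose one that cannot appear inside key values.
SEPARATOR = "#"


def build_row_key(partition_key: Sequence[str],
                  clustering_key: Sequence[str] = ()) -> bytes:
    """Join Cassandra primary-key components into a single Bigtable row key.

    Partition-key columns come first, followed by clustering columns, so the
    row key keeps the same ordering as the Cassandra primary key.
    """
    parts = [*partition_key, *clustering_key]
    for part in parts:
        if not part:
            raise ValueError("key components must be non-empty")
        if SEPARATOR in part:
            raise ValueError(f"key component {part!r} contains the separator")
    return SEPARATOR.join(parts).encode("utf-8")


# Hypothetical table with partition key (sample_hash) and
# clustering column (verdict_source).
row_key = build_row_key(["a1b2c3"], ["sandbox"])
print(row_key)
```

Because Bigtable stores rows in lexicographic order of their row keys, putting the partition key first means that all rows of one Cassandra partition sit next to each other and can be read with a single prefix scan.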

Verification of data integrity:

Using data validation tools or custom scripts, compare the Cassandra and Bigtable data to confirm that the migration was successful. Resolve any discrepancies or inconsistencies found in the data.
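A custom validation script of the kind mentioned above could look like the following sketch. It compares two in-memory dictionaries that stand in for rows exported from Cassandra and from Bigtable; the data layout, function names, and sample rows are assumptions for illustration, and a real script would stream rows from both databases instead.

```python
import hashlib
from typing import Dict, List, Mapping

Row = Mapping[str, str]  # column name -> value


def row_digest(row: Row) -> str:
    """Hash a row's columns in sorted order so column ordering does not matter."""
    h = hashlib.sha256()
    for column in sorted(row):
        h.update(column.encode("utf-8"))
        h.update(b"\x00")
        h.update(row[column].encode("utf-8"))
        h.update(b"\x00")
    return h.hexdigest()


def compare_tables(source: Mapping[str, Row],
                   target: Mapping[str, Row]) -> Dict[str, List[str]]:
    """Report keys that are missing, unexpected, or different in the target."""
    report: Dict[str, List[str]] = {
        "missing_in_target": [],
        "unexpected_in_target": [],
        "mismatched": [],
    }
    for key, row in source.items():
        if key not in target:
            report["missing_in_target"].append(key)
        elif row_digest(row) != row_digest(target[key]):
            report["mismatched"].append(key)
    report["unexpected_in_target"] = [k for k in target if k not in source]
    return report


# Hypothetical sample data keyed by row key.
cassandra_rows = {
    "k1": {"verdict": "benign"},
    "k2": {"verdict": "malware"},
    "k3": {"verdict": "benign"},
}
bigtable_rows = {
    "k1": {"verdict": "benign"},
    "k2": {"verdict": "benign"},
}
report = compare_tables(cassandra_rows, bigtable_rows)
print(report)
```

Comparing digests rather than full rows keeps the report small and makes it cheap to re-run the check on a schedule.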

Enable dual writes:

- Enable dual writes to both Cassandra and Bigtable for every table.

- Use application code to route write requests to both databases.
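Dual writes in application code can be sketched as a thin wrapper around two store clients. The classes below are in-memory stand-ins rather than real Cassandra or Bigtable clients, and the failure-handling policy (Cassandra stays authoritative, failed Bigtable writes are recorded for replay) is an assumption for this example; the post does not describe how partial failures were handled.

```python
from typing import Dict, List, Protocol, Tuple


class Store(Protocol):
    def write(self, key: str, value: str) -> None: ...


class InMemoryStore:
    """Stand-in for a real database client (assumption for this sketch)."""

    def __init__(self, fail: bool = False) -> None:
        self.data: Dict[str, str] = {}
        self.fail = fail

    def write(self, key: str, value: str) -> None:
        if self.fail:
            raise ConnectionError("simulated outage")
        self.data[key] = value


class DualWriter:
    """Send every write to the primary store, then mirror it to the secondary."""

    def __init__(self, primary: Store, secondary: Store) -> None:
        self.primary = primary
        self.secondary = secondary
        self.failed_secondary_writes: List[Tuple[str, str]] = []

    def write(self, key: str, value: str) -> None:
        # A failure on the primary propagates to the caller unchanged.
        self.primary.write(key, value)
        try:
            self.secondary.write(key, value)
        except Exception:
            # Record the write so it can be replayed or caught by integrity checks.
            self.failed_secondary_writes.append((key, value))


cassandra = InMemoryStore()
bigtable = InMemoryStore()
writer = DualWriter(primary=cassandra, secondary=bigtable)
writer.write("sample-1", "benign")
print(cassandra.data == bigtable.data)
```

Keeping a list of failed secondary writes is one simple option; the live integrity checks described next act as a safety net for anything the wrapper misses.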

Live checks for data integrity:

- Run regular data integrity checks on live data with continuously scheduled scripts to make sure that the data in Bigtable and Cassandra stays consistent.

- Track the outcomes of the data integrity checks and look into any anomalies or problems found.

Redirect reads:

- Switch read operations from Cassandra to Bigtable gradually by adding new endpoints to load balancers and/or changing the current application code.

- Keep an eye on read operations’ performance and latency.
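One way to shift reads gradually in application code is percentage-based routing on a stable hash of the key. This is a sketch of that general technique, not a description of how Palo Alto Networks routed traffic; the function names and the 100-bucket scheme are assumptions.

```python
import hashlib


def bucket_for(key: str) -> int:
    """Map a key to a stable bucket in the range [0, 100)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def read_backend(key: str, bigtable_percent: int) -> str:
    """Choose the backend for a read, given the rollout percentage."""
    if not 0 <= bigtable_percent <= 100:
        raise ValueError("bigtable_percent must be between 0 and 100")
    return "bigtable" if bucket_for(key) < bigtable_percent else "cassandra"


# Raising the percentage step by step moves more keys to Bigtable.
for percent in (0, 25, 100):
    routed = sum(read_backend(f"sample-{i}", percent) == "bigtable"
                 for i in range(1000))
    print(percent, routed)
```

Because the bucket depends only on the key, a key that has moved to Bigtable stays there as the percentage increases, which makes latency comparisons between the two backends easier to interpret.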

Cut off dual writes:

After redirecting all read operations to Bigtable, stop writing to Cassandra and make sure that Bigtable receives all write requests.

Decommission Cassandra:

Following the migration of all data and the redirection of read activities to Bigtable, safely terminate the Cassandra cluster.

Tools for migrating existing data

The following tools were employed by Palo Alto Networks throughout the migration process:

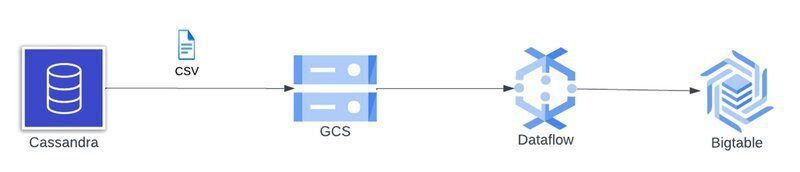

- ‘dsbulk’ utility to dump data: The ‘dsbulk’ tool was used to export data from Cassandra into CSV files. These files were uploaded to Cloud Storage buckets for later use.

- Dataflow pipelines to load data into Bigtable: Dataflow pipelines loaded the CSV files into Bigtable in a test environment.

Because the data migration was critical, Palo Alto Networks also decided to take a two-step approach: first a dry-run migration, and then the final migration. This strategy helped reduce risk and refine the process.

Reasons for a dry-run migration include:

- Test impact: Determine how the ‘dsbulk’ tool affects the live Cassandra cluster, particularly when it is under load, and modify parameters as necessary.

- Issue identification: Find and fix any possible problems related to the enormous amount of data (terabytes).

- Time estimation: Estimate how long the migration will take in order to plan for handling live traffic during the final migration.
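The time estimate from a dry run is simple arithmetic: measure throughput on a partial export and extrapolate to the full data set. The sketch below assumes throughput stays roughly constant, and the figures in it are made-up placeholders, not numbers from Palo Alto Networks’ migration.

```python
GIB = 1024 ** 3
TIB = 1024 ** 4


def estimate_hours(total_bytes: int, measured_bytes: int,
                   measured_seconds: float) -> float:
    """Extrapolate the full migration time from a measured dry-run sample."""
    if measured_bytes <= 0 or measured_seconds <= 0:
        raise ValueError("measurements must be positive")
    throughput = measured_bytes / measured_seconds  # bytes per second
    return total_bytes / throughput / 3600


# Hypothetical dry run: 200 GiB exported in 2 hours, 5 TiB in total.
hours = estimate_hours(total_bytes=5 * TIB,
                       measured_bytes=200 * GIB,
                       measured_seconds=2 * 3600)
print(f"Estimated migration time: {hours:.1f} hours")
```

In practice the estimate is a lower bound, since throttling the export to protect the live Cassandra cluster reduces throughput.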

Once the team was ready, it proceeded to the final migration.

Steps in the final migration:

- Set up pipeline services:

  - Reader service: Reads data from all MySQL servers and publishes it to a Google Cloud Pub/Sub topic.

  - Writer service: Reads data from the Pub/Sub topic and writes it to Bigtable.

- Cut-off time: Establish a cut-off time and carry out the data migration procedure once more.

- Start services: Get the writer and reader services up and running.

- Complete final checks: Verify accuracy and completeness by conducting thorough data integrity checks.

This methodical approach ensures a seamless Cassandra to Bigtable migration, preserving data integrity and minimizing disruption to ongoing business operations. Through careful planning, Palo Alto Networks was able to ensure an efficient and dependable migration at every stage.

Best practices for migrations

Database system migrations are complicated processes that need to be carefully planned and carried out. Palo Alto used the following best practices for their Cassandra to Bigtable migration:

- Data model mapping: Examine your current Cassandra data model and convert it to a suitable Bigtable schema. Bigtable’s flexibility in schema design allows for efficient data representation.

- Data migration tools: Reduce downtime and speed up the data transfer process by using data migration tools such as the open-source “Bigtable cbt” tool.

- Performance tuning: Optimize your Bigtable schema and application code to take full advantage of Bigtable’s capabilities.

- Application code changes: Modify your application code to communicate with the Bigtable API and make use of Bigtable’s unique features.

However, there are a few potential pitfalls to be aware of:

- Schema mismatch: Verify that your Cassandra data model’s data structures and relationships are appropriately reflected in the Bigtable schema.

- Data consistency: Carefully plan and manage the data migration process to prevent data loss and ensure that the data stays consistent.

Prepare for the Bigtable migration

Ready to see the advantages of Bigtable for yourself? Google Cloud now makes a smooth transition from Cassandra to Bigtable possible, using Dataflow as the main dual-write tool. The Apache Cassandra to Bigtable template makes it easier to set up and operate your data replication pipeline. Get started today to realize the potential of a highly scalable, performant, and cost-effective database.