ML Predictions in Spanner based only on SQL and Vertex AI Integration

Do you want to generate ML predictions as quickly as possible, without moving your data or building and maintaining separate applications? What if you could do it all with SQL queries alone, without worrying about performance, security, or scalability? Google Cloud Spanner, a globally distributed, strongly consistent database, has transformed how businesses store and manage data transparently and securely at any scale.

Vertex AI, with its generative AI and machine learning capabilities, has changed how we use data to gain insights and make informed decisions. With Spanner's Vertex AI integration, you can generate predictions from your transactional data with Vertex AI models more quickly and easily than ever before.

This means you no longer need to access the Vertex AI endpoint and the Spanner data separately. Previously, data was retrieved from the database and sent to the model through application modules, an external service, or a function; the results were then either served to the user or written back to the database. Now you can do all of this in a single step, inside the Spanner database where your data lives, using familiar SQL and without going through the application layer.

Integrating Spanner with Vertex AI has several advantages:

- Better performance and lower latency: Spanner communicates with the Vertex AI service directly, eliminating extra round trips between the Spanner client and the Vertex AI service.

- Higher throughput and parallelism: Spanner Vertex AI integration runs on Cloud Spanner's distributed query processing architecture, which supports highly parallel query execution.

- Simple user experience: Supporting both data transformation and ML serving at Spanner scale through a single, simple, familiar SQL interface lowers the barrier to entry for ML and makes for a much smoother user experience.

- Lower costs: Spanner Vertex AI integration uses Spanner compute capacity to combine the results of ML computations with SQL query execution, so you don't need to provision additional compute.

In this blog post, we will look at how to run machine learning predictions from Spanner using a model deployed in Vertex AI. You do this by registering the already deployed model's Vertex AI endpoint in Spanner.

Use case

To demonstrate this functionality, we will use our familiar movie viewer score prediction use case, for which we have already built a model with both Vertex AI and BQML. The movie score model predicts a film's success score (its IMDB rating) on a scale of 1 to 10, based on variables such as runtime, genre, production company, director, cast, and budget. The codelabs with the steps to build this model are linked in the Requirements section below.

Requirements

Google Cloud project: Before you begin, make sure you have a Google Cloud project with billing enabled, that Spanner access control is configured to allow access to Vertex AI endpoints, and that the required APIs (BigQuery, Vertex AI, and BigQuery Connection APIs) are enabled.

ML model: Familiarize yourself with building the movie prediction model with Vertex AI AutoML or BigQuery ML by following the codelabs linked here or here.

Deployed ML model: Create a movie score prediction model and deploy it to a Vertex AI endpoint. This blog provides instructions for creating and deploying the model.

Implementing this use case incurs costs. To do it at no cost, use the BQML model deployed to Vertex AI and choose the Spanner free trial instance for the data.

Implementation

We will use the model trained with Vertex AI AutoML and deployed to a Vertex AI endpoint to predict the movie success score for data stored in Cloud Spanner.

The steps are as follows:

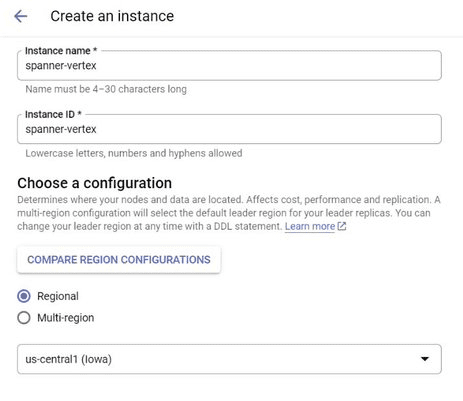

Step 1: Create a Spanner instance

- In the Google Cloud console, search for "Spanner" and select the product.

- Either select the free trial instance or create a new instance.

- Enter the instance name, instance ID, region, and standard configuration details. Choose the region that matches your BigQuery and Vertex AI datasets; in my case, that is us-central1. In this example, the instance is named spanner-vertex.

Step 2: Create a database

From the instance page, create a database by entering the database name and choosing the dialect (Google Standard SQL). You can also create a table here, but we'll leave that for the next step. Click CREATE.

Step 3: Create a table

After the database has been created, go to the database overview page and create the movies table. The table's column names and types must match the schema of the dataset the model was trained on. You can check that schema by going to the Datasets area of the Vertex AI console and selecting the ANALYZE tab of the model's dataset.
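The DDL for the movies table is not reproduced in this post. The following is a minimal sketch of what it could look like, with column names inferred from the test row inserted in Step 4. The column types and STRING lengths are assumptions, not the original DDL, so adjust them to match the schema shown in the ANALYZE tab of your Vertex AI dataset.

```sql
-- Hypothetical DDL: column names follow the test row inserted in Step 4;
-- types and lengths are assumptions, so match them to your model's dataset schema.
CREATE TABLE movies (
  id INT64 NOT NULL,        -- primary key used to look up rows, e.g. 7637
  name STRING(MAX),
  rating STRING(MAX),       -- e.g. 'Not Rated'
  genre STRING(MAX),
  year INT64,
  released STRING(MAX),     -- release date stored as text, e.g. '5/24/2019'
  score FLOAT64,            -- prediction target, NULL until a prediction is written back
  director STRING(MAX),
  writer STRING(MAX),
  star STRING(MAX),
  country STRING(MAX),
  budget FLOAT64,
  company STRING(MAX),
  runtime FLOAT64,
  data_cat STRING(MAX)      -- 'TEST' marks rows used for prediction
) PRIMARY KEY (id);
```

Keeping score nullable lets you insert test rows without a known rating and fill the column in later.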

Step 4: Insert test data

Now that the table exists, let's add a row to serve as test data.

Go to the database overview page and select movies from the list of tables.

On the table page, click Spanner Studio in the left pane to open the studio on the right.

Open the editor tab and run the following statement to insert a record:

```sql
-- score is left NULL: it is the value the model will predict.
-- data_cat = 'TEST' marks this row as test data.
INSERT INTO movies (id, name, rating, genre, year, released, score, director, writer, star, country, budget, company, runtime, data_cat)
VALUES (
  7637, 'India\'s Most Wanted', 'Not Rated', 'Action', 2019, '5/24/2019', NULL,
  'Raj Kumar Gupta', 'Raj Kumar Gupta', 'Arjun Kapoor', 'India', 57531050,
  'Fox STAR Studios', 123, 'TEST'
);
```

Step 5: Register the Vertex AI AutoML model

As noted in the Requirements section, you must already have trained the regression model and deployed it to a Vertex AI endpoint.

If you haven't yet, use the codelab linked in the Requirements section to build the model that predicts the movie success score.

Now that the model is deployed, register it in Spanner so you can use it from SQL in your applications. Run the following statement from the editor tab:

```sql
-- Register the deployed Vertex AI endpoint as a model in Spanner.
CREATE OR REPLACE MODEL movies_score_model
REMOTE OPTIONS (
  endpoint = 'https://us-central1-aiplatform.googleapis.com/v1/projects/<your_project_id>/locations/us-central1/endpoints/<your_model_endpoint_id>'
);
```

Replace <your_project_id> and <your_model_endpoint_id> with the values from your deployed Vertex AI model endpoint. The model should be created within a few seconds.

Keep in mind that your table's schema must match the model's. In this example, we took care of that when creating the table, by making sure the DDL's column names and types matched the schema of the Vertex AI model's dataset. Check out this blog post for the DDL.

Step 6: Generate predictions

With the model registered in Spanner, all that's left is to predict the movie user score for the test row we inserted into the movies table.

Try the following query:

```sql
SELECT * FROM ML.PREDICT(MODEL movies_score_model, (SELECT * FROM movies WHERE id = 7637));
```

ML.PREDICT sends the input rows to the registered model and returns its predictions. It takes two arguments: the model name and the input data for the prediction.

In our scenario, the subquery retrieves the test row we inserted into the movies table. Filtering on id = 7637 selects just that row; filtering on data_cat = 'TEST' instead would select every row marked as test data.

That's it. The prediction for your test data appears in the value column of the result.
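Because the second argument is an ordinary subquery, the same call can score every test row at once. The sketch below filters on data_cat = 'TEST' and returns only a few columns. It assumes, as the SELECT * query in this step suggests, that ML.PREDICT returns the input columns alongside the model's value column.

```sql
-- Predict for every row marked as test data and return only the
-- identifying columns plus the model output (value).
SELECT id, name, value
FROM ML.PREDICT(
  MODEL movies_score_model,
  (SELECT * FROM movies WHERE data_cat = 'TEST')
);
```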

Step 7: Write the predicted results back to Spanner

You can also write the prediction result directly into the table, which is useful when your application needs to update the target in real time. To update the score as part of the prediction, run the following statement:

```sql
-- Store the model's prediction in the score column of the test row.
UPDATE movies
SET score = (
  SELECT value
  FROM ML.PREDICT(MODEL movies_score_model, (SELECT * FROM movies WHERE id = 7637))
)
WHERE id = 7637;
```
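To confirm the write-back, read the row again. The score column, which was NULL when the row was inserted, should now hold the predicted value. This is a plain query that relies only on the table and column names used in this post.

```sql
-- Check that the predicted score was written to the test row.
SELECT id, name, score
FROM movies
WHERE id = 7637;
```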
