Introducing Vertex AI RAG Engine: Easily scale your Vertex AI RAG workflow

Deploying generative AI in the enterprise successfully requires closing the gap between impressive model demos and real-world performance. Although generative AI has great potential across industries, this perceived gap can make it difficult for many developers and businesses to “productionize” AI. This is where retrieval-augmented generation (RAG) becomes indispensable: it strengthens your enterprise applications by building trust in their AI outputs.

Vertex AI RAG Engine, a fully managed service that helps you build and deploy RAG implementations with your own data and methods, is now generally available. With Vertex AI RAG Engine on Google Cloud, you can do the following:

Adapt to any architecture

Select the data sources, vector databases, and models that best suit your use case. Because of its adaptability, RAG Engine will work with your current infrastructure rather than requiring you to change it.

Evolve with your use case

Add new data sources, update models, or adjust retrieval settings through simple configuration changes. As you grow, the system adapts to new requirements while remaining consistent.

Evaluate in simple steps

Set up several RAG engines with different configurations to find out which one best suits your use case.
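One way to compare configurations is to score each one against a small set of questions whose correct source document is already known. The sketch below is only an illustration under that assumption: `Retriever`, `hit_rate`, and `compare_configurations` are hypothetical names, not part of RAG Engine, and each "configuration" is modelled as a plain function that returns ranked document IDs for a query.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical shape: a configured retriever maps a query to ranked document IDs.
Retriever = Callable[[str], List[str]]
# Each evaluation example pairs a query with the ID of the document that answers it.
EvalSet = List[Tuple[str, str]]


def hit_rate(retriever: Retriever, eval_set: EvalSet) -> float:
    """Fraction of queries whose expected document appears in the results."""
    if not eval_set:
        return 0.0
    hits = sum(1 for query, expected in eval_set if expected in retriever(query))
    return hits / len(eval_set)


def compare_configurations(
    retrievers: Dict[str, Retriever], eval_set: EvalSet
) -> Dict[str, float]:
    """Score every named configuration on the same evaluation set."""
    return {name: hit_rate(r, eval_set) for name, r in retrievers.items()}
```

The highest-scoring configuration can then be picked with `max(scores, key=scores.get)`. Hit rate is only one possible metric, and a real evaluation would likely also look at answer quality.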

Vertex AI RAG Engine is now available

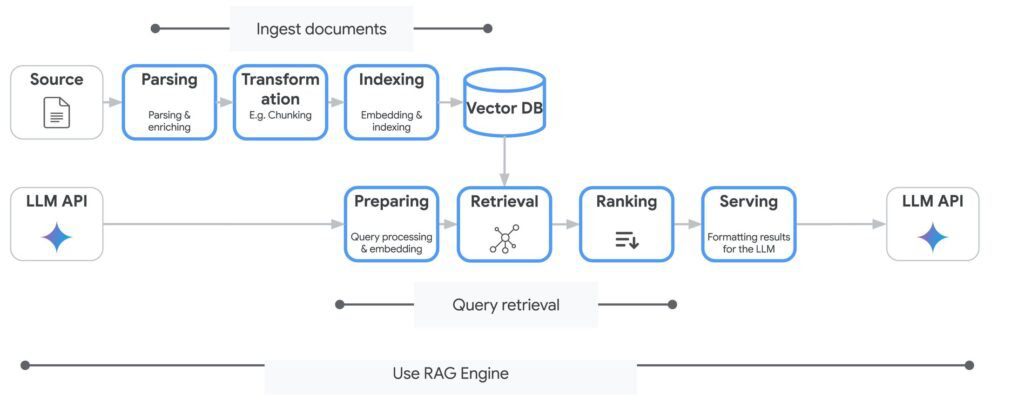

With Vertex AI RAG Engine, a managed service, you can build and deploy RAG using your own data and methods. Think of it as having a team of experts who have already solved hard infrastructure problems such as efficient vector storage, intelligent chunking, optimal retrieval strategies, and precise augmentation, while still letting you customise everything for your own use case.

The RAG Engine from Vertex AI provides a thriving ecosystem with a variety of choices to meet a range of requirements.

DIY capabilities

DIY RAG lets users tailor their solutions by mixing and matching different components. With an easy-to-use API, it works well for low-to-medium complexity use cases, enabling fast experimentation, proof-of-concept development, and RAG-based applications with only a few clicks.

Search capabilities

One notable example of a strong, fully managed solution is Vertex AI Search. It offers superb out-of-the-box quality, is easy to use, requires little maintenance, and covers simple to complex use cases.

Connectors

A growing set of connectors lets you easily connect to local files, Jira, Slack, Cloud Storage, and Google Drive. RAG Engine provides an easy-to-use interface for managing the ingestion process, even across multiple sources.

Customisation

One of the distinguishing features of Vertex AI RAG Engine is how customisable it is. This flexibility lets you tune individual components to fit your data and use case precisely.

Parsing

Documents are split into chunks when they are ingested into an index. To accommodate different document formats, RAG Engine lets you adjust chunk size, chunk overlap, and chunking strategy.
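To make chunk size and chunk overlap concrete, here is a minimal sketch of fixed-size chunking with overlap. It assumes whitespace-separated words as the unit of length, which is a simplification, and `chunk_words` is a hypothetical helper, not the RAG Engine implementation.

```python
from typing import List


def chunk_words(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Split text into chunks of at most chunk_size words.

    Consecutive chunks share chunk_overlap words, so content that straddles
    a chunk boundary still appears whole in at least one chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    words = text.split()
    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks
```

For example, `chunk_words("a b c d e f g h i j", chunk_size=4, chunk_overlap=1)` advances three words at a time, so each chunk begins with the last word of the previous one. Larger overlap preserves more context across boundaries at the cost of storing more redundant text.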

Retrieval

You may already use Pinecone, or you may prefer Weaviate’s open-source capabilities. Perhaps you want to use Vertex AI Vector Search or another Google Cloud vector database. RAG Engine works with your choice or, if you prefer, can manage vector storage for you entirely. This flexibility means you are never locked into a single approach as your needs change.
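The idea of swapping vector databases without changing the rest of the pipeline can be sketched with a small interface. Everything here is an assumption made for illustration: `VectorStore`, `InMemoryVectorStore`, and `retrieve` are hypothetical names, and the in-memory class stands in for a real backend such as Pinecone, Weaviate, or Vertex AI Vector Search, whose client APIs are not shown.

```python
import math
from typing import Dict, List, Protocol, Sequence, Tuple


class VectorStore(Protocol):
    """Hypothetical minimal interface that any backend adapter would satisfy."""

    def upsert(self, doc_id: str, vector: Sequence[float]) -> None: ...

    def query(self, vector: Sequence[float], top_k: int) -> List[Tuple[str, float]]: ...


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Toy backend that scans every stored vector (fine for demos only)."""

    def __init__(self) -> None:
        self._vectors: Dict[str, List[float]] = {}

    def upsert(self, doc_id: str, vector: Sequence[float]) -> None:
        self._vectors[doc_id] = list(vector)

    def query(self, vector: Sequence[float], top_k: int) -> List[Tuple[str, float]]:
        scored = [
            (doc_id, cosine_similarity(vector, stored))
            for doc_id, stored in self._vectors.items()
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


def retrieve(store: VectorStore, query_vector: Sequence[float], top_k: int = 2) -> List[str]:
    """Pipeline code depends only on the interface, not on a specific backend."""
    return [doc_id for doc_id, _ in store.query(query_vector, top_k)]
```

Because `retrieve` only relies on the `VectorStore` interface, replacing the in-memory store with an adapter for another database would not require changes to the calling code.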

Generation

Vertex AI Model Garden offers hundreds of LLMs to choose from, including Google’s Gemini as well as Llama and Claude.

Use Vertex AI RAG as a tool in Gemini

Vertex AI RAG Engine is natively integrated with the Gemini API as a tool. You can use RAG to build grounded conversations with contextually relevant answers. Simply set up a RAG retrieval tool with specific settings, such as the number of documents to retrieve and whether to use an LLM-based ranker. This tool is then passed to a Gemini model.
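The two settings mentioned above, the number of documents to retrieve and an optional ranker, can be illustrated with a library-free sketch. This is not the Vertex AI SDK: `RetrievalToolConfig`, `retrieve_context`, and `build_prompt` are hypothetical names, keyword overlap stands in for vector search, and the `ranker` callable stands in for an LLM-based ranker.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical ranker signature: (query, candidates) -> reordered candidates.
Ranker = Callable[[str, List[str]], List[str]]


@dataclass
class RetrievalToolConfig:
    top_k: int = 3            # how many documents to hand to the model
    use_ranker: bool = False  # whether to rerank candidates before truncating


def keyword_score(query: str, document: str) -> int:
    """Stand-in relevance score: number of distinct query terms in the document."""
    return len(set(query.lower().split()) & set(document.lower().split()))


def retrieve_context(
    query: str,
    corpus: List[str],
    config: RetrievalToolConfig,
    ranker: Optional[Ranker] = None,
) -> List[str]:
    """First-stage retrieval, optional reranking, then truncation to top_k."""
    candidates = sorted(corpus, key=lambda d: keyword_score(query, d), reverse=True)
    candidates = [d for d in candidates if keyword_score(query, d) > 0]
    if config.use_ranker:
        if ranker is None:
            raise ValueError("use_ranker=True requires a ranker")
        candidates = ranker(query, candidates)
    return candidates[: config.top_k]


def build_prompt(query: str, context: List[str]) -> str:
    """Ground the model by placing retrieved passages ahead of the question."""
    passages = "\n".join(f"- {passage}" for passage in context)
    return f"Answer using only this context:\n{passages}\n\nQuestion: {query}"
```

The prompt built from the retrieved passages is what would be sent to the generation model. Reranking before truncation matters because a ranker can promote a passage that first-stage retrieval scored too low to survive the `top_k` cut.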

Use Vertex AI Search as a retriever

Within your Vertex AI RAG Engine applications, Vertex AI Search offers a way to manage and retrieve data. You may increase scalability, speed, and integration simplicity by utilising Vertex AI Search as your retrieval backend.

Improved scalability and performance

Thanks to its architecture, Vertex AI Search can handle large volumes of data with very low latency. As a result, your RAG applications perform better and respond faster, particularly when working with large or complex knowledge bases.

Data handling made easier

You may expedite your data ingestion process by importing your data from several sources, including websites, BigQuery datasets, and Cloud Storage buckets.

Smooth integration

Thanks to Vertex AI’s built-in integration with Vertex AI Search, you can choose Vertex AI Search as the corpus backend for your RAG application. This streamlines the integration process and helps ensure good compatibility between components.

Better quality of LLM output

By using Vertex AI Search’s retrieval capabilities, you can help ensure that your RAG application pulls the most relevant information from your corpus. This leads to more accurate and informative LLM-generated output.