As AI is incorporated into more sectors and use cases, its effectiveness depends on producing dependable results. Trustworthy AI has therefore grown in value as a commercial asset.

Building trustworthy AI requires key components that ensure reliable inputs and outputs. This article examines the roles of accountability, explainability, transparency, data lineage, and security in creating trustworthy AI systems. Each of these components supports the data integrity and dependability crucial to AI’s success, with hard drives serving as the storage foundation needed to deliver these benefits continuously.

Trustworthy AI refers to AI data pipelines that use trustworthy inputs and produce trustworthy insights. Its foundation is data that meets the following requirements:

- High quality and accuracy

- Verified provenance, ownership, and legitimacy

- Secure storage and protection

- Traceable, explainable changes made by the algorithm

- Dependable, consistent results from data processing

Scalable storage infrastructure supports trustworthy AI by making it easier to manage, store, and protect the massive volumes of data that AI systems need.

Trustworthy AI in large-scale data centres

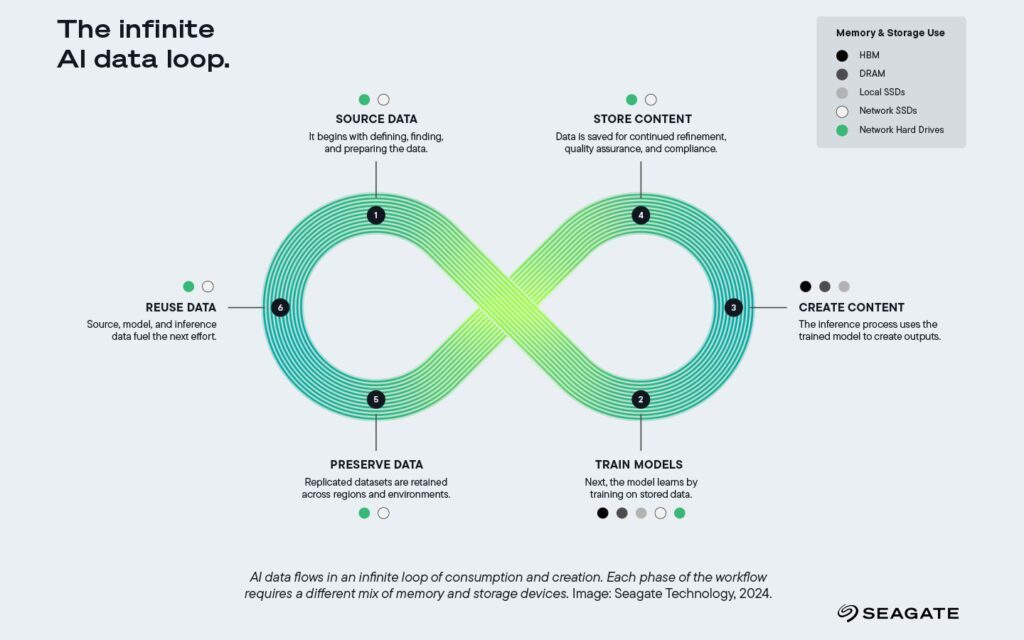

AI operations involve large volumes of data and need robust infrastructure to handle them well. Data centres supporting AI workloads use scalable storage clusters that provide object stores and data lakes for these enormous volumes. This infrastructure supports the complete AI data loop, from ingesting raw data to saving model outputs for later use.

Because the ability to store and retrieve enormous datasets is essential to AI’s success, its potential would be constrained without the scale and efficiency of data centres.

Modern AI-optimised systems balance compute, storage, and networking layers. AI environments built on data lakes and object stores, which frequently span multiple storage tiers, make high-performance computing at scale possible. Storage infrastructure is essential for giving AI systems access to both archived and urgently needed data, so massive scalability is a key consideration in AI architecture design. The trade-off between storage capacity and performance determines how effectively AI systems function and how well they scale in response to demand.
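To make the capacity and performance trade-off a little more concrete, here is a minimal Python sketch of an age-based tiering rule. The tier names, the seven- and ninety-day thresholds, and the `StoredObject` type are all hypothetical; real tiering policies usually weigh more factors, such as access frequency, object size, and cost.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical thresholds; real tiering policies are workload-specific.
HOT_WINDOW = timedelta(days=7)
WARM_WINDOW = timedelta(days=90)


@dataclass
class StoredObject:
    key: str
    last_access: datetime


def choose_tier(obj: StoredObject, now: datetime) -> str:
    """Pick a storage tier based on how recently the object was accessed."""
    age = now - obj.last_access
    if age <= HOT_WINDOW:
        return "hot"      # e.g. fast media for data in active use
    if age <= WARM_WINDOW:
        return "warm"     # e.g. high-capacity hard drives in the object store
    return "archive"      # e.g. cold storage for raw data and old checkpoints


def plan_tiers(objects: list[StoredObject], now: datetime) -> dict[str, list[str]]:
    """Group object keys by the tier each one should live on."""
    plan: dict[str, list[str]] = {"hot": [], "warm": [], "archive": []}
    for obj in objects:
        plan[choose_tier(obj, now)].append(obj.key)
    return plan
```

Keeping only recently used data on the fastest tier lets the bulk of the dataset sit on cheaper, higher-capacity media while remaining retrievable.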

Key elements of trustworthy AI

Scalable design alone, however, is not enough. Trustworthy AI also requires transparency, data lineage, explainability, accountability, and security.

Transparency: making AI understandable

Transparency at scale is essential for reliable AI. It ensures that an AI system’s judgements are understandable, accessible, reproducible, and correctable. For instance, transparency lets consumers understand why an AI system recommended a particular movie by showing that the recommendation is based on visible data, such as the user’s viewing interests and history.
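The movie example can be sketched in a few lines of Python. This is a deliberately simple, genre-counting toy rather than a real recommendation technique, and the catalogue, genre tags, and `recommend_with_reason` function are hypothetical. The point is that the system returns its evidence (the watched titles that drove the choice) alongside the recommendation itself.

```python
from collections import Counter

# Hypothetical catalogue mapping titles to genre tags, for illustration only.
CATALOGUE = {
    "Alien": {"sci-fi", "horror"},
    "Arrival": {"sci-fi", "drama"},
    "Heat": {"crime", "thriller"},
    "Interstellar": {"sci-fi", "drama"},
}


def recommend_with_reason(history: list[str]) -> tuple[str, list[str]]:
    """Return an unwatched title plus the watched titles that explain the choice."""
    genre_counts = Counter(g for title in history for g in CATALOGUE[title])
    candidates = [t for t in CATALOGUE if t not in history]
    if not candidates:
        raise ValueError("nothing left to recommend")
    # Score each candidate by how often its genres appear in the watch history;
    # ties go to the first candidate in catalogue order.
    best = max(candidates, key=lambda t: sum(genre_counts[g] for g in CATALOGUE[t]))
    # The explanation: watched titles sharing at least one genre with the pick.
    reasons = [t for t in history if CATALOGUE[t] & CATALOGUE[best]]
    return best, reasons
```

For a user who watched "Arrival" and "Interstellar", the sketch recommends "Alien" and cites both watched titles as the reason, so the user can see exactly which data the suggestion rests on.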

Scalable storage clusters in data centres support transparency by providing clear documentation of each decision-making stage in the AI data lifecycle. Organisations can use this infrastructure to track data from its source through processing to output, which increases accountability.

Transparency matters across many industries. Three examples:

- In healthcare, AI systems evaluate medical images to help detect diseases early. Data transparency helps improve diagnostic accuracy.

- In finance, trading algorithms process and filter market data more quickly, helping portfolio managers better understand and refine investment strategies. Reliable data can improve investment returns.

- In customer service, chatbots use natural language processing (NLP) to answer consumer enquiries. Transparent data lineage holds businesses accountable for the answers their chatbots give.

By making data, decisions, and model outputs more understandable, transparency encourages greater accountability.

Data lineage: tracking the origins and applications of data

Data lineage is the ability to determine where information comes from and how it is used throughout the AI process. It is essential for understanding how models reach their judgements.

In healthcare AI models, for instance, data lineage identifies the information sources and traces which datasets were used to reach a diagnosis.

Data lineage provides a transparent record of the path data takes from input to output, letting businesses confirm the source and use of datasets and ensure that AI models are built on reliable data. By tracking data through every processing step, lineage makes AI systems fully auditable, supporting both corporate accountability and regulatory compliance. Hard drives support data lineage by securely recording every change, letting developers examine historical data records that document the full range of AI decision-making processes.
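A lineage record can be modelled as a graph in which each artefact points to the artefacts it was derived from. The Python sketch below uses a hypothetical `LINEAGE` mapping with invented artefact names and walks that graph breadth-first to answer the healthcare question: which original datasets fed a given diagnostic model?

```python
from collections import deque

# Hypothetical lineage graph: each artefact maps to the artefacts it was derived from.
LINEAGE = {
    "diagnosis_model_v2": ["train_set_v2"],
    "train_set_v2": ["scans_hospital_a", "scans_hospital_b", "labels_v3"],
    "labels_v3": ["radiologist_annotations"],
}


def upstream_sources(artefact: str, lineage: dict[str, list[str]]) -> set[str]:
    """Return every ancestor of `artefact` by walking the lineage graph breadth-first."""
    seen: set[str] = set()
    queue = deque(lineage.get(artefact, []))
    while queue:
        parent = queue.popleft()
        if parent in seen:
            continue  # already visited via another path
        seen.add(parent)
        queue.extend(lineage.get(parent, []))
    return seen


def raw_inputs(artefact: str, lineage: dict[str, list[str]]) -> set[str]:
    """Ancestors with no recorded parents are treated as the original data sources."""
    return {a for a in upstream_sources(artefact, lineage) if a not in lineage}
```

Here `raw_inputs("diagnosis_model_v2", LINEAGE)` yields the two hospital scan sets and the radiologist annotations, while `upstream_sources` also includes the intermediate training set and label set.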

Explainability: making AI decision-making more understandable

Explainability ensures that AI judgements are understandable and backed by verifiable, traceable evidence. This is particularly important in high-stakes sectors such as healthcare and finance, where understanding the logic behind AI choices can significantly affect lives and investments. By keeping checkpoints on hard drives, developers can examine different phases of model development and evaluate how changes to data inputs or configurations affect results. This approach makes AI systems more transparent and understandable, which builds confidence and encourages wider adoption.
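As a rough illustration of comparing checkpoints, the sketch below assumes each checkpoint stores its configuration and evaluation metrics as a flat dictionary. That layout is hypothetical (real checkpoint formats vary by framework), as are the example keys and values; the function simply reports which values changed between two checkpoints.

```python
def diff_checkpoints(old: dict, new: dict) -> dict[str, tuple]:
    """Return every key whose value differs between two checkpoints, as (old, new).

    A key missing from one checkpoint shows up as None on that side.
    """
    keys = old.keys() | new.keys()
    return {k: (old.get(k), new.get(k)) for k in sorted(keys) if old.get(k) != new.get(k)}


# Hypothetical checkpoint metadata from two points in training.
ckpt_100 = {"learning_rate": 1e-3, "batch_size": 64, "dataset": "train_set_v1", "val_accuracy": 0.91}
ckpt_250 = {"learning_rate": 5e-4, "batch_size": 64, "dataset": "train_set_v2", "val_accuracy": 0.93}

changes = diff_checkpoints(ckpt_100, ckpt_250)
```

Seeing that the dataset and learning rate both changed alongside the accuracy tells a developer which inputs to investigate when explaining the difference in results.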

Accountability: making AI processes auditable

In AI, accountability ensures that stakeholders can review and validate models. Through checkpointing and data lineage, hard drives provide an audit trail that records AI progress from data intake to output, enabling companies to examine the factors that influence AI-generated decisions. This audit trail assures customers that AI technologies rest on dependable, repeatable procedures and helps organisations meet regulatory requirements. By identifying the precise checkpoints at which decisions were made, accountability allows AI systems to be held responsible for their actions.
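One way to identify the checkpoint behind a given decision is to keep a time-ordered log of checkpoint deployments and binary-search it. The `DEPLOYMENTS` log, its checkpoint IDs, and the `checkpoint_for_decision` helper are hypothetical, and the sketch assumes the log is sorted by deployment time.

```python
import bisect
from datetime import datetime

# Hypothetical audit trail: (time the checkpoint went live, checkpoint ID), sorted by time.
DEPLOYMENTS = [
    (datetime(2024, 3, 1, 9, 0), "ckpt-0100"),
    (datetime(2024, 3, 8, 9, 0), "ckpt-0250"),
    (datetime(2024, 3, 15, 9, 0), "ckpt-0400"),
]


def checkpoint_for_decision(decided_at: datetime, deployments=DEPLOYMENTS) -> str:
    """Return the ID of the checkpoint that was serving when a decision was made."""
    times = [t for t, _ in deployments]
    # bisect_right counts a decision made at the exact deployment time
    # as belonging to the newly deployed checkpoint.
    i = bisect.bisect_right(times, decided_at) - 1
    if i < 0:
        raise LookupError("decision predates the first recorded checkpoint")
    return deployments[i][1]
```

A decision logged on 10 March, for example, maps to `ckpt-0250`, which an auditor could then load and inspect.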

Security: protecting data integrity

Security supports trustworthy AI by guarding data against manipulation and unauthorised access. AI models depend on authentic, unmodified data, which secure storage measures such as encryption and integrity checks help preserve. Hard drives contribute to security by keeping data in a stable, controlled environment, helping businesses prevent tampering and comply with strict security rules. By protecting data at every stage, businesses can maintain confidence in the integrity of AI operations.
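Integrity checks can be illustrated with an HMAC from Python's standard `hmac` and `hashlib` modules. Note that an HMAC detects tampering but does not encrypt anything; encryption would be a separate step. The `sign` and `verify` helper names are illustrative, and secure key storage and rotation are assumed to be handled elsewhere.

```python
import hashlib
import hmac


def sign(data: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag for a data record."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def verify(data: bytes, key: bytes, expected_tag: str) -> bool:
    """Check a record against its stored tag using a constant-time comparison."""
    return hmac.compare_digest(sign(data, key), expected_tag)
```

Because the tag depends on a secret key, an attacker who alters the stored data cannot simply recompute a matching tag, unlike a plain hash.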

Mechanisms that enable trustworthy AI

Achieving these aspects of trustworthy AI requires robust mechanisms that promote data integrity, security, and accountability. These techniques, ranging from hashing and mass storage systems to checkpointing and governance policies, ensure that AI systems meet the exacting requirements of trustworthy decision-making.

Checkpointing supports many components

Checkpointing is the practice of saving an AI model’s state at predetermined, short intervals during training. Training AI models on massive datasets is an iterative process that can take anywhere from minutes to days.

Checkpoints are snapshots of the model’s current state at various stages of training, including its data, parameters, and settings. Saved to storage devices every minute or every few minutes, these snapshots let developers track the model’s development and avoid losing important work to unexpected disruptions.
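The save-and-resume cycle can be sketched in Python as below. The JSON file format, the `train` loop, and its placeholder weight update are hypothetical stand-ins for a real framework's checkpoint API. The sketch shows two common precautions: forcing the bytes to the storage device, and swapping the new checkpoint in with a rename so that a crash mid-write is much less likely to leave a half-written file.

```python
import json
import os
import tempfile


def save_checkpoint(path: str, state: dict) -> None:
    """Write state to a temporary file, then swap it into place."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(state, f)
            f.flush()
            os.fsync(f.fileno())  # push the bytes to the storage device
        os.replace(tmp_path, path)  # atomic rename on POSIX filesystems
    except BaseException:
        os.remove(tmp_path)  # don't leave stray temporary files behind
        raise


def load_checkpoint(path: str) -> dict:
    """Return the last saved state, or a fresh state if no checkpoint exists yet."""
    if not os.path.exists(path):
        return {"step": 0, "weights": [0.0, 0.0]}
    with open(path) as f:
        return json.load(f)


def train(path: str, total_steps: int, save_every: int = 10) -> dict:
    """Run (or resume) a toy training loop, checkpointing every `save_every` steps."""
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        # Placeholder update standing in for a real optimiser step.
        state["weights"] = [w + 0.01 for w in state["weights"]]
        state["step"] = step + 1
        if state["step"] % save_every == 0:
            save_checkpoint(path, state)
    return state
```

If the process stops after step 25, calling `train` again resumes from the checkpoint saved at step 20 rather than from step 0.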

Checkpoints support reliable AI by serving several vital functions:

- Protection against disruptions. Checkpoints safeguard training jobs against crashes, power outages, and system failures, letting models resume from the most recently saved state rather than starting from scratch.

- Model improvement and optimisation. By storing checkpoints, developers can examine previous states, adjust model parameters, and improve performance over time.

- Legal compliance and intellectual property protection. Checkpoints give businesses a visible record that helps protect proprietary processes and comply with legal frameworks.

- Transparency and trust. By recording model states, checkpointing supports explainability, making AI decisions traceable and understandable.

Governance policies: data management responsibility

Governance policies establish the framework that governs how data is managed, protected, and used throughout the AI lifecycle. By ensuring that AI systems follow internal and regulatory standards, these rules create an environment in which data is handled safely and ethically. To promote security and accountability in AI processes, governance policies define access controls, data retention plans, and compliance protocols. By establishing these criteria, organisations can ensure that AI systems are transparent, dependable, and grounded in good data management practices.
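Access controls and retention plans can be expressed as data and checked in code. In the hypothetical Python sketch below, each dataset carries a policy listing the roles allowed to use it and how long it may be kept, and access is denied by default for any dataset without a policy. The dataset names, roles, and retention periods are invented for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class DatasetPolicy:
    allowed_roles: frozenset[str]
    retention: timedelta


# Hypothetical policies for illustration only.
POLICIES = {
    "patient_scans": DatasetPolicy(frozenset({"clinical_ml"}), timedelta(days=365 * 7)),
    "chat_logs": DatasetPolicy(frozenset({"support_ml", "audit"}), timedelta(days=90)),
}


def can_access(role: str, dataset: str) -> bool:
    """Deny by default: only roles named in an existing policy are allowed."""
    policy = POLICIES.get(dataset)
    return policy is not None and role in policy.allowed_roles


def is_expired(dataset: str, created: date, today: date) -> bool:
    """True once a record is older than its dataset's retention period.

    Raises KeyError for datasets that have no policy.
    """
    return today - created > POLICIES[dataset].retention
```

Keeping policies in one declarative table makes them easy to review and audit alongside the data they govern.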

Secure data lineage with hashing

Hashing is essential to preserving data lineage because it gives each piece of data a practically unique digital fingerprint. Organisations can use these fingerprints to confirm that data has not been changed or tampered with during the AI process. By hashing datasets and checkpoints, AI systems can verify that data inputs are consistent and uncorrupted, which strengthens security and promotes transparency. Storing these hash records on hard drives lets businesses confirm the validity of their data and maintain confidence in AI processes.
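A minimal fingerprinting sketch using SHA-256 from Python's `hashlib`: it builds a manifest of file digests and later reports which files no longer match, including files that have gone missing. The `fingerprint`, `build_manifest`, and `changed_files` helpers are illustrative names, and storing the manifest somewhere tamper-resistant is assumed rather than shown.

```python
import hashlib
from pathlib import Path


def fingerprint(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    h = hashlib.sha256()
    with Path(path).open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(paths: list[str]) -> dict[str, str]:
    """Record a fingerprint for each dataset or checkpoint file."""
    return {str(p): fingerprint(p) for p in paths}


def changed_files(manifest: dict[str, str]) -> list[str]:
    """List files that are missing or whose contents no longer match the manifest."""
    changed = []
    for p, digest in manifest.items():
        if not Path(p).exists() or fingerprint(p) != digest:
            changed.append(p)
    return changed
```

Reading in chunks keeps memory use flat even for very large datasets or checkpoints.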