OpenAI Responses API guide

The OpenAI platform is being built to help developers and businesses create reliable, useful agents.

OpenAI is launching the first building blocks today to help developers and businesses build reliable, useful agents. Over the past year, OpenAI has added new model capabilities, including advanced reasoning, multimodal interactions, and new safety techniques, that let its models handle the complex, multi-step tasks agents require. However, customers report that turning these capabilities into production-ready agents can be difficult, often requiring extensive prompt iteration and custom orchestration logic without sufficient visibility or built-in support.

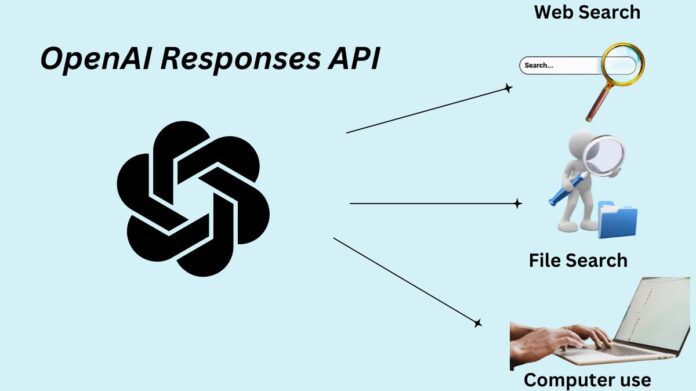

To address these challenges, OpenAI is introducing a new set of APIs and tools designed specifically to simplify building agentic applications:

- The new Responses API, which combines the simplicity of the Chat Completions API with the tool-use capabilities of the Assistants API for building agents.

- Built-in tools, including web search, file search, and computer use.

- The new Agents SDK, for orchestrating single-agent and multi-agent workflows.

- Integrated observability tools for tracing and inspecting the execution of agent workflows.

These new tools streamline core agent logic, orchestration, and interactions, making it significantly easier for developers to get started building agents. Over the coming weeks and months, OpenAI plans to release additional tools and capabilities to make building agentic applications on its platform even easier and faster.

Introducing the Responses API

The Responses API is OpenAI’s new API primitive for building agents with its built-in tools. It combines the simplicity of Chat Completions with the tool-use capabilities of the Assistants API, and as model capabilities continue to evolve, it is intended to give developers building agentic applications a more flexible foundation. With a single Responses API call, developers can tackle increasingly complex tasks using multiple tools and model turns.
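
To make the "single call, multiple tools" idea concrete, here is a minimal Python sketch that prepares (but does not send) a raw HTTP request to the Responses endpoint, assuming the REST URL `https://api.openai.com/v1/responses` with bearer-token authentication. The `build_responses_request` helper and the `get_order_status` function tool are hypothetical; in practice the official SDKs build this request for you.

```python
import json
import os
import urllib.request

RESPONSES_URL = "https://api.openai.com/v1/responses"


def build_responses_request(api_key: str, model: str, user_input: str,
                            tools: list) -> urllib.request.Request:
    """Prepare (but do not send) a POST to the Responses endpoint."""
    body = {"model": model, "input": user_input, "tools": tools}
    return urllib.request.Request(
        RESPONSES_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# One request that offers the model two tools: the built-in web search tool
# and a developer-defined function (get_order_status is hypothetical).
tools = [
    {"type": "web_search_preview"},
    {
        "type": "function",
        "name": "get_order_status",
        "description": "Look up the status of a customer order.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
]

req = build_responses_request(
    os.environ.get("OPENAI_API_KEY", "sk-placeholder"),
    "gpt-4o",
    "Where is order 1234, and is there any shipping news today?",
    tools,
)
# urllib.request.urlopen(req) would send it; omitted so the sketch runs offline.
```

The model decides within that one call whether to search the web, call the function, or answer directly.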

At launch, the Responses API supports new built-in tools including web search, file search, and computer use. These tools are designed to work together to connect models to the real world, making them more useful for completing tasks. The API also brings several usability improvements, including a unified item-based design, simpler polymorphism, intuitive streaming events, and SDK helpers like response.output_text for easily accessing the model’s text output.
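
With the item-based design, a response's `output` is a list of typed items (messages, tool calls, and so on). The sketch below approximates what a helper like `response.output_text` does, assuming the JSON shape in which assistant text lives in `output_text` content parts of `message` items; the SDK's actual implementation may differ in details, and the sample response is made up.

```python
def output_text(response: dict) -> str:
    """Concatenate every output_text part from the message items in a response."""
    parts = []
    for item in response.get("output", []):
        if item.get("type") != "message":
            continue  # skip tool-call items such as web_search_call
        for content in item.get("content", []):
            if content.get("type") == "output_text":
                parts.append(content["text"])
    return "".join(parts)


sample = {
    "output": [
        {"type": "web_search_call", "id": "ws_1", "status": "completed"},
        {
            "type": "message",
            "role": "assistant",
            "content": [
                {"type": "output_text", "text": "Hello, ", "annotations": []},
                {"type": "output_text", "text": "world.", "annotations": []},
            ],
        },
    ]
}
print(output_text(sample))  # Hello, world.
```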

With the Responses API, developers can integrate OpenAI models and built-in tools into their products without the complexity of stitching together multiple APIs or external vendors. The API also makes it easy to store data on OpenAI, so developers can evaluate agent performance using features such as tracing and evaluations. As a reminder, OpenAI does not train its models on business data by default, even when that data is stored on OpenAI. The Responses API is available to all developers today and carries no separate charge: tokens and tools are billed at the standard rates listed on OpenAI’s pricing page.

What this implies for current APIs

Chat Completions API: Chat Completions remains OpenAI’s most widely used API, and OpenAI is committed to supporting it with new models and capabilities. Developers who don’t need built-in tools can continue using Chat Completions with confidence. OpenAI will keep releasing new models to Chat Completions whenever their capabilities don’t depend on built-in tools or multiple model calls. For new integrations, however, it recommends starting with the Responses API, since it is a superset of Chat Completions with the same strong performance.
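
Because the Responses API is a superset of Chat Completions, migrating a basic request is mostly a matter of renaming fields. This sketch assumes three mappings: `messages` becomes `input`, function tools are flattened (Chat Completions nests them under a `function` key), and `max_completion_tokens`/`max_tokens` becomes `max_output_tokens`. It only handles plain text messages and function tools; tool-result messages, streaming, and other parameters are out of scope, and `get_weather` is a hypothetical tool.

```python
def chat_to_responses(chat_request: dict) -> dict:
    """Map a simple Chat Completions request body onto a Responses request body."""
    body = {"model": chat_request["model"], "input": list(chat_request["messages"])}

    tools = []
    for tool in chat_request.get("tools", []):
        if tool.get("type") == "function":
            # Chat Completions nests the definition under "function";
            # Responses function tools carry name/description/parameters directly.
            tools.append({"type": "function", **tool["function"]})
        else:
            tools.append(tool)
    if tools:
        body["tools"] = tools

    # The output-length cap has a different name in the Responses API.
    limit = chat_request.get("max_completion_tokens", chat_request.get("max_tokens"))
    if limit is not None:
        body["max_output_tokens"] = limit
    return body


chat_request = {
    "model": "gpt-4o",
    "messages": [
        {"role": "system", "content": "You are a concise assistant."},
        {"role": "user", "content": "What's the weather in Paris?"},
    ],
    "tools": [{
        "type": "function",
        "function": {
            "name": "get_weather",  # hypothetical tool
            "description": "Get current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }],
    "max_completion_tokens": 200,
}
print(chat_to_responses(chat_request))
```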

Assistants API: Based on developer feedback from the Assistants API beta, OpenAI built key improvements into the Responses API, making it more flexible, faster, and easier to use. OpenAI is working toward full feature parity between the Assistants API and the Responses API, including support for Assistant-like and Thread-like objects and the Code Interpreter tool.

Once parity is reached, OpenAI will formally announce the deprecation of the Assistants API, with a target sunset date in mid-2026. At that point, it will provide a clear migration path from the Assistants API to the Responses API so developers can move their applications and preserve all of their data. Until the deprecation is formally announced, OpenAI will continue adding new models to the Assistants API. The Responses API represents the future direction for building agents on OpenAI.

Introducing the Responses API’s built-in tools

OpenAI Web Search

Developers can now get fast, up-to-date answers from the web with clear, relevant citations. In the Responses API, web search is available as a tool when using gpt-4o and gpt-4o-mini, and it can be combined with other tools or function calls.

```javascript
import OpenAI from "openai";

const openai = new OpenAI(); // reads OPENAI_API_KEY from the environment

const response = await openai.responses.create({
  model: "gpt-4o",
  tools: [{ type: "web_search_preview" }],
  input: "What was a positive news story that happened today?",
});

console.log(response.output_text);
```

In early testing, developers have used web search to build applications for a range of use cases, such as research agents, vacation booking agents, and shopping assistants: any application that needs up-to-date information from the web.

Web search in the API is powered by the same model used for ChatGPT search. On SimpleQA, a benchmark that evaluates how well LLMs answer short, factual questions, GPT‑4o search preview and GPT‑4o mini search preview score 90% and 88%, respectively.

Responses generated with web search in the API include links to sources such as news articles and blog posts, so users can click through to learn more. These clear, inline citations give users a new way to engage with information and help content owners reach a wider audience.
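
Inline citations arrive as annotations on the response text. A minimal sketch for collecting them, assuming each `output_text` part carries `url_citation` annotations with `url`, `title`, `start_index`, and `end_index` fields, where the indices are character offsets into that part's text; the sample response and URL are made up.

```python
def extract_citations(response: dict) -> list:
    """Collect url, title, and cited text for every url_citation annotation."""
    citations = []
    for item in response.get("output", []):
        if item.get("type") != "message":
            continue
        for content in item.get("content", []):
            if content.get("type") != "output_text":
                continue
            text = content["text"]
            for ann in content.get("annotations", []):
                if ann.get("type") == "url_citation":
                    citations.append({
                        "url": ann["url"],
                        "title": ann.get("title", ""),
                        # assumed: offsets index into this content part's text
                        "cited_text": text[ann["start_index"]:ann["end_index"]],
                    })
    return citations


sample = {"output": [{
    "type": "message",
    "role": "assistant",
    "content": [{
        "type": "output_text",
        "text": "A local library reopened today.",
        "annotations": [{
            "type": "url_citation",
            "url": "https://example.com/library-news",
            "title": "Library reopens",
            "start_index": 0,
            "end_index": 24,
        }],
    }],
}]}
for c in extract_citations(sample):
    print(f'{c["cited_text"]} -> {c["url"]}')
```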

Any publisher or website has the option to show up in web search within the API.

OpenAI is also giving developers direct access to its fine-tuned search models in the Chat Completions API through gpt-4o-search-preview and gpt-4o-mini-search-preview. The web search tool is available in preview to all developers in the Responses API. Pricing for GPT-4o search and 4o-mini search starts at $30 and $25 per thousand searches, respectively.

OpenAI File Search

Developers can now use the improved file search tool to quickly retrieve relevant information from large volumes of documents. It supports multiple file types, query optimisation, metadata filtering, and custom reranking, and returns fast, accurate search results. Integrating it with the Responses API takes only a few lines of code.

```javascript
import OpenAI from "openai";

const openai = new OpenAI();

// file1, file2 and file3 are assumed to be files already uploaded,
// e.g. with openai.files.create({ file, purpose: "assistants" }).
const productDocs = await openai.vectorStores.create({
  name: "Product Documentation",
  file_ids: [file1.id, file2.id, file3.id],
});

const response = await openai.responses.create({
  model: "gpt-4o-mini",
  tools: [{
    type: "file_search",
    vector_store_ids: [productDocs.id],
  }],
  input: "What is deep research by OpenAI?",
});

console.log(response.output_text);
```

Real-world uses include a legal assistant quickly looking up past cases for a qualified professional, a customer service agent retrieving answers to frequently asked questions, and a coding agent querying technical documentation.
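
Metadata filtering narrows a search to files whose attributes match a condition. The sketch below shows a `file_search` tool definition with a compound filter and, to make the semantics tangible, a small local evaluator for the same filter shapes. The comparison types (`eq`, `ne`, `gt`, `gte`, `lt`, `lte`) and compound types (`and`, `or`) reflect our understanding of the filter format; treating a missing attribute as a non-match is a simplifying assumption, and the evaluator is illustrative only, not how OpenAI executes filters. `vs_123` and the attribute names are hypothetical.

```python
import operator

# Comparison operators assumed to be available in file_search metadata filters.
COMPARISONS = {
    "eq": operator.eq, "ne": operator.ne,
    "gt": operator.gt, "gte": operator.ge,
    "lt": operator.lt, "lte": operator.le,
}


def matches(filt: dict, attributes: dict) -> bool:
    """Local re-implementation of the filter semantics, for illustration only."""
    kind = filt["type"]
    if kind == "and":
        return all(matches(f, attributes) for f in filt["filters"])
    if kind == "or":
        return any(matches(f, attributes) for f in filt["filters"])
    if filt["key"] not in attributes:
        return False  # simplifying assumption: missing attribute never matches
    return COMPARISONS[kind](attributes[filt["key"]], filt["value"])


# Restrict the search to recent product documents.
recent_docs = {"type": "and", "filters": [
    {"type": "eq", "key": "category", "value": "product"},
    {"type": "gte", "key": "year", "value": 2024},
]}
file_search_tool = {
    "type": "file_search",
    "vector_store_ids": ["vs_123"],
    "max_num_results": 5,
    "filters": recent_docs,
}

files = [
    {"name": "guide.pdf", "attributes": {"category": "product", "year": 2025}},
    {"name": "old.pdf", "attributes": {"category": "product", "year": 2022}},
    {"name": "memo.txt", "attributes": {"category": "internal", "year": 2025}},
]
print([f["name"] for f in files if matches(recent_docs, f["attributes"])])  # ['guide.pdf']
```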

The file search tool is available to all developers in the Responses API. Usage is billed at $2.50 per thousand queries, and file storage at $0.10/GB/day, with the first GB free. The tool also remains available in the Assistants API. Finally, OpenAI has added a new search endpoint to Vector Store API objects so developers can query their data directly for use in other applications and APIs.
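
As a rough worked example of the pricing above, the helper below estimates file search charges from the listed rates. It assumes the free first GB applies to total storage on each billed day and ignores model token costs; the pricing page is the authority on the actual billing rules.

```python
STORAGE_USD_PER_GB_DAY = 0.10
FREE_STORAGE_GB = 1.0
QUERY_USD_PER_1000 = 2.50


def file_search_cost(storage_gb: float, days: int, queries: int) -> float:
    """Estimate file search charges in USD (storage + queries, no token costs)."""
    billable_gb = max(0.0, storage_gb - FREE_STORAGE_GB)  # first GB is free
    storage = billable_gb * STORAGE_USD_PER_GB_DAY * days
    query = queries / 1000 * QUERY_USD_PER_1000
    return round(storage + query, 2)


# 5 GB stored for 30 days plus 20,000 queries:
# (5 - 1) GB x $0.10 x 30 days = $12, and 20 x $2.50 = $50.
print(file_search_cost(storage_gb=5, days=30, queries=20_000))  # 62.0
```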

OpenAI Computer Use

Developers can now use the computer use tool in the Responses API to build agents that perform tasks on a computer. It is powered by the same Computer-Using Agent (CUA) model that enables Operator. This research preview model set new state-of-the-art results: a 38.1% success rate on OSWorld for full computer-use tasks, and 58.1% on WebArena and 87% on WebVoyager for web-based interactions.

The built-in computer use tool captures the mouse and keyboard actions generated by the model, which developers can translate into executable commands in their own environments to automate computer-use tasks.

```javascript
import OpenAI from "openai";

const openai = new OpenAI();

const response = await openai.responses.create({
  model: "computer-use-preview",
  tools: [{
    type: "computer_use_preview",
    display_width: 1024,
    display_height: 768,
    environment: "browser",
  }],
  truncation: "auto",
  input: "I'm looking for a new camera. Help me find the best one.",
});

// response.output is a list of items; computer_call items describe the next
// mouse or keyboard action for your own environment to execute.
console.log(JSON.stringify(response.output, null, 2));
```

Developers can use the computer use tool to automate browser-based workflows, such as quality assurance on web applications or data entry across legacy systems.
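
In practice the computer use tool runs as a loop: the model returns `computer_call` items whose `action` describes one mouse or keyboard step, your code performs it, and you reply with a `computer_call_output` item containing a fresh screenshot (typically in a follow-up request that passes `previous_response_id`). The sketch below handles a single step for a subset of action types (`click`, `type`, `keypress`, `scroll`, `wait`, `screenshot`); the `Driver` interface and the `RecordingDriver` stand-in are hypothetical placeholders for your own browser or VM automation.

```python
from typing import Protocol


class Driver(Protocol):
    """Hypothetical interface to your own browser/VM automation layer."""
    def click(self, x: int, y: int, button: str) -> None: ...
    def type_text(self, text: str) -> None: ...
    def press(self, keys: list) -> None: ...
    def scroll(self, x: int, y: int, dx: int, dy: int) -> None: ...
    def screenshot_png_b64(self) -> str: ...


def handle_computer_call(item: dict, driver: Driver) -> dict:
    """Execute one computer_call action and build the computer_call_output item."""
    action = item["action"]
    kind = action["type"]
    if kind == "click":
        driver.click(action["x"], action["y"], action.get("button", "left"))
    elif kind == "type":
        driver.type_text(action["text"])
    elif kind == "keypress":
        driver.press(action["keys"])
    elif kind == "scroll":
        driver.scroll(action["x"], action["y"], action["scroll_x"], action["scroll_y"])
    elif kind in ("screenshot", "wait"):
        pass  # nothing to execute; a fresh screenshot is returned below
    else:
        raise ValueError(f"unsupported action: {kind}")
    # The result goes back to the model as a screenshot of the new screen state.
    return {
        "type": "computer_call_output",
        "call_id": item["call_id"],
        "output": {
            "type": "computer_screenshot",
            "image_url": "data:image/png;base64," + driver.screenshot_png_b64(),
        },
    }


class RecordingDriver:
    """Stand-in driver that only logs actions, so the step can be tried offline."""
    def __init__(self) -> None:
        self.log = []
    def click(self, x, y, button): self.log.append(("click", x, y, button))
    def type_text(self, text): self.log.append(("type", text))
    def press(self, keys): self.log.append(("keypress", tuple(keys)))
    def scroll(self, x, y, dx, dy): self.log.append(("scroll", x, y, dx, dy))
    def screenshot_png_b64(self): return "AAAA"  # fake image data


driver = RecordingDriver()
call = {"type": "computer_call", "call_id": "call_1",
        "action": {"type": "click", "x": 120, "y": 300, "button": "left"}}
reply = handle_computer_call(call, driver)
print(driver.log, reply["call_id"])
```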

The computer use tool is available as a research preview in the Responses API to select developers in usage tiers 3-5. Usage is priced at $3 per 1M input tokens and $12 per 1M output tokens.
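
A quick cost estimate at the listed computer use rates. The token counts in the usage line are hypothetical; real runs vary with screenshot size, the number of steps, and how much context is carried between steps.

```python
INPUT_USD_PER_M = 3.00    # $3 per 1M input tokens
OUTPUT_USD_PER_M = 12.00  # $12 per 1M output tokens


def computer_use_cost(input_tokens: int, output_tokens: int) -> float:
    """USD cost of a computer use run at the listed per-token rates."""
    total = (input_tokens / 1_000_000 * INPUT_USD_PER_M
             + output_tokens / 1_000_000 * OUTPUT_USD_PER_M)
    return round(total, 6)


# Hypothetical run: 20 steps, about 2,000 input and 100 output tokens per step.
steps = 20
print(computer_use_cost(input_tokens=steps * 2_000, output_tokens=steps * 100))
```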

Agents SDK

Beyond building the core logic of agents and giving them tools to be useful, developers also need to orchestrate agentic workflows. OpenAI’s new open-source Agents SDK simplifies orchestrating multi-agent workflows and offers significant improvements over Swarm, the experimental SDK OpenAI released last year, which was widely adopted by the developer community and successfully deployed by numerous customers.

Improvements include:

- Agents: Easily configurable LLMs with clear instructions and built-in tools.

- Handoffs: Intelligently transfer control between agents.

- Guardrails: Configurable safety checks for input and output validation.

- Tracing & Observability: Visualise agent execution traces to debug and optimise performance.

```python
from agents import Agent, Runner, WebSearchTool, function_tool


@function_tool
def submit_refund_request(item_id: str, reason: str) -> str:
    """Submit a refund request for an item."""
    # Your refund logic goes here
    return "success"


support_agent = Agent(
    name="Support & Returns",
    instructions="You are a support agent who can submit refunds [...]",
    tools=[submit_refund_request],
)

shopping_agent = Agent(
    name="Shopping Assistant",
    instructions="You are a shopping assistant who can search the web [...]",
    tools=[WebSearchTool()],
)

# The triage agent answers nothing itself; it hands off to one of the agents above.
triage_agent = Agent(
    name="Triage Agent",
    instructions="Route the user to the correct agent.",
    handoffs=[shopping_agent, support_agent],
)

output = Runner.run_sync(
    starting_agent=triage_agent,
    input="What shoes might work best with my outfit so far?",
)
print(output.final_output)
```

Customer support automation, multi-step research, content creation, code review, and sales prospecting are just a few of the real-world uses for the Agents SDK.
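
The control flow behind handoffs and guardrails can also be illustrated without the SDK. The toy model below is not the Agents SDK API (the SDK's own `Agent`, `Runner`, and guardrail classes work differently); it is a hypothetical stdlib sketch in which a guardrail check can stop a run up front and a triage agent hands the conversation to the agent that produces the final answer. Simple keyword routing stands in for the model-driven routing the SDK performs.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


class GuardrailTripped(Exception):
    """Raised when an input check rejects the request."""


@dataclass
class ToyAgent:
    name: str
    handle: Callable[[str], str]  # produces the final answer
    route: Optional[Callable[[str], Optional["ToyAgent"]]] = None  # picks a handoff target
    input_checks: list = field(default_factory=list)  # each: str -> bool (True = reject)


def run(agent: ToyAgent, text: str, max_handoffs: int = 3) -> tuple:
    """Run the starting agent's guardrails, follow handoffs, return (agent name, answer)."""
    for check in agent.input_checks:
        if check(text):
            raise GuardrailTripped(f"{agent.name} rejected the input")
    for _ in range(max_handoffs + 1):
        target = agent.route(text) if agent.route else None
        if target is None:
            return agent.name, agent.handle(text)
        agent = target  # handoff: control passes to the chosen agent
    raise RuntimeError("too many handoffs")


support = ToyAgent("Support & Returns", lambda t: "Refund submitted.")
shopping = ToyAgent("Shopping Assistant", lambda t: "Here are some shoe ideas.")
triage = ToyAgent(
    "Triage Agent",
    handle=lambda t: "How can I help?",
    route=lambda t: support if "refund" in t.lower() else shopping,
    input_checks=[lambda t: "password" in t.lower()],  # toy guardrail
)

print(run(triage, "I want a refund for these boots"))  # ('Support & Returns', 'Refund submitted.')
```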

The Agents SDK works with the Responses API and the Chat Completions API, and also with models from other providers, as long as they expose a Chat Completions-style API endpoint. Developers can integrate it into their Python codebases right away, with Node.js support coming soon.

While designing the Agents SDK, OpenAI’s team drew inspiration from the excellent work of community projects such as Pydantic, Griffe, and MkDocs.

Next steps: creating the agent platform

With today’s releases, OpenAI is introducing the first building blocks to help developers and businesses more easily build, deploy, and scale reliable, high-performing AI agents. Its goal is to give developers a seamless platform experience for building agents that can help with a wide range of tasks in any industry.