In its debut on the MLPerf industry benchmarks, the NVIDIA GH200 Grace Hopper Superchip ran all data center inference tests, extending the leading performance of NVIDIA H100 Tensor Core GPUs.

The overall results demonstrated the NVIDIA AI platform’s strong performance and versatility from the cloud to the network edge.

In a separate announcement, NVIDIA described inference software that it says will give users substantial gains in performance, energy efficiency, and total cost of ownership.

GH200 Superchips Excel in MLPerf

The GH200 combines a Hopper GPU and a Grace CPU in a single superchip. The combination provides more memory and bandwidth, along with the ability to shift power automatically between the CPU and GPU to optimize performance.

Separately, NVIDIA HGX H100 systems with eight H100 GPUs delivered the highest throughput on every MLPerf Inference test in this round.

Grace Hopper Superchips and H100 GPUs led across all of MLPerf’s data center tests, including inference for computer vision, speech recognition, and medical imaging, as well as the more demanding use cases of recommendation systems and the large language models (LLMs) used in generative AI.

Overall, the results continue NVIDIA’s record of leading performance in AI training and inference in every round since the MLPerf benchmarks were introduced in 2018.

The most recent round of MLPerf added an updated test of recommendation systems and the first inference benchmark on GPT-J, an LLM with six billion parameters. The parameter count is a rough measure of an AI model’s size.
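To make the link between parameter count and model size concrete, here is a minimal sketch that estimates the memory needed for a model’s weights alone. The bytes-per-parameter values are the standard storage sizes for each numeric format. The function name and the rounded six-billion figure are illustrative assumptions, not anything published with the benchmark, and the estimate ignores activations, caches, and runtime overhead.

```python
# Rough weight-memory estimate from a parameter count (illustrative only).
# Assumption: every parameter is stored in a single numeric format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# "Six billion parameters", rounded as in the article (hypothetical constant name).
GPT_J_PARAMS = 6_000_000_000

for dtype in ("fp32", "fp16", "int8"):
    print(f"{dtype}: ~{weight_memory_gb(GPT_J_PARAMS, dtype):.0f} GB")
```

Under these assumptions the weights take roughly 24 GB, 12 GB, and 6 GB in the three formats, which is why a model’s parameter count is a useful first-order guide to the hardware it needs.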

TensorRT-LLM Boosts Inference

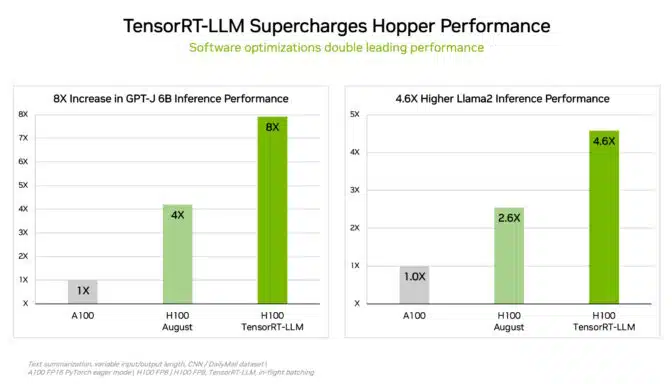

To handle complex workloads of every size, NVIDIA created TensorRT-LLM, generative AI software that optimizes inference. The open-source library, which was not ready in time for the August submission to MLPerf, lets customers more than double the inference performance of the H100 GPUs they have already purchased, at no additional cost.

According to NVIDIA’s internal tests, using TensorRT-LLM on H100 GPUs delivers a speedup of up to 8x compared with prior-generation GPUs running GPT-J 6B without the software.

The software grew out of NVIDIA’s work with leading companies, including Meta, AnyScale, Cohere, Deci, Grammarly, Mistral AI, MosaicML (now a part of Databricks), OctoML, Tabnine, and Together AI, to accelerate and optimize LLM inference.

MosaicML added the features it needed on top of TensorRT-LLM and integrated them into its existing serving stack. “It’s been a breeze,” said Naveen Rao, vice president of engineering at Databricks.

According to Rao, TensorRT-LLM is easy to use, feature-rich, and efficient. He said it delivers state-of-the-art performance for LLM serving on NVIDIA GPUs and allows the company to pass the cost savings on to its customers.

TensorRT-LLM is the most recent example of ongoing innovation on NVIDIA’s full-stack AI platform. Thanks to continual software advances, users get performance that grows over time at no additional cost and that adapts to a variety of AI workloads.

L4 Boosts Inference on Mainstream Servers

In the most recent MLPerf benchmarks, NVIDIA L4 GPUs ran the complete spectrum of workloads and performed well across the board.

For example, L4 GPUs running in compact, 72W PCIe accelerators delivered up to 6x the performance of CPUs rated for nearly 5x higher power consumption.
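The two ratios above can be combined into a rough performance-per-watt comparison. The sketch below assumes the figures mean that the L4 reaches up to 6 times the CPU’s throughput while the CPU is rated for about 5 times the L4’s 72W. The function and variable names are illustrative. Both inputs are "up to" or "roughly" values based on rated power rather than measured energy, so the result is an upper-bound estimate, not a benchmark result.

```python
def perf_per_watt_ratio(perf_ratio: float, power_ratio: float) -> float:
    """Relative performance-per-watt of device A over device B.

    perf_ratio:  A's throughput divided by B's throughput.
    power_ratio: B's rated power divided by A's rated power.
    """
    return perf_ratio * power_ratio


L4_POWER_W = 72        # rated power of the L4 accelerator, from the article
PERF_RATIO = 6.0       # "up to 6x" the CPU's performance
POWER_RATIO = 5.0      # CPU rated for roughly 5x the L4's power (rounded)

implied_cpu_power_w = L4_POWER_W * POWER_RATIO
print(f"Implied CPU rated power: ~{implied_cpu_power_w:.0f} W")
print(f"Perf-per-watt advantage: up to ~{perf_per_watt_ratio(PERF_RATIO, POWER_RATIO):.0f}x")
```

Under these assumptions the comparison implies a CPU rated at about 360 W and a performance-per-watt advantage of up to roughly 30x for the L4.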

Additionally, L4 GPUs feature dedicated media engines that, combined with CUDA software, accelerated computer vision by up to 120x in NVIDIA’s tests.

L4 GPUs are available from Google Cloud and many system builders, serving customers in fields ranging from consumer internet services to drug discovery.

Gains in Performance at the Edge

Separately, NVIDIA used a new model compression technique to demonstrate a speedup of up to 4.7x when running the BERT LLM on an L4 GPU. The result was in MLPerf’s so-called “open division,” a category for showcasing new capabilities.

The method is anticipated to be applicable to all AI workloads. It may be particularly useful when using models on edge devices that have size and power limitations.

In another example of the company’s leadership in edge computing, the NVIDIA Jetson Orin system-on-module showed performance gains of up to 84% over the previous round in object detection, a computer vision use case common in edge AI and robotics.

The Jetson Orin gains came from software that takes advantage of the latest versions of the chip’s cores, such as a programmable vision accelerator, an NVIDIA Ampere architecture GPU, and a dedicated deep learning accelerator.

Versatile Performance and a Broad Ecosystem

The MLPerf benchmarks are transparent and objective, so users can rely on the results to make informed purchasing decisions. Because the benchmarks span a broad range of use cases and scenarios, users can also be confident of getting performance that is both dependable and flexible to deploy.
