SLMs

Azure is pleased to introduce Phi-3, a family of open AI models developed by Microsoft. Phi-3 models outperform models of the same size and the next size up across a variety of language, reasoning, coding, and math benchmarks, making them the most capable and cost-effective small language models (SLMs) available. With this release, customers can choose from a wider range of high-quality models, giving them more practical options for building generative AI applications.

Phi-3

- Phi-3-mini, a 3.8B-parameter language model, is now available on Microsoft Azure AI Studio, Hugging Face, and Ollama.

- Phi-3-mini comes in two context-length variants: 4K and 128K tokens. It is the first model in its class to support a context window of up to 128K tokens, with little impact on quality.

- It is instruction-tuned, meaning it has been trained to follow the kinds of instructions that reflect how people normally communicate. As a result, the model is ready to use out of the box.

- It is available on Azure AI, where developers can use the deploy-eval-finetune toolchain, and on Ollama, where developers can run it locally on their own machines.

- It has been optimized for ONNX Runtime, with support for Windows DirectML and cross-platform support across CPU, graphics processing unit (GPU), and mobile hardware.

- It is also available as an NVIDIA NIM microservice with a standard API interface that can be deployed anywhere, and it has been optimized for NVIDIA GPUs.
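Running Phi-3-mini locally with Ollama usually means sending requests to Ollama's local HTTP server. The sketch below assumes Ollama is running on its default port (11434) and that the model has been pulled under the tag `phi3` (for example with `ollama pull phi3`); the helper names `build_phi3_chat_request` and `ask_phi3` are hypothetical. It uses only the Python standard library and sends a single non-streaming request to Ollama's `/api/chat` endpoint.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address and port.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_phi3_chat_request(prompt: str, model: str = "phi3") -> urllib.request.Request:
    """Build (but do not send) a non-streaming chat request for a local Ollama server.

    `model` is assumed to be the tag under which Phi-3-mini was pulled.
    """
    payload = {
        "model": model,
        # A plain user turn; Ollama applies the model's chat template itself.
        "messages": [{"role": "user", "content": prompt}],
        # Ask for one complete JSON response instead of a token stream.
        "stream": False,
    }
    return urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_phi3(prompt: str, timeout_s: float = 120.0) -> str:
    """Send the request to the local server and return the assistant's reply text."""
    req = build_phi3_chat_request(prompt)
    with urllib.request.urlopen(req, timeout=timeout_s) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # A non-streaming /api/chat response carries the reply under message.content.
    return body["message"]["content"]
```

With the server running, `ask_phi3("Summarize the benefits of small language models.")` returns the reply as a string. Because the model is instruction-tuned, a plain user message is enough; no hand-written prompt template is needed.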

Phi language model

In the coming weeks, additional models will be added to the Phi-3 family to give customers even more flexibility across the quality-cost curve. Phi-3-small (7B) and Phi-3-medium (14B) will soon be available in the Azure AI model catalog and other model gardens.

Microsoft continues to offer the best models across the quality-cost curve, and today’s Phi-3 release expands that selection with cutting-edge small models.

Revolutionary performance in a compact package

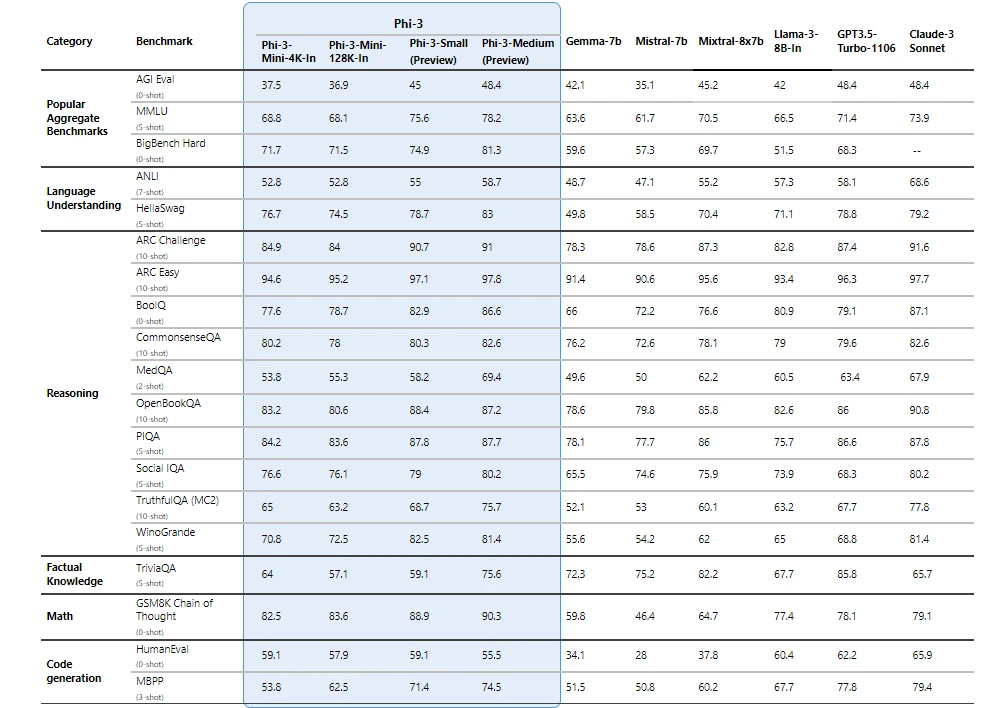

On key benchmarks, Phi-3 models significantly outperform language models of the same size and larger (see the benchmark chart above; higher is better). Phi-3-mini outperforms models twice its size, and Phi-3-small and Phi-3-medium outperform much larger models, including GPT-3.5T.

All reported numbers are produced with the same pipeline so that they are comparable. As a result, they may differ slightly from other published numbers because of differences in evaluation methodology. More details on the benchmarks are available in the Phi-3 technical paper.

Note: Phi-3 models do not perform as well on factual knowledge benchmarks (such as TriviaQA), because their smaller size limits their capacity to retain facts.

Microsoft Phi Model

Phi-3 models were developed in accordance with the Microsoft Responsible AI Standard, a company-wide set of requirements based on six principles: accountability, transparency, fairness, reliability and safety, privacy and security, and inclusiveness. To help ensure that Phi-3 models are responsibly developed, tested, and deployed in line with Microsoft’s standards and best practices, they undergo rigorous safety measurement and evaluation, red-teaming, sensitive use review, and adherence to security guidance.

Building on Microsoft’s earlier Phi work (“Textbooks Are All You Need”), Phi-3 models are likewise trained on high-quality data. They were further improved with extensive safety post-training, including reinforcement learning from human feedback (RLHF), automated testing and evaluations across dozens of harm categories, and manual red-teaming. The technical paper describes Microsoft’s approach to safety training and evaluation, and the model cards list recommended uses and limitations. See the model card collection for details.

Gaining access to new abilities

Microsoft’s experience shipping copilots and helping customers transform their businesses with generative AI on Azure AI has shown a growing need for models of different sizes across the quality-cost curve for different tasks. Small language models such as Phi-3 are especially well suited to:

- Resource-constrained environments, including on-device and offline inference scenarios.

- Latency-bound scenarios where fast response times are critical.

- Cost-constrained use cases, especially those involving simpler tasks.

- See the Microsoft Source Blog for more information on small language models.

Thanks to their smaller size, Phi-3 models can be used in compute-limited inference environments. Phi-3-mini in particular can run on-device, especially when further optimized with ONNX Runtime for cross-platform availability. The smaller size also makes fine-tuning and customization easier and more affordable, and the lower computational requirements make Phi-3 models a lower-cost option with much better latency. The longer context window lets them take in and reason over large amounts of text, such as documents, web pages, and code. Phi-3-mini’s strong reasoning and logic capabilities make it a good candidate for analytical tasks.
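To make the on-device argument concrete, a rough estimate of weight memory is parameter count times bytes per parameter. The sketch below uses the parameter counts stated in this article (3.8B, 7B, 14B) and counts weights only; it is an approximation that ignores the KV cache (which grows with context length), activations, quantization scale overhead, and runtime overhead. The names `PHI3_PARAMS_B`, `BYTES_PER_PARAM`, and `weight_memory_gb` are illustrative, not part of any Phi-3 tooling.

```python
# Parameter counts in billions, as stated in this article.
PHI3_PARAMS_B = {"Phi-3-mini": 3.8, "Phi-3-small": 7.0, "Phi-3-medium": 14.0}

# Approximate storage cost per parameter for common weight formats.
# int4 assumes 4 bits per weight and ignores per-group scale/zero-point overhead.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, fmt: str) -> float:
    """Approximate weight storage in decimal GB (1 GB = 1e9 bytes).

    billions of parameters * bytes per parameter = GB, so no extra scaling is needed.
    Weights only: KV cache, activations, and runtime overhead are not included.
    """
    return params_billion * BYTES_PER_PARAM[fmt]


def print_footprints() -> None:
    """Print fp16 and int4 weight footprints for each model size."""
    for name, params in PHI3_PARAMS_B.items():
        row = ", ".join(
            f"{fmt}: {weight_memory_gb(params, fmt):.1f} GB" for fmt in ("fp16", "int4")
        )
        print(f"{name} ({params}B params) -> {row}")


if __name__ == "__main__":
    print_footprints()
```

Under these assumptions, Phi-3-mini needs roughly 7.6 GB for fp16 weights and roughly 1.9 GB at 4 bits per weight, which helps explain why a quantized Phi-3-mini is a realistic candidate for on-device use, while the larger variants scale up proportionally.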

Customers are already building solutions with Phi-3. Agriculture is one industry where Phi-3 is proving its value, because internet access there is not always readily available. Farmers can use powerful small models like Phi-3, together with Microsoft copilot templates, whenever they need them, with the added benefit of lower operating costs, making AI technologies even more accessible.

As part of its ongoing collaboration with Microsoft, ITC, a leading Indian business conglomerate, is using Phi-3 in the copilot for Krishi Mitra, a farmer-facing app with more than a million users.

Phi Model

Phi models were first developed by Microsoft Research and have since been widely used; Phi-2 has been downloaded more than 2 million times. The Phi series has achieved impressive results through careful data curation and innovative scaling. Phi-1, a model for Python coding, was followed by Phi-1.5, which improved reasoning and understanding, and then by Phi-2, a 2.7-billion-parameter model that outperforms models up to 25 times its size in language understanding. Each iteration has challenged conventional scaling laws by relying on high-quality training data and knowledge-transfer techniques.