AWS announces the Pixtral Large 25.02 model as a serverless offering in Amazon Bedrock.

Pixtral Large in Amazon Bedrock

The Pixtral Large 25.02 model is now available in Amazon Bedrock as a fully managed, serverless offering. AWS is the first major cloud provider to offer Pixtral Large as a fully managed, serverless model.

Working with large foundation models (FMs) often requires considerable infrastructure planning, specialized expertise, and continuous optimization to handle their computational demands. When deploying these advanced models, many customers end up managing complex environments or making trade-offs between cost and performance.

Pixtral Large is Mistral AI’s first multimodal model, combining strong language understanding with advanced visual capabilities. Its 128K context window makes it well suited to complex visual reasoning tasks. The model performs strongly on key benchmarks such as MathVista, DocVQA, and VQAv2, which demonstrates its effectiveness for document analysis, chart interpretation, and natural image understanding.

Multilingual support is one of Pixtral Large’s strengths. The model supports many languages, including English, French, German, Spanish, Italian, Chinese, Japanese, Korean, Portuguese, Dutch, and Polish, which makes it suitable for global teams and applications. It can also read and write code in more than 80 programming languages, including Python, Java, C, C++, JavaScript, Bash, Swift, and Fortran.

Developers will appreciate the model’s agent-centric design, with built-in function calling and JSON output formatting that make it easier to integrate with existing systems. Its strong system prompt adherence improves reliability in Retrieval Augmented Generation (RAG) applications and large-context scenarios.
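As a rough illustration of function calling, the sketch below builds a tool definition in the shape the Amazon Bedrock Converse API accepts in its `toolConfig` parameter, and pulls any `toolUse` blocks out of a response. It assumes Pixtral Large's function calling is exposed through `toolConfig` for your account and Region. The tool name `get_invoice_total` and its schema are hypothetical and do not come from the announcement.

```python
def build_tool_config():
    """Return a Converse-style toolConfig describing one hypothetical tool."""
    return {
        "tools": [
            {
                "toolSpec": {
                    "name": "get_invoice_total",  # hypothetical tool name
                    "description": "Return the total amount of an invoice by ID.",
                    "inputSchema": {
                        # JSON Schema describing the arguments the model may pass.
                        "json": {
                            "type": "object",
                            "properties": {"invoice_id": {"type": "string"}},
                            "required": ["invoice_id"],
                        }
                    },
                }
            }
        ]
    }


def extract_tool_calls(response):
    """Collect the toolUse blocks from a Converse response dictionary."""
    content = response["output"]["message"]["content"]
    return [block["toolUse"] for block in content if "toolUse" in block]
```

The dictionary from `build_tool_config()` would be passed as the `toolConfig` argument of a Converse request. Each returned `toolUse` entry carries the tool `name` and the `input` arguments chosen by the model, which your own code is then responsible for executing.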

With Pixtral Large in Amazon Bedrock, you can now access this advanced model without provisioning or managing any infrastructure. The serverless approach lets you scale usage with actual demand, with no upfront commitments or capacity planning. You pay only for what you use, with no idle resources.

Cross-Region inference

Pixtral Large is now available in Amazon Bedrock across several AWS Regions through cross-Region inference.

With Amazon Bedrock cross-Region inference, you can access a single FM across several geographic Regions while maintaining high availability and low latency for global applications. For example, when a model is deployed in both US and European Regions, you can access it through Region-specific API endpoints using distinct prefixes: us.model-id for US Regions and eu.model-id for European Regions.

By confining data processing within defined geographic boundaries, this approach helps Amazon Bedrock meet regulatory requirements, while routing inference requests to the endpoint nearest the user reduces latency. The system automatically handles traffic routing and load balancing across these Regional deployments, providing seamless scalability and redundancy without requiring you to keep track of the individual Regions where the model is actually deployed.
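The prefix convention described above can be sketched as a small helper that turns a base model ID into a geography-prefixed ID. Choosing the prefix from the leading part of the AWS Region code (`us-` or `eu-`) is an assumption made for illustration, and `inference_profile_id` is a hypothetical helper name, not part of any AWS SDK.

```python
BASE_MODEL_ID = "mistral.pixtral-large-2502-v1:0"

# Assumed mapping from the start of an AWS Region code to the geography prefix.
GEO_PREFIXES = {"us-": "us", "eu-": "eu"}


def inference_profile_id(aws_region, model_id=BASE_MODEL_ID):
    """Return the geography-prefixed ID (us.model-id or eu.model-id) for a Region."""
    for region_prefix, geo in GEO_PREFIXES.items():
        if aws_region.startswith(region_prefix):
            return f"{geo}.{model_id}"
    raise ValueError(f"No geography prefix known for Region {aws_region!r}")
```

For example, `inference_profile_id("eu-west-3")` yields `eu.mistral.pixtral-large-2502-v1:0`. A Region outside the two geographies named in this article raises a `ValueError` instead of guessing a prefix.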

How it works

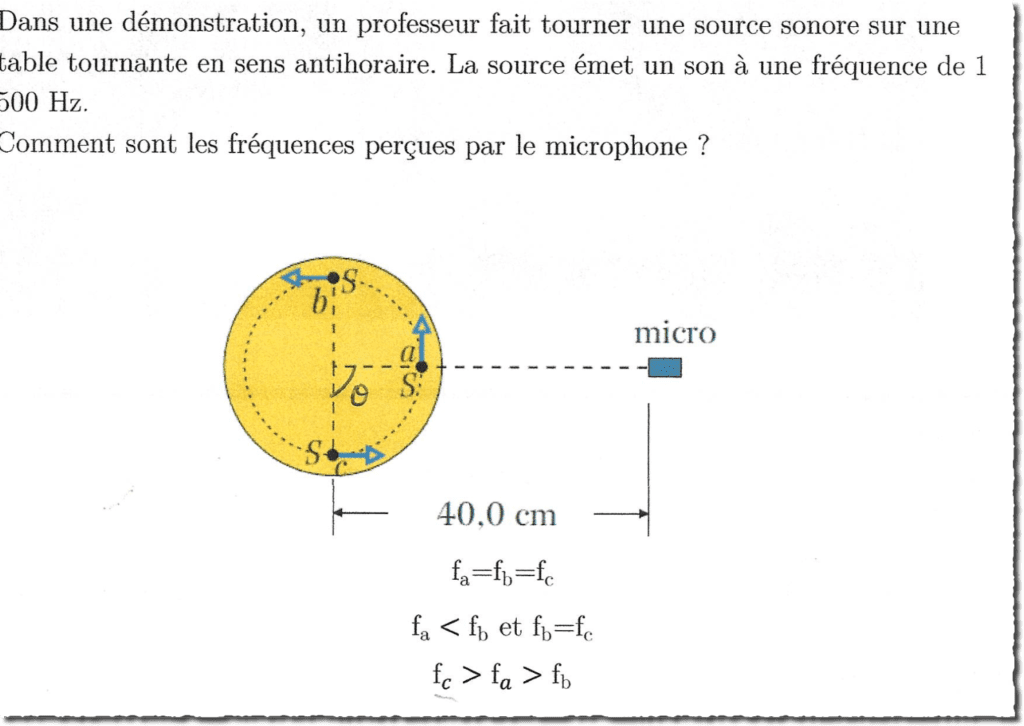

As a developer advocate, I am always exploring how the newest capabilities can solve practical problems. I was recently asked for help with physics exam preparation, which gave me the ideal opportunity to test the new multimodal capabilities in the Amazon Bedrock Converse API.

The student was struggling to work out how to approach these problems. I then realized that this was an ideal use case for the recently launched multimodal capabilities. I took pictures of a particularly difficult problem sheet containing several graphs and mathematical symbols, then used the Converse API to build a simple application that could analyze the images. We uploaded the physics exam materials together and asked the model to explain the solution approach.
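A minimal sketch of how such a request could be assembled is shown below. It builds a single user message that combines a text question with one or more images, following the content-block layout of the Converse API. The helper names `image_block` and `build_user_message` are hypothetical, and the list of accepted formats reflects the Converse API in general, not anything specific to Pixtral Large.

```python
# Image formats accepted by Converse image content blocks.
SUPPORTED_FORMATS = {"png", "jpeg", "gif", "webp"}


def image_block(image_bytes, image_format):
    """Wrap raw image bytes in a Converse-style image content block."""
    fmt = image_format.lower()
    if fmt == "jpg":
        fmt = "jpeg"  # the API uses "jpeg" as the format name
    if fmt not in SUPPORTED_FORMATS:
        raise ValueError(f"Unsupported image format: {image_format!r}")
    return {"image": {"format": fmt, "source": {"bytes": image_bytes}}}


def build_user_message(question, images):
    """Build one user message from a question and (bytes, format) image pairs."""
    content = [{"text": question}]
    content.extend(image_block(data, fmt) for data, fmt in images)
    return {"role": "user", "content": content}
```

In practice the bytes would come from the photographed sheets, for instance `Path("sheet1.png").read_bytes()` with a file name of your own. The resulting message would go in the `messages` list passed to the `converse` method of a boto3 `bedrock-runtime` client.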

We were both impressed by what happened next. The model interpreted the diagrams, recognized the French language and the mathematical notation, and explained each problem step by step. As we asked follow-up questions about specific topics, the application kept context across the whole conversation, creating a tutoring experience that felt remarkably natural.
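Keeping context across a conversation comes down to resending the full message history on every turn, because the Converse API itself is stateless. The sketch below shows one way to do that. The `send` parameter is a hypothetical callable that takes the message list and returns a Converse-style response dictionary, for example a thin wrapper around a boto3 client's `converse` method.

```python
def ask(messages, question, send):
    """Append a user turn, call the model, record its reply, and return the text.

    `messages` is mutated in place so the next call carries the full history.
    """
    messages.append({"role": "user", "content": [{"text": question}]})
    response = send(messages)
    reply = response["output"]["message"]
    messages.append(reply)  # keep the assistant turn for later follow-ups
    return "".join(block.get("text", "") for block in reply["content"])
```

Each follow-up question then calls `ask` again with the same `messages` list, so the model sees the earlier questions and answers alongside the new one.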

By the time of the exam, the student felt ready and confident, and I had a compelling real-world example of how Amazon Bedrock’s multimodal capabilities can create meaningful experiences for users.

Start now

The new model is available through Regional API endpoints in US East (Ohio, N. Virginia), US West (Oregon), and Europe (Frankfurt, Ireland, Paris, Stockholm). This Regional availability helps you meet data residency requirements while minimizing latency.

You can start using the model through the AWS Management Console, or programmatically through the AWS Command Line Interface (AWS CLI) and AWS SDKs, using the model ID mistral.pixtral-large-2502-v1:0.
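As one possible starting point with the AWS SDK for Python, the sketch below sends a text-only prompt through the Converse API using boto3. The default Region, token limit, and temperature are illustrative assumptions, and `build_request` and `converse_text` are hypothetical helper names. Depending on how cross-Region inference is set up for your account, you may need to pass the prefixed form of the model ID (us.model-id or eu.model-id) instead of the base ID.

```python
MODEL_ID = "mistral.pixtral-large-2502-v1:0"


def build_request(prompt, model_id=MODEL_ID, max_tokens=512, temperature=0.2):
    """Assemble keyword arguments for a text-only Converse call."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        # Illustrative generation settings; tune them for your workload.
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def converse_text(prompt, region_name="us-east-1", **request_options):
    """Send the prompt to Amazon Bedrock and return the first text block."""
    import boto3  # imported lazily so build_request works without the SDK

    client = boto3.client("bedrock-runtime", region_name=region_name)
    response = client.converse(**build_request(prompt, **request_options))
    return response["output"]["message"]["content"][0]["text"]
```

Calling `converse_text("Summarize Newton's second law.")` requires AWS credentials and model access to be configured. `build_request` can be inspected or unit tested without any network access.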

This launch is a significant step forward in making advanced multimodal AI accessible to developers and organizations of all sizes. By combining Mistral AI’s state-of-the-art model with AWS serverless infrastructure, you can now focus on building innovative applications without worrying about the underlying complexity.