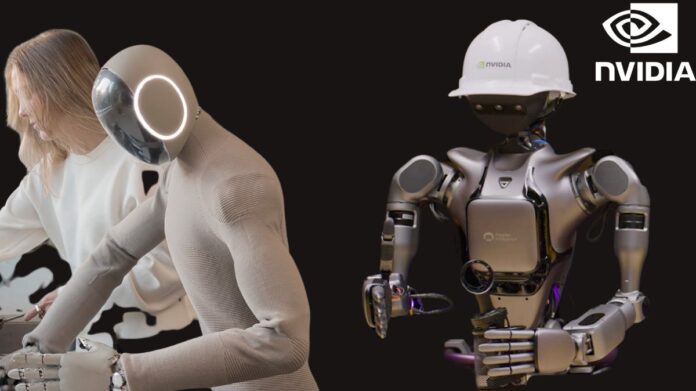

NVIDIA Announces Isaac GR00T N1, the World’s First Open Foundation Model for Humanoid Robots, and Simulation Frameworks to Accelerate Robot Development. The fully customizable foundation model, available now, gives humanoid robots generalized skills and reasoning. Disney Research, Google DeepMind and NVIDIA collaborate on Newton, a next-generation open-source physics engine.

New Omniverse Blueprint for Synthetic Data Generation and Open-Source Dataset Jumpstart the Physical AI Data Flywheel.

GTC: NVIDIA today announced a portfolio of technologies to accelerate humanoid robot development, including NVIDIA Isaac GR00T N1, the world’s first open, fully customizable foundation model for generalized humanoid reasoning and skills.

Other technologies in the portfolio include simulation frameworks and blueprints, such as the NVIDIA Isaac GR00T Blueprint for generating synthetic data, and Newton, an open-source physics engine for robot development that NVIDIA is building with Google DeepMind and Disney Research.

Available now, GR00T N1 is the first in a series of fully customizable models that NVIDIA will pretrain and release to robotics developers worldwide, accelerating the transformation of industries facing a labour shortage projected to affect more than 50 million people globally.

“With NVIDIA Isaac GR00T N1 and new data-generation and robot-learning frameworks, robotics developers worldwide will open the next frontier in the age of artificial intelligence,” said Jensen Huang, founder and CEO of NVIDIA.

GR00T N1 Advances Humanoid Developer Community

The GR00T N1 foundation model uses a dual-system architecture inspired by principles of human cognition. “System 1” is a fast-thinking action model that mirrors human reflexes or intuition. “System 2” is a slow-thinking model for deliberate, methodical decision-making.

System 2, powered by a vision language model, reasons about its environment and the instructions it has received to plan actions. System 1 then translates these plans into precise, continuous robot movements. System 1 is trained on human demonstration data and a massive amount of synthetic data generated by the NVIDIA Omniverse platform.
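The split between a slow planner and a fast controller described above can be illustrated with a toy control loop. This is a minimal sketch under loud assumptions: the functions `system2_plan` and `system1_act`, the two-joint state, the keyword-to-goal lookup, and the replanning rate are all hypothetical stand-ins. It is not the GR00T N1 API or its actual models; a real System 2 would be a vision language model and a real System 1 a learned action model.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Plan:
    target: List[float]  # hypothetical subgoal: desired joint positions


def system2_plan(observation: List[float], instruction: str) -> Plan:
    """Slow, deliberate planner (placeholder for a vision language model).

    Assumption: a fixed lookup maps the instruction to a subgoal; unknown
    instructions keep the robot where it is.
    """
    goals = {"reach": [1.0, 0.5], "rest": [0.0, 0.0]}
    return Plan(target=goals.get(instruction, list(observation)))


def system1_act(state: List[float], plan: Plan, gain: float = 0.2) -> List[float]:
    """Fast reactive controller: each step moves a fraction of the way to the target."""
    return [s + gain * (t - s) for s, t in zip(state, plan.target)]


def run(instruction: str, steps: int = 50, replan_every: int = 10) -> List[float]:
    state = [0.0, 0.0]
    plan = system2_plan(state, instruction)
    for step in range(steps):
        # System 2 runs at a lower rate than System 1 (hypothetical 1:10 ratio).
        if step % replan_every == 0:
            plan = system2_plan(state, instruction)
        # System 1 produces a continuous motion update on every step.
        state = system1_act(state, plan)
    return state


final = run("reach")
print([round(x, 3) for x in final])  # prints [1.0, 0.5]
```

The design choice being illustrated is only the rate separation: expensive reasoning is invoked occasionally, while cheap, smooth motion updates run every control step.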

GR00T N1 can easily generalize across common tasks, such as grasping, manipulating objects with one or both arms, and handing items from one arm to the other. It can also perform multistep tasks that require long context and combinations of general skills. These capabilities apply to use cases such as material handling, packaging and inspection.

Developers and researchers can post-train GR00T N1 with real or synthetic data for their specific humanoid robot or task.

During his GTC keynote, Huang demonstrated 1X’s humanoid robot autonomously performing household tidying tasks using a post-trained policy built on GR00T N1. The robot’s autonomous capabilities are the result of an AI training collaboration between 1X and NVIDIA.

“Adaptability and learning are key to the future of humanoids,” said Bernt Børnich, CEO of 1X Technologies. “NVIDIA’s GR00T N1 significantly improves robot reasoning and skills for building customized models. With minimal post-training data, we were able to fully deploy on NEO Gamma, furthering our mission of creating robots that are not merely tools but companions that can assist people in meaningful, immeasurable ways.”

Other leading humanoid developers worldwide with early access to GR00T N1 include Agility Robotics, Boston Dynamics, Mentee Robotics and NEURA Robotics.

NVIDIA, Google DeepMind and Disney Research Focus on Physics

NVIDIA announced a collaboration with Google DeepMind and Disney Research to develop Newton, an open-source physics engine that lets robots learn to handle complex tasks with greater precision.

Built on the NVIDIA Warp framework, Newton will be optimized for robot learning and compatible with simulation frameworks such as Google DeepMind’s MuJoCo and NVIDIA Isaac Lab. The three companies also plan to enable Newton to use Disney’s physics engine.

Google DeepMind and NVIDIA are also developing MuJoCo-Warp, which will be available to developers through Newton and through Google DeepMind’s open-source MJX library. It is expected to accelerate robotics machine learning workloads by more than 70x.

Disney Research will be one of the first to use Newton to advance its robotic character platform, which powers next-generation entertainment robots such as the expressive Star Wars-inspired BDX droids that joined Huang on stage during his GTC keynote.

The BDX droids are just the beginning. The goal is to bring more characters to life in ways the world has never seen before, and the collaboration among Disney Research, NVIDIA and Google DeepMind is a key part of that vision. “This partnership will allow us to create a new generation of robotic characters that are more expressive and engaging than ever before, and that connect with guests in ways unique to Disney.”

NVIDIA, Disney Research and Intrinsic also announced a new collaboration to develop OpenUSD pipelines and best practices for robotics data workflows.

More Data to Advance Robotics Post-Training

Large, diverse, high-quality datasets are critical for robot development, but they are costly to capture. For humanoids, real-world human demonstration data is limited by the number of hours in a person’s day.

The NVIDIA Isaac GR00T Blueprint for synthetic manipulation motion generation, announced today, helps address this challenge. Built on Omniverse and NVIDIA Cosmos Transfer world foundation models, the blueprint lets developers generate exponentially large amounts of synthetic motion data for manipulation tasks from a small number of human demonstrations.

Using the first components available for the blueprint, NVIDIA generated 780,000 synthetic trajectories in just 11 hours, the equivalent of 6,500 hours, or nine continuous months, of human demonstration data. NVIDIA then combined the synthetic data with real data and improved GR00T N1’s performance by 40% compared with using only real data.
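A quick back-of-envelope check of these figures, using only the numbers stated above. The "nine months" reading assumes demonstrations recorded around the clock, and the roughly 30-second per-trajectory length is an implied average derived here, not a figure NVIDIA reported:

$$
\frac{6{,}500\ \text{h}}{24\ \text{h/day}} \approx 271\ \text{days} \approx 8.9\ \text{months},
\qquad
\frac{6{,}500\ \text{h}}{11\ \text{h}} \approx 590\times,
\qquad
\frac{6{,}500 \times 3{,}600\ \text{s}}{780{,}000} = 30\ \text{s per trajectory}.
$$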

To give the developer community more useful training data, NVIDIA is releasing the GR00T N1 dataset as part of its larger open-source physical AI dataset, also announced at GTC and now available on Hugging Face.

Availability

NVIDIA GR00T N1 training data and task evaluation scenarios are now available for download from Hugging Face and GitHub. The NVIDIA Isaac GR00T Blueprint for synthetic manipulation motion generation is also available now, for download from GitHub or as an interactive demo on build.nvidia.com.

The NVIDIA DGX Spark personal AI supercomputer, also announced today at GTC, gives developers a turnkey system for expanding GR00T N1’s capabilities to new robots, tasks and environments without extensive custom programming.

The Newton physics engine is expected to be available later this year.

To learn more, watch the NVIDIA GTC keynote and register for Humanoid Developer Day sessions, including:

- “An Introduction to Building Humanoid Robots,” a deep dive into NVIDIA Isaac GR00T.
- “Insights Into Disney’s Robotic Character Platform,” on how Disney Research is redefining entertainment robotics with BDX droids.
- “Announcing MuJoCo-Warp and Newton: How Google DeepMind and NVIDIA Are Supercharging Robotics Development,” for a closer look at these new technologies and how Google uses AI models to train AI-powered humanoids for real-world tasks.