NERSC

The National Energy Research Scientific Computing Center (NERSC), the U.S. Department of Energy’s primary open science facility, measured performance and energy consumption for four key high-performance computing (HPC) and AI applications. The tests ran on Perlmutter, a supercomputer equipped with NVIDIA GPUs.

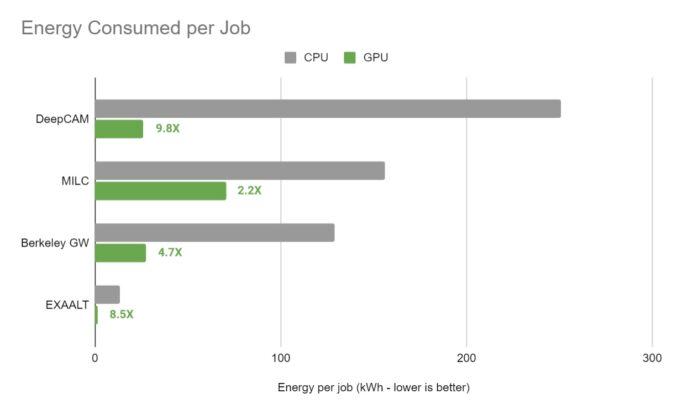

The results were compelling. By leveraging NVIDIA A100 Tensor Core GPUs for acceleration, energy efficiency improved by an average of 5 times, with a weather forecasting application achieving a remarkable 9.8 times improvement.

The efficiency gains were measured by comparing a server with four A100 GPUs to a dual-socket x86 server. NERSC achieved speedups of up to 12 times with the GPU-accelerated system. At the same level of performance, the GPU-accelerated system consumed 588 megawatt-hours less energy per month than a CPU-only system. In addition, running the same workload on a four-way NVIDIA A100 cloud instance for a month could save more than $4 million compared to a CPU-only instance.
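A speedup and an energy-efficiency gain are related but not the same thing: a GPU server typically draws more power than a CPU server, so the efficiency gain depends on both runtime and power draw. The sketch below illustrates that relationship using energy-to-solution (average power multiplied by runtime). The function names and every number in it are hypothetical, chosen only for illustration; they are not NERSC's measurements or methodology.

```python
def energy_to_solution_wh(avg_power_watts: float, runtime_hours: float) -> float:
    """Energy consumed by one run, in watt-hours (average power x runtime)."""
    return avg_power_watts * runtime_hours


def efficiency_gain(cpu_power_w: float, cpu_hours: float,
                    gpu_power_w: float, gpu_hours: float) -> float:
    """Ratio of CPU energy-to-solution to GPU energy-to-solution for the same job."""
    cpu_energy = energy_to_solution_wh(cpu_power_w, cpu_hours)
    gpu_energy = energy_to_solution_wh(gpu_power_w, gpu_hours)
    return cpu_energy / gpu_energy


# Hypothetical inputs (assumptions, not measured values):
# a CPU server drawing 800 W for 12 hours vs. a GPU server drawing 2400 W for 1 hour.
speedup = 12 / 1
gain = efficiency_gain(cpu_power_w=800, cpu_hours=12, gpu_power_w=2400, gpu_hours=1)
print(f"speedup: {speedup:.1f}x, energy-efficiency gain: {gain:.1f}x")
```

With these made-up inputs, a 12 times speedup yields only a 4 times efficiency gain because the GPU server draws three times the power. This is why measured energy figures, not speedups alone, are needed to state an efficiency result.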

These results are particularly significant as they are based on real-world applications rather than synthetic benchmarks. The impact of these efficiency gains is substantial, empowering over 8,000 scientists using Perlmutter to tackle larger challenges and facilitating breakthroughs.

Perlmutter, with its 7,100+ A100 GPUs, supports a wide range of applications. Scientists are utilizing these resources to explore subatomic interactions for discovering new green energy sources.

NERSC tested applications from various fields, including molecular dynamics, materials science, and weather forecasting. For example, MILC is used to simulate the forces that bind particles within an atom, contributing to advancements in quantum computing and the study of dark matter and the universe’s origins. BerkeleyGW focuses on simulating and predicting the optical properties of materials and nanostructures, essential for developing more efficient batteries and electronic devices.

EXAALT, which achieved an 8.5 times efficiency gain with A100 GPUs, addresses fundamental challenges in molecular dynamics, providing researchers with atomic movement simulations in the form of short videos. DeepCAM, the fourth application, detects hurricanes and atmospheric rivers in climate data and achieved a 9.8 times energy-efficiency gain on A100 GPUs.

The potential energy savings with GPU-accelerated systems extend beyond the scientific community. Switching all CPU-only servers running AI to GPU-accelerated systems globally could save an astounding 10 trillion watt-hours of energy per year, equivalent to the energy consumption of 1.4 million homes in a year.
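As a quick sanity check on the scale of that comparison, the two figures quoted above can be converted into more familiar units. The sketch below uses only the 10 trillion watt-hours and 1.4 million homes from the text; the variable names are illustrative, and the per-home value is simply what those two numbers imply, not an independently sourced statistic.

```python
SAVED_WH_PER_YEAR = 10e12  # 10 trillion watt-hours per year, as quoted in the text
HOMES = 1.4e6              # 1.4 million homes, as quoted in the text

# Convert the total to terawatt-hours (1 TWh = 1e12 Wh).
saved_twh = SAVED_WH_PER_YEAR / 1e12

# Annual consumption per home implied by the two quoted figures, in kWh.
kwh_per_home = SAVED_WH_PER_YEAR / HOMES / 1_000

print(f"total savings: {saved_twh:.0f} TWh per year")
print(f"implied consumption per home: {kwh_per_home:,.0f} kWh per year")
```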

Accelerated computing is not limited to scientific research. Pharmaceutical companies are using GPU-accelerated simulation and AI to speed up drug discovery, and automakers such as BMW Group are using them to model entire factories. This demonstrates the growing adoption of accelerated computing and AI in various enterprise domains.

The NERSC results align with previous analyses showcasing the potential savings achievable through accelerated computing. In NVIDIA’s separate analysis, GPUs delivered 42 times better energy efficiency for AI inference compared to CPUs.

The combination of accelerated computing and AI is driving an industrial HPC revolution, as stated by NVIDIA founder and CEO Jensen Huang. With its proven benefits in terms of performance and energy efficiency, accelerated computing is transforming HPC and AI applications across industries.

Conclusion

Research from the U.S. Department of Energy’s NERSC is highlighted in NVIDIA’s blog post, showing that in high-performance computing and AI applications, NVIDIA GPUs provide noticeably higher energy efficiency than CPUs.

Using NVIDIA A100 GPUs increased energy efficiency by an average of five times, according to testing on real-world scientific applications. This translates into significant cost and energy savings and lets scientists take on more challenging problems. The results also have wider business implications: GPU acceleration could deliver significant energy savings outside of science, with businesses using GPUs to process data more quickly while consuming far less energy.

In the end, the blog post presents GPU-accelerated computing as a major force behind enhanced energy sustainability and scientific progress.
