Boost Your NLP Applications with Red Hat OpenShift and 5th Generation Intel Xeon Scalable Processors

Red Hat OpenShift AI

Our AI results on OpenShift, where we have been testing the new 5th generation Intel Xeon CPU, have genuinely astonished us. Naturally, AI is a popular topic of discussion everywhere from the boardroom to the data center.

There is no doubt about the benefits: AI lowers expenses and increases corporate efficiency.

It helps uncover previously hidden insights in analytics and deepens your understanding of the business, enabling you to make better-informed decisions faster than before.

Natural language processing (NLP) has become valuable to businesses well beyond recognizing human speech for customer support. Today, NLP is used to improve machine translation, detect spam more accurately, enhance customer chatbot experiences, and even apply sentiment analysis to gauge the tone of social media. The NLP market is expected to reach a worldwide value of USD 80.68 billion by 2026, and companies will need to support it and grow with it quickly.

Our goal was to determine how the newest 5th generation Intel Xeon Scalable processors affect NLP AI workloads on Red Hat OpenShift.

How Red Hat OpenShift Supports Your AI Foundation

Red Hat OpenShift is a platform for deploying, managing, and scaling applications, built on Kubernetes container orchestration. When applications move to a containerized environment, they become less dependent on one another. This lets you quickly identify, isolate, and resolve problems, as well as roll out updates and bug fixes. For AI workloads such as natural language processing in particular, the containerized design lowers costs and saves time in maintaining the production environment. OpenShift's supported environment also helps teams design, test, and deploy AI models more quickly. That is why Red Hat OpenShift is the best option.
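To make the containerized deployment model concrete, here is a minimal sketch in Python that builds a standard Kubernetes `apps/v1` Deployment manifest for a single inference container. The function name `build_inference_deployment`, the image reference, and the CPU and memory sizes are hypothetical placeholders, not the configuration used in these tests.

```python
import json


def build_inference_deployment(name: str, image: str, replicas: int = 1,
                               cpu: str = "8", memory: str = "16Gi") -> dict:
    """Return a minimal Kubernetes apps/v1 Deployment manifest as a dict.

    Names, the image reference, and resource sizes are hypothetical
    placeholders, not values from the benchmark in this article.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # Requests equal to limits give the pod the Guaranteed QoS class.
                            "resources": {
                                "requests": {"cpu": cpu, "memory": memory},
                                "limits": {"cpu": cpu, "memory": memory},
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = build_inference_deployment(
        name="bert-large-inference",                          # hypothetical name
        image="registry.example.com/nlp/bert-large:latest",   # hypothetical image
    )
    # The JSON output can be piped into `oc apply -f -`.
    print(json.dumps(manifest, indent=2))
```

Because OpenShift accepts standard Kubernetes manifests, the printed JSON can be passed to `oc apply -f -`. Setting requests equal to limits is one common way to give CPU-bound inference pods predictable resources.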

Intel AMX Changed the Rules

Almost a year ago, Intel released the 4th generation Intel Xeon Scalable processor, which includes Intel Advanced Matrix Extensions (Intel AMX). Intel AMX is an integrated accelerator that lets the CPU speed up deep learning and inference tasks.

Thanks to Intel AMX, the CPU can move easily between AI workloads and general-purpose computing tasks. The introduction of Intel AMX on 4th generation Intel Xeon Scalable processors delivered significant performance gains.
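Before running AMX-dependent workloads, it can help to confirm that a node's CPU exposes Intel AMX. Recent Linux kernels list the `amx_tile`, `amx_bf16`, and `amx_int8` flags in `/proc/cpuinfo`. The sketch below, with hypothetical helper names, parses that text. Note that the flags only show that the kernel sees AMX, not that a given framework build actually uses it.

```python
from pathlib import Path

# Flag names the Linux kernel reports in /proc/cpuinfo for Intel AMX support.
AMX_FLAGS = ("amx_tile", "amx_bf16", "amx_int8")


def amx_flags_from_cpuinfo(cpuinfo_text: str) -> dict:
    """Report which AMX flags appear on the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # Line looks like: "flags\t\t: fpu vme de ... amx_tile ..."
            flags = set(line.split(":", 1)[1].split())
            return {flag: flag in flags for flag in AMX_FLAGS}
    # No flags line found: report every AMX flag as absent.
    return {flag: False for flag in AMX_FLAGS}


def host_amx_flags(path: str = "/proc/cpuinfo") -> dict:
    """Read the host's cpuinfo (Linux only) and report AMX flag presence."""
    return amx_flags_from_cpuinfo(Path(path).read_text())


if __name__ == "__main__":
    sample = "processor\t: 0\nflags\t\t: fpu sse2 avx512f amx_bf16 amx_tile amx_int8\n"
    print(amx_flags_from_cpuinfo(sample))
    # → {'amx_tile': True, 'amx_bf16': True, 'amx_int8': True}
```

On a real node, `host_amx_flags()` reads the live file; inside a container, `/proc/cpuinfo` normally reflects the host CPU.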

After Intel unveiled its 5th generation Intel Xeon Scalable processor in December 2023, we set out to measure the extra value this processor generation offers over its predecessor.

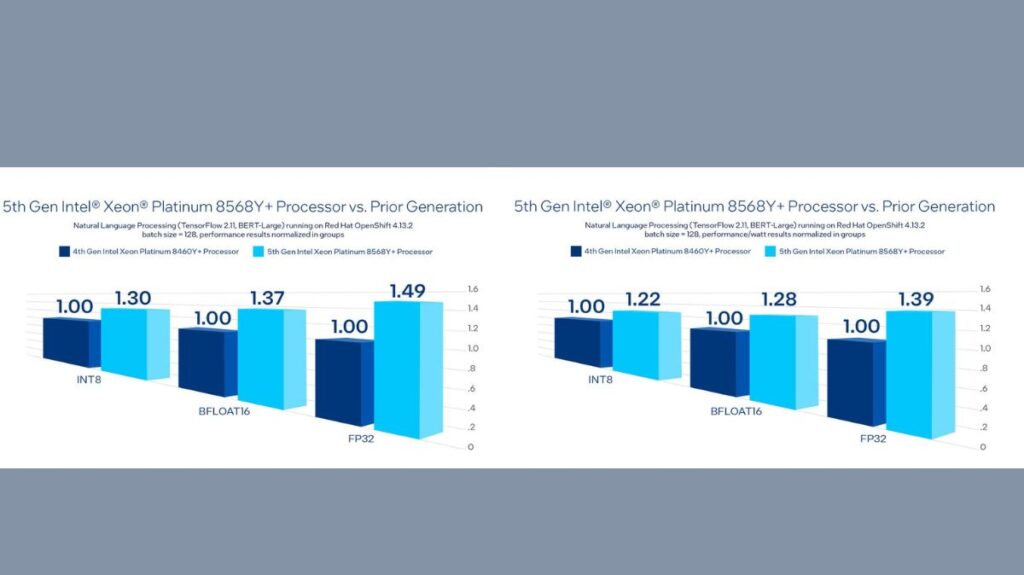

Because BERT-Large is widely used in many business NLP workloads, we chose it as our deep learning model. The graph above shows the inference performance gain of the 5th generation Intel Xeon 8568Y+ over the 4th generation Intel Xeon 8460+ on Red Hat OpenShift 4.13.2. The results are remarkable.

These 5th generation Intel Xeon Scalable processors improved on their predecessors in several notable ways:

- OpenShift on a 5th generation Intel Xeon Platinum 8568Y+ with INT8 yields up to 1.3x greater Natural Language Processing inference performance (BERT-Large) compared to previous generations with INT8.

- OpenShift on a 5th generation Intel Xeon Platinum 8568Y+ with BF16 yields 1.37x greater Natural Language Processing inference performance (BERT-Large) compared to previous generations with BF16.

- OpenShift on a 5th generation Intel Xeon Platinum 8568Y+ with FP32 yields 1.49x greater Natural Language Processing inference performance (BERT-Large) compared to previous generations with FP32.

- We also measured power consumption, and the 5th generation delivers substantially higher performance per watt:

- Natural Language Processing inference performance (BERT-Large) running on OpenShift on a 5th generation Intel Xeon Platinum 8568Y+ with INT8 delivers up to 1.22x higher performance per watt than the previous generation with INT8.

- Natural Language Processing inference performance (BERT-Large) running on OpenShift on a 5th generation Intel Xeon Platinum 8568Y+ with BF16 delivers up to 1.28x higher performance per watt than the previous generation with BF16.

- Natural Language Processing inference performance (BERT-Large) running on OpenShift on a 5th generation Intel Xeon Platinum 8568Y+ with FP32 delivers up to 1.39x higher performance per watt than the previous generation with FP32.
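The throughput and performance-per-watt figures can be related with simple arithmetic. If performance per watt is throughput divided by average power, then a perf/watt ratio E, a throughput ratio T, and a power ratio P satisfy E = T / P, so P = T / E. The sketch below applies this to the quoted ratios. It assumes both figures in each pair come from the same runs and use that definition of performance per watt; the article does not state either, and the function name is hypothetical.

```python
# Ratios quoted above (5th gen 8568Y+ vs. 4th gen 8460+, BERT-Large inference).
# Each entry: precision -> (throughput ratio, performance-per-watt ratio).
QUOTED_RATIOS = {
    "INT8": (1.30, 1.22),
    "BF16": (1.37, 1.28),
    "FP32": (1.49, 1.39),
}


def implied_power_ratio(throughput_ratio: float, perf_per_watt_ratio: float) -> float:
    """Solve E = T / P for P, the new-to-old average power ratio.

    Assumes perf/watt means throughput divided by average power and that both
    ratios come from the same pair of runs (an assumption, not a stated fact).
    """
    return throughput_ratio / perf_per_watt_ratio


if __name__ == "__main__":
    for precision, (t, e) in QUOTED_RATIOS.items():
        print(f"{precision}: implied power ratio ~ {implied_power_ratio(t, e):.2f}")
```

Under those assumptions, each precision works out to a power ratio of roughly 1.07, meaning the 5th generation system would have drawn about 7% more average power while delivering 1.3x to 1.49x the throughput. Treat this as a back-of-the-envelope consistency check, not a measured result.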

Testing Methodology

The workload ran a BERT-Large Natural Language Processing (NLP) inference job using an Intel-optimized TensorFlow framework and a pre-trained NLP model from Intel AI Reference Models. Running on Red Hat OpenShift 4.13.13, it measured throughput on the Stanford Question Answering Dataset (SQuAD) to compare the performance of 4th and 5th generation Intel Xeon processors.
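The methodology above compares throughput across processor generations. A minimal, framework-agnostic sketch of that kind of measurement follows: it times repeated calls to an inference function after a warm-up phase, reports samples per second, and divides two such figures to get a generation-over-generation ratio. `measure_throughput`, `speedup`, and `fake_infer` are hypothetical names; this is not the Intel AI Reference Models benchmark script.

```python
import time
from typing import Callable, Sequence


def measure_throughput(infer: Callable[[Sequence], object], batch: Sequence,
                       warmup_iters: int = 5, timed_iters: int = 20) -> float:
    """Return samples per second for calling `infer` on `batch` repeatedly.

    `infer` is a hypothetical stand-in for a model's inference call (for
    example, a BERT-Large question-answering step). Warm-up calls are excluded
    from timing.
    """
    if timed_iters <= 0 or not batch:
        raise ValueError("need at least one timed iteration and a non-empty batch")
    for _ in range(warmup_iters):
        infer(batch)  # warm up caches, graph compilation, and thread pools
    start = time.perf_counter()
    for _ in range(timed_iters):
        infer(batch)
    elapsed = time.perf_counter() - start
    return timed_iters * len(batch) / elapsed


def speedup(new_throughput: float, baseline_throughput: float) -> float:
    """Generation-over-generation ratio, e.g. 5th gen vs. 4th gen Xeon."""
    return new_throughput / baseline_throughput


if __name__ == "__main__":
    # Dummy workload standing in for real model inference.
    def fake_infer(batch):
        return [sum(range(10_000)) for _ in batch]

    tput = measure_throughput(fake_infer, batch=list(range(8)))
    print(f"{tput:.1f} samples/s")
```

In a real comparison, `infer` would wrap the BERT-Large model running on SQuAD inputs, and both processor generations would use identical batch sizes, precisions, and thread settings so that the ratio reflects the hardware rather than the configuration.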

FAQs

What is OpenShift, and why is it used?

OpenShift makes it easier to develop, deploy, and manage container-based applications. It offers a self-service platform for building, modifying, and launching applications on demand, which enables faster development and release cycles. Think of container images as cookie molds and containers as the cookies made from them.

What was Red Hat OpenShift designed for?

Red Hat OpenShift simplifies deploying and maintaining hybrid infrastructure while giving you the choice of fully managed or self-managed services that can run on-premises, in the cloud, or in hybrid environments.