Memory Machine

MySQL Databases Are Accelerated by Intelligent Tiering

Memory Machine X Server Expansion software provides intelligent tiering. Using memory page movement, its QoS policies adapt to different application workloads in order to maximise bandwidth or minimise latency.

Latency tiering intelligently places data across heterogeneous memory devices based on the “temperature” of memory pages, that is, how frequently they are accessed. To lower the average latency of memory accesses and improve application performance, the MemVerge QoS engine moves hot pages to DRAM and cold pages to CXL memory.
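The temperature-based placement described above can be sketched in a few lines of Python. This is a minimal illustration, not MemVerge's implementation: the function names, the access log, and the latency figures (100 ns for DRAM, 250 ns for CXL) are assumptions chosen only for the example.

```python
from collections import Counter


def place_pages(access_log, dram_capacity_pages):
    """Put the most frequently accessed pages in DRAM and the rest in CXL."""
    counts = Counter(access_log)
    # Rank pages from hottest to coldest; ties are broken by page id.
    ranked = sorted(counts, key=lambda page: (-counts[page], page))
    placement = {page: "DRAM" for page in ranked[:dram_capacity_pages]}
    placement.update({page: "CXL" for page in ranked[dram_capacity_pages:]})
    return placement


def average_latency_ns(access_log, placement, dram_ns=100, cxl_ns=250):
    """Average access latency for a log, using assumed per-tier latencies."""
    total = sum(dram_ns if placement[page] == "DRAM" else cxl_ns
                for page in access_log)
    return total / len(access_log)


# Hypothetical access log: page 1 is hot, pages 3 and 4 are cold.
log = [1, 1, 1, 1, 2, 2, 3, 4]
tiered = place_pages(log, dram_capacity_pages=2)
# A deliberately poor placement for comparison: cold pages occupy DRAM.
inverted = {1: "CXL", 2: "CXL", 3: "DRAM", 4: "DRAM"}
```

With these assumed numbers, the tiered placement averages 137.5 ns per access against 212.5 ns for the inverted one, which is the effect the latency policy aims for.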

Because hot pages sit in DRAM, where they can be accessed quickly, and cold pages are kept in CXL memory, MySQL on Memory Machine X shows lower latency along with higher TPS, QPS, and CPU utilisation.

MySQL is the world's most widely used open-source SQL database management system. Using TPC-C benchmark tests, MemVerge compared the performance of MySQL with the Memory Machine Server Expansion latency policy against MySQL with Transparent Page Placement in the kernel.

Memory Machine delivered 20–40% lower latency (bottom-left chart), which in turn let MySQL achieve better CPU utilisation and higher queries per second (QPS) and transactions per second (TPS).

Fabric-Attached CXL Memory Accelerates Ray

Ray and GISMO (Global IO-free Shared Memory Objects)

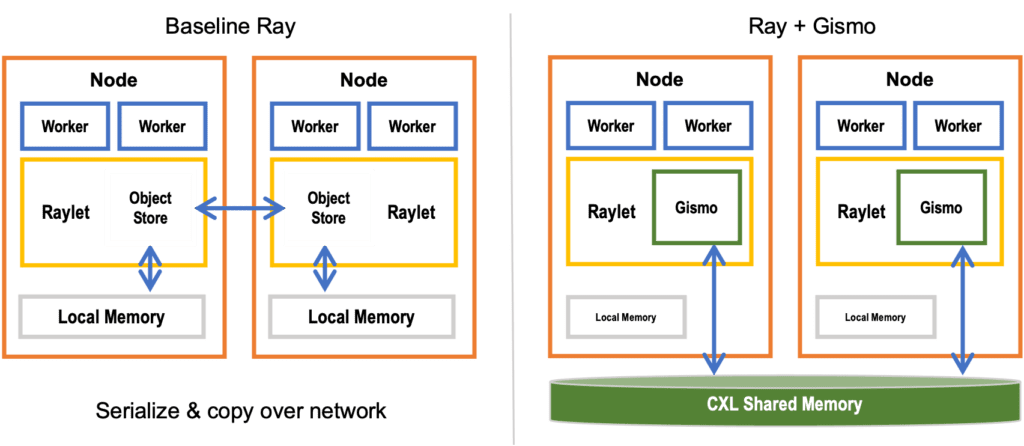

MemVerge Memory Machine X includes Fabric Attached Memory (FAM) software features for a range of database, machine learning, and artificial intelligence applications. Among them is GISMO, a memory object store API that lets applications create and access memory objects across multiple nodes using memory semantics.

GISMO lets applications access data directly from the shared memory pool and maintains cache coherence between processors in different servers. This reduces or eliminates data transfers over the network, which are the most expensive stage of network-based message passing.

In a baseline Ray environment, sharing data across processes through message passing involves three steps:

- Writing the data to node A’s local memory

- Sending the message over the network

- Placing the data in node B’s local memory

With GISMO, node A writes to shared memory and node B reads from it directly. In single-writer, multiple-reader scenarios such as Ray-based AI, GISMO provides high throughput and low latency while preserving cache coherence among the nodes.
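The single-writer, reader-attaches pattern can be illustrated on one machine with Python's standard `multiprocessing.shared_memory` module. This is only an analogy under stated assumptions: it is not the GISMO API, it does not span nodes, and the function names and payload are hypothetical. It shows the shape of the idea, in which the reader attaches to a named memory segment instead of receiving a message.

```python
from multiprocessing import shared_memory


def write_object(payload: bytes) -> shared_memory.SharedMemory:
    """Writer side: place the payload in a named shared-memory segment."""
    segment = shared_memory.SharedMemory(create=True, size=len(payload))
    segment.buf[:len(payload)] = payload
    return segment


def read_object(name: str, length: int) -> bytes:
    """Reader side: attach to the segment by name and read it in place."""
    segment = shared_memory.SharedMemory(name=name)
    try:
        # The copy into bytes is only so a value can be returned after the
        # segment is closed; a real consumer could work on segment.buf directly.
        return bytes(segment.buf[:length])
    finally:
        segment.close()


def demo() -> bytes:
    payload = b"tensor-bytes"  # hypothetical object contents
    writer = write_object(payload)
    try:
        # No send or receive step: the reader only needs the segment name.
        return read_object(writer.name, len(payload))
    finally:
        writer.close()
        writer.unlink()
```

The reader needs only the segment name and the payload length, so the network transmission step in the list above has no counterpart here.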

280% faster shuffle across 4 nodes and 675% faster remote get

Memory Machine X fabric-attached memory makes Ray clusters IO-free by removing the need for object serialisation and network transfers for remote object access. Memory Machine X also creates a zero-copy environment, so identical objects are no longer duplicated on different nodes. In addition, the fabric-attached memory software reduces object spilling and data skew on each node that accesses the memory pool.

In MemVerge testing conducted using software emulation of a pooled CXL memory sharing environment, Memory Machine X Fabric Attached Memory software showed the same access time for a local object get, 675% faster access time for a remote object get, and 280% better performance for a shuffle across 4 nodes.

Using CXL Memory, MemVerge and Micron Increase NVIDIA GPU Utilisation

Engineers from MemVerge and Micron led a demonstration that ran the FlexGen high-throughput generation engine and the OPT-66B large language model on a Supermicro Petascale Server. The server was equipped with an AMD Genoa CPU, an Nvidia A10 GPU, Micron DDR5-4800 DIMMs, CZ120 CXL memory modules, and MemVerge Memory Machine X intelligent tiering software.

The results were striking. Using tiered memory, the FlexGen benchmark completed its tasks in less than half the time required with traditional NVMe storage. At the same time, GPU utilisation rose from 51.8% to 91.8%, thanks to the transparent data tiering that MemVerge Memory Machine X software manages across GPU, CPU, and CXL memory.

The partnership between MemVerge, Micron, and Supermicro marks a turning point for AI workloads, giving businesses higher performance, better efficiency, and faster time-to-insight. With CXL memory and intelligent tiering, enterprises can open up new avenues for innovation and accelerate their AI initiatives.

“Through our partnership with MemVerge, Micron is able to show the significant advantages of CXL memory modules in increasing effective GPU throughput for AI applications, resulting in a quicker time to insights for customers.”

CXL Offers 300% Greater Capacity at 50% Lower Cost

Use case and cost analysis presentations at the Q1’24 Memory Fabric Forum.

50% lower cost

If cost reduction is a priority, CXL technology offers a less expensive alternative to 128GB DIMMs for server memory when scaling to multi-terabyte configurations.

A new class of PCIe Add-In Cards (AICs) supports up to eight lower-cost 64GB or DDR4 DIMMs. With a mixed memory configuration, you can expand capacity up to 8TB for half the price, as the table below shows.

The Memory Machine X Server Expansion software automatically moves your data to the proper memory tier for optimal performance and determines whether CXL memory is suitable for your application environment.

300% increased capacity

- A 2-socket server can be configured with up to 11.26TB of memory using a combination of DIMMs and AICs, which is 38% more than the 8TB capacity achievable with DIMMs alone.

- A 4-socket server can be configured with up to 32TB of memory using a combination of DIMMs and AICs, which is 300% more memory than can be achieved with only DIMMs.
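The percentages in the two bullets above can be checked with a short calculation. Two things here are assumptions rather than statements from the source: the 2-socket figures are read as binary terabytes (11,264 GB and 8,192 GB), which is one reading under which 38% comes out, and the 4-socket DIMM-only baseline of 8TB is inferred from the stated 300% increase.

```python
def percent_more(expanded, baseline):
    """Percentage by which `expanded` exceeds `baseline`."""
    return (expanded / baseline - 1) * 100


# 2-socket: assumed 11,264 GB (11.26TB) with AICs versus 8,192 GB (8TB) of DIMMs.
two_socket = percent_more(11264, 8192)

# 4-socket: 32TB with AICs versus an inferred 8TB of DIMMs only.
four_socket = percent_more(32, 8)
```

Under these assumptions `two_socket` is 37.5, which rounds to the stated 38%, and `four_socket` is 300.0.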

The Memory Machine X Server Expansion software automatically places your data in the proper memory tier for maximum performance and advises you on whether adding CXL memory is appropriate for your application environment.

Vector Databases Are Accelerated by Intelligent Tiering

Presentation of use case and Weaviate test findings at Q1’24 Memory Fabric Forum.

Using Memory Machine X Server QoS Feature for intelligent tiering

Using memory page movement, our Memory Machine X QoS policies adapt to different application workloads in order to maximise bandwidth or minimise latency. Bandwidth-optimised placement aims to maximise system bandwidth by carefully placing and moving data between DRAM and CXL memory, taking into account the bandwidth requirements of each application.

To balance bandwidth and latency, the bandwidth policy engine uses the available bandwidth of all DRAM and CXL memory devices, with a user-selectable DRAM-to-CXL ratio.
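A user-selectable DRAM-to-CXL ratio can be pictured as weighted interleaving of page allocations. The sketch below is a simplified model, not the MemVerge policy engine: the function name, the integer weights, and the round-robin pattern are assumptions made for illustration.

```python
from itertools import cycle


def weighted_interleave(num_pages, dram_weight, cxl_weight):
    """Assign pages to tiers in a repeating dram_weight:cxl_weight pattern."""
    if dram_weight < 0 or cxl_weight < 0 or dram_weight + cxl_weight == 0:
        raise ValueError("weights must be non-negative and not both zero")
    # One period of the pattern, e.g. 4:1 gives DRAM, DRAM, DRAM, DRAM, CXL.
    pattern = ["DRAM"] * dram_weight + ["CXL"] * cxl_weight
    tiers = cycle(pattern)
    return [next(tiers) for _ in range(num_pages)]


# Hypothetical 4:1 ratio over ten pages.
layout = weighted_interleave(10, dram_weight=4, cxl_weight=1)
```

With a 4:1 ratio, eight of the ten pages land in DRAM and two in CXL, so both tiers contribute bandwidth in proportion to the chosen weights. Equal interleaving is the special case where both weights are the same.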

- The MemVerge QoS engine places hot and cold data across DRAM and CXL using equal interleaving, weighted interleaving, random page selection, and intelligent page selection.

- With Memory Machine X, the AI-native Weaviate vector database delivers higher QPS and lower latency.

- Vector databases support generative AI models by giving generative AI chatbots an external knowledge base and helping to ensure that they provide reliable information.

Developers use Weaviate, an AI-native vector database, to build dependable and user-friendly AI-powered applications. Weaviate provides benchmark tests that measure latency and queries per second.

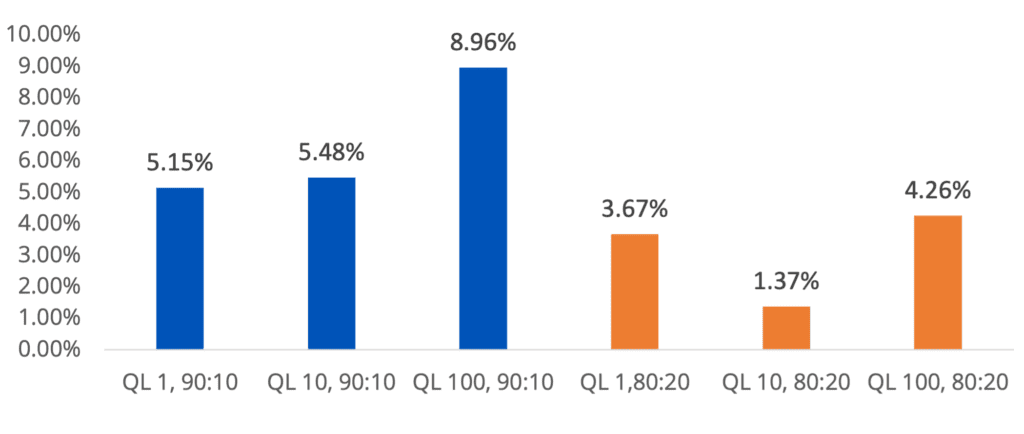

MemVerge’s QPS test results are shown below. With Memory Machine X using 10% and 20% CXL memory, Weaviate delivered up to 7.35% more queries per second across multiple query limits (QL).