Large language models (LLMs) have grown rapidly in size and capability, and they now power everything from chatbots to sophisticated code-generation tools. This growth also creates serious difficulties, especially when running these models on hardware with constrained memory and processing capacity. This is where LLM quantization comes in, offering a more efficient way to scale AI.

The challenge of large language models

Flagship LLMs like GPT-4 and Llama 3 70B have become increasingly popular in recent years. With tens to hundreds of billions of parameters, however, these models are too big to run on low-power edge devices. Smaller LLMs, such as Llama 3 8B and Phi-3, can run on edge devices and still produce great results, but they need a lot of memory and processing power. That makes deployment difficult on low-power edge devices, which have less processing power and working memory than cloud-based systems.
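
To put rough numbers on the problem, the sketch below estimates the memory needed just to hold a model's weights at different precisions. The helper name `weight_memory_gb` is made up for this illustration, the parameter counts are read off the model names (8B and 70B), and the estimate ignores activations, caches, and runtime overhead.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Parameter counts are assumptions taken from the model names (8B and 70B).
for name, params in [("8B model", 8e9), ("70B model", 70e9)]:
    for bits in (32, 16, 8, 4):
        gb = weight_memory_gb(params, bits)
        print(f"{name} at {bits:>2}-bit: {gb:6.1f} GB")
```

By this estimate a 70B-parameter model needs about 140 GB for its weights at 16 bits and about 35 GB at 4 bits, while an 8B-parameter model drops from 16 GB to 4 GB.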

What quantization means

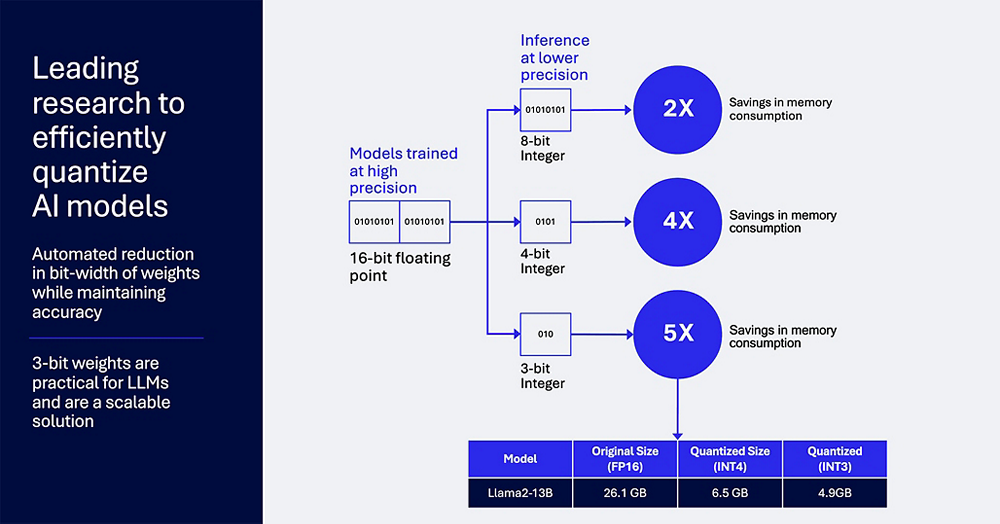

Quantization lowers the precision of the values used in calculations, which reduces the size of the model and the resources required to run it. By converting floating-point representations to lower-bit integers, it speeds up processing and drastically lowers memory needs, making it possible to run LLMs on edge devices.
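
As a minimal sketch of the idea, the code below maps a handful of floating-point weights to 8-bit integers and back. It assumes symmetric, per-tensor quantization with a single scale factor, which is only one of several possible schemes; the function names and sample weights are invented for the example.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9, -0.05]  # made-up example values
q, scale = quantize_int8(weights)           # q is [42, -127, 0, 90, -5]
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now fits in one byte instead of four, and the rounding error per weight is at most half the scale. Note how the small weight 0.003 collapses to zero, which is the kind of information loss quantization methods try to keep under control.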

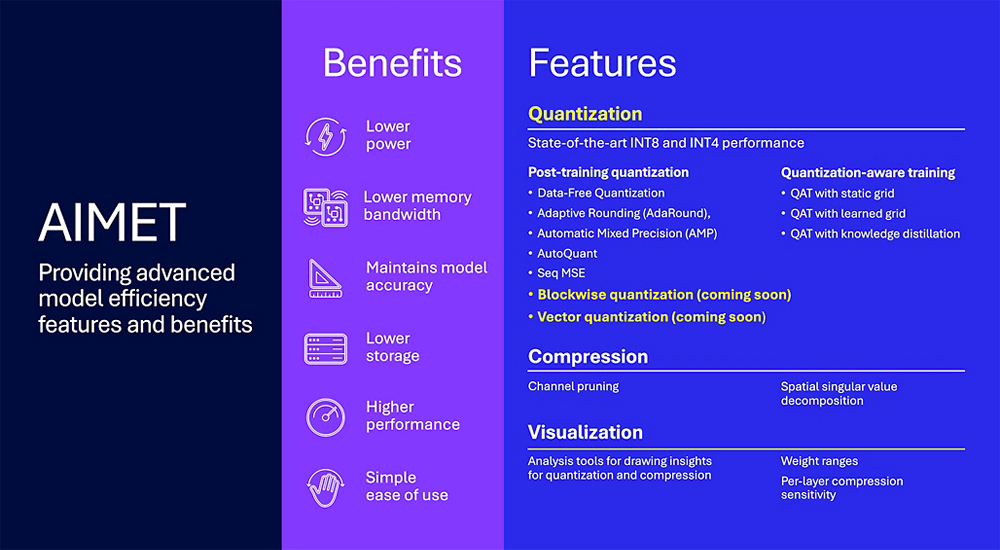

For many years, Qualcomm AI Research has been doing cutting-edge research on model efficiency and publishing its findings. The quantization methods it studies include quantization-aware training (QAT) and post-training quantization (PTQ). QAT incorporates quantization into the training process, which lets the model adapt to the reduced precision and retain higher accuracy. PTQ converts a pre-trained model to a lower-precision format quickly and easily, so it is faster to apply but typically less accurate. How can we get the best of both worlds?
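
To make the QAT idea concrete, here is a toy sketch. It assumes a common formulation in which the forward pass uses "fake-quantized" weights while gradients update full-precision copies (the straight-through estimator). The two-weight linear model, the data, and the grid step of 0.25 are all invented for illustration, and this is not presented as Qualcomm's training recipe.

```python
def fake_quantize(w, step):
    """Round w to the nearest multiple of step, keeping it as a float."""
    return round(w / step) * step


def train_qat(data, step, lr=0.05, epochs=200):
    """Fit y = w0*x0 + w1*x1 while the forward pass only sees quantized weights."""
    latent = [0.0, 0.0]  # full-precision weights that SGD actually updates
    for _ in range(epochs):
        for x, y in data:
            wq = [fake_quantize(w, step) for w in latent]
            err = sum(wi * xi for wi, xi in zip(wq, x)) - y
            # Straight-through estimator: treat d(wq)/d(latent) as 1, so the
            # gradient of the squared error is applied to the latent weights.
            latent = [w - lr * 2 * err * xi for w, xi in zip(latent, x)]
    return latent, [fake_quantize(w, step) for w in latent]


# Hypothetical training set: (inputs, target) pairs.
data = [((1.0, 0.0), 0.37), ((0.0, 1.0), -0.6), ((1.0, 1.0), -0.23)]
latent, quantized = train_qat(data, step=0.25)
print("latent weights:   ", latent)
print("quantized weights:", quantized)
```

PTQ, by contrast, would amount to training without `fake_quantize` in the loop and rounding the weights once at the end, which is simpler but gives the model no chance to adapt to the rounding.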

Helping developers quantize their models

Developers can now compress, quantize, and visualise their models with the Qualcomm Innovation Center’s open-source AI Model Efficiency Toolkit. Through the recently released Qualcomm AI Hub portal, they can also choose from a library of more than 100 pre-optimized AI models that are ready to deploy, with up to four times faster inferencing.

Among the more recent methods made available to the AI community are sequential mean squared error (MSE) and knowledge distillation. Sequential MSE finds a weight quantization scale that minimises the MSE of the output activations, while knowledge distillation enhances QAT by training a smaller “student” model to imitate a larger “teacher” model. Qualcomm AI Research’s study on low-rank QAT for LLMs takes a detailed look at this subject.
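
The objective behind the scale search can be illustrated with a small grid search. The sketch below is a simplified stand-in, not the toolkit's implementation: it handles a single weight vector, assumes a 4-bit symmetric grid (`qmax=7`), and uses made-up weights and calibration inputs. It starts from the usual max-abs scale and keeps any smaller scale that lowers the MSE of the layer output.

```python
def quantize(weights, scale, qmax=7):
    """Symmetric quantization to integers in [-qmax, qmax], returned as floats."""
    return [max(-qmax, min(qmax, round(w / scale))) * scale for w in weights]


def output_mse(weights, q_weights, inputs):
    """MSE between the full-precision and quantized outputs of a dot product."""
    total = 0.0
    for x in inputs:
        ref = sum(w * xi for w, xi in zip(weights, x))
        out = sum(w * xi for w, xi in zip(q_weights, x))
        total += (ref - out) ** 2
    return total / len(inputs)


def search_scale(weights, inputs, qmax=7, num_candidates=50):
    """Grid search over scales, starting from the max-abs scale."""
    max_scale = max(abs(w) for w in weights) / qmax
    best_scale = max_scale
    best_mse = output_mse(weights, quantize(weights, max_scale, qmax), inputs)
    for i in range(1, num_candidates):
        scale = max_scale * i / num_candidates  # smaller scales clip outliers
        mse = output_mse(weights, quantize(weights, scale, qmax), inputs)
        if mse < best_mse:
            best_scale, best_mse = scale, mse
    return best_scale, best_mse


weights = [0.9, -0.11, 0.05, 0.32]                 # hypothetical layer weights
calibration_inputs = [[1.0, 0.5, -0.2, 0.3],       # hypothetical activations
                      [0.2, -1.0, 0.7, 0.1],
                      [-0.4, 0.3, 0.9, -0.8]]
best_scale, best_mse = search_scale(weights, calibration_inputs)
print(best_scale, best_mse)
```

The point of measuring error on the outputs rather than on the weights is that a scale which clips a large weight slightly can still give a better result if it represents the remaining weights more finely.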

Vector quantization

The role of vector quantization in LLMs

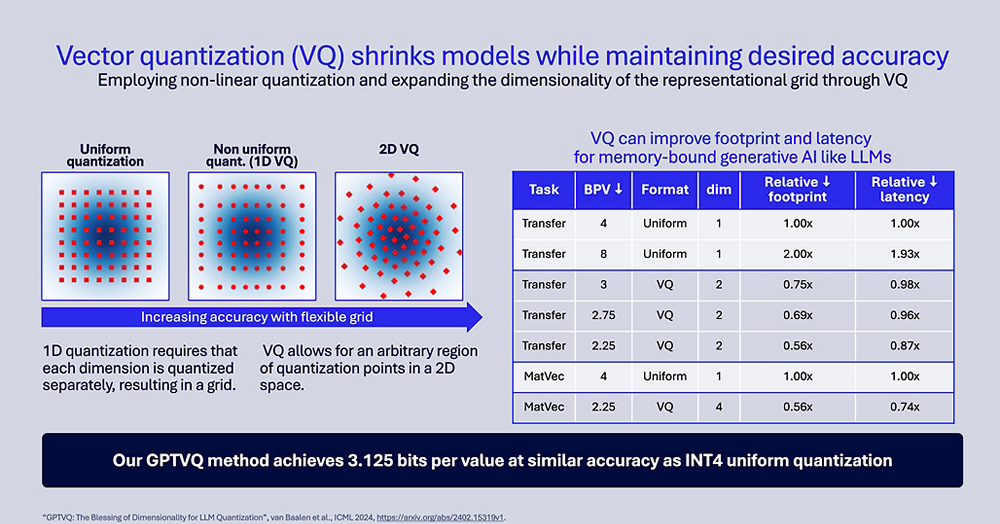

Vector quantization (VQ) is a promising development that Qualcomm has been working on. VQ takes into account the joint distribution of parameters, as opposed to conventional techniques that quantize each parameter separately, resulting in more effective compression and less information loss.

Qualcomm’s method, GPTVQ, applies vector quantization to LLMs by quantizing groups of parameters together rather than individually. This approach reduces model size more effectively than conventional quantization techniques while preserving accuracy. For example, Qualcomm’s tests showed that GPTVQ could come close to the original model’s accuracy at a substantially smaller model size, which could be a game changer for deploying powerful AI models on edge devices.
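
The basic mechanics of vector quantization can be sketched with plain k-means. This is a generic illustration rather than the GPTVQ algorithm, whose details are not covered here: weights are grouped into pairs, a small codebook of representative pairs is learned, and each pair is then stored as an index into that codebook. The weights, the codebook size, and the function names are assumptions made for the example.

```python
def nearest(codebook, v):
    """Index of the codebook entry closest to v (squared Euclidean distance)."""
    return min(range(len(codebook)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(codebook[i], v)))


def fit_codebook(vectors, k, iterations=20):
    """Plain k-means; centroids start at the first k vectors for reproducibility."""
    codebook = [list(v) for v in vectors[:k]]
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for v in vectors:
            groups[nearest(codebook, v)].append(v)
        for i, group in enumerate(groups):
            if group:  # keep the old centroid if no vector was assigned to it
                codebook[i] = [sum(col) / len(group) for col in zip(*group)]
    return codebook


# Made-up weights; consecutive pairs form the 2-D vectors to be quantized.
weights = [0.9, 0.8, -0.5, -0.6, 0.1, 0.0, 0.85, 0.75, -0.45, -0.55, 0.05, 0.1]
vectors = [weights[i:i + 2] for i in range(0, len(weights), 2)]

codebook = fit_codebook(vectors, k=3)
indices = [nearest(codebook, v) for v in vectors]      # what gets stored
restored = [x for i in indices for x in codebook[i]]   # decoded weights
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(indices, codebook, max_error)
```

Because the codebook entries follow the joint distribution of the pairs, correlated weights such as (0.9, 0.8) and (0.85, 0.75) share one entry, and the model only has to store one small index per pair plus the shared codebook.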

Implications for scaling AI

Effective LLM quantization has wide-ranging effects. By decreasing the size and computational requirements of LLMs, it lets AI applications run more smoothly on a wider range of devices, from smartphones to Internet of Things (IoT) devices. This broadens access to sophisticated AI capabilities and could spur a fresh round of innovation in AI applications across sectors.

Furthermore, running LLMs on edge devices helps address the privacy and latency issues associated with cloud computing, since data can be processed locally rather than transferred to a centralised server.

The ability to scale LLMs efficiently will be essential for the next generation of AI applications as the technology develops. Quantization, especially with methods like Qualcomm AI Research’s vector quantization, may help solve the memory and latency problems of AI on low-power edge devices. With continued research and development, AI looks set to become more accessible, efficient, and capable.

LLM quantization

LLM quantization is a technique for compressing large language models (LLMs) by lowering the precision of their weights. In essence, this means storing the same number of parameters in less memory.

A summary of LLM quantization is provided below:

The benefits:

- Reduced model size: LLMs need less memory and storage space because they use lower-precision data types.

- Faster inference: Smaller models mean faster calculations, so LLMs can run on hardware with less processing power.

- Reduced energy consumption: Smaller models require less power to run, which makes them more energy-efficient.

- Deployment across a wider range of devices: Quantization makes it possible for LLMs to run on resource-constrained hardware such as embedded systems and smartphones.

The trade-offs:

- Accuracy loss: Reducing precision may cause a minor drop in the LLM’s accuracy. The severity of this loss depends on the particular quantization method employed.
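
How much precision is lost depends heavily on the bit width. The sketch below measures the root-mean-square rounding error of simple symmetric quantization at several bit widths. It uses synthetic values rather than weights from a real model, and rounding error on the weights is only a rough proxy for the accuracy loss a model would actually show.

```python
import math


def quantization_rmse(weights, bits):
    """RMS error of symmetric uniform quantization at the given bit width."""
    qmax = 2 ** (bits - 1) - 1
    scale = max(abs(w) for w in weights) / qmax
    squared = 0.0
    for w in weights:
        q = max(-qmax, min(qmax, round(w / scale)))
        squared += (w - q * scale) ** 2
    return math.sqrt(squared / len(weights))


# Deterministic synthetic "weights" (hypothetical, not from a real model).
weights = [math.sin(i * 0.37) * 0.5 for i in range(1000)]
errors = {bits: quantization_rmse(weights, bits) for bits in (8, 4, 3, 2)}
for bits, err in errors.items():
    print(f"{bits}-bit RMS error: {err:.5f}")
```

The error grows quickly as bits are removed, which is why very low bit widths tend to rely on more careful methods than plain rounding.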

How it works:

LLMs commonly store their weights as full-precision (FP32) or half-precision (FP16) floating-point numbers. Quantization converts these weights to lower-precision representations, such as 8-bit integers (INT8). Because fewer bits are needed to store each weight, the model size decreases.

There are two primary approaches to LLM quantization:

- Post-training quantization (PTQ) quantizes an LLM that has already been trained. It is easier to use but can lose more accuracy.

- Quantization-aware training (QAT) takes quantization into account during training. It needs more training data and time than PTQ but can attain higher accuracy.

All things considered, LLM quantization makes large language models more efficient and able to run on a greater variety of devices. When selecting a quantization technique, it is crucial to take the possible loss of accuracy into account.