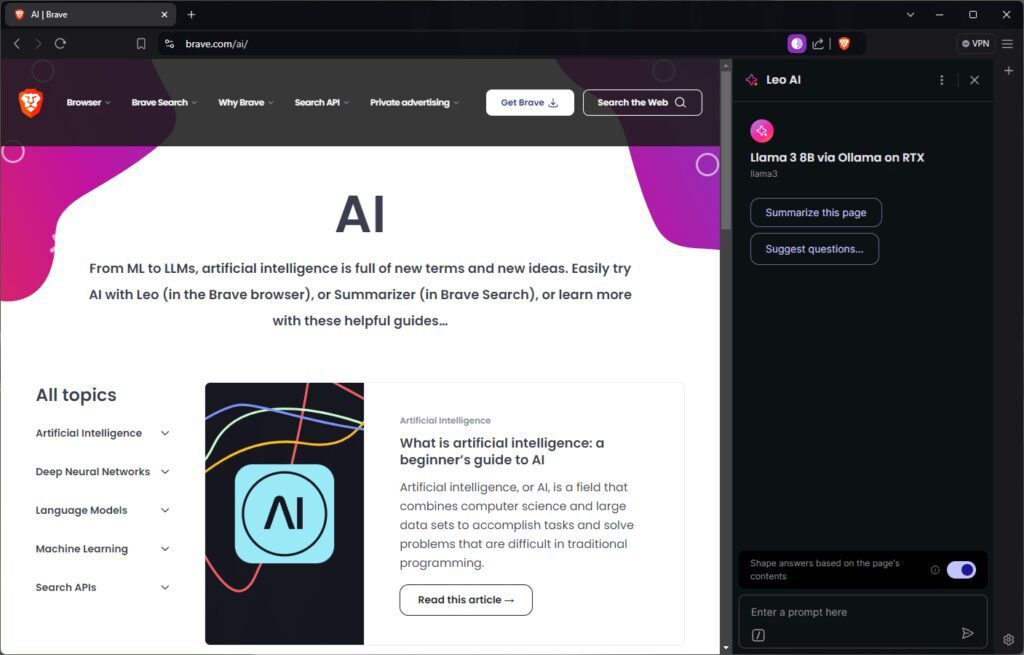

Brave New World: Leo AI and Ollama Bring RTX-Accelerated Local LLMs to Brave Browser Users


Using local models in well-known apps is now simpler than ever with RTX-accelerated community tools.

AI is increasingly being built into apps to enhance user experiences and boost productivity, in everything from productivity tools and software development to gaming and content creation apps.

These productivity gains extend to everyday tasks like web browsing. Brave, a privacy-focused web browser, recently launched Leo AI, a smart AI assistant that helps users summarize articles and videos, surface insights from documents, answer questions, and more.

Behind Brave and other AI-powered tools is a combination of hardware, libraries, and ecosystem software optimized for the unique demands of AI.

The Significance of Software

Whether running locally on a desktop PC or in a data center, NVIDIA GPUs power the world’s AI. They contain Tensor Cores, which are specifically designed to accelerate AI applications like Leo AI through massively parallel number crunching: they process the enormous number of calculations AI requires simultaneously rather than one at a time.

But great hardware only matters if applications can make efficient use of it. The software running on top of GPUs is just as critical to delivering the fastest, most responsive AI experience.

The first layer is the AI inference library, which acts as a translator: it takes requests for common AI tasks and converts them into specific instructions the hardware can execute. Popular inference libraries include NVIDIA TensorRT, Microsoft’s DirectML, and llama.cpp, which Brave and Leo AI use via Ollama.

llama.cpp is an open-source library and framework. Through CUDA, the NVIDIA software application programming interface that lets developers optimize for GeForce RTX and NVIDIA RTX GPUs, it provides Tensor Core acceleration for hundreds of models, including popular large language models (LLMs) such as Gemma, Llama 3, Mistral, and Phi.

On top of the inference library, applications often use a local inference server to simplify integration. The inference server handles tasks like downloading and configuring specific AI models so that the application doesn’t have to.

Ollama is an open-source project that sits on top of llama.cpp and exposes the library’s features to users. It supports an ecosystem of applications that deliver local AI capabilities. NVIDIA optimizes tools like Ollama for its hardware across the entire technology stack, from the hardware and system software to the inference libraries and tools, so that applications can deliver faster, more responsive AI experiences to RTX users.
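As a minimal sketch of what it means for the inference server, rather than the application, to manage models, the Python snippet below asks a running Ollama server which models it has already downloaded, using Ollama’s `GET /api/tags` REST endpoint. It assumes Ollama is listening on its default local address, `http://localhost:11434`; the function names are hypothetical. The JSON parsing is kept separate from the network call so it can be checked without a server.

```python
import json
import urllib.request

# Assumption: Ollama is running on its default local address and port.
OLLAMA_URL = "http://localhost:11434"


def installed_model_names(tags_json: str) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    payload = json.loads(tags_json)
    return [model["name"] for model in payload.get("models", [])]


def fetch_installed_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask the local Ollama server which models it has already downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return installed_model_names(resp.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        print(fetch_installed_models())
    except OSError as err:  # URLError subclasses OSError; raised when no server is running
        print(f"Could not reach Ollama: {err}")
```

The application only sees a list of model names; where the weights live on disk and how they were downloaded stays the server’s concern.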


Local vs Cloud

Brave’s Leo AI can run in the cloud or, via Ollama, locally on a PC.

Running inference on a local model has several advantages. Because prompts are never sent to an external server for processing, the experience stays private and is always available. For example, Brave users can get help with their finances or medical questions without sending any data to the cloud. Running locally also removes the need to pay for unrestricted cloud access. And with Ollama, users can choose from a wider selection of open-source models than most hosted services offer, since those often support only one or two variants of the same AI model.

Users can also work with models that have different specializations, such as bilingual models, compact models, code-generation models, and more.
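The privacy benefit depends on prompts never leaving the machine. One hypothetical safeguard an application could apply before sending a sensitive prompt is to confirm that the configured endpoint points at the loopback interface. This is an illustrative sketch, not something Brave or Ollama is documented to do; `is_local_endpoint` is a made-up helper, and it deliberately treats any hostname other than `localhost` or a loopback IP literal as remote instead of resolving it through DNS.

```python
import ipaddress
from urllib.parse import urlparse


def is_local_endpoint(url: str) -> bool:
    """Return True only if the URL targets localhost or a loopback IP literal."""
    host = urlparse(url).hostname  # lowercased, IPv6 brackets stripped
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Any other hostname would need DNS to resolve; treat it as remote.
        return False


if __name__ == "__main__":
    for url in ("http://localhost:11434/api/generate", "https://api.example.com/v1/chat"):
        print(url, "->", "local" if is_local_endpoint(url) else "remote")
```

Refusing to resolve names keeps the check conservative: an unfamiliar hostname is always assumed to leave the machine.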

RTX enables a fast, responsive experience when running AI locally. Using llama.cpp with the Llama 3 8B model, users can expect responses of up to 149 tokens per second, or roughly 110 words per second. With Brave, Leo AI, and Ollama, this means faster answers to questions, faster content summaries, and more.
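The two throughput figures are consistent with the common rule of thumb of roughly 0.75 English words per token (149 × 0.75 ≈ 112). The sketch below makes that conversion explicit. It also shows how throughput can be measured from Ollama’s own output: an `/api/generate` response reports `eval_count` (tokens generated) and `eval_duration` (in nanoseconds), so tokens per second is `eval_count / eval_duration * 10^9`. The 0.75 ratio is an assumption, and the real value depends on the tokenizer and the text; the function names are hypothetical.

```python
def words_per_second(tokens_per_second: float, words_per_token: float = 0.75) -> float:
    """Approximate word rate from a token rate.

    Assumption: about 0.75 English words per token, a common rule of thumb;
    the real ratio depends on the model's tokenizer and on the text itself.
    """
    return tokens_per_second * words_per_token


def ollama_tokens_per_second(eval_count: int, eval_duration_ns: int) -> float:
    """Generation speed from the eval_count and eval_duration fields of an
    Ollama /api/generate response (eval_duration is reported in nanoseconds)."""
    return eval_count / eval_duration_ns * 1e9


def seconds_for_answer(words: int, tokens_per_second: float) -> float:
    """Rough time to stream an answer of a given length, ignoring prompt processing."""
    return words / words_per_second(tokens_per_second)


if __name__ == "__main__":
    print(f"{words_per_second(149):.2f} words/s")                  # 111.75 words/s
    print(f"{seconds_for_answer(300, 149):.1f} s for 300 words")   # 2.7 s for 300 words
```

At the quoted peak rate, a 300-word summary would stream in under three seconds, not counting the time spent processing the prompt.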


Get Started With Brave, Leo AI and Ollama

Installing Ollama is simple: download the installer from the project website and let it run in the background. From a command prompt, users can then download and install a wide range of supported models and interact with the local model from the command line.
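Besides the interactive CLI (for example, `ollama pull llama3` to download a model and `ollama run llama3` to chat with it in the terminal), the Ollama server exposes a local REST API, which is what applications build on. A minimal standard-library Python sketch, assuming the default port 11434 and a model already pulled under the tag `llama3`; the helper names are hypothetical.

```python
import json
import urllib.request

# Assumptions: Ollama runs at its default address, and a model tagged "llama3"
# has already been pulled (e.g. with `ollama pull llama3`).
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_GENERATE_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "llama3") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(prompt, model), timeout=120) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]


if __name__ == "__main__":
    try:
        print(ask_local_model("Summarize what a local inference server does in one sentence."))
    except OSError as err:  # raised when the server is not running or rejects the request
        print(f"Could not reach Ollama: {err}")
```

Setting `"stream": False` returns the whole answer as a single JSON object; leaving streaming on would instead yield one JSON line per chunk of generated text.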

Once Leo AI is configured to connect to Ollama, it uses the locally hosted LLM to answer prompts and queries. Users can also switch between cloud and local models at any time.
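Ollama also serves an OpenAI-compatible chat endpoint at `/v1/chat/completions`, which is the kind of URL a bring-your-own-model client can be pointed at. The sketch below shows the request such a client would send and how the reply is read, assuming that endpoint and a local `llama3` model. It illustrates the protocol only and is not Brave’s actual implementation; the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: the client is configured with Ollama's OpenAI-compatible endpoint.
CHAT_URL = "http://localhost:11434/v1/chat/completions"


def build_chat_request(question: str, model: str = "llama3") -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the local server."""
    body = {"model": model, "messages": [{"role": "user", "content": question}]}
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_answer(response_json: str) -> str:
    """Pull the assistant's reply out of an OpenAI-style chat completion response."""
    return json.loads(response_json)["choices"][0]["message"]["content"]


if __name__ == "__main__":
    try:
        with urllib.request.urlopen(build_chat_request("What is Brave?"), timeout=120) as resp:
            print(extract_answer(resp.read().decode("utf-8")))
    except OSError as err:  # no local server, or the model is not installed
        print(f"Could not reach Ollama: {err}")
```

Because the request format is the same one cloud chat APIs use, switching between local and cloud models amounts to changing the endpoint URL and model name.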