NVIDIA TensorRT

Thanks to NVIDIA RTX and GeForce RTX technology, the AI PC age has arrived. Along with it comes a new vocabulary that can be difficult to decode when choosing between the many desktop and laptop options, as well as a new way of assessing performance for AI-accelerated tasks. This article is part of the AI Decoded series, which demystifies AI by making the technology more approachable and showcases new RTX PC hardware, software, tools, and accelerations.

While PC gamers readily understand frames per second (FPS) and related statistics, gauging AI performance requires new metrics.

Emerging as the Best

The first baseline is TOPS, or trillions of operations per second. The key word here is trillions: the processing power required for generative AI tasks is truly enormous. Think of TOPS as a raw performance indicator, similar to the horsepower rating of an engine.

Take Microsoft’s recently unveiled Copilot+ PC series, for instance, which includes neural processing units (NPUs) capable of up to 40 TOPS. For many simple AI-assisted tasks, such as asking a local chatbot where yesterday’s notes are, 40 TOPS is sufficient.

However, many generative AI tasks are more demanding. NVIDIA RTX and GeForce RTX GPUs deliver unprecedented performance across all generative tasks; the GeForce RTX 4090 GPU offers more than 1,300 TOPS. This is the kind of processing power needed for AI-assisted digital content creation, AI super resolution in PC gaming, image generation from text or video, local large language model (LLM) querying, and other tasks.

Put in Tokens to Start Playing

TOPS is only the beginning of the story. LLM performance is gauged by the number of tokens the model generates.

Tokens are the output of an LLM. A token can be a word in a sentence, or an even smaller fragment such as punctuation or whitespace. Performance for AI-accelerated tasks can be measured in “tokens per second.”
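As a rough illustration of the metric, tokens per second is simply the number of tokens generated divided by the elapsed wall-clock time. The sketch below is not taken from any NVIDIA tool; the function names and the `fake_generate` stand-in are hypothetical and exist only to show the arithmetic.

```python
import time
from typing import Callable, List


def tokens_per_second(num_tokens: int, elapsed_seconds: float) -> float:
    """Throughput: tokens produced divided by wall-clock seconds."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return num_tokens / elapsed_seconds


def measure(generate: Callable[[str], List[str]], prompt: str) -> float:
    """Time a single call to a generator and return its tokens per second."""
    start = time.perf_counter()
    tokens = generate(prompt)
    elapsed = time.perf_counter() - start
    return tokens_per_second(len(tokens), elapsed)


def fake_generate(prompt: str) -> List[str]:
    # Hypothetical stand-in for a real model: it just splits the prompt
    # on whitespace after a short artificial delay.
    time.sleep(0.01)
    return prompt.split()


if __name__ == "__main__":
    # 120 tokens generated in 2 seconds is 60 tokens per second.
    print(tokens_per_second(120, 2.0))
    print(measure(fake_generate, "where are the notes from yesterday"))
```

In a real measurement, `fake_generate` would be replaced by a call into whichever inference engine is being tested, and the result would typically be averaged over several runs.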

Batch size, or the number of inputs processed concurrently in a single inference pass, is another crucial consideration. Because an LLM will sit at the core of many modern AI systems, the ability to handle multiple inputs (e.g., from a single application or across multiple apps) will be a key differentiator. Larger batch sizes improve performance for concurrent inputs, but they also demand more memory, particularly when paired with larger models.
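The article does not spell out where the extra memory goes. One major contributor in transformer-based LLMs is the key-value cache, which grows linearly with batch size. The sketch below estimates that cache alone and ignores model weights and activations; the model dimensions are hypothetical values roughly in the range of a 7-billion-parameter model, not figures from the article.

```python
def kv_cache_bytes(
    batch_size: int,
    seq_len: int,
    num_layers: int,
    num_kv_heads: int,
    head_dim: int,
    bytes_per_value: int = 2,  # 2 bytes per value assumes FP16 storage
) -> int:
    """Estimate key-value cache size in bytes.

    The leading factor of 2 accounts for storing both keys and values
    for every layer, head, and token position in the batch.
    """
    return (
        2
        * num_layers
        * num_kv_heads
        * head_dim
        * seq_len
        * batch_size
        * bytes_per_value
    )


if __name__ == "__main__":
    GIB = 1024 ** 3
    # Hypothetical model shape: 32 layers, 32 KV heads, head dimension 128,
    # with a 4,096-token context.
    for batch in (1, 4, 8):
        size = kv_cache_bytes(batch, 4096, 32, 32, 128)
        print(f"batch {batch}: {size / GIB:.1f} GiB")
    # batch 1: 2.0 GiB
    # batch 4: 8.0 GiB
    # batch 8: 16.0 GiB
```

Under these assumptions, a batch of eight already needs 16 GiB for the cache on top of the model weights, which is why VRAM capacity limits how far batch size can be pushed.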

NVIDIA TensorRT-LLM

Because of their massive amounts of dedicated video random access memory (VRAM), Tensor Cores, and TensorRT-LLM software, RTX GPUs are incredibly well-suited for LLMs.

GeForce RTX GPUs offer up to 24GB of high-speed VRAM, and NVIDIA RTX GPUs up to 48GB, allowing for larger models and greater batch sizes. RTX GPUs also benefit from Tensor Cores, which are specialised AI accelerators that significantly speed up the computationally demanding operations required for generative AI and deep learning models. Applications can readily tap that maximum performance through the NVIDIA TensorRT software development kit (SDK), which enables the highest-performance generative AI on the more than 100 million Windows PCs and workstations powered by RTX GPUs.

Thanks to the combination of memory, specialised AI accelerators, and optimised software, RTX GPUs achieve enormous throughput gains, particularly as batch sizes increase.

Text to Image More Quickly Than Before

Performance can also be assessed by measuring the speed at which images are generated. One of the simplest ways to do so is with Stable Diffusion, a popular image-based AI model that lets users quickly turn text descriptions into intricate visual representations.

Users may easily build and refine images from text prompts to get the desired result with Stable Diffusion. These outcomes can be produced more quickly when an RTX GPU is used instead of a CPU or NPU to process the AI model.

When utilising the TensorRT extension for the well-liked Automatic1111 interface, that performance increases even further. With the SDXL Base checkpoint, RTX users can create images from prompts up to two times faster, greatly simplifying Stable Diffusion operations.

TensorRT Acceleration

TensorRT acceleration was integrated into ComfyUI, a popular Stable Diffusion user interface, last week. RTX users can now create images from prompts up to 60% faster, and can even use TensorRT to convert these images into videos up to 70% faster using Stable Video Diffusion.

The new UL Procyon AI Image Generation benchmark tests TensorRT acceleration and offers 50% faster speeds on a GeForce RTX 4080 SUPER GPU than the quickest non-TensorRT implementation.

Stable Diffusion 3, Stability AI’s much-awaited text-to-image model, will soon receive TensorRT acceleration, which will increase performance by 50%. Even more acceleration is possible thanks to the new TensorRT-Model Optimizer, which yields a 70% speedup over the non-TensorRT approach along with a 50% decrease in memory use.

Naturally, the real test is the practical task of iterating on an initial prompt. By fine-tuning prompts on RTX GPUs, users can refine image generation much more quickly: it takes seconds, compared with minutes on a MacBook Pro M3 Max. When running locally on an RTX-powered PC or workstation, users also benefit from both speed and security, with everything remaining private.

The Results Are Available and Can Be Shared

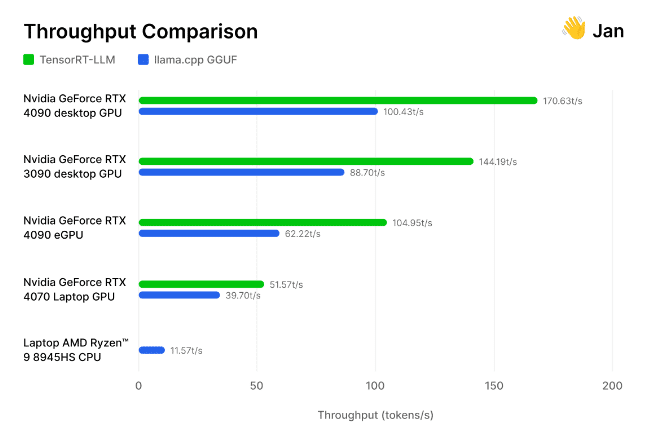

Recently, the open-source Jan.ai team of engineers and AI researchers integrated TensorRT-LLM into their local chatbot app, then put these optimisations to the test on their own system.

TensorRT-LLM

The researchers tested TensorRT-LLM’s implementation against the open-source llama.cpp inference engine across a range of GPUs and CPUs used by the community. They found that TensorRT is “30-70% faster than llama.cpp on the same hardware,” as well as more efficient on consecutive processing runs. The team also shared its methodology, inviting others to measure generative AI performance for themselves.