Generative AI, notably Large Language Models (LLMs), is ushering in a major technological shift. These models are transforming the way we interact with information.

LLMs offer companies great potential for both consuming and creating content. They can automate content development, improve quality, diversify services, and personalize material. Now is the time to explore their transformative influence and build a plan that uncovers creative ways to boost your company’s potential.

LLMs are already used in many fields. Microsoft 365 Copilot, a recent innovation, simplifies interactions with data to boost business efficiency. It summarizes email threads in Microsoft Outlook, highlights key discussion points and suggests action items in Microsoft Teams, and lets users automate tasks and build chatbots in Microsoft Power Platform.

According to GitHub data, 88% of developers reported greater productivity, and 73% reported spending less time searching for information or examples.

Changes in search

Remember when we entered phrases into search boxes and clicked on multiple links to get information?

Modern search engines such as Bing are changing this. Instead of returning a long list of links, they interpret your query, draw on numerous online sources, and present the answer clearly and concisely, with references.

Online search is becoming more user-friendly and useful. We are moving from countless links to direct answers, and our search habits have changed accordingly.

Imagine how organizations would be transformed if they could search, browse, and analyze their internal data just as easily and efficiently. This new paradigm would let employees quickly tap company knowledge and put enterprise data to work. Retrieval Augmented Generation (RAG) makes this streamlined experience possible; on Azure, it can be built by combining Azure Cognitive Search with Azure OpenAI Service.

Augmenting LLMs with RAG: Bridging information access gaps

RAG is a natural language processing approach that combines large pre-trained language models with external retrieval or search mechanisms. Adding external knowledge to the generation process lets models draw on information beyond their original training data.

Here is how RAG works, step by step:

- Input: The system receives a query.

- Retrieval: Before responding, the RAG system searches a corpus for relevant documents or passages. The corpus can include any texts that might relate to the input.

- Augmentation and generation: The retrieved documents are added to the original input as context. This augmented input is passed to the language model, which generates the response.
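To make the three steps concrete, here is a minimal Python sketch under simplifying assumptions: a hypothetical three-document in-memory corpus stands in for a real search index, retrieval is plain keyword-overlap scoring rather than the ranking a production search service would use, and `generate` is a hypothetical placeholder where a call to a hosted language model would go. The names `retrieve`, `augment`, and `generate` are illustrative only.

```python
import re

# Hypothetical in-memory corpus standing in for a real search index.
CORPUS = {
    "fy-summary": "Contoso annual revenue grew, driven by service revenue.",
    "expenses": "Significant expenses included cloud hosting and salaries.",
    "hr-policy": "Employees accrue vacation days monthly.",
}

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[str]:
    """Retrieval: score each document by how many of its tokens appear in the query."""
    q_terms = set(tokenize(query))
    scores = {
        doc_id: sum(1 for t in tokenize(text) if t in q_terms)
        for doc_id, text in corpus.items()
    }
    ranked = sorted(scores, key=scores.get, reverse=True)
    # Keep the top-k documents, dropping any with no overlap at all.
    return [d for d in ranked[:k] if scores[d] > 0]

def augment(query: str, doc_ids: list[str], corpus: dict[str, str]) -> str:
    """Augmentation: prepend the retrieved passages to the query as context."""
    context = "\n".join(f"[{d}] {corpus[d]}" for d in doc_ids)
    return (
        "Answer using only the sources below and cite them by ID.\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )

def generate(prompt: str) -> str:
    """Generation: hypothetical placeholder for a real LLM call."""
    return f"<LLM response to a {len(prompt)}-character prompt>"

query = "What drove Contoso revenue?"  # Input
hits = retrieve(query, CORPUS)
prompt = augment(query, hits, CORPUS)
print(hits)
print(generate(prompt))
```

Running it retrieves only the `fy-summary` passage, so the prompt sent to the model carries the relevant context and leaves out the unrelated HR policy. Asking the model to cite source IDs is one common way to get answers that come with references.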

RAG can access and use up-to-date internal and external data without extensive retraining. A key benefit is that it can draw on the latest information to produce more accurate, better-informed, and contextually appropriate responses.
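The ability to use updated data without retraining comes from the retrieval side: new information only has to be indexed, not learned by the model. The toy inverted index below (a hypothetical `InvertedIndex` class, not any product's API) illustrates this in Python; a real search service adds ranking, text analysis, and scale on top of the same basic idea.

```python
import re
from collections import defaultdict

class InvertedIndex:
    """Toy inverted index: maps each term to the IDs of documents containing it."""

    def __init__(self) -> None:
        self.postings: dict[str, set[str]] = defaultdict(set)

    @staticmethod
    def _terms(text: str) -> list[str]:
        return re.findall(r"[a-z0-9]+", text.lower())

    def add(self, doc_id: str, text: str) -> None:
        # Indexing a new document only updates postings lists; no model is retrained.
        for term in self._terms(text):
            self.postings[term].add(doc_id)

    def search(self, query: str) -> set[str]:
        # Return the documents that contain every query term (boolean AND).
        terms = self._terms(query)
        if not terms:
            return set()
        results = set(self.postings.get(terms[0], set()))
        for term in terms[1:]:
            results &= self.postings.get(term, set())
        return results

index = InvertedIndex()
index.add("q3-report", "Q3 revenue rose on strong service sales.")
print(index.search("Q4 revenue"))  # no Q4 document indexed yet
index.add("q4-report", "Q4 revenue beat forecasts.")
print(index.search("Q4 revenue"))
```

The first search finds nothing because no Q4 document has been indexed; once `q4-report` is added, the same query returns it immediately, with no change to any model.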

RAG in action: Revolutionizing business productivity

RAG can boost employee productivity in several scenarios:

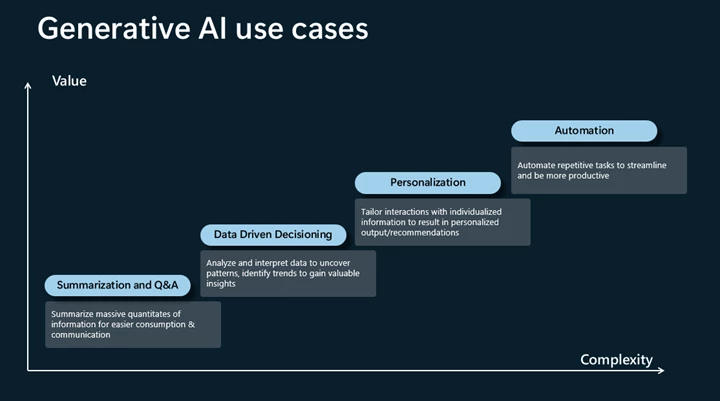

Summarization and Q&A: Condense large amounts of information so it is easier to consume and share.

Data-driven decision-making: Find patterns and trends in data to surface insights.

Personalization: Tailor interactions using individual data to deliver customized recommendations.

Automation: Automate repetitive tasks to boost productivity.

As AI evolves, it is being adopted in more and more sectors.

Applying RAG to financial analysis

Consider financial data analysis at a large organization, where accuracy, speed, and strategic insight are crucial. Let’s use Contoso, a fictional corporation, to show how each RAG use case can improve financial analysis.

1. Summarization and Q&A

Scenario: Contoso has closed its fiscal year and produced a financial report that runs to hundreds of pages. The board wants a summary of the report focused on key performance indicators (KPIs).

Sample prompt: “Summarize the main financial outcomes, revenue streams, and significant expenses in Contoso’s annual financial report.”

Result: The model summarizes Contoso’s annual revenue, main revenue sources, significant expenses, profit margins, and other key financial data.
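The source does not say how such a long report is prepared for retrieval. One common approach, assumed here, is to split it into overlapping chunks so that the retrieval step returns focused passages small enough to fit in the model's context window. A minimal Python sketch, with a hypothetical `chunk_words` helper that splits on whitespace (real pipelines often split by tokens, sentences, or document sections instead):

```python
def chunk_words(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into chunks of `size` words; consecutive chunks share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks

# Hypothetical stand-in for a long report: ten numbered "words".
report = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_words(report, size=4, overlap=1):
    print(chunk)
```

The overlap repeats a few words at each boundary so that context spanning two chunks is less likely to be cut off; the `size` and `overlap` values are tuning choices, not fixed rules.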

2. Data-driven decision-making

Scenario: As the new fiscal year begins, Contoso wants to assess its revenue streams and compare them with those of its main competitors to strengthen its market position.

Sample prompt: “Analyze Contoso’s revenue breakdown for the past year and compare it with the revenue structures of its three main competitors to identify market gaps or opportunities.”

Result: The model shows that Contoso leads in service revenue but trails in software licensing, where competitors have grown.

3. Personalization

Scenario: Contoso wants to send each investor a personalized report showing how the company’s performance has affected their investment.

Sample prompt: “Using the annual financial data, create a personalized financial impact report for each investor, detailing how Contoso’s performance has affected the value of their investment.”

Result: The model generates investor-specific reports. For example, an investor with significant exposure to service revenue streams would see how the company’s lead in that area has boosted their returns.

4. Automation

Scenario: Contoso receives financial statements and reports from each of its departments every quarter. Manually aggregating them into a company-wide view would be extremely time-consuming.

Sample prompt: “Automatically collate and categorize the financial data from all of Contoso’s departmental reports for Q1 into overarching themes such as ‘Revenue’, ‘Operational Costs’, ‘Marketing Expenses’, and ‘R&D Investments’.”

Result: The model aggregates the data to give Contoso a consolidated picture of its quarterly financial health, highlighting strengths and weaknesses.
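In this scenario the model does the categorizing. Once each departmental line item carries a theme label, however it was assigned, the consolidated view is a group-and-sum over those labels. The Python sketch below assumes that labeling has already happened; the `LineItem` type, the department names, and the figures are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

# The themes named in the sample prompt.
THEMES = ("Revenue", "Operational Costs", "Marketing Expenses", "R&D Investments")

@dataclass
class LineItem:
    department: str
    theme: str      # assumed to be assigned upstream, e.g. by the LLM categorization step
    amount: float   # hypothetical figures, in thousands of USD

def consolidate(items: list[LineItem]) -> dict[str, float]:
    """Sum departmental line items per theme, rejecting labels outside THEMES."""
    totals: dict[str, float] = defaultdict(float)
    for item in items:
        if item.theme not in THEMES:
            raise ValueError(f"unexpected theme {item.theme!r} from {item.department}")
        totals[item.theme] += item.amount
    # Report every theme in a fixed order, including any with no items (0.0).
    return {theme: totals[theme] for theme in THEMES}

q1_items = [
    LineItem("Sales", "Revenue", 1200.0),
    LineItem("Services", "Revenue", 800.0),
    LineItem("Sales", "Marketing Expenses", 150.0),
    LineItem("Engineering", "R&D Investments", 400.0),
    LineItem("Facilities", "Operational Costs", 300.0),
]
print(consolidate(q1_items))
```

Checking each label against the fixed list of themes is a cheap safeguard: if a model returns a category outside the requested set, the sketch raises an error instead of silently creating a new bucket.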

LLMs: Changing business content creation

With RAG solutions, businesses can enhance employee productivity, streamline operations, and make data-driven decisions. As these technologies are adopted and refined, the range of applications will keep growing.
