MLPerf 4.1 Benchmarks: Google Trillium Boosts AI Training with 1.8x Performance-Per-Dollar

Rapidly evolving generative AI models are placing unprecedented demands on the performance and efficiency of hardware accelerators. To meet the needs of next-generation models, Google introduced Trillium, its sixth-generation Tensor Processing Unit (TPU). From the chip to the system to its deployment in Google data centers, Trillium is purpose-built for performance at scale, in order to support training at very large scale.

Today, Google presents its first MLPerf training benchmark results for Trillium. In the MLPerf 4.1 training benchmarks, Trillium delivers up to 1.8x better performance-per-dollar than the previous-generation Cloud TPU v5p, along with 99% scaling efficiency (throughput).

This post gives a concise performance analysis of Trillium and shows why it is the most performant and cost-efficient TPU training system to date. It begins with a brief review of traditional scaling efficiency, a common system comparison metric. It then introduces convergence scaling efficiency as an important additional metric, compares Google Trillium with Cloud TPU v5p on both metrics as well as on performance per dollar, and closes with guidance for making informed cloud accelerator choices.

Traditional performance metrics

Accelerator systems can be evaluated and compared in several ways, including peak throughput, effective throughput, and throughput scaling efficiency. Each is a useful indicator, but none of them accounts for convergence time.

Hardware specifications and peak performance

In the past, comparisons focused mainly on hardware specifications such as peak throughput, memory bandwidth, and network connectivity. These peak values set theoretical limits, but they are poor predictors of real-world performance, which depends heavily on architectural design and software implementation. Because modern machine learning workloads typically span hundreds or thousands of accelerators, the most important metric is the effective throughput of a system that is sized appropriately for the given workload.

Utilization performance

System performance can be quantified with utilization metrics that compare achieved throughput to peak capacity, such as effective model FLOPS utilization (EMFU) and memory bandwidth utilization (MBU). However, these hardware efficiency indicators do not correlate directly with business-value metrics such as training time or model quality.
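As a rough illustration of how such a utilization ratio is formed, the sketch below divides an achieved rate by a peak rate. It is a minimal sketch under stated assumptions, not the definition MLPerf or Google uses for EMFU: the function names are hypothetical, the "6 FLOPs per parameter per token" rule is a common approximation for dense transformer training, and every number in the example is made up.

```python
def utilization(achieved: float, peak: float) -> float:
    """Return achieved / peak as a fraction (e.g. 0.5 means 50% utilization)."""
    if peak <= 0:
        raise ValueError("peak must be positive")
    return achieved / peak


def approx_training_flops_per_second(tokens_per_second: float, n_params: float) -> float:
    """Estimate training FLOPs per second for a dense transformer.

    Assumption: roughly 6 FLOPs per parameter per token
    (forward plus backward pass). This is an approximation only.
    """
    return 6.0 * n_params * tokens_per_second


# Hypothetical per-chip numbers, chosen only to show the arithmetic.
achieved = approx_training_flops_per_second(tokens_per_second=400.0, n_params=175e9)
hypothetical_peak = 900e12  # made-up peak FLOPs per second for one chip
print(f"model FLOPS utilization: {utilization(achieved, hypothetical_peak):.1%}")
```

The same `utilization` helper applies to memory bandwidth: pass achieved and peak bytes per second instead of FLOPs per second.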

Efficiency scaling and trade-offs

A system’s scalability is assessed through both strong scaling (performance improvement as system size grows for a fixed workload) and weak scaling (efficiency when workload and system size increase proportionately). Both are useful indicators, but the ultimate objective is to produce high-quality models as quickly as possible, which sometimes justifies sacrificing scaling efficiency in favor of faster training or better model convergence.
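The two notions of scaling can be written as simple ratios. The following is a minimal sketch using commonly used textbook-style definitions; the function names and the example numbers are hypothetical and are not taken from the benchmark submission.

```python
def weak_scaling_efficiency(base_chips: int, base_throughput: float,
                            scaled_chips: int, scaled_throughput: float) -> float:
    """Throughput gain divided by system-size gain.

    Assumes the workload (e.g. global batch size) grows in
    proportion to the number of chips.
    """
    throughput_gain = scaled_throughput / base_throughput
    size_gain = scaled_chips / base_chips
    return throughput_gain / size_gain


def strong_scaling_efficiency(base_chips: int, base_time: float,
                              scaled_chips: int, scaled_time: float) -> float:
    """Speedup on a fixed workload divided by system-size gain."""
    speedup = base_time / scaled_time
    size_gain = scaled_chips / base_chips
    return speedup / size_gain


# Illustrative numbers only: doubling the chips yields 1.9x the throughput.
print(f"weak scaling:   {weak_scaling_efficiency(256, 100.0, 512, 190.0):.2f}")
# Illustrative numbers only: doubling the chips cuts a fixed job from 10 h to 6 h.
print(f"strong scaling: {strong_scaling_efficiency(256, 10.0, 512, 6.0):.2f}")
```

A value of 1.0 in either function means perfectly linear scaling; values below 1.0 indicate overhead from communication or reduced per-chip efficiency.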

The need for convergence scaling efficiency

While hardware utilization and scaling metrics offer valuable system insights, convergence scaling efficiency focuses on the core objective of training: reaching model convergence efficiently. Convergence is the point at which a model’s output stops improving and the error rate stabilizes. Convergence scaling efficiency measures how effectively additional computing resources shorten the time needed to train to completion.

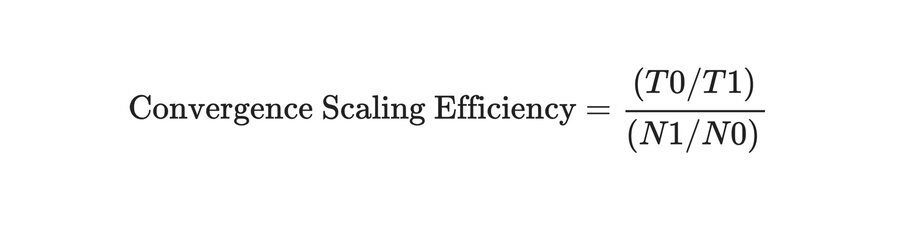

Convergence scaling efficiency (CSE) is defined from two key measurements: the base case, in which a cluster of N₀ accelerators converges in time T₀, and the scaled case, in which N₁ accelerators converge in time T₁. It is the ratio of the speedup in convergence time to the increase in cluster size:

$$\text{CSE} = \frac{T_0 / T_1}{N_1 / N_0}$$

When time-to-solution improves by the same ratio as the cluster size grows, the convergence scaling efficiency is 1. A convergence scaling efficiency as close to 1 as possible is therefore desirable.
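The definition above can be sketched in a few lines of code. This is a minimal illustration, assuming convergence scaling efficiency is the convergence speedup divided by the growth in cluster size; the function name is hypothetical. The example call uses the ratios reported in the results section of this post (a cluster three times larger that converges 2.4 times faster), expressed in normalized units rather than real chip counts or times.

```python
def convergence_scaling_efficiency(n0: float, t0: float, n1: float, t1: float) -> float:
    """Speedup in time-to-convergence divided by the increase in cluster size.

    n0, t0: accelerator count and convergence time of the base case.
    n1, t1: accelerator count and convergence time of the scaled case.
    """
    if min(n0, t0, n1, t1) <= 0:
        raise ValueError("all inputs must be positive")
    speedup = t0 / t1           # how much faster the scaled case converges
    size_increase = n1 / n0     # how much larger the scaled cluster is
    return speedup / size_increase


# Normalized units: base cluster = 1, scaled cluster = 3,
# base time = 2.4, scaled time = 1.0 (i.e. 2.4x faster).
cse = convergence_scaling_efficiency(n0=1, t0=2.4, n1=3, t1=1.0)
print(f"CSE = {cse:.2f}")
```

Because only ratios matter, the function gives the same result whether time is measured in minutes or hours, as long as both cases use the same unit.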

Let’s now apply these concepts to the MLPerf submission for GPT3-175b training on Google Trillium and Cloud TPU v5p.

Google Trillium’s performance

Google submitted GPT3-175b training results for four distinct Google Trillium configurations and three distinct Cloud TPU v5p configurations. In the analysis that follows, the results are grouped for comparison by cluster sizes with the same total peak FLOPS. For instance, 4xTrillium-256 is compared to the Cloud TPU v5p-4096 configuration, 8xTrillium-256 to the Cloud TPU v5p-8192 configuration, and so forth.

All results in this analysis are based on MaxText, Google’s high-performance reference implementation for Cloud TPUs and GPUs.

Weak scaling efficiency

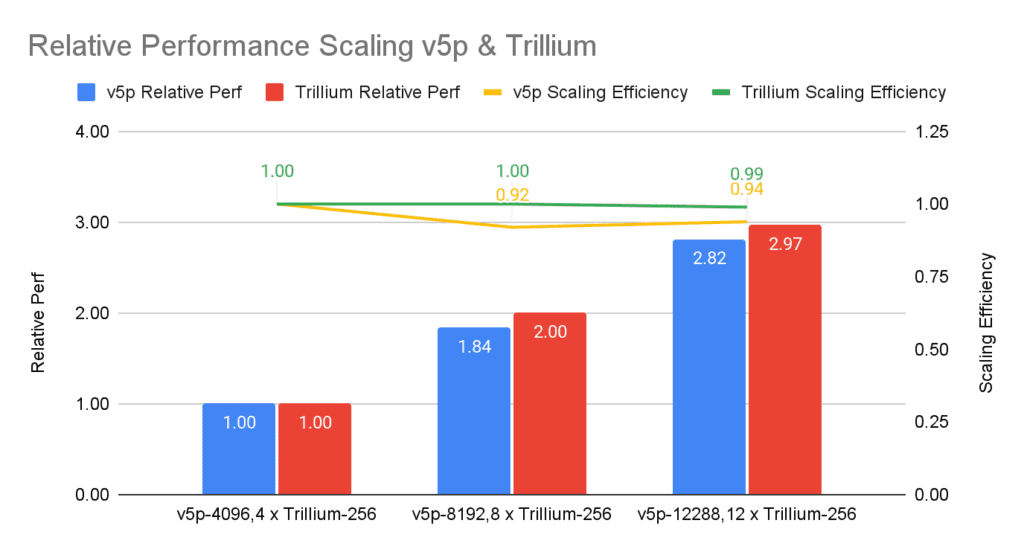

For growing cluster sizes with proportionately larger batch sizes, both Trillium and TPU v5p deliver near-linear scaling efficiency.

The figure above shows relative throughput scaling from the base configuration as cluster sizes grow. Google Trillium achieves 99% scaling efficiency even when operating across data-center networks using Cloud TPU multislice technology, surpassing the 94% scaling efficiency of a Cloud TPU v5p cluster within a single ICI domain. These comparisons use a base configuration of 1024 chips (4x Trillium-256 pods), which provides a consistent baseline with the smallest v5p submission (v5p-4096; 2048 chips). Even when measured against its smallest submitted configuration, two Trillium-256 pods, Trillium retains a robust 97.6% scaling efficiency.

Convergence scaling efficiency

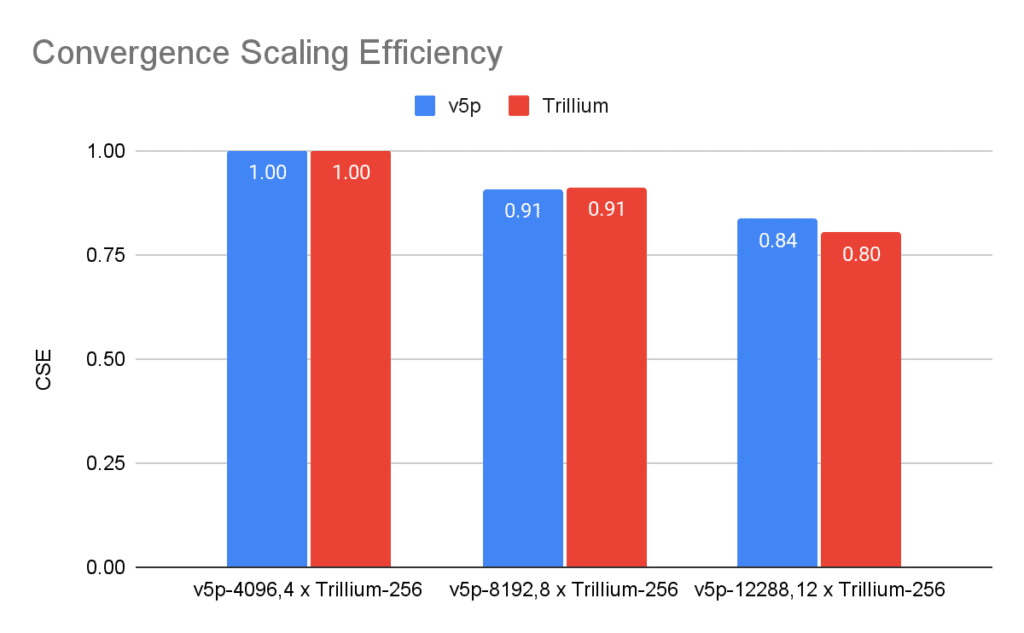

As previously mentioned, weak scaling is useful but not sufficient as an indicator of value, whereas convergence scaling efficiency takes time-to-solution into account.

For the maximum cluster size, Google Trillium and Cloud TPU v5p show comparable convergence scaling efficiency. In this case the CSE is 0.8: the cluster for the rightmost configuration was three times larger than the base configuration, and its time to convergence was 2.4 times faster than that of the base configuration (2.4/3 = 0.8).

Although Google Trillium and TPU v5p have similar convergence scaling efficiency, Trillium excels at reaching convergence at a lower cost, which leads to the final criterion.

Cost-to-train

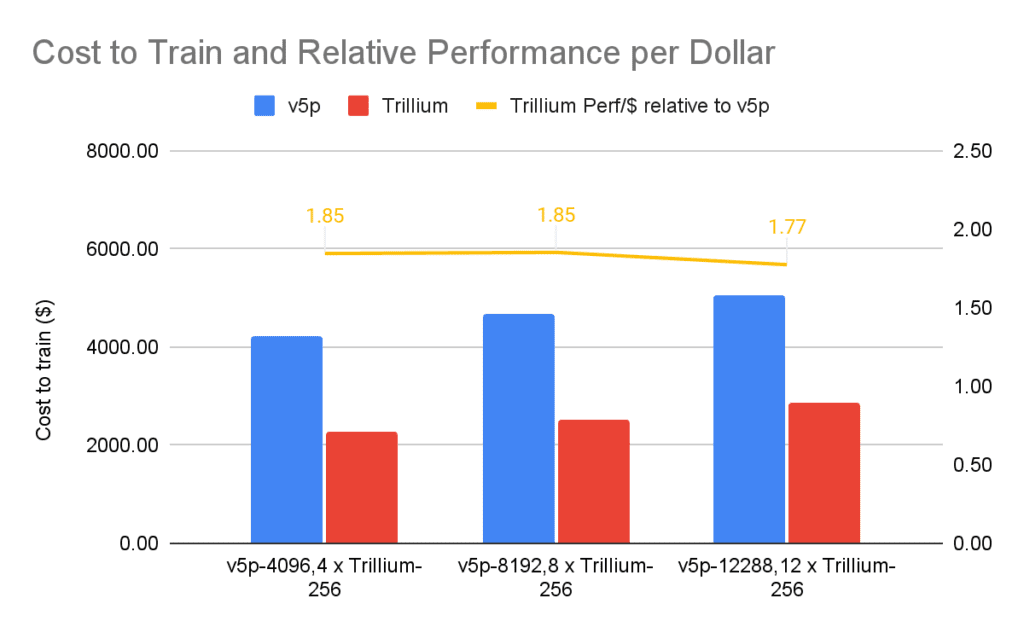

Weak scaling efficiency and convergence scaling efficiency describe the scaling characteristics of a system, but the analysis so far has not examined the most important metric: the cost of training.

Google Trillium converges to the same validation accuracy as TPU v5p while lowering the cost-to-train by up to 1.8x (about 45% lower).
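The relationship between a performance-per-dollar ratio and a percentage cost reduction can be checked with simple arithmetic. The sketch below is illustrative only: the function names are hypothetical, it assumes cost-to-train is simply chips multiplied by hours multiplied by a per-chip-hour price, and it uses no actual Cloud TPU pricing.

```python
def cost_to_train(num_chips: int, hours_to_converge: float,
                  price_per_chip_hour: float) -> float:
    """Total cost of one training run to convergence (assumed linear pricing)."""
    return num_chips * hours_to_converge * price_per_chip_hour


def cost_reduction_from_perf_per_dollar(ratio: float) -> float:
    """Fractional cost saving implied by a performance-per-dollar ratio.

    If system A delivers `ratio` times the performance per dollar of
    system B, the same result costs 1/ratio as much on A, so the
    saving is 1 - 1/ratio.
    """
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return 1.0 - 1.0 / ratio


saving = cost_reduction_from_perf_per_dollar(1.8)
print(f"1.8x performance-per-dollar implies about {saving:.0%} lower cost")
```

A 1.8x ratio works out to roughly a 44% saving, which is consistent with the approximately 45% figure quoted for the best case.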

Making informed Cloud Accelerator choices

This post examined the complexity of comparing accelerator systems, with a focus on looking beyond simple metrics to determine actual performance and efficiency. Peak performance figures offer a starting point, but they often fail to predict practical utility. Measures such as Effective Model FLOPS Utilization (EMFU) and Memory Bandwidth Utilization (MBU) instead provide more meaningful insight into an accelerator’s performance.

The post also emphasized the importance of scaling characteristics, both strong and weak scaling, in assessing how well systems perform as workloads and resources grow. Convergence scaling efficiency, however, is the most objective metric identified here, because it ensures that systems are compared on their capacity to reach the same outcome rather than merely on their speed.

Applying these measures to its benchmark submission with GPT3-175b training, Google Cloud demonstrated that Google Trillium achieves convergence scaling efficiency comparable to Cloud TPU v5p while delivering up to 1.8x better performance-per-dollar, which lowers the cost-to-train. These findings underline how important it is to assess accelerator systems using a variety of performance and efficiency criteria.