Organizations frequently encounter operational difficulties when they attempt to use generative AI solutions at scale. GenOps, also known as MLOps for Gen AI, tackles these issues.

GenOps combines DevOps principles with machine learning workflows to deploy, monitor, and manage Gen AI models in production. It helps ensure that Gen AI systems are reliable, scalable, and continuously improving.

Why is MLOps challenging for Gen AI?

Gen AI models pose distinct challenges that make traditional MLOps practices insufficient:

- Scale: Models with billions of parameters require specialized infrastructure.

- Compute: Training and inference are highly resource-intensive.

- Safety: Strong safeguards against harmful content are required.

- Rapid evolution: Models need frequent updates to keep pace with new research.

- Unpredictability: Non-deterministic outputs make testing and validation more difficult.

In this blog, we’ll look at ways to extend and adapt MLOps principles to meet the specific needs of Gen AI.

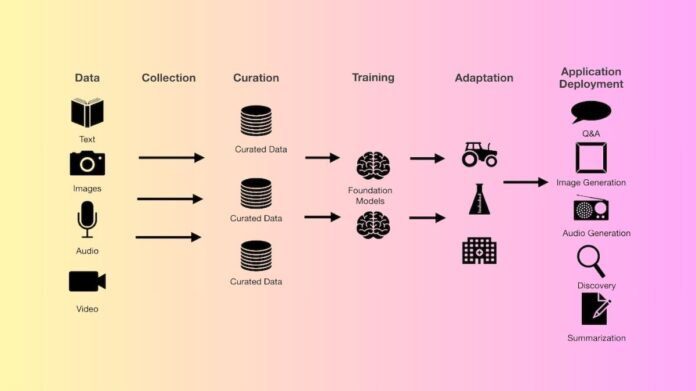

Key capabilities of GenOps

Below are the main components of GenOps for trained and optimized models:

- Gen AI exploration and prototyping: Experiment and build prototypes with open-weight models such as Gemma 2 and PaliGemma, and with enterprise models such as Gemini and Imagen.

- Prompts: Prompt-related activities include:

  - Prompt engineering: Design and refine prompts so that Gen AI models produce the desired outputs.

  - Prompt versioning: Manage, track, and control changes to prompts over time.

  - Prompt enhancement: Use LLMs to generate improved prompts that optimize output for a specific task.

- Evaluation: Assess the Gen AI model’s responses on specific tasks using metrics or feedback.

- Optimization: Apply techniques such as quantization and distillation to make models more efficient to deploy.

- Safety: Implement guardrails and filters. Models such as Gemini include built-in safety filters that prevent the model from returning harmful responses.

- Fine-tuning: Adapt pre-trained models to specific domains or tasks by further tuning them on specialized datasets.

- Version control: Manage multiple versions of Gen AI models, prompts, and datasets.

- Deployment: Serve Gen AI models with scalable, containerized, and integrated deployment.

- Monitoring: Track model performance, output quality, latency, and resource usage in real time.

- Security and governance: Protect models and data from unauthorized access and attacks, and ensure compliance with rules and regulations.
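Quantization, listed under Optimization, stores weights at lower numeric precision to reduce memory and serving cost. The sketch below illustrates the idea with symmetric per-tensor int8 quantization in plain Python, assuming a single scale for the whole tensor. It is not how any particular Google Cloud or serving toolchain implements quantization; real tools typically use per-channel scales, calibration data, and optimized kernels.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: each weight w is stored as q with w ≈ scale * q."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the symmetric int8 range [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map int8 values back to approximate floating-point weights."""
    return [scale * v for v in q]

weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
# Per-weight rounding error is bounded by scale / 2.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each weight now needs one byte instead of four (for float32), at the cost of a rounding error of at most half a quantization step.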

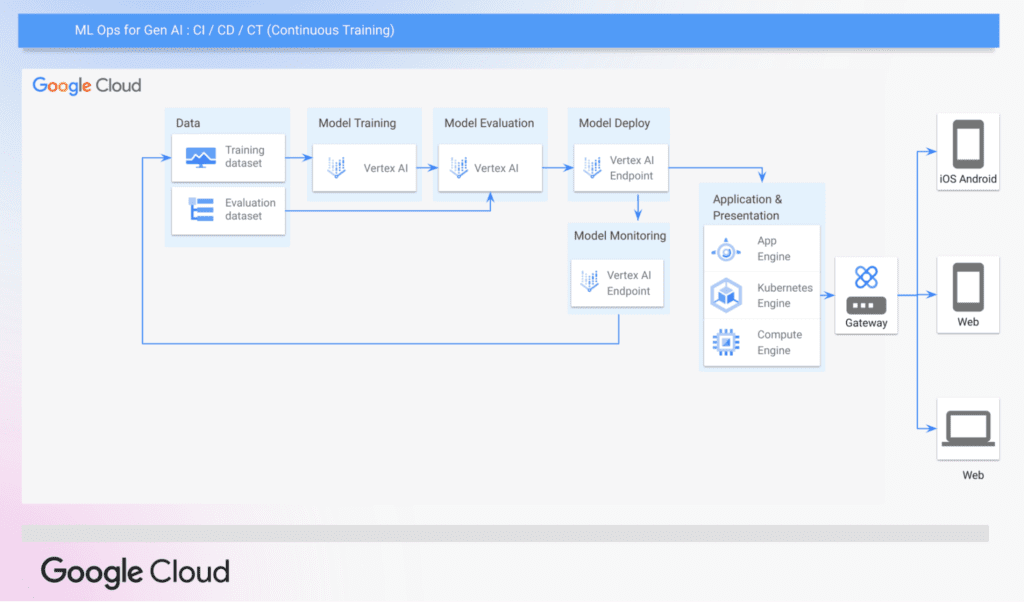

Now that we’ve covered the essential elements of GenOps, let’s see how the traditional MLOps pipeline in Vertex AI can be extended to support GenOps. This MLOps pipeline automates the deployment of ML models by combining continuous integration, continuous deployment, and continuous training (CI/CD/CT).
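One way a GenOps pipeline could extend the continuous deployment step is with a quality gate that promotes a new model or prompt version only when its evaluation results do not regress. The following is a minimal sketch, assuming higher is better for every gated metric; the `passes_quality_gate` helper, the metric names, and the values are hypothetical, and in practice such a check would run as one step after evaluation in the pipeline.

```python
from typing import Dict

def passes_quality_gate(candidate: Dict[str, float],
                        baseline: Dict[str, float],
                        min_gain: Dict[str, float]) -> bool:
    """Return True only if, for every gated metric, candidate >= baseline + required gain.

    Assumes higher is better for every gated metric.
    """
    return all(candidate[m] >= baseline[m] + gain for m, gain in min_gain.items())

# Hypothetical metric names and values, for illustration only.
baseline = {"rouge1_f1": 0.42, "pairwise_win_rate": 0.50}
candidate = {"rouge1_f1": 0.45, "pairwise_win_rate": 0.58}
gate = {"rouge1_f1": 0.0, "pairwise_win_rate": 0.05}

promote = passes_quality_gate(candidate, baseline, gate)
# A candidate that wins more often but regresses on ROUGE is blocked.
regressed = passes_quality_gate({"rouge1_f1": 0.40, "pairwise_win_rate": 0.60}, baseline, gate)
```

Requiring a minimum gain on some metrics, rather than just "no worse", helps avoid churning deployments on noise-level differences.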

Extending MLOps to support GenOps

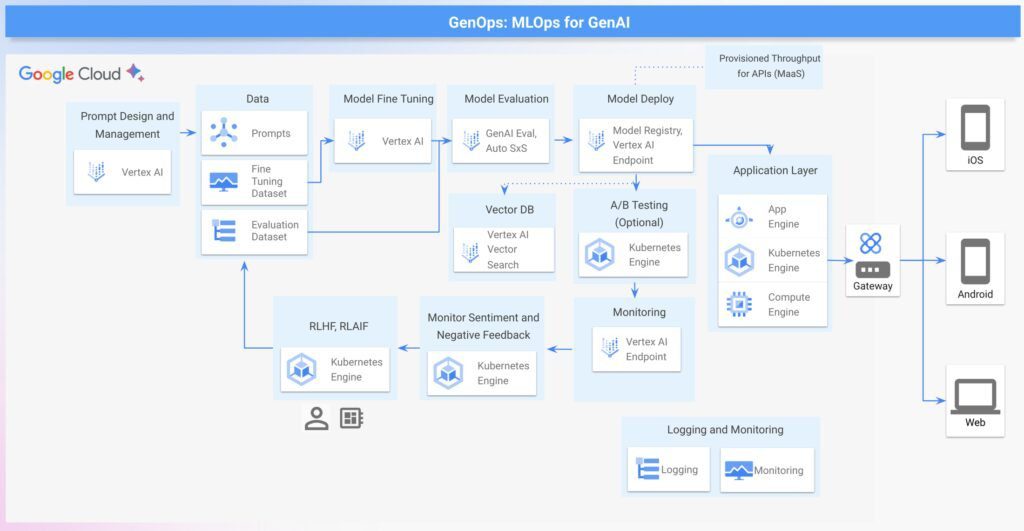

Let’s now examine the essential elements of a robust GenOps pipeline on Google Cloud, from initial experimentation with powerful models across multiple modalities to the critical concerns of fine-tuning, safety, and deployment, and see how each of these building blocks can be implemented.

Data: The path to generative AI begins with data.

- Few-shot examples: The examples supplied in a few-shot prompt guide the structure, phrasing, scope, and pattern of the model’s output.

- Supervised fine-tuning dataset: A labeled dataset used to adapt a pre-trained model to a specific task or domain.

- Golden evaluation dataset: A labeled dataset used to measure how well a model performs on a specific task. It supports both metric-based and manual evaluation for that task.
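To make the few-shot idea concrete, here is a minimal sketch of assembling a few-shot prompt from labeled examples. The `Input:`/`Output:` layout and the `build_few_shot_prompt` helper are illustrative assumptions, not a format required by any specific model.

```python
from typing import List, Tuple

def build_few_shot_prompt(instruction: str,
                          examples: List[Tuple[str, str]],
                          query: str) -> str:
    """Assemble a few-shot prompt: instruction, worked examples, then the new input."""
    parts = [instruction.strip(), ""]
    for i, (example_input, example_output) in enumerate(examples, start=1):
        parts.append(f"Example {i}")
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    # End with an open "Output:" so the model continues in the demonstrated pattern.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)

prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("Great battery life.", "positive"),
     ("The screen cracked within a week.", "negative")],
    "Fast shipping and it works perfectly.",
)
print(prompt)
```

Keeping the examples as data rather than hard-coding them into the prompt text makes it easy to version and swap them independently.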

Prompt management: Vertex AI Studio supports collaborative prompt design, testing, and refinement. Within a shared project, teams can enter prompt text, select models, adjust parameters, and save finished prompts.
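Vertex AI Studio handles saving prompts for you. To illustrate what prompt versioning tracks, the following hypothetical in-memory registry records each change to a prompt’s text, model, and parameters, and lets you roll back to an earlier version. `PromptRegistry`, `PromptVersion`, and the model name `my-model` are invented for this sketch and are not part of any Google Cloud API.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class PromptVersion:
    version: int
    template: str
    model: str
    params: Dict[str, float]
    digest: str

@dataclass
class PromptRegistry:
    """Hypothetical in-memory prompt store, keyed by prompt name."""
    history: Dict[str, List[PromptVersion]] = field(default_factory=dict)

    def save(self, name: str, template: str, model: str,
             params: Dict[str, float]) -> PromptVersion:
        versions = self.history.setdefault(name, [])
        # Fingerprint everything that affects the output: text, model, and parameters.
        key = f"{template}|{model}|{sorted(params.items())}"
        digest = hashlib.sha256(key.encode("utf-8")).hexdigest()[:12]
        if versions and versions[-1].digest == digest:
            return versions[-1]  # Unchanged prompt: do not create a duplicate version.
        pv = PromptVersion(len(versions) + 1, template, model, dict(params), digest)
        versions.append(pv)
        return pv

    def get(self, name: str, version: int = 0) -> PromptVersion:
        """Return version `version` (1-based), or the latest version when version is 0."""
        versions = self.history[name]
        return versions[-1] if version == 0 else versions[version - 1]

registry = PromptRegistry()
v1 = registry.save("summarize", "Summarize: {text}", "my-model", {"temperature": 0.2})
v2 = registry.save("summarize", "Summarize in three bullets: {text}", "my-model", {"temperature": 0.2})
v2_again = registry.save("summarize", "Summarize in three bullets: {text}", "my-model", {"temperature": 0.2})
rollback = registry.get("summarize", 1)
```

Hashing the model name and parameters along with the text matters because the same prompt can behave very differently under a different model or temperature.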

Model fine-tuning: In this stage, the pre-trained Gen AI model is adapted to specific tasks or domains using the fine-tuning dataset. Vertex AI offers two techniques for fine-tuning models on Google Cloud:

- Supervised fine-tuning: A good option when the task is well defined and labeled data is available. It is particularly effective for domain-specific applications where the language or content differs significantly from the data the large model was originally trained on.

- Reinforcement learning from human feedback (RLHF): Uses human feedback to fine-tune a model. RLHF is recommended when the model’s desired output is complex and difficult to describe. If the desired output is not difficult to define, supervised fine-tuning is recommended.
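Supervised fine-tuning data is usually prepared as JSON Lines, with one labeled example per line. The sketch below writes prompt/response pairs in a chat-style `contents` layout modeled on the format Vertex AI documents for Gemini supervised tuning. Treat the exact schema as an assumption and check the current documentation for your target model before uploading; the `write_sft_jsonl` helper and the sample pairs are hypothetical.

```python
import json
import os
import tempfile
from typing import Iterable, Tuple

def write_sft_jsonl(pairs: Iterable[Tuple[str, str]], path: str) -> int:
    """Write (prompt, response) pairs as JSON Lines: one training example per line."""
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for prompt, response in pairs:
            # Assumed chat-style schema: a user turn followed by the target model turn.
            record = {
                "contents": [
                    {"role": "user", "parts": [{"text": prompt}]},
                    {"role": "model", "parts": [{"text": response}]},
                ]
            }
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
            count += 1
    return count

pairs = [
    ("Summarize: The invoice is overdue by 30 days.", "Invoice 30 days overdue."),
    ("Summarize: The shipment left the warehouse today.", "Shipment dispatched today."),
]
path = os.path.join(tempfile.mkdtemp(), "train.jsonl")
written = write_sft_jsonl(pairs, path)

# Read the file back to confirm each line is a standalone JSON object.
with open(path, encoding="utf-8") as f:
    records = [json.loads(line) for line in f]
```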

Visualization tools such as TensorBoard and the Embedding Projector can help identify irrelevant prompts. These prompts can then be used to prepare responses for RLHF and RLAIF (reinforcement learning from AI feedback).
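Visual inspection can be complemented with a simple programmatic check. As a minimal sketch, swapping in a different technique from the visualization described above, the code flags prompts whose embedding has low cosine similarity to the centroid of known in-domain prompts. The 3-dimensional vectors and the 0.8 threshold are hypothetical; real embeddings would come from an embedding model, and the threshold would need tuning on your data.

```python
import math
from typing import Dict, List

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def centroid(vectors: List[List[float]]) -> List[float]:
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def flag_off_topic(prompt_vectors: Dict[str, List[float]],
                   in_domain: List[List[float]],
                   threshold: float = 0.8) -> List[str]:
    """Return prompts whose similarity to the in-domain centroid falls below threshold."""
    center = centroid(in_domain)
    return [p for p, v in prompt_vectors.items() if cosine(v, center) < threshold]

# Toy 3-dimensional "embeddings" (hypothetical values).
in_domain = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]]
prompts = {
    "How do I reset my router?": [0.85, 0.15, 0.05],
    "Write a poem about cats": [0.0, 0.1, 0.95],
}
flagged = flag_off_topic(prompts, in_domain)
```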

A UI that lets human raters review and edit LLM responses can also be built with open source frameworks such as Mesop, from Google.

Model evaluation: The Vertex AI Gen AI Evaluation Service assesses Gen AI models using explainable metrics. It offers two main categories of metrics:

- Model-based metrics: These metrics use a Google-proprietary model as the judge. Model-based metrics can be measured pairwise or pointwise.

- Computation-based metrics: These metrics (such as ROUGE and BLEU) use mathematical formulas to compare the model’s output against a reference or ground truth.
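As an example of a computation-based metric, the following sketch computes ROUGE-1 F1, the harmonic mean of unigram precision and recall against a reference. It assumes lowercase whitespace tokenization; standard ROUGE implementations apply their own tokenization and optional stemming, so scores from this sketch may differ from those reported by the evaluation service.

```python
from collections import Counter

def rouge1_f1(candidate: str, reference: str) -> float:
    """ROUGE-1 F1: clipped unigram overlap between candidate and reference."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count per token (clipped matches).
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)

score = rouge1_f1("the cat sat on the mat", "the cat is on the mat")
```

Here 5 of the 6 tokens match on each side ("the" twice, plus "cat", "on", "mat"), so precision and recall are both 5/6 and so is the F1 score.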

Automatic side-by-side (AutoSxS) is a pairwise model-based evaluation tool that compares the performance of pre-generated predictions, or of Gen AI models in the Vertex AI Model Registry. It uses an autorater to decide which model gives the better response to each prompt.
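The core of a side-by-side evaluation is aggregating per-prompt pairwise verdicts into win rates. In the sketch below, `toy_judge` is a hypothetical stand-in for the autorater (AutoSxS uses a model as the judge, not a word-overlap rule), and the examples are invented for illustration.

```python
from typing import Callable, Dict, List, Tuple

# A judge takes (prompt, response_a, response_b) and returns "A", "B", or "TIE".
Judge = Callable[[str, str, str], str]

def side_by_side(examples: List[Tuple[str, str, str]], judge: Judge) -> Dict[str, float]:
    """Aggregate pairwise verdicts into the fraction of prompts won by A, won by B, or tied."""
    counts = {"A": 0, "B": 0, "TIE": 0}
    for prompt, response_a, response_b in examples:
        counts[judge(prompt, response_a, response_b)] += 1
    total = len(examples)
    return {verdict: n / total for verdict, n in counts.items()}

def toy_judge(prompt: str, response_a: str, response_b: str) -> str:
    """Hypothetical stand-in for an autorater: prefers the response sharing more words with the prompt."""
    prompt_words = set(prompt.lower().split())
    score_a = len(prompt_words & set(response_a.lower().split()))
    score_b = len(prompt_words & set(response_b.lower().split()))
    if score_a == score_b:
        return "TIE"
    return "A" if score_a > score_b else "B"

examples = [
    ("what is the capital of france", "the capital of france is paris", "paris"),
    ("name a primary color", "red", "blue"),
    ("define latency", "latency is delay", "it is the time a request takes"),
]
rates = side_by_side(examples, toy_judge)
```

Passing the judge in as a function keeps the aggregation logic independent of how verdicts are produced, so a model-based rater could replace the toy rule without changing `side_by_side`.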

Model deployment:

- Models with managed APIs that need no deployment: Google’s foundation Gen AI models, such as Gemini, are served through managed APIs and can accept prompts without being deployed.

- Models that require deployment: Other Gen AI models must be deployed to a Vertex AI endpoint before they can accept prompts. To be deployed, a model must be available in the Vertex AI Model Registry. There are two types of such models:

  - Fine-tuned models: Models created by tuning a supported foundation model on custom data.

  - Gen AI models without managed APIs: Many models in the Vertex AI Model Garden, such as Gemma 2, Mistral, and Nemo, can be accessed through the Open Notebook or Deploy button.

For more control, some models can also be deployed to Google Kubernetes Engine (GKE). On GKE, a model is served on a single GPU using online serving frameworks such as NVIDIA Triton Inference Server or vLLM, a memory-efficient, high-throughput LLM inference engine.

Monitoring: Continuous monitoring tracks key metrics, data patterns, and trends to assess how deployed models perform in real-world conditions. Cloud Monitoring is used to monitor Google Cloud services.
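Cloud Monitoring provides managed metrics, dashboards, and alerting policies, so these checks would not normally be hand-rolled. To show what a latency and error-rate check on a deployed model involves, here is a plain-Python sketch using a nearest-rank percentile; the `check_slos` helper, the 800 ms p95 limit, the 10% error budget, and the sample latencies are all hypothetical.

```python
import math
from typing import Dict, List

def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def check_slos(latencies_ms: List[float], error_count: int, request_count: int,
               p95_limit_ms: float, max_error_rate: float) -> Dict[str, bool]:
    """Compare observed p95 latency and error rate against their limits."""
    p95 = percentile(latencies_ms, 95)
    error_rate = error_count / request_count
    return {"p95_ok": p95 <= p95_limit_ms, "error_rate_ok": error_rate <= max_error_rate}

# Hypothetical request latencies (ms) from a monitoring window.
latencies = [120, 135, 150, 160, 180, 210, 230, 250, 900, 1200]
p95 = percentile(latencies, 95)
status = check_slos(latencies, error_count=1, request_count=10,
                    p95_limit_ms=800, max_error_rate=0.1)
```

The median here is well under 800 ms, but the two slow requests push the p95 over the limit, which is why tail percentiles rather than averages are usually watched for LLM serving.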

By adopting GenOps practices, organizations can realize more of the potential of Gen AI and build systems that are efficient and aligned with business goals.