Gemma 2 offers best-in-class performance, fast inference across a range of hardware, and simple integration with other AI tools.

AI has the potential to address some of humanity's most pressing problems, but only if everyone has access to the tools needed to build with it. That is why Google introduced the Gemma family of lightweight, state-of-the-art open models earlier this year, built from the same research and technology as the Gemini models. Google has continued to grow the Gemma family with CodeGemma, RecurrentGemma, and PaliGemma, each of which offers unique capabilities for different AI tasks and is readily accessible through integrations with partners like Hugging Face, NVIDIA, and Ollama.

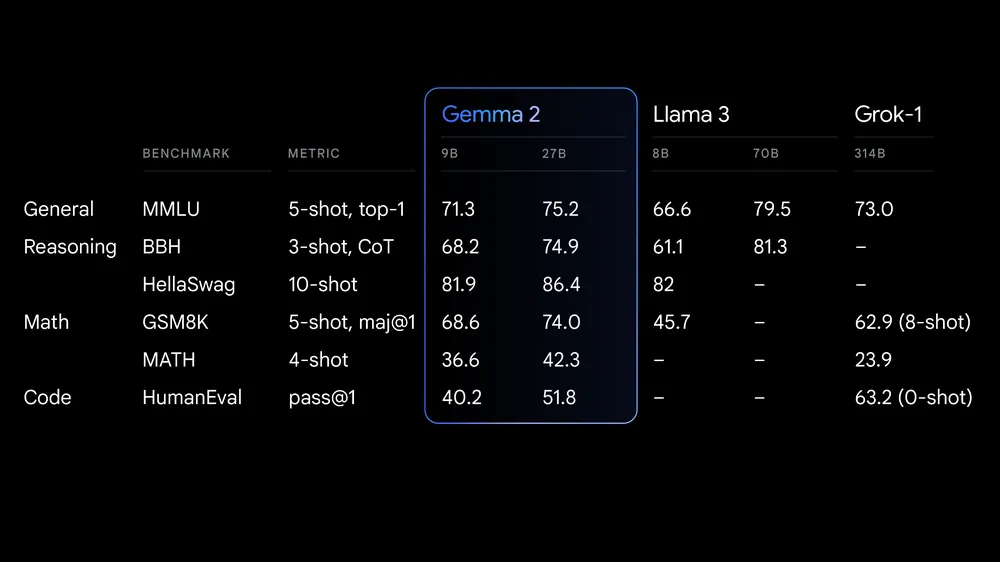

Google is now officially releasing Gemma 2 to researchers and developers worldwide. Available in 9 billion (9B) and 27 billion (27B) parameter sizes, Gemma 2 is higher-performing and more efficient at inference than the first generation, with notable improvements in safety. The 27B model offers a competitive alternative to models more than twice its size, delivering the kind of performance that was possible only with proprietary models as recently as December. That performance can now be achieved on a single NVIDIA H100 Tensor Core GPU or TPU host, greatly lowering the cost of deployment.

A new open model standard for efficiency and performance

Google built Gemma 2 on a redesigned architecture, engineered for both high performance and efficient inference. Here is what makes it stand out:

Outsized performance: At 27B, Gemma 2 delivers the best performance in its size class and offers a competitive alternative to models more than twice its size. The 9B Gemma 2 model also delivers class-leading performance, outperforming Llama 3 8B and other open models in its size category. See the technical report for detailed performance breakdowns.

Efficiency and cost savings: The 27B Gemma 2 model is designed to run inference efficiently at full precision on a single Google Cloud TPU host, NVIDIA A100 80GB Tensor Core GPU, or NVIDIA H100 Tensor Core GPU, which reduces costs without sacrificing performance. This makes AI deployments more affordable and more widely accessible.
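A back-of-the-envelope estimate helps show why a 27B-parameter model can plausibly fit on a single 80 GB accelerator. The sketch below is only an illustration under stated assumptions: a nominal 27e9 parameters, 2 bytes per parameter for bfloat16 (the announcement does not say which data type it has in mind), weights only with no KV cache, activations, or framework overhead, and 1 GB taken as 1e9 bytes. The function names are hypothetical.

```python
# Rough weight-memory estimate for a dense model.
# Assumptions (not from the announcement): nominal parameter count,
# weights only (no KV cache, activations, or runtime overhead), 1 GB = 1e9 bytes.

BYTES_PER_PARAM = {"bfloat16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the memory needed to hold the weights alone, in GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_device(num_params: float, dtype: str, device_gb: float) -> bool:
    """Return True if the weights alone fit in the given device memory."""
    return weight_memory_gb(num_params, dtype) <= device_gb


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        gb = weight_memory_gb(27e9, dtype)
        ok = fits_on_device(27e9, dtype, device_gb=80)
        print(f"27B weights in {dtype}: {gb:.1f} GB, fits in 80 GB: {ok}")
```

Real deployments need headroom beyond the weights, so this is a lower bound rather than a sizing guide.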

Fast inference across hardware: Gemma 2 is optimised to run quickly on a range of hardware, from powerful gaming laptops and high-end desktops to cloud-based setups. Try Gemma 2 at full precision in Google AI Studio, run the quantized version locally on your CPU with Gemma.cpp, or use Hugging Face Transformers to run it at home on an NVIDIA RTX or GeForce RTX GPU.
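As a rough illustration of the Hugging Face Transformers route, the sketch below wraps the library's `pipeline` API in a small helper. Several details are assumptions rather than anything stated in the announcement: the checkpoint name `google/gemma-2-9b-it`, the bfloat16 data type, `device_map="auto"` (which needs the `accelerate` package), and the generation length. The helper names are hypothetical, and downloading the weights requires accepting the Gemma licence on Hugging Face and a recent Transformers release.

```python
def build_chat(prompt: str) -> list[dict]:
    """Wrap a single user prompt in the chat-message format the pipeline accepts."""
    return [{"role": "user", "content": prompt}]


def generate(prompt: str,
             model_id: str = "google/gemma-2-9b-it",  # assumed checkpoint name
             max_new_tokens: int = 128) -> str:
    """Generate a reply with a text-generation pipeline (downloads the model)."""
    # Imported lazily so the helper above can be used without these packages.
    import torch
    from transformers import pipeline

    pipe = pipeline(
        "text-generation",
        model=model_id,
        torch_dtype=torch.bfloat16,  # assumption: 16-bit weights to save memory
        device_map="auto",           # assumption: let accelerate place the model
    )
    outputs = pipe(build_chat(prompt), max_new_tokens=max_new_tokens)
    # With chat input, the pipeline returns the conversation; the last
    # message is the model's reply.
    return outputs[0]["generated_text"][-1]["content"]


if __name__ == "__main__":
    print(generate("Explain retrieval-augmented generation in two sentences."))
```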

Designed with developers and researchers in mind

In addition to being more capable, Gemma 2 is designed to fit into your workflows more easily:

- Open and accessible: Like the original Gemma models, Gemma 2 is offered under Google's commercially friendly Gemma licence, which allows developers and researchers to share and commercialise their innovations.

- Wide compatibility with frameworks: Gemma 2 works with your preferred tools and workflows through compatibility with major AI frameworks: Hugging Face Transformers; JAX, PyTorch, and TensorFlow via native Keras 3.0; vLLM; Gemma.cpp; Llama.cpp; and Ollama. Gemma is also optimised with NVIDIA TensorRT-LLM to run on NVIDIA-accelerated infrastructure or as an NVIDIA NIM inference microservice, with optimisation for NVIDIA NeMo to follow. You can fine-tune today with Keras and Hugging Face, and Google is actively working to enable additional parameter-efficient fine-tuning options.

- Easy deployment: Starting next month, Google Cloud customers will be able to deploy and manage Gemma 2 on Vertex AI quickly and easily.

Explore the new Gemma Cookbook, a collection of practical examples and recipes to guide you through building your own applications and fine-tuning Gemma 2 models for specific tasks. Learn how to use Gemma with your preferred tooling for common tasks such as retrieval-augmented generation.
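For readers unfamiliar with retrieval-augmented generation, the idea can be sketched in a few lines: retrieve the passages most relevant to a question, then place them in the prompt that is sent to the model. The example below is a toy illustration and is not taken from the Gemma Cookbook. It assumes a simple word-overlap score in place of a real embedding-based retriever, and the function names and prompt wording are hypothetical. The resulting prompt could then be passed to any Gemma 2 runtime.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the query.

    Word overlap is a stand-in for a real retriever (for example, one
    based on embeddings); ties keep the original document order.
    """
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Assemble a prompt that grounds the question in the retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    docs = [
        "Gemma 2 comes in 9B and 27B parameter sizes.",
        "Navarasa is a model built for Indian languages.",
    ]
    print(build_prompt("What sizes does Gemma 2 come in?", docs))
```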

Responsible AI development

Google is committed to giving developers and researchers the resources they need to build and deploy AI responsibly, including through its Responsible Generative AI Toolkit. The recently open-sourced LLM Comparator helps developers and researchers evaluate language models in depth. You can now use the companion Python library to run comparative evaluations with your own model and data, and visualise the results in the app. Google is also actively working on open-sourcing SynthID, its text watermarking technology, for Gemma models.

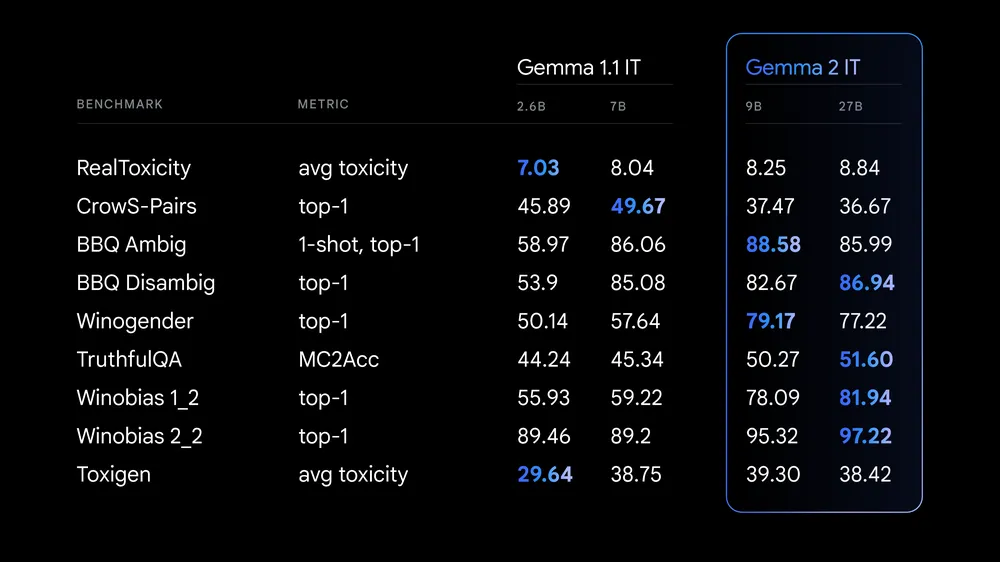

To identify and mitigate potential biases and risks, Google trained Gemma 2 following its strict internal safety processes, which include filtering pre-training data and performing rigorous testing and evaluation against a comprehensive set of metrics. Google publishes its results on a large set of public benchmarks related to safety and representational harms.

Projects built with Gemma

The first Gemma launch led to more than 10 million downloads and countless inspiring projects. For example, Navarasa used Gemma to create a model rooted in India's linguistic diversity.

With Gemma 2, developers can now launch even more ambitious projects and unlock new levels of performance and potential in their AI creations. Google will continue to explore new architectures and develop specialised Gemma variants to tackle a wider range of AI tasks and challenges. This includes an upcoming 2.6B-parameter Gemma 2 model, designed to further close the gap between lightweight accessibility and powerful performance. The technical report contains more information about this upcoming release.

Getting started

Gemma 2 is now available in Google AI Studio, so you can test its full performance capabilities at 27B without any hardware requirements. You can also download the Gemma 2 model weights from Kaggle and Hugging Face Models, with Vertex AI Model Garden availability coming soon.

To support research and development, Gemma 2 is also available free of charge through Kaggle or via a free tier for Colab notebooks. First-time Google Cloud customers may be eligible for $300 in credits. Academic researchers who want to accelerate their work with Gemma 2 can apply to the Gemma 2 Academic Research Programme to receive Google Cloud credits. The deadline for applications is August 9th.