AI Implementation at Netflix

Amer Ather, a Cloud and Studio Performance Engineer at Netflix, and Vrushabh Sanghavi, an End-to-End AI Manager at Intel, spoke at Intel Innovation 2023 about the benefits and performance acceleration that the combination of Intel Xeon processors and Intel Software brings to the entire AI lifecycle.

Ather discussed how Intel Xeon CPUs are the platform of choice for cost-effectiveness and flexibility across a wide range of services, how Netflix is using AI inference for video delivery and recommendations, how optimization work is being done at Netflix on video encoding and downsampling using the Intel oneAPI Deep Neural Network Library (oneDNN), and how the collaboration between Intel and Netflix on profiling and architectural analysis helps break through performance barriers.

The session covered in detail the Netflix Performance Engineering team’s strategies for enhancing the viewer experience while reducing cloud and streaming costs, along with methods for using Intel software optimizations to take full advantage of Intel hardware capabilities.

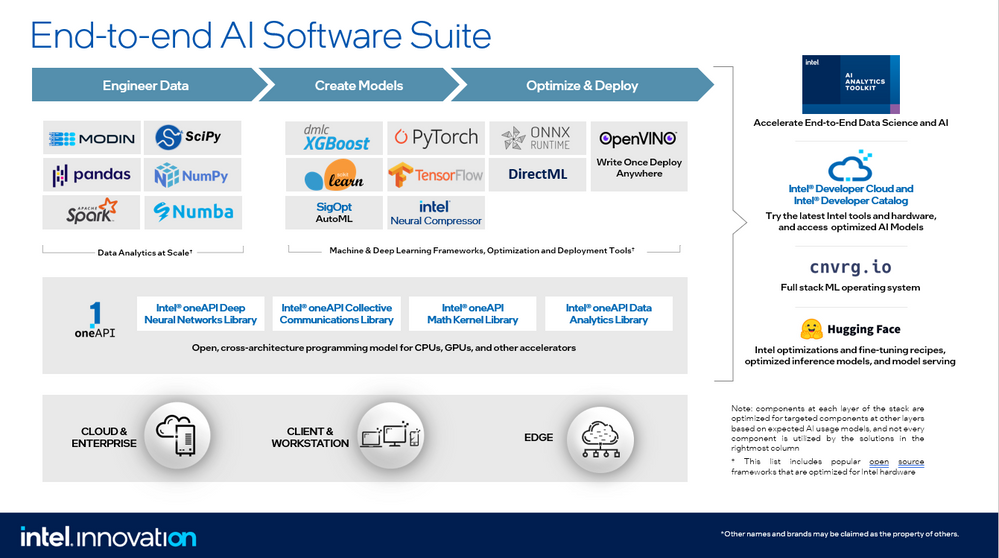

The latest Intel software helps you get the best performance out of Intel hardware, including multiarchitecture systems of CPUs, GPUs, FPGAs, and other accelerators, as well as CPUs with built-in acceleration. For example, with Intel’s extensions for industry-standard frameworks and libraries such as TensorFlow, PyTorch, Scikit-learn, and Pandas, developers can obtain more than a 10x performance boost in AI applications with a single-line code change.
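As an illustration of what such a single-line change can look like, here is a minimal sketch using Intel Extension for Scikit-learn, whose documented entry point is `patch_sklearn()` from the `sklearnex` package. The helper name `enable_intel_sklearn` is hypothetical, and the sketch falls back to stock Scikit-learn when the extension is not installed. Any actual speedup depends on the algorithm, the data, and the hardware.

```python
def enable_intel_sklearn() -> bool:
    """Try to switch Scikit-learn to Intel-optimized implementations.

    Returns True if the extension was found and applied, False otherwise.
    (Hypothetical helper; only patch_sklearn() comes from the extension.)
    """
    try:
        # Shipped in the scikit-learn-intelex package.
        from sklearnex import patch_sklearn
    except ImportError:
        return False
    patch_sklearn()  # the "single-line" change
    return True


# Call this before importing any sklearn estimators so they pick up the patch.
accelerated = enable_intel_sklearn()
print("Intel-optimized Scikit-learn enabled:", accelerated)
```

After the call, existing code such as `from sklearn.cluster import KMeans` is left unchanged; supported estimators are routed to the optimized implementations, and unsupported ones keep using stock Scikit-learn.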

A replay of the entire session will be available soon. Some key takeaways:

The Importance of Understanding the Entire AI Pipeline for Best Performance

Intel’s goal is to make AI ubiquitous by meeting the demands of end-to-end application performance rather than isolated DL or ML kernel performance. AI is made up of many complex tasks: data preparation, processing, traditional machine learning, training, fine-tuning, inference, and the management and movement of structured and unstructured data, to name a few. Successful implementation requires a comprehensive, system-level approach.

Too often, AI deployments fail because too little attention is paid to the end-to-end AI pipeline, and because teams are unaware of the technologies that can fill the gaps and free developer resources from the costly, proprietary platforms the business has become locked into.

Although the news is currently dominated by generative AI, LLMs, and GPU scarcity, the majority of AI workloads, such as data processing and analysis, are general-purpose workloads that are best suited to CPUs. In addition, to speed up AI workflows with varying characteristics, 4th Gen Intel Xeon Scalable processors offer specialized hardware support in the form of Intel Accelerator Engines like Intel Advanced Matrix Extensions (Intel AMX) and optimized instruction set architectures like Intel Advanced Vector Extensions 512 (Intel AVX-512).

When used with Intel’s end-to-end AI software suite, Intel Xeon processors cover the complete end-to-end pipeline, including data engineering, model building, optimization, tuning, and deployment.

Netflix offers numerous instances of how enterprise developers may improve a variety of applications by utilizing the whole Intel AI software portfolio, Intel Xeon CPU instruction sets, and analysis tools like VTune Profiler.

Enhancing Viewer Experience with Video Encoding

Performance is more crucial than ever in today’s digital environment, according to Ather. Users desire responsive and robust applications. Netflix streaming service users anticipate a high-quality, immersive video experience.

Every device that runs the Netflix app has different capabilities, access profiles, and requirements, and these devices operate in a variety of network environments. Delivering high-quality content to Netflix members across 190 countries depends on performance optimization and end-to-end reliability. The Netflix streaming experience is characterized by an enhanced user interface, personalized recommendations, fast streaming, and a vast library of engaging content.

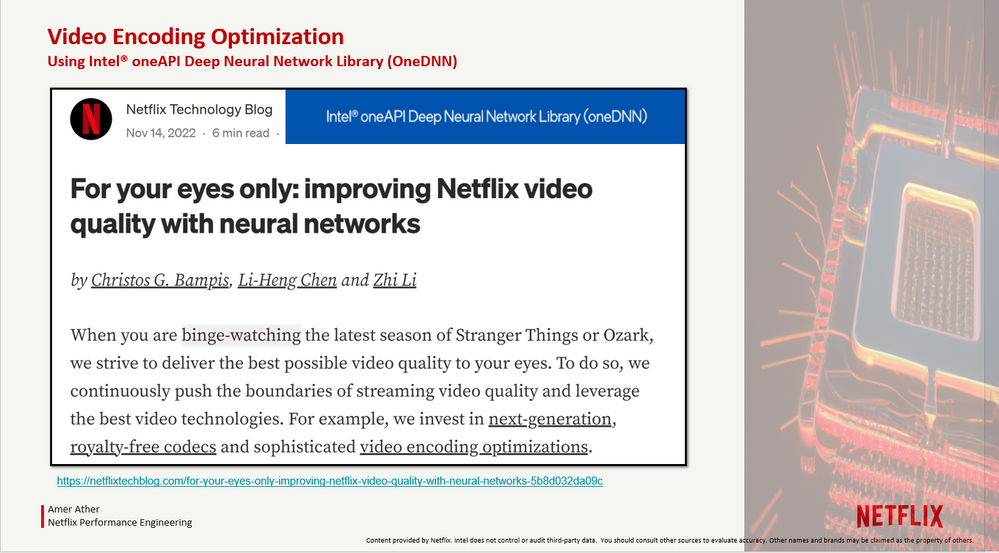

Ather explained how the first stage in Netflix’s machine learning journey to enhance the quality of their video encoding is downscaling via neural networks. Traditional filters like Lanczos are frequently used for downscaling. However, using data-driven or neural network-based downsampling techniques has enormous promise for enhancing the quality of compressed video.
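For context on the traditional baseline, the following is a minimal sketch of Lanczos downscaling on a one-dimensional signal (think of a single row of pixels). It is not Netflix’s implementation or FFmpeg’s scaler. The function names, the window size `a = 3`, and the border clamping are illustrative assumptions.

```python
import math


def lanczos_kernel(x: float, a: int = 3) -> float:
    """Lanczos window: sinc(x) * sinc(x / a) for |x| < a, else 0."""
    if x == 0.0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)


def lanczos_downscale_1d(samples, factor: int, a: int = 3):
    """Downscale a 1-D signal by an integer factor with a Lanczos filter.

    The kernel is stretched by `factor` so that it also acts as a low-pass
    filter, and the weights are normalized so flat regions keep their value.
    """
    n = len(samples)
    out = []
    for i in range(n // factor):
        # Center of output sample i, expressed in input coordinates.
        center = (i + 0.5) * factor - 0.5
        lo = math.floor(center - a * factor) + 1
        hi = math.floor(center + a * factor)
        acc = 0.0
        weight_sum = 0.0
        for j in range(lo, hi + 1):
            w = lanczos_kernel((j - center) / factor, a)
            src = samples[min(max(j, 0), n - 1)]  # clamp at the borders
            acc += w * src
            weight_sum += w
        out.append(acc / weight_sum)
    return out


row = [float(v) for v in range(16)]
half = lanczos_downscale_1d(row, factor=2)
print(len(row), "->", len(half), "samples")
```

The filter weights here are fixed by a formula and are the same for every video. A learned downsampler replaces them with weights trained on data, which is the opportunity described above.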

Netflix’s entire encoding pipeline runs on cloud-based Intel Xeon instances. With assistance from Intel engineering, FFmpeg, VMAF (Video Multimethod Assessment Fusion), and other relevant encoding libraries have been vectorized to take full advantage of the instruction-level parallelism support offered by AVX-512, the speedup that VNNI brings to matrix multiplication (a common neural network operation), and the more recent AMX acceleration built into the Sapphire Rapids platform (4th Gen Intel Xeon Scalable processors).
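To make the VNNI part concrete: the AVX-512 VNNI instruction `VPDPBUSD` multiplies four unsigned 8-bit values by four signed 8-bit values and adds the four products to a signed 32-bit accumulator, all in one instruction, and a 512-bit register holds 16 such lanes. The sketch below emulates a single lane in plain Python to show the arithmetic that quantized matrix multiplication relies on. The function names are hypothetical, and real code would reach this instruction through a library such as oneDNN rather than emulate it.

```python
def vpdpbusd_lane(acc: int, a_u8, b_s8) -> int:
    """Emulate one 32-bit lane of the VNNI instruction VPDPBUSD.

    a_u8: four unsigned 8-bit values (0..255), e.g. quantized activations
    b_s8: four signed 8-bit values (-128..127), e.g. quantized weights
    acc:  signed 32-bit accumulator
    """
    assert len(a_u8) == 4 and len(b_s8) == 4
    assert all(0 <= a <= 255 for a in a_u8)
    assert all(-128 <= b <= 127 for b in b_s8)
    total = acc + sum(a * b for a, b in zip(a_u8, b_s8))
    # Wrap to signed 32 bits (the non-saturating form of the instruction).
    total &= 0xFFFFFFFF
    return total - (1 << 32) if total >= (1 << 31) else total


def int8_dot(activations, weights) -> int:
    """Dot product of int8 vectors, four elements per emulated instruction.

    Assumes the vector length is a multiple of 4.
    """
    acc = 0
    for i in range(0, len(activations), 4):
        acc = vpdpbusd_lane(acc, activations[i:i + 4], weights[i:i + 4])
    return acc


print(int8_dot([1, 2, 3, 4, 5, 6, 7, 8], [1, -1, 1, -1, 2, 2, 2, 2]))
```

Without VNNI, the same multiply-accumulate takes a sequence of three AVX-512 instructions (`VPMADDUBSW`, `VPMADDWD`, `VPADDD`), which is why int8 convolution and matrix multiplication benefit from the fused form.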

The Downsampler, a convolutional neural network that Netflix recently began using on a small scale, consists of ten convolutional layers. The network was designed specifically for downsampling in adaptive video streaming. The first five layers are referred to as “preprocessing blocks” and the final five as “downscaling blocks.” The preprocessing blocks extract essential information from the input before passing it to the downscaling blocks, which perform the actual downscaling.

According to Ather, A/B testing of the Downsampler showed improved video encoding quality across different encoders and upsamplers, and it performed well on a number of quality metrics. In 77% of human tests, the Downsampler’s video quality was preferred. He stated, “We saw an up to 2x performance boost for various encoding jobs with oneDNN and VNNI/AVX-512 enabled,” adding that performance gains in the form of fewer CPU hours or a frames-per-second (fps) speedup to encode a title mean enormous savings in cloud infrastructure costs. Ather discussed this further in an earlier blog entry.

Improved Content Recommendations with AI Inference Optimization

Predicting viewer preferences is Netflix’s second objective. In order to tailor member home pages and offer pertinent titles to audiences worldwide, Netflix heavily utilizes machine learning. Every element of the home page is based on evidence and has undergone A/B testing, all supported by machine learning.

The Adaptive Row Ordering Service is one example: it helps users quickly find relevant content by creating a personalized row ordering. Models choose which new content to recommend to users based on their viewing patterns, location, and language, among other things. Another example is the Evidence Service, which ranks and selects assets, such as artwork, plot summaries, and video clips, to present with program information on home pages based on user activity.

According to Ather, “Netflix hosts its inference services primarily on Intel Xeon processors because they are more practical and economical than GPUs.” He explained that Netflix services use a pure Java version of XGBoost for inference and perform Java-based inference with a negligible penalty for JNI calls into TensorFlow. Netflix’s end-to-end pipeline, which is developed entirely in Java, includes a sizable share of feature encoding and generation. Offloading inference tasks to a GPU would therefore increase cost while yielding minimal latency gains.

Performance Bottlenecks Can Be Found and Solved Using Intel Profiling Tools

The mission of the Netflix Performance Engineering team is to assist Netflix in lowering cloud and streaming expenses while enhancing the viewing experience. In order to achieve this objective, Ather said that “Netflix is working closely with Intel as our strategic technology partner in optimizing our cloud infrastructure and software stacks.”

As part of that partnership, Netflix has been effective in identifying regressions and improving the performance of production workloads using Intel profiling tools, including VTune Profiler, Intel PerfSpect, and Intel Process Watch.

“Rich profiling tools from Intel…provide insightful analyses of system performance. We have improved performance in test and production environments by using these tools’ wide range of capabilities to find regressions.”

Ather commended VTune in particular for its capacity to configure performance monitoring units (PMU) on Intel CPUs to trace hardware events, find challenging problems, and successfully root cause them. One such success story was reported on the Netflix blog, which described how the company was able to pinpoint the root cause of a glitch while consolidating or transferring important microservices to massive cloud instances thanks to VTune’s robust observability.

Ather remarked that VTune makes it simple to study how code executes and to find areas where CPU and memory use can be improved.
