Foundation models (FMs) are ushering in a new era in machine learning (ML) and artificial intelligence (AI), accelerating the development of AI that can be adapted to a wide range of downstream tasks and applications.

As processing data where it is generated becomes more important, serving AI models at the enterprise edge enables near-real-time predictions while meeting data sovereignty and privacy requirements. Combining edge computing with the IBM watsonx data and AI platform capabilities for FMs lets organizations run AI workloads for FM fine-tuning and inference at the operational edge. As a result, organizations can scale AI deployments at the edge, reducing the time and cost to deploy and improving response times.

What are foundation models (FMs)?

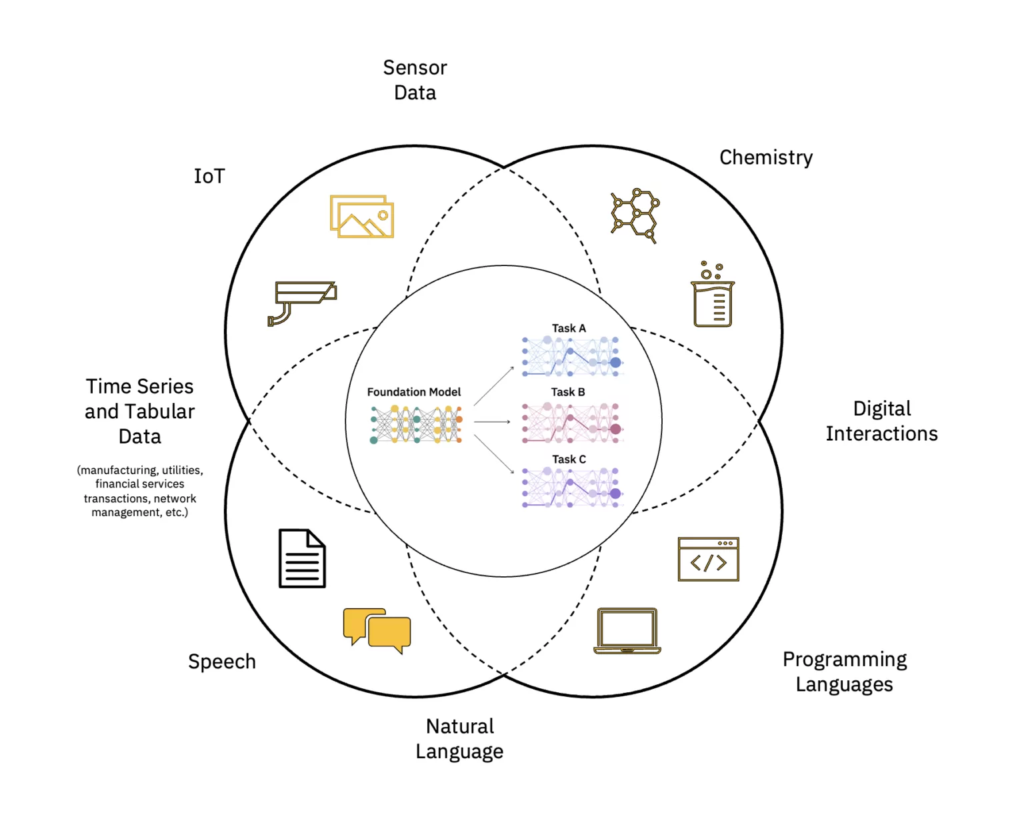

Foundation models (FMs) are trained on large amounts of unlabeled data and can be adapted to many downstream tasks and applications. Unlike traditional task-specific AI models, FMs learn more general representations that transfer across domains and problems. As the name implies, a single FM can serve as the foundation for many AI applications.

FMs address two major obstacles to enterprise AI adoption. First, organizations generate large volumes of unlabeled data, but only a small fraction of it is labeled for AI model training. Second, labeling and annotation require hundreds of hours of subject matter expert (SME) effort, so scaling across use cases would take armies of SMEs and data professionals and quickly become prohibitively expensive. By ingesting massive volumes of unlabeled data and using self-supervised training, FMs remove these obstacles and open the door to enterprise-wide AI deployment: the large volumes of unlabeled data that every organization already holds become usable for producing insights.
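
To make "self-supervised" concrete: the training signal comes from the unlabeled data itself, so no SME has to annotate anything. A common objective for language models (used here as an illustrative assumption, since the text does not say which objective a given FM uses) is masked-token prediction, where some tokens are hidden and the model learns to recover them. The sketch below only builds such (input, target) pairs from raw text with naive whitespace tokenization; `make_masked_example`, the `[MASK]` token, and the 15% masking rate are illustrative choices, not part of any specific product.

```python
import random

MASK = "[MASK]"  # illustrative mask token (assumption)


def make_masked_example(text, mask_rate=0.15, seed=None):
    """Turn one unlabeled sentence into a self-supervised training pair.

    Returns (inputs, targets): `inputs` has some tokens replaced by MASK,
    and `targets` maps each masked position to the original token that a
    model would be trained to predict. No human labeling is involved.
    """
    rng = random.Random(seed)
    tokens = text.split()  # naive whitespace tokenization for illustration
    if not tokens:
        return [], {}
    # Mask at least one token so every example carries a training signal.
    n_mask = max(1, round(mask_rate * len(tokens)))
    positions = sorted(rng.sample(range(len(tokens)), n_mask))
    inputs = list(tokens)
    targets = {}
    for pos in positions:
        targets[pos] = tokens[pos]
        inputs[pos] = MASK
    return inputs, targets


if __name__ == "__main__":
    sentence = "crack detected near the north pier of the concrete bridge"
    inputs, targets = make_masked_example(sentence, seed=0)
    print(" ".join(inputs))
    print(targets)
```

The "labels" here are simply the hidden tokens, which is why FMs can learn from data that no one has tagged.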

What are large language models?

Large language models (LLMs) are foundation models built from many layers of neural networks and trained on enormous quantities of unlabeled data. Self-supervised learning lets them perform natural language processing (NLP) tasks in a human-like way (Figure 1).

Scale and accelerate the impact of AI

Building and deploying a foundation model involves multiple steps: data ingestion, data selection, pre-processing, FM pre-training, tuning the model for one or more downstream tasks, inference serving, and data and AI model governance and lifecycle management. Together, these steps are known as FMOps.
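
The FMOps steps form an ordered pipeline, with governance and lifecycle management applying across all of them. The following is a minimal, hypothetical sketch: the stage names mirror the paragraph, while the `Artifact` record and the placeholder stage functions are illustrative assumptions, not an IBM or Red Hat API. Each stage appends a lineage entry, the kind of audit metadata that lifecycle governance relies on.

```python
from dataclasses import dataclass, field


@dataclass
class Artifact:
    """Hypothetical record passed from one FMOps stage to the next."""
    payload: dict
    lineage: list = field(default_factory=list)  # audit trail for governance


def make_stage(name):
    """Create a placeholder stage that only records that it ran."""
    def stage(artifact):
        artifact.payload.setdefault("steps", []).append(name)
        artifact.lineage.append({"stage": name, "status": "ok"})
        return artifact
    return stage


# Stage names mirror the FMOps steps described in the text.
FMOPS_STAGES = [
    make_stage(name)
    for name in ("ingest", "select", "preprocess", "pretrain", "tune", "serve")
]


def run_fmops(artifact, stages=FMOPS_STAGES):
    """Run stages in order; governance tooling can inspect artifact.lineage."""
    for stage in stages:
        artifact = stage(artifact)
    return artifact


if __name__ == "__main__":
    result = run_fmops(Artifact(payload={"dataset": "unlabeled-corpus"}))
    for entry in result.lineage:
        print(entry)
```

In a real platform, each placeholder would be replaced by actual data, training, and serving jobs; the point of the sketch is only the ordering and the lineage record that governance consumes.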

IBM watsonx, an enterprise-ready AI and data platform, gives organizations the tools and capabilities to put these FMs to work. It includes:

- IBM watsonx.ai is an AI studio that brings together FMs and traditional ML to build generative AI capabilities.

- IBM watsonx.data is an open lakehouse data store that scales AI workloads across all your data, wherever it resides.

- IBM watsonx.governance is an end-to-end, automated AI lifecycle governance toolkit for building responsible, transparent, and explainable AI workflows.

Another major trend is the growth of enterprise edge computing at industrial, manufacturing, retail, and telecom edge sites. AI at the enterprise edge processes data where the work is done, enabling near-real-time analysis. Because the enterprise edge is where massive volumes of enterprise data are generated, AI deployed there can deliver fast, actionable business insights.

Serving AI models at the edge enables near-real-time predictions while respecting data sovereignty and privacy requirements. It greatly reduces the latency of capturing, transmitting, transforming, and processing inspection data. Working at the edge also protects sensitive company data, lowers data transmission costs, and shortens response times.

Scaling edge AI deployments is difficult because of data constraints (heterogeneity, volume, and regulation) and resource constraints (compute, network connectivity, storage, and IT skills). The resulting challenges fall into two categories:

Time and cost to deploy: Each deployment requires installing, configuring, and testing many layers of hardware and software. Today, a service professional can take a week or two to complete the installation at each site, which limits how quickly and cost-effectively organizations can scale out their deployments.

Day-2 management: Because of the large number of deployed edge sites and their global distribution, staffing local IT at each site to monitor, manage, and upgrade the systems can be prohibitively expensive.
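
A back-of-the-envelope calculation shows why per-site installation time matters at scale. Only the "a week or two per site" figure comes from the text; the fleet size (200 sites), the number of parallel installation crews (10), and the five-workday week are hypothetical inputs chosen for illustration.

```python
def rollout_weeks(sites, days_per_site, crews, workdays_per_week=5):
    """Calendar weeks to install `sites` sites with `crews` working in parallel.

    Assumes each crew installs one site at a time and all crews work
    concurrently; travel and scheduling overhead are ignored.
    """
    sites_per_crew = -(-sites // crews)  # ceiling division
    return sites_per_crew * days_per_site / workdays_per_week


if __name__ == "__main__":
    # Hypothetical fleet: 200 edge sites, 10 installation crews.
    for label, days in (("one week", 5), ("two weeks", 10)):
        weeks = rollout_weeks(sites=200, days_per_site=days, crews=10)
        print(f"{label} per site -> {weeks:.0f} weeks for the fleet")
    # one week per site -> 20 weeks for the fleet
    # two weeks per site -> 40 weeks for the fleet
```

Under these assumptions, the rollout time grows linearly with the number of sites per crew, which is why cutting the per-site effort matters more than adding crews.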

Edge AI installations

IBM’s edge architecture addresses these challenges by bringing an integrated hardware/software (HW/SW) appliance model to edge AI deployments. It is built on several principles that help scale AI deployments:

- Policy-based, zero-touch provisioning of the full software stack

- Continuous monitoring of edge system health

- Centralized management and delivery of software, security, and configuration updates to many edge locations from a cloud-based hub for day-2 management
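
The day-2 principle above boils down to comparing the desired state held at the hub with the state reported by each spoke, then pushing only what has drifted. The following is a minimal sketch of that comparison under assumed data shapes; the component names, versions, and site IDs are hypothetical, and real tooling such as RHACM performs this reconciliation with its own policy engine rather than with code like this.

```python
DESIRED = {  # hypothetical desired state published by the hub
    "os-patch": "2023.10",
    "inference-server": "23.09",
    "fm-defect-model": "v3",
}


def plan_updates(desired, reported):
    """Return {site: {component: target_version}} for every drifted component.

    `reported` maps site ID -> {component: installed_version}. A component
    that is missing on a site also counts as drift.
    """
    plan = {}
    for site, installed in reported.items():
        drift = {
            name: version
            for name, version in desired.items()
            if installed.get(name) != version
        }
        if drift:
            plan[site] = drift
    return plan


if __name__ == "__main__":
    reported = {
        "site-001": dict(DESIRED),                          # up to date
        "site-002": {**DESIRED, "fm-defect-model": "v2"},   # stale model
        "site-003": {"os-patch": "2023.10"},                # missing components
    }
    for site, updates in plan_updates(DESIRED, reported).items():
        print(site, updates)
```

Because the hub only ships the difference, the same loop scales from a handful of sites to thousands without local IT staff at each one.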

Enterprise AI deployments at the edge can be scaled using a distributed hub-and-spoke architecture, in which a central cloud or enterprise data center acts as the hub and an edge-in-a-box appliance acts as a spoke. This hub-and-spoke model for hybrid cloud and edge environments best captures the balance needed to make optimal use of the resources required for FM operations.

Self-supervised pre-training of base large language models (LLMs) and other foundation models on large unlabeled datasets requires substantial GPU resources and is best performed at the hub. The virtually unlimited compute resources and large data stores in the cloud make it possible to pre-train models with very large parameter counts and to continually improve the accuracy of these base models.

On the other hand, tuning these base FMs for downstream tasks, which require only a few tens or hundreds of labeled data samples, and serving inference need only a few GPUs at the enterprise edge. This keeps sensitive labeled data (the company’s crown-jewel data) securely within the enterprise operational environment and reduces data transfer costs.
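
Why a few GPUs suffice at the edge: the expensive part, learning general representations, already happened at the hub, so the edge only has to fit a small task-specific component on a handful of labeled examples. The sketch below illustrates that idea with a deliberately simple stand-in, a nearest-centroid classifier over frozen FM embeddings, rather than gradient-based fine-tuning. The embedding vectors and the defect labels ("crack", "spalling") are made up; in practice the embeddings would come from the pre-trained FM's encoder.

```python
import math
from collections import defaultdict


def fit_centroids(embeddings, labels):
    """Average the frozen FM embeddings of each class (the only 'training')."""
    sums = {}
    counts = defaultdict(int)
    for vec, label in zip(embeddings, labels):
        if label not in sums:
            sums[label] = [0.0] * len(vec)
        sums[label] = [s + v for s, v in zip(sums[label], vec)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(centroids, vec):
    """Assign the class whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], vec))


if __name__ == "__main__":
    # Hypothetical 3-D embeddings; a real FM would emit hundreds of dimensions.
    train_x = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [0.1, 0.9, 0.2], [0.2, 0.8, 0.1]]
    train_y = ["crack", "crack", "spalling", "spalling"]
    centroids = fit_centroids(train_x, train_y)
    print(predict(centroids, [0.85, 0.15, 0.05]))  # -> crack
```

Real edge fine-tuning would typically update a classification head (or more) with gradient descent, but the compute profile is similar: small labeled data, small trainable component, frozen backbone.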

With a full-stack approach to edge deployment, data scientists can fine-tune, test, and deploy models in a single environment, shortening the development lifecycle for delivering new AI models to end users. Red Hat OpenShift Data Science (RHODS) and the newly launched Red Hat OpenShift AI provide tools for rapidly developing and deploying production-ready AI models in distributed cloud and edge environments.

Finally, serving the fine-tuned AI model at the enterprise edge reduces the latency of data collection, transmission, transformation, and processing. Decoupling pre-training in the cloud from fine-tuning and inference at the edge lowers operating costs by reducing the time required for inference jobs and the associated data transport costs.

Figure: Value proposition of edge-in-a-box FM fine-tuning and inference at the operational edge. In this example use case, a construction engineer uses an FM to identify defects in drone footage in near real time.
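
A rough estimate illustrates the data-transport saving from running inference at the edge and sending only results to the cloud. All figures below (a 50 Mbit/s video stream, 4 flight hours per day, 2,000 detections of about 200 bytes each) are hypothetical assumptions for illustration, not measurements from the demonstration.

```python
def daily_bytes(bitrate_mbps, hours):
    """Bytes produced by a stream at `bitrate_mbps` megabits/s for `hours` hours."""
    return bitrate_mbps * 1_000_000 / 8 * hours * 3600


if __name__ == "__main__":
    # Hypothetical: one drone streaming high-definition video at 50 Mbit/s,
    # 4 hours per day.
    raw = daily_bytes(bitrate_mbps=50, hours=4)
    # Hypothetical: edge inference ships back only detection records,
    # 2,000 per day at roughly 200 bytes each.
    results = 2_000 * 200
    print(f"raw footage to cloud: {raw / 1e9:.0f} GB/day")
    print(f"edge results to cloud: {results / 1e6:.1f} MB/day")
    # raw footage to cloud: 90 GB/day
    # edge results to cloud: 0.4 MB/day
```

The exact ratio depends entirely on the assumed bitrate and result size, but under any realistic inputs, shipping detections instead of footage cuts transfer volume by orders of magnitude.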

To demonstrate this value proposition end to end, an exemplar vision-transformer-based foundation model for civil infrastructure (pre-trained on public and custom industry-specific datasets) was fine-tuned and deployed for inference on a three-node edge (spoke) cluster. The software stack included Red Hat OpenShift Container Platform and Red Hat OpenShift Data Science. The edge cluster was connected to a cloud-based RHACM hub.

Zero-touch provisioning

Policy-based, zero-touch provisioning was achieved with Red Hat Advanced Cluster Management for Kubernetes (RHACM) policies and placement tags, which bind specific edge clusters to a set of software components and configurations. These components, spanning the full stack across compute, storage, network, and the AI workload, were installed using OpenShift operators, provisioning of the required application services, and an S3 bucket.
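
The placement mechanism described above is essentially label matching: each edge cluster carries labels, and each component declares the labels a cluster must have to receive it. The following is a minimal sketch of that binding logic; the label keys, cluster names, and component names are hypothetical, and RHACM expresses the same idea declaratively through its own placement and policy resources rather than in Python.

```python
CLUSTERS = {  # hypothetical edge clusters and their labels
    "edge-bridge-01": {"site-type": "civil-infra", "gpu": "true"},
    "edge-bridge-02": {"site-type": "civil-infra", "gpu": "false"},
    "edge-retail-01": {"site-type": "retail", "gpu": "true"},
}

COMPONENTS = {  # hypothetical component -> required labels (a label selector)
    "storage-operator": {},                                  # empty: every cluster
    "model-serving": {"gpu": "true"},
    "bridge-defect-fm": {"site-type": "civil-infra", "gpu": "true"},
}


def matches(labels, selector):
    """True if every key/value pair in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def place(clusters, components):
    """Map each cluster to the sorted list of components it should run."""
    return {
        name: sorted(comp for comp, sel in components.items() if matches(labels, sel))
        for name, labels in clusters.items()
    }


if __name__ == "__main__":
    for cluster, comps in place(CLUSTERS, COMPONENTS).items():
        print(cluster, comps)
```

Adding a new site then means labeling its cluster; the hub derives what to install without anyone touching the site.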

The pre-trained foundation model (FM) for civil infrastructure was fine-tuned in a Jupyter notebook in Red Hat OpenShift Data Science (RHODS), using labeled data to identify six types of defects on concrete bridges. Inference serving of the fine-tuned FM was demonstrated with a Triton server. The health of the edge system was monitored by aggregating hardware and software observability metrics through Prometheus into the cloud-based RHACM dashboard. Such FMs can be deployed at edge sites and applied to drone footage to detect defects in near real time, avoiding the cost of transporting large volumes of high-definition data to and from the cloud.
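
For readers wondering what a client request to the Triton server looks like: Triton's HTTP endpoint follows the KServe v2 inference protocol, where a JSON body listing named input tensors is POSTed to `/v2/models/<model>/infer`. The sketch below builds such a request with the standard library without sending it. The model name `bridge_defect_fm`, the tensor names `INPUT__0`/`OUTPUT__0`, and the tiny input shape are hypothetical placeholders; the real values come from the deployed model's configuration.

```python
import json
import urllib.request


def build_infer_request(base_url, model_name, pixels, shape,
                        input_name="INPUT__0", output_name="OUTPUT__0"):
    """Build (but do not send) a KServe v2 / Triton HTTP inference request.

    `pixels` is a flat, row-major list of floats whose length must equal the
    product of `shape`. The default tensor names are hypothetical.
    """
    expected = 1
    for dim in shape:
        expected *= dim
    if len(pixels) != expected:
        raise ValueError(f"expected {expected} values for shape {shape}, got {len(pixels)}")
    body = {
        "inputs": [
            {"name": input_name, "shape": list(shape), "datatype": "FP32", "data": pixels}
        ],
        "outputs": [{"name": output_name}],
    }
    url = f"{base_url.rstrip('/')}/v2/models/{model_name}/infer"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical model name and a tiny 1x3x2x2 "image" for illustration.
    req = build_infer_request("http://localhost:8000", "bridge_defect_fm",
                              [0.0] * 12, shape=(1, 3, 2, 2))
    print(req.get_method(), req.full_url)
    # Sending requires a running Triton server, for example:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.loads(resp.read()))
```

Port 8000 is Triton's default HTTP port; in a cluster, the URL would instead point at the service or route exposing the inference server.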

Summary

By combining an edge-in-a-box appliance with the IBM watsonx data and AI platform capabilities for foundation models (FMs), enterprises can run AI workloads for FM fine-tuning and inference at the operational edge. The appliance supports the hub-and-spoke model for centralized management, automation, and self-service, and it can handle complex use cases out of the box. With repeatable results, resilience, and security built in, edge FM deployments that once took weeks can be completed in hours.
