BigQuery and Document AI integration enables document analytics and generative AI use cases

As digital transformation accelerates, organizations are generating enormous volumes of text and other document data, which holds great potential for insights and for powering new generative AI use cases. To help put this data to work, Google has introduced an integration between BigQuery and Document AI that makes it easy to extract insights from document data and build new large language model (LLM) applications.

BigQuery users can now create Document AI Custom Extractors, powered by Google’s foundation models, and customize them based on their own documents and data. These customized models can then be called from BigQuery to extract structured data from documents in a governed and secure way, with the simplicity and power of SQL.

Before this integration, some customers tried to build independent Document AI pipelines, which meant manually curating extraction logic and schemas. Because there was no native integration, they had to build custom infrastructure to synchronize data and keep it consistent.

As a result, every document analytics project became a substantial undertaking requiring significant investment. With this integration, customers can now quickly create remote models in BigQuery for their custom extractors in Document AI and use them to run document analytics and generative AI at scale, opening up new possibilities for data-driven insights and innovation.

An integrated and governed data-to-AI experience

In the Document AI Workbench, creating a custom extractor takes just three steps:

- Define the data you need to extract from your documents. This is called the document schema; it is stored with each version of the custom extractor and is accessible from BigQuery.

- Optionally, provide additional annotated documents as examples of the extraction.

- Train the custom extractor’s model, based on the foundation models provided by Document AI.

In addition to custom extractors that require training, Document AI offers ready-to-use extractors in the processor gallery for expenses, receipts, invoices, tax forms, government IDs, and many other scenarios. You can use these immediately, without following the steps above.

Once the custom extractor is ready, you can use BigQuery Studio to analyze documents with SQL in the following four steps:

Register a BigQuery remote model for the extractor using SQL. The model understands the document schema created above, calls the custom extractor, and parses the results.

Create object tables using SQL for the documents stored in Cloud Storage. You can govern the unstructured data in these tables by setting row-level access policies, which restrict users’ access to specific documents and thereby limit what the AI can process, for privacy and security.

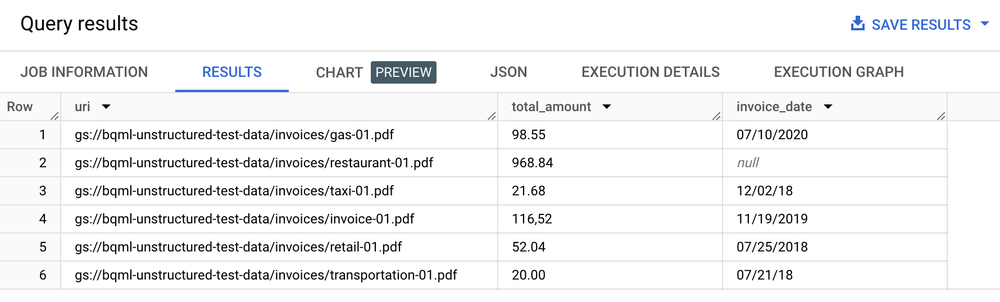

To extract the relevant fields, use the ML.PROCESS_DOCUMENT function on the object table, which makes inference calls to the API endpoint. You can also filter which documents are processed by adding a WHERE clause outside the function. The function returns a structured table in which each column is an extracted field.

Join the extracted data with other BigQuery tables to combine structured and unstructured data and generate business value.
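The four steps above could be sketched roughly as follows. This is a minimal illustration rather than a reference implementation: the project, dataset, connection, bucket, processor path, the `orders` table, and the extracted column names (`invoice_id`, `total_amount`) are all hypothetical placeholders. The SQL is held in Python strings so the statements can be reviewed before they are sent, and `run_pipeline` only assumes a client object that exposes `query(sql).result()`, as `google.cloud.bigquery.Client` does.

```python
# All identifiers below (project, dataset, connection, bucket, processor path,
# column names, the `orders` table) are hypothetical placeholders.
PROJECT, DATASET = "my-project", "docs"
CONNECTION = f"{PROJECT}.us.my-connection"
PROCESSOR = (
    f"projects/{PROJECT}/locations/us/processors/"
    "PROCESSOR_ID/processorVersions/VERSION_ID"
)
MODEL = f"`{PROJECT}.{DATASET}.invoice_parser`"
OBJECTS = f"`{PROJECT}.{DATASET}.invoice_files`"
FIELDS = f"`{PROJECT}.{DATASET}.invoice_fields`"
ORDERS = f"`{PROJECT}.{DATASET}.orders`"

STATEMENTS = [
    # Step 1: register a remote model that points at the Document AI extractor.
    f"""CREATE OR REPLACE MODEL {MODEL}
REMOTE WITH CONNECTION `{CONNECTION}`
OPTIONS (
  remote_service_type = 'CLOUD_AI_DOCUMENT_V1',
  document_processor = '{PROCESSOR}'
)""",
    # Step 2a: create an object table over the documents in Cloud Storage.
    f"""CREATE OR REPLACE EXTERNAL TABLE {OBJECTS}
WITH CONNECTION `{CONNECTION}`
OPTIONS (
  object_metadata = 'SIMPLE',
  uris = ['gs://my-bucket/invoices/*']
)""",
    # Step 2b: restrict which documents a group of users can see (and process).
    f"""CREATE OR REPLACE ROW ACCESS POLICY finance_only
ON {OBJECTS}
GRANT TO ('group:finance@example.com')
FILTER USING (STARTS_WITH(uri, 'gs://my-bucket/invoices/finance/'))""",
    # Step 3: run the extractor; the WHERE clause sits outside the function.
    f"""CREATE OR REPLACE TABLE {FIELDS} AS
SELECT *
FROM ML.PROCESS_DOCUMENT(MODEL {MODEL}, TABLE {OBJECTS})
WHERE content_type = 'application/pdf'""",
    # Step 4: join the extracted fields with an existing structured table.
    f"""SELECT o.order_id, f.total_amount, f.uri
FROM {FIELDS} AS f
JOIN {ORDERS} AS o
  ON o.invoice_id = f.invoice_id""",
]


def run_pipeline(client):
    """Run each statement in order and return the results.

    `client` is expected to behave like google.cloud.bigquery.Client,
    i.e. client.query(sql) returns a job whose result() blocks until done.
    """
    return [client.query(sql).result() for sql in STATEMENTS]


if __name__ == "__main__":
    # Print the statements for review instead of executing them.
    for sql in STATEMENTS:
        print(sql, end=";\n\n")
```

Keeping the statements in one ordered list makes the dependency between the steps explicit: the extraction in step 3 needs both the remote model and the object table to exist first.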

Use cases for summarization, text analytics, and other document analysis

Once you have extracted text from your documents, you can perform document analytics in several ways:

Use BigQuery ML for text analytics: BigQuery ML supports training and deploying text models in a variety of ways. For example, you can use BigQuery ML to classify product feedback into categories or to identify customer sentiment in support calls. For Python users, BigQuery DataFrames also offers pandas and scikit-learn-like APIs for text analysis on your data.

Use the PaLM 2 LLM to summarize the documents: BigQuery’s ML.GENERATE_TEXT function calls the PaLM 2 model to generate text that can be used to summarize the documents. For example, you can combine Document AI and PaLM 2 to extract and summarize customer feedback using BigQuery SQL.

Combine document metadata with structured data from BigQuery tables: This brings structured and unstructured data together for more powerful applications.
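As a rough illustration of the summarization approach described above, the helper below builds an ML.GENERATE_TEXT query. It is a sketch under assumptions: it presumes a remote LLM model has already been registered in BigQuery, and the model, table, and column names passed in are hypothetical. ML.GENERATE_TEXT reads its input from a column named `prompt`, so the inner query builds that column from the extracted text.

```python
def build_summary_query(llm_model, source_table, text_column,
                        id_column="uri", max_output_tokens=256):
    """Return a BigQuery SQL string that asks a remote LLM to summarize each row.

    All arguments are caller-supplied names; nothing here is validated
    against BigQuery, so treat the output as a draft to review.
    """
    return f"""SELECT *
FROM ML.GENERATE_TEXT(
  MODEL `{llm_model}`,
  (
    SELECT {id_column},
           CONCAT('Summarize this customer comment in one sentence: ',
                  {text_column}) AS prompt
    FROM `{source_table}`
  ),
  STRUCT(0.2 AS temperature, {int(max_output_tokens)} AS max_output_tokens)
)"""


if __name__ == "__main__":
    # Hypothetical model and table names, for illustration only.
    print(build_summary_query(
        "my-project.docs.llm_model",
        "my-project.docs.feedback_fields",
        "comment_text",
    ))
```

A low temperature is used here on the assumption that summaries should stay close to the source text; that value is a choice to tune, not a recommendation from the article.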


Implement search and generative AI use cases

Once you have extracted structured text from your documents, BigQuery’s search and indexing features let you build indexes optimized for needle-in-the-haystack queries, unlocking powerful search capabilities.
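A needle-in-the-haystack lookup over the extracted text might be sketched like this. The table and index names are hypothetical, and the helpers only build SQL strings; they assume the extracted fields already live in a regular BigQuery table.

```python
def create_search_index_sql(table, index_name="doc_text_idx"):
    """Build a statement that indexes every supported column of `table`."""
    return (
        f"CREATE SEARCH INDEX IF NOT EXISTS {index_name} "
        f"ON `{table}` (ALL COLUMNS)"
    )


def search_sql(table, term):
    """Build a query that returns rows where any indexed column matches `term`."""
    # Escape backslashes and single quotes so the term stays inside the literal.
    escaped = term.replace("\\", "\\\\").replace("'", "\\'")
    return f"SELECT * FROM `{table}` AS t WHERE SEARCH(t, '{escaped}')"


if __name__ == "__main__":
    table = "my-project.docs.invoice_fields"  # hypothetical table name
    print(create_search_index_sql(table))
    print(search_sql(table, "acme"))
```

Passing the table alias to SEARCH searches across all columns, which suits extracted documents where the field that holds the match is not known in advance.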

In addition, this integration makes it possible to run text-file processing with SQL and custom Document AI models for privacy filtering, content safety checks, and token chunking, which opens up new generative LLM applications. Combined with other information, the extracted text also simplifies curating the training corpus needed to fine-tune large language models.
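To make the idea of token chunking concrete, here is a small Python sketch. It is not the integration's own mechanism, which the article attributes to SQL and Document AI models; it is a stand-in that approximates tokens with whitespace-separated words and splits text into overlapping windows so that each piece fits within a model's input limit.

```python
def chunk_words(text, max_words=200, overlap=20):
    """Split `text` into chunks of at most `max_words` words.

    Consecutive chunks share `overlap` words so that sentences cut at a
    boundary still appear with some context in the next chunk.
    Words are a rough proxy for tokens; a real tokenizer would differ.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")

    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(10))
    for chunk in chunk_words(sample, max_words=4, overlap=1):
        print(chunk)
```

The overlap trades some duplicated text for continuity across chunk boundaries; setting it to zero yields disjoint chunks.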

Additionally, you can build LLM use cases on governed enterprise data, supported by BigQuery’s embedding generation and vector index management capabilities. By synchronizing this index with Vertex AI, you can build retrieval-augmented generation use cases for a more governed and streamlined AI experience.
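The retrieval step of a retrieval-augmented generation flow can be illustrated conceptually in plain Python. This sketch does not use BigQuery's vector index or Vertex AI; it swaps in a brute-force cosine-similarity search over a small in-memory list, with made-up two-dimensional embeddings, purely to show what "find the most relevant chunks, then build the prompt" means.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, indexed_chunks, k=3):
    """Return the texts of the k chunks most similar to `query_vec`.

    `indexed_chunks` is a list of (chunk_text, embedding) pairs. A vector
    index serves the same purpose at scale without scanning every row.
    """
    scored = [(cosine_similarity(query_vec, emb), text)
              for text, emb in indexed_chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


def build_prompt(question, context_chunks):
    """Assemble an LLM prompt that grounds the question in retrieved text."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    # Toy embeddings, invented for illustration.
    chunks = [("invoice terms", [1.0, 0.0]),
              ("shipping policy", [0.0, 1.0]),
              ("payment schedule", [1.0, 1.0])]
    hits = top_k([1.0, 0.0], chunks, k=2)
    print(build_prompt("When is payment due?", hits))
```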
