BigQuery Omni Azure

What is BigQuery Omni?

BigQuery Omni is a multi-cloud data analytics solution that lets you use BigLake tables to perform BigQuery analytics on data kept in Azure Blob Storage or Amazon Simple Storage Service (Amazon S3). It offers a single interface for analyzing data from several public clouds, removing the need to relocate data and allowing you to learn from your data no matter where it is stored.

Many businesses store their data in several public clouds. Because it is hard to gain insights across all of it, this data often ends up siloed. To analyze it, you need a multi-cloud data tool that is fast and affordable and that does not add the overhead of decentralized data governance. BigQuery Omni reduces this friction by providing a single interface.

To run BigQuery analytics on your external data, you must first connect to Amazon S3 or Blob Storage. To query that data, you then create a BigLake table that references the data in Amazon S3 or Blob Storage.

You can also move data between clouds using cross-cloud transfer, or query data across clouds using cross-cloud joins. BigQuery Omni thus offers a cross-cloud analytics solution that lets you analyze data where it resides and replicate it when needed.

Google BigQuery Omni

Operating hundreds of separate applications across multiple platforms is not unusual in today’s data-centric enterprises. The enormous amount of logs generated by these applications poses a serious problem for log analytics. Furthermore, accuracy and retrieval are made more difficult by the widespread use of multi-cloud solutions, since the dispersed nature of the logs may make it more difficult to derive valuable insights.

BigQuery Omni was created to help solve this problem and to lower overall costs compared with a traditional approach. This blog post goes over the specifics.

Log analysis involves several steps:

Gathering log data: Collect log data from the enterprise's applications and infrastructure. A common approach is to store it in JSONL format in an object storage service such as Google Cloud Storage. In a multi-cloud setup, moving raw log data between clouds can be prohibitively expensive.

Normalization of log data: Different applications and infrastructure produce different JSONL files. The fields in each file are specific to the application or infrastructure that produced it. To make analysis easier, these disparate fields are mapped onto a common set of fields, which lets data analysts run comprehensive and efficient analyses across the whole environment.

Indexing and storage: To lower storage and query expenses and improve query performance, normalized data should be stored effectively. Logs are often stored in a compressed columnar file format, such as Parquet.

Querying and visualization: Enterprises need to run analytics queries and build visualizations to find known threats, anomalies, or anti-patterns in the log data.

Data lifecycle: The usefulness of log data declines with age, while storage costs persist. To optimize costs, you need a data lifecycle process. A common practice is to archive logs after a month (log data older than a month is rarely queried) and to delete them after a year. This keeps crucial data available while keeping storage costs under control.
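The normalization step above can be sketched in a few lines of Python. This is a minimal illustration, not the pipeline the post describes: the application names, the source field names (`ts`, `lvl`, `msg`, and so on), and the common schema are all hypothetical.

```python
import json

# Hypothetical per-application mappings: source field -> common field.
FIELD_MAPS = {
    "web": {"ts": "timestamp", "lvl": "severity", "msg": "message"},
    "db": {"time": "timestamp", "level": "severity", "text": "message"},
}

# Hypothetical common schema shared by all normalized records.
COMMON_FIELDS = ("timestamp", "severity", "message", "application")


def normalize(line: str, application: str) -> dict:
    """Map one JSONL record onto the common set of fields."""
    record = json.loads(line)
    mapping = FIELD_MAPS[application]
    normalized = {common: record.get(source) for source, common in mapping.items()}
    normalized["application"] = application
    # Keep a stable column order; fields missing from the source become None.
    return {field: normalized.get(field) for field in COMMON_FIELDS}


web_line = '{"ts": "2024-01-01T10:15:00Z", "lvl": "ERROR", "msg": "timeout"}'
db_line = '{"time": "2024-01-01T10:16:30Z", "level": "WARN", "text": "slow query"}'

rows = [normalize(web_line, "web"), normalize(db_line, "db")]
for row in rows:
    print(row)
```

Both records end up with the same four columns, which is what makes a single query across applications possible.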

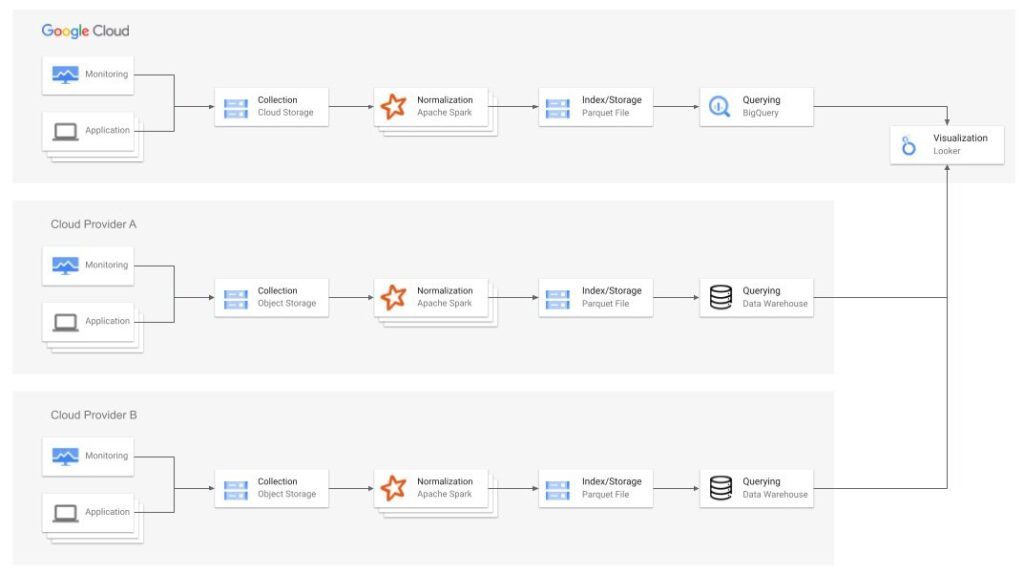

A common Architecture

Many businesses use the following architecture to apply log analysis in a multi-cloud setting:

This architecture has advantages and disadvantages.

On the plus side:

Data lifecycle: Object storage services already provide lifecycle management, so this step is easy to implement. For instance, in Cloud Storage you can define the following lifecycle policy: (a) delete any object older than a week, which removes the JSONL files produced during the collection step; (b) archive any object older than a month, which archives your Parquet files; and (c) delete any object older than a year, which removes your Parquet files.

Minimal egress costs: By storing the data locally, you can avoid transmitting large amounts of unprocessed information between cloud providers.
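As an illustration of the lifecycle policy described above, the sketch below builds a Cloud Storage lifecycle configuration as a Python dictionary and prints it as JSON. It assumes that raw logs end in `.jsonl` and normalized logs end in `.parquet`; those suffixes, and the exact age thresholds in days, are assumptions made for the example. Depending on the tool you use to apply it, the rules may need to be wrapped differently.

```python
import json

# Sketch of a Cloud Storage lifecycle configuration for the three policies.
# Assumption: raw logs end in .jsonl and normalized logs end in .parquet.
lifecycle_policy = {
    "rule": [
        {
            # (a) Delete raw JSONL files after a week.
            "action": {"type": "Delete"},
            "condition": {"age": 7, "matchesSuffix": [".jsonl"]},
        },
        {
            # (b) Archive Parquet files after a month.
            "action": {"type": "SetStorageClass", "storageClass": "ARCHIVE"},
            "condition": {"age": 30, "matchesSuffix": [".parquet"]},
        },
        {
            # (c) Delete Parquet files after a year.
            "action": {"type": "Delete"},
            "condition": {"age": 365, "matchesSuffix": [".parquet"]},
        },
    ]
}

print(json.dumps(lifecycle_policy, indent=2))
```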

On the minus side:

Normalization of log data: You must write and maintain an Apache Spark workload for every application whose logs you collect. At a time when (a) engineers are in short supply and (b) the use of microservices is expanding quickly, this is best avoided.

Querying: Spreading your data across several cloud providers limits the analysis and visualization you can do.

Querying: Excluding the files archived earlier in the data lifecycle is not straightforward. Using WHERE clauses to skip the partitions that hold archived files is prone to human error. With an Iceberg table, one option is to manage the table's manifest, adding and removing partitions as needed. However, editing the Iceberg table manifest by hand is difficult, and using a third-party solution only adds cost.

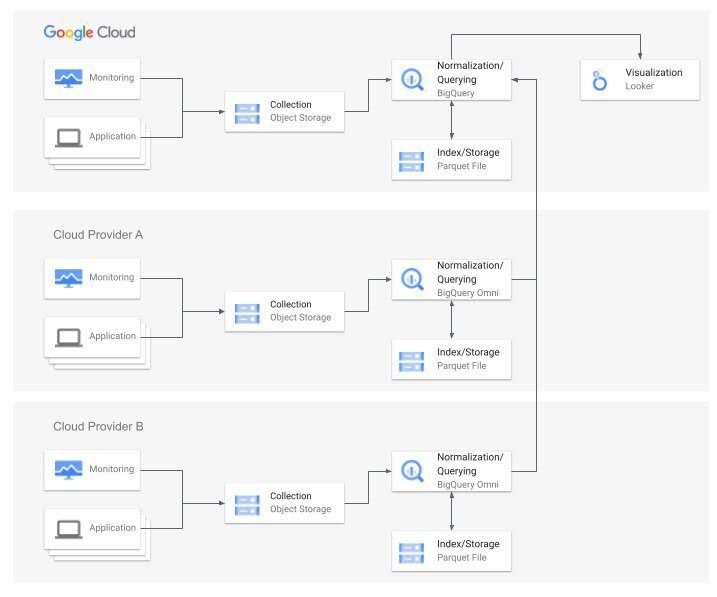

A better way to address all of these issues would be to use BigQuery Omni, which is shown in the architecture below.

This method’s primary advantage is the removal of several Spark workloads and the need for software engineers to code and maintain them. Having a single product (BigQuery) manage the entire process, aside from storage and visualization, is another advantage of this system. You gain from cost savings as well. Below, we’ll go into more detail about each of these points.

A streamlined normalization process

BigQuery can automatically detect the schema of JSONL files and create an external table that points to them. This is especially helpful when you work with many log schema formats. You can access the JSONL content of any application by defining a simple CREATE TABLE statement.
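To make the CREATE TABLE step concrete, the sketch below renders the kind of statement that creates an external table over JSONL files in Blob Storage. Every name in it (dataset, table, connection, storage account, container) is a hypothetical placeholder, and the helper function is only an illustration. The statement omits a column list on the assumption that BigQuery then detects the schema on its own.

```python
def biglake_table_ddl(table: str, connection: str, uri: str) -> str:
    """Render a CREATE EXTERNAL TABLE statement for JSONL files in external storage."""
    return (
        f"CREATE EXTERNAL TABLE `{table}`\n"
        f"WITH CONNECTION `{connection}`\n"
        "OPTIONS (\n"
        "  format = 'NEWLINE_DELIMITED_JSON',\n"
        f"  uris = ['{uri}']\n"
        ");"
    )


# All identifiers below are placeholders for illustration.
ddl = biglake_table_ddl(
    table="logs_dataset.app_logs_jsonl",
    connection="azure-eastus2.logs_connection",
    uri="azure://examplestorageaccount.blob.core.windows.net/logs/app/*",
)
print(ddl)
```

For data in Amazon S3, the same shape would apply with an AWS connection and an `s3://` URI.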

From there, you can schedule BigQuery to export the JSONL external table into compressed Parquet files, Hive-partitioned by hour. The query below is an EXPORT DATA statement that can be scheduled to run once every hour. Its SELECT statement picks up only the log data ingested during the previous hour and converts it into Parquet files with normalized columns.

    -- Start of the previous hour, for example 09:00:00 when run at 10:42.
    DECLARE hour_ago_rounded_string STRING;
    DECLARE hour_ago_rounded_timestamp DEFAULT TIMESTAMP_TRUNC(TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 1 HOUR), HOUR);

    -- Format that hour as the Hive partition value.
    SET (hour_ago_rounded_string) = (
      SELECT AS STRUCT FORMAT_TIMESTAMP("%Y-%m-%dT%H:00:00Z", hour_ago_rounded_timestamp, "UTC")
    );

    -- Export the previous hour of logs as GZIP-compressed Parquet files.
    EXPORT DATA
      OPTIONS (
        uri = CONCAT('[MY_BUCKET_FOR_PARQUET_FILES]/ingested_date=', hour_ago_rounded_string, '/logs-*.parquet'),
        format = 'PARQUET',
        compression = 'GZIP',
        overwrite = true)
    AS (
      SELECT [MY_NORMALIZED_FIELDS] EXCEPT(ingested_date)
      FROM [MY_JSONL_EXTERNAL_TABLE] AS jsonl_table
      WHERE
        -- Assumes jsonl_table.timestamp is a TIMESTAMP column.
        TIMESTAMP_TRUNC(jsonl_table.timestamp, HOUR) = hour_ago_rounded_timestamp
    );
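The partition layout that this query produces can be illustrated outside BigQuery. The sketch below mirrors the rounding and formatting logic in Python so you can see which URI a given run would write to. The bucket name is a hypothetical placeholder.

```python
from datetime import datetime, timedelta, timezone


def previous_hour_uri(now: datetime, bucket_prefix: str) -> str:
    """Build the Hive-style export URI for the hour before `now`."""
    hour_ago = (now - timedelta(hours=1)).astimezone(timezone.utc)
    # Truncate to the start of the hour, like TIMESTAMP_TRUNC(..., HOUR).
    rounded = hour_ago.replace(minute=0, second=0, microsecond=0)
    partition = rounded.strftime("%Y-%m-%dT%H:00:00Z")
    return f"{bucket_prefix}/ingested_date={partition}/logs-*.parquet"


# Hypothetical bucket; a run at 10:42:07 UTC exports the 09:00 partition.
now = datetime(2024, 1, 1, 10, 42, 7, tzinfo=timezone.utc)
uri = previous_hour_uri(now, "s3://example-parquet-bucket")
print(uri)
# s3://example-parquet-bucket/ingested_date=2024-01-01T09:00:00Z/logs-*.parquet
```

Because each run overwrites exactly one hourly partition, rerunning a failed export for the same hour does not duplicate data.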

A uniform querying procedure for all cloud service providers

Using the same data warehouse platform across several cloud providers already makes querying easier, but BigQuery Omni's ability to perform cross-cloud joins is a game changer for log analytics. Before BigQuery Omni, combining log data from several cloud providers was difficult. Because of the volume of data, sending the raw data to a single primary cloud provider incurs large egress costs, while pre-processing and filtering it first limits the analytics you can run on it. With cross-cloud joins, you can run a single query across several clouds and analyze the results.

Reduces TCO

The last and most significant advantage of this architecture is that it lowers total cost of ownership (TCO). This shows up in three ways:

Decreased engineering resources: Removing Apache Spark from this process helps for two reasons. First, you no longer need a software engineer to write and maintain Spark code. Second, deployment is faster, and the log analytics team can handle it themselves using standard SQL queries. Because BigQuery and BigQuery Omni are PaaS offerings, their shared responsibility model extends to data in AWS and Azure.

Lower compute resources: Apache Spark does not always provide the most cost-effective environment. An Apache Spark solution consists of the virtual machine (VM), the Apache Spark platform, and the application itself. BigQuery, by contrast, uses slots (virtual CPUs, not virtual machines), and the export query is converted into C-compiled code during the export process, which can make this particular task faster.

Lower egress expenses: BigQuery Omni eliminates the need to transfer raw data between cloud providers in order to get a consolidated view of the data by processing data in-situ and egressing only results through cross-cloud joins.

What is the best way to use BigQuery in this setting?

BigQuery has two compute pricing models for query execution:

On-demand pricing (per TiB): This model charges you for the number of bytes each query processes, with the first 1 TiB of query data processed each month free of charge. Because log analytics workloads process large volumes of data, this model is not recommended here.

Capacity pricing (per slot-hour): This model charges you for the compute capacity used to run queries over time, measured in slots (virtual CPUs). It uses BigQuery editions. You can use the BigQuery autoscaler, or you can purchase slot commitments, which are dedicated capacity that is always available for your workloads and costs less than on-demand.

As an empirical test, Google assigned 100 slots (baseline 0, maximum slots 100) to a project that exported log JSONL data into compressed Parquet format. With this configuration, BigQuery processed 1 PB of data per day without using up all 100 slots.
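The arithmetic behind this comparison can be sketched as follows. The slot count and daily volume come from the test described above. The two prices are placeholders chosen only to make the calculation concrete and are not actual BigQuery prices. The sketch also assumes that 1 PB means 10^15 bytes and that a month has 30 days.

```python
HOURS_PER_DAY = 24
BYTES_PER_TIB = 2**40

# Figures from the test described above.
MAX_SLOTS = 100
BYTES_PER_DAY = 10**15  # 1 PB, assuming the decimal definition

# Placeholder prices for illustration only; NOT actual BigQuery prices.
PRICE_PER_SLOT_HOUR = 0.05
PRICE_PER_TIB = 5.00
FREE_TIB_PER_MONTH = 1


def slot_hours(slots: int, days: int) -> int:
    """Slot-hours consumed if `slots` run around the clock for `days`."""
    return slots * HOURS_PER_DAY * days


def capacity_cost_ceiling(slots: int, days: int) -> float:
    """Upper bound on capacity cost: assumes every slot is busy all the time."""
    return slot_hours(slots, days) * PRICE_PER_SLOT_HOUR


def on_demand_cost(bytes_per_day: int, days: int) -> float:
    """On-demand cost for one month of `days` days, after the free tier."""
    tib = bytes_per_day * days / BYTES_PER_TIB
    return max(tib - FREE_TIB_PER_MONTH, 0) * PRICE_PER_TIB


days = 30
print(f"slot-hours per day: {slot_hours(MAX_SLOTS, 1)}")
print(f"capacity ceiling:   {capacity_cost_ceiling(MAX_SLOTS, days):,.2f}")
print(f"on-demand:          {on_demand_cost(BYTES_PER_DAY, days):,.2f}")
```

The capacity figure is a ceiling: with a baseline of 0 and autoscaling, slots that are not needed are not billed, and the test did not use all 100. Substitute the current prices for your region and edition before drawing conclusions.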

This blog post proposed an architecture that replaces Apache Spark applications with SQL queries running on BigQuery Omni, with the goal of reducing the TCO of log analytics workloads in a multi-cloud environment. Depending on your data environment, this approach can lower engineering, compute, and egress costs while reducing overall DevOps complexity.

BigQuery Omni pricing

Please refer to BigQuery Omni pricing for details on pricing and time-limited promotions.