Utilize BigQuery history-based optimizations to increase query performance by up to 100 times.

Users search everywhere for insights, saturating the data warehouse with a wide range of queries to uncover the answers they want. Many of those searches are very similar to one another, and some of them use a lot of computing power. Google Cloud created BigQuery history-based optimizations, a novel query optimization method that uses past executions of related queries to find and implement further query execution enhancements, in order to significantly accelerate query execution. They can improve a number of performance measures, including as elapsed time, slot time, and processed bytes, by speeding up and/or reducing the amount of resources used by queries.

BigQuery history-based optimization can confidently target high-reward optimizations that are well-suited for a particular workload with the advantage of actual, historical data from prior executions of similar queries.

Google observed cases where query speed on customer workloads increased by up to 100x during the public preview of BigQuery history-based optimization, and these enhancements are now widely accessible.

This blog post explains the BigQuery history-based optimization’ underlying technology, their advantages, and how to get started.

About history-based optimizations

In order to apply additional optimizations and further improve query performance, including slot time consumed and query latency, BigQuery history-based optimization leverage data from previously completed executions of similar queries. When history-based optimization is used, for instance, the initial query execution may take 60 seconds, but if a history-based optimization was found, the second query run may only take 30 seconds. Until no further optimizations need to be made, this process keeps on.

An illustration of how history-based optimizations using BigQuery operate is provided below:

| Execution count | Query slot time consumed | Notes |

|---|---|---|

| 1 | 60 | Original execution. |

| 2 | 30 | First history based-optimization applied. |

| 3 | 20 | Second history based-optimization applied. |

| 4 | 21 | No additional history based-optimizations to apply. |

| 5 | 19 | No additional history based-optimizations to apply. |

| 6 | 20 | No additional history based-optimizations to apply. |

Only when there is a high degree of confidence that the query performance will improve are history-based improvements used. Additionally, an optimization is revoked and not applied in subsequent query executions if it does not significantly increase query performance.

It simply works

To provide enhancements without requiring user input, Google Cloud created BigQuery history-based optimization. No more setups or actions are required from you because the infrastructure is self-tuning and self-correcting.

In order to find workload-specific optimizations that can be applied to the subsequent execution of a similar query, BigQuery examines the statistical data following each query execution. User workloads are unaffected by this background activity. More optimizations can be found and implemented after the subsequent execution confirms that the optimization is helpful, improving query performance incrementally and iteratively.

In the rare event that optimization does not considerably improve (or even impair) query performance, BigQuery history-based optimization is robust and will self-correct to prevent the use of that particular improvement for any subsequent query run. You don’t have to get involved!

Query matching

In order to minimize the danger of applying discovered optimizations to radically different questions, BigQuery employs an advanced query-matching technique to maximize the number of requests that can share them.

Simple query adjustments, such as changing whitespace or comment strings, reuse all optimizations that have already been found. The execution of queries is unaffected by such modifications.

Depending on whether BigQuery is certain that a particular optimization will benefit the changed query, nontrivial changes, such as changing the values of constants or query parameters, may reuse certain previously found optimizations and reject others.

BigQuery disregards all previously found optimizations if the query is significantly altered, for as by querying a different source table or fields. This is due to the fact that the statistical information that was utilized to determine the optimization is probably no longer relevant to the updated query.

At the moment, BigQuery history-based optimization is limited to a single Google Cloud project.

Types of optimization

Four optimization categories are currently supported by BigQuery history-based optimization, which enhance current optimization techniques.

Join pushdown

By performing very selected joins initially, join pushdown seeks to minimize the amount of data handled.

BigQuery may detect very selective joins when a query has completed running if the join’s result (number of rows) is substantially less than its input. BigQuery may decide to perform the selective join earlier if it comes after less selective operations like aggregations or other joins. As seen below in Figure, this essentially “pushes down” the “join” in the execution order. This can cut down on the quantity of data that the remainder of the query must process, saving resources and speeding up query performance.

History-based join pushdown uses more information about data distribution to further enhance performance and apply it to more queries, even if BigQuery already optimizes some queries without the use of statistics from previous executions.

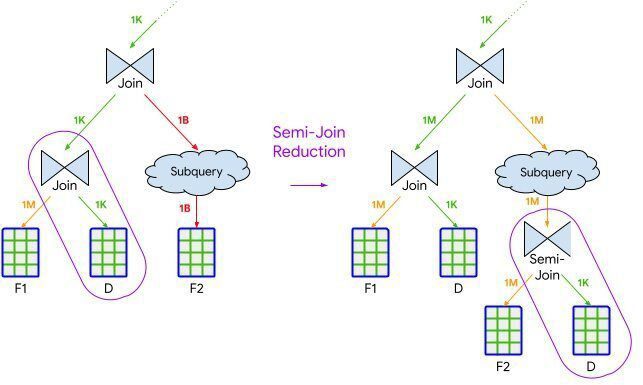

Semijoin reduction

By introducing selective semijoin operations throughout the query, semijoin reduction seeks to minimize the volume of data that BigQuery scans.

When a query has many parallel execution paths that are finally linked together, BigQuery may detect a very selective join (akin to join pushdown). Depending on the selective join, BigQuery may occasionally introduce new “semijoin” procedures that “reduce” the volume of data scanned and processed by those parallel execution routes, as illustrated in below Figure. Even though a semijoin is the logical model for this, partition pruning and other internal optimizations are typically used instead.

Join commutation

By switching the left and right sides of a join operation, join commutation seeks to minimize the amount of resources used.

The two sides of the join can be handled differently when performing a join operation. For instance, BigQuery might select one side of the join to create a hash table, which is then probed when scanning the other side of the join. In certain situations, BigQuery may determine that it could be more effective to switch the two sides of a join, lowering the resources used to do that join operation, because the two sides of a join can be “commutative” (the order does not alter the result).

Parallelism adjustment

By more effectively parallelizing the task, parallelism adjustment seeks to reduce query latency.

BigQuery runs queries in phases using a distributed parallel architecture. BigQuery selects an initial level of parallelism for each of these stages, but it may dynamically modify the parallelism level as it is being executed in reaction to patterns in the data that are noticed.

Given the known workload distribution of the query’s prior executions, BigQuery may now “adjust” the initial amount of “parallelism” with history-based optimizations. Especially for queries with big, compute-intensive phases, this enables BigQuery to parallelize work more effectively, decrease overhead, and achieve lower latency.

Give it a try

Optimizations based on history are now widely accessible. They will be activated by default for all clients over the next few months, but you can enable them sooner for your project or company. You have the ability to examine which BigQuery history-based optimization was used (if any) in INFORMATION_SCHEMA and comprehend how they affected your jobs.

This sample query can be used to test history-based optimizations:

Choose or start a project that hasn’t used this sample query yet; if not, you might only see the query that has already been optimized.

Turn on optimizations based on history for the chosen project.

Turn off the cached results retrieval.

Run the sample query below, which makes use of a public BigQuery dataset, and record the amount of time that has passed:

WITH

january_first_views AS (

SELECT * FROM bigquery-public-data.wikipedia.pageviews_2024

WHERE TIMESTAMP_TRUNC(datehour, DAY) = TIMESTAMP(‘2024-01-01’)

),

february_first_views AS (

SELECT * FROM bigquery-public-data.wikipedia.pageviews_2024

WHERE TIMESTAMP_TRUNC(datehour, DAY) = TIMESTAMP(‘2024-02-01’)

),

march_first_views AS (

SELECT * FROM bigquery-public-data.wikipedia.pageviews_2024

WHERE TIMESTAMP_TRUNC(datehour, DAY) = TIMESTAMP(‘2024-03-01’)

),

april_first_views AS (

SELECT * FROM bigquery-public-data.wikipedia.pageviews_2024

WHERE TIMESTAMP_TRUNC(datehour, DAY) = TIMESTAMP(‘2024-04-01’)

),

average_views AS (

SELECT

j.title,

SUM(j.views + f.views + m.views + a.views) / 4 AS average_views

FROM january_first_views AS j

INNER JOIN february_first_views AS f USING (wiki, title)

INNER JOIN march_first_views AS m USING (wiki, title)

INNER JOIN april_first_views AS a USING (wiki, title)

GROUP BY title

)

SELECT title, average_views.average_views

FROM average_views

INNER JOIN bigquery-public-data.wikipedia.wikidata AS wikidata

ON wikidata.en_wiki = average_views.title

WHERE wikidata.en_label = ‘Google’;

Run the demo query once again and compare the time difference between the initial and subsequent executions.

Using the task IDs for each execution, you can optionally verify if history-based optimizations were used for every run by following the instructions here.

Considering the future

BigQuery history-based optimization is more than just a static collection of four new improvements; they are a platform for continuous investment in BigQuery’s optimization capabilities. Without actual statistical data not just estimates from previous runs of comparable queries, a new class of optimizations would be impossible to execute. This technology makes this possible. Its capacity for self-learning and self-correction acts as a safeguard against unforeseen problems.

Its objective is to make BigQuery smarter by expanding current and upcoming history-based optimizations and allowing it to automatically adjust to shifting workloads. You won’t need to do anything; queries will simply execute more quickly and more cheaply. Follow the above instructions to give it a try today, and then use the BigQuery console to tell us what you think.