BigQuery blockchain data analysis

Through Google BigQuery public datasets, which we extended with six more in 2019, Google Cloud collaborated with the community to democratize blockchain data at the beginning of 2018. Eleven more of the most popular blockchains have been added to the BigQuery public datasets today. Additionally, we’re updating the program’s already-existing datasets.

We’re taking this action in response to feedback from blockchain foundations, Web3 analytics companies, partners, developers, and consumers who desire a more thorough overview of the cryptocurrency environment and the ability to query additional chains. They seek clarification on ambiguous statements and difficult inquiries such, “How many NFTs were minted today across three specific chains?” How do transaction costs vary across chains? likewise, “How many wallets are currently active on the top EVM chains?”

The Web3 community will be better able to respond to these and other queries by having a more comprehensive list of chains available through BigQuery and new methods to access data, all without the burden of running nodes or keeping an indexer. To understand the movement of assets from one wallet to another, the most popular tokens, and how users are engaging with smart contracts, customers may now query the whole on-chain transaction history off-chain.

Chain extension

The 11 popular chains we’re included in the BigQuery open datasets are listed below:

- Avalanche

- Arbitrum

- Cronos

- Ethereum (Görli)

- Fantom (Opera)

- Near

- Optimism

- Polkadot

- Polygon Mainnet

- Polygon Mumbai

- Tron

By adding Satoshis (sats) / Ordinals to the open-source blockchain-ETL datasets for developers to query, we are also enhancing the existing Bitcoin BigQuery dataset. In their most basic form, ordinals are a system of numeration for sats.

Datasets that Google Cloud manages

A variety of data alternatives are something we wish to provide users. We are developing first-party Google Cloud managed datasets that provide extra feature capabilities in addition to community maintained datasets on BigQuery. For instance, we developed a Google Cloud managed Ethereum dataset (goog_blockchain_ethereum_mainnet.us) that provides a complete representation of the data model native to Ethereum with curated tables for events, in addition to the already existing Ethereum community dataset (crypto_ethereum). Customers searching for deeper research on Ethereum will be able to quickly query wallet balances, transactions involving certain tokens (ERC20, ERC721, ERC1155), or interactions with smart contracts by accessing derived data.

For our clients and the Web3 community, we aim to provide solutions that are dependable and quick at the corporate level. As an example, consider the following query against the goog_blockchain_ethereum_mainnet.us database:

For example, suppose we are interested in learning “How many ETH transactions were executed daily (last 7 days)?”

SELECT DATE(block_timestamp) as date, COUNT(*) as txns FROM `bigquery-public-data.goog_blockchain_ethereum_mainnet_us.transactions`

WHERE DATE(block_timestamp) > DATE_SUB(CURRENT_DATE(), INTERVAL 7 DAY)

GROUP BY 1

ORDER BY 1 DESC;

SELECT DATE(block_timestamp) as date, COUNT(*) as txns FROM `bigquery-public-data.crypto_ethereum.transactions`

WHERE DATE(block_timestamp) > DATE_SUB(CURRENT_DATE(), INTERVAL 7 DAY)

GROUP BY 1

ORDER BY 1 DESC;

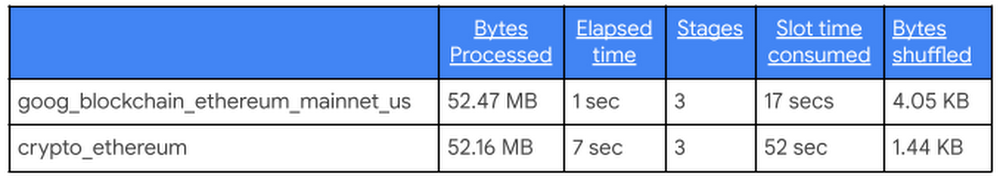

You can see from the results above how utilizing the goog_ dataset is quicker, uses less slot time, and is competitive in terms of bytes processed.

More information

We collected input from users and developers to better understand the community’s pain issues, and it became evident that features like numerical accuracy are crucial for more precisely determining the price of certain currencies. By introducing the UDF for greater UNIT256 integration and BIGNUMERIC1 support, we are increasing the accuracy of the blockchain datasets. Customers will have access to greater decimal digits for their blockchain data as a result, which will also lessen calculation rounding mistakes.

Facilitating off-chain access to on-chain data

Customers that are interested in blockchain data now need to first get access to the appropriate nodes, then create and maintain an indexer that converts the data into a queryable data model. They then carry out this procedure for any treatment that interests them.

Google are making on-chain data available off-chain for easy consumption and composability by using our substantial experience in scalable data processing, allowing developers to access blockchain data without nodes. Customers may now access blockchain data just as readily as they could their own data, according to this. Customers may get a holistic view of their users and their company by combining chain data with application data.

Last but not least, we have seen the usage of this information in various end-user programs like Looker and Google Sheets.

Growing together

Google have helped the community over the last five years by providing a public blockchain dataset, and we will keep advancing these efforts by providing a variety of data choices and user choice, ranging from community-owned to Google controlled high-quality alternatives and real-time data. Working with partners that want to make public data available to developers or monetize datasets for curated feeds and insights excites us. Google are also available to collaborate with new businesses and data suppliers that wish to create specific Web3 distribution and syndication channels that are cloud-native.

[…] our opinion here at Google Cloud, this would make it possible for analysts to speed up the incorporation of predictive analytics […]

[…] which takes its name from the Portuguese term for “cloud,” will strengthen network resilience across the Atlantic and support the expansion of digital […]

[…] artificial intelligence, but the academic community is closely examining the convergence of blockchain and AI, and the Web3 community hasn’t been afraid to explore. For […]

[…] Text Analysis: BigQuery‘s Cutting-Edge […]

[…] this, use the BigQuery API or query settings to set the job location to the secondary region. BigQuery automatically directs your queries to the primary replica’s location if you don’t […]