How to benchmark application performance from the user's point of view

How do you know how well your application performs? More importantly, how well does it perform in the eyes of your end users?

In an era of exponential growth and unpredictable traffic spikes, understanding how well your application scales is not just a technical concern but a strategic necessity. Delivering the best possible performance to end users is essential, and benchmarking that performance is a crucial step toward meeting their expectations.

To get a comprehensive picture of how your application performs in real-world scenarios, benchmark complete critical user journeys (CUJs) as the user experiences them, not just individual components. Component-by-component benchmarking can miss bottlenecks and performance problems caused by network latency, external dependencies, and the interaction of multiple components. Simulating entire user flows gives you insight into the real user experience and helps you find and fix the performance problems that affect user engagement and satisfaction.
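As a minimal sketch of the difference between component and journey measurements, the hypothetical `time_journey` helper below runs the steps of a user journey in order and records both per-step durations and the end-to-end duration the user actually experiences. The helper and the step names are illustrative assumptions, not part of Locust or GKE; in a real benchmark each step would be an actual request to your application.

```python
import time
from typing import Callable, Dict, List, Tuple


# Hypothetical helper: run the steps of a critical user journey (CUJ) in order,
# recording how long each step took plus the end-to-end duration of the journey.
def time_journey(steps: List[Tuple[str, Callable[[], None]]]) -> Dict[str, float]:
    timings: Dict[str, float] = {}
    journey_start = time.perf_counter()
    for name, step in steps:
        step_start = time.perf_counter()
        step()  # e.g. load a page, search, check out (assumed steps)
        timings[name] = time.perf_counter() - step_start
    # The end-to-end figure is what the user perceives for the whole journey.
    timings["end_to_end"] = time.perf_counter() - journey_start
    return timings


if __name__ == "__main__":
    # Stand-in steps that only sleep; replace them with real calls to your app.
    journey = [
        ("load_home", lambda: time.sleep(0.01)),
        ("search", lambda: time.sleep(0.02)),
        ("checkout", lambda: time.sleep(0.03)),
    ]
    for step_name, seconds in time_journey(journey).items():
        print(f"{step_name}: {seconds * 1000:.1f} ms")
```

Reporting the journey total alongside the per-step numbers makes it visible when individually acceptable steps still add up to an unacceptable experience.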

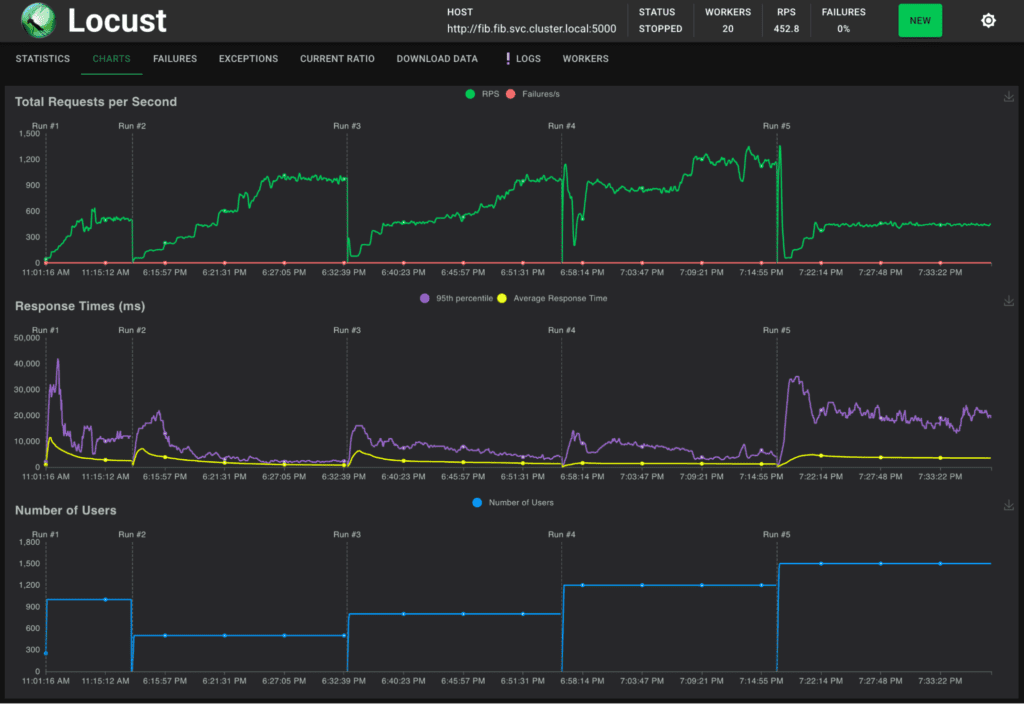

This blog post discusses why end-user perceived performance benchmarking belongs in modern application development and how to foster an organizational culture that benchmarks applications early and keeps benchmarking over time. Drawing on Google Kubernetes Engine (GKE), it also shows how to simulate complex user behavior with the open-source tool Locust for use in your own end-to-end benchmarking.

The importance of benchmarking

There are several reasons to build robust benchmarking practices into your application development process:

Proactive performance management: Benchmarking early and often helps developers identify and address performance bottlenecks early in the development cycle, which can save money, speed up time to market, and lead to smoother product launches. When built into testing procedures, benchmarking also provides a vital safety net: it catches performance regressions quickly so they can be fixed before they harm code quality or the user experience.
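To make the safety-net idea concrete, here is a minimal sketch of a regression check a test suite could run after a benchmark. It assumes you have collected per-request latencies in milliseconds and stored a baseline 95th-percentile (p95) latency from an earlier run; the function names, the choice of p95, and the 10% tolerance are illustrative assumptions.

```python
import statistics
from typing import Sequence


def p95(latencies_ms: Sequence[float]) -> float:
    # With n=20, statistics.quantiles returns 19 cut points;
    # the last one is the 95th percentile (default "exclusive" method).
    return statistics.quantiles(latencies_ms, n=20)[-1]


def check_regression(latencies_ms: Sequence[float],
                     baseline_p95_ms: float,
                     tolerance: float = 0.10) -> bool:
    """Return True if the current p95 is within `tolerance` of the baseline."""
    current = p95(latencies_ms)
    return current <= baseline_p95_ms * (1 + tolerance)
```

In a CI pipeline you might fail the build whenever `check_regression` returns `False`, so a slowdown is flagged in the change that introduced it.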

Continuous performance optimization: Applications are dynamic; they keep changing with user behavior, scale, and ongoing development. Frequent benchmarking makes it easier to track performance trends over time, so developers can assess the impact of updates, new features, and system changes. This helps keep the application responsive and consistently performant as conditions change.

Bridging the gap between development and production: Benchmarking real-world workloads, images, and scaling patterns as part of the development process gives a realistic picture of how the application will perform in production. This smooths the transition from development to deployment and helps developers address potential problems proactively.

Benchmarking scenarios that replicate real-world load patterns

As a developer, you should aim to benchmark your apps under conditions that closely resemble real-world situations, including deployment, scaling, and load patterns. This approach shows how well apps handle unexpected traffic spikes without degrading performance or the user experience.
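One way to model an unexpected traffic spike is a step function of user count over time. The hypothetical `spike_shape` function below returns a `(user_count, spawn_rate)` pair for a given elapsed time, or `None` when the test should end. That is the same contract as the `tick()` method of a Locust `LoadTestShape` subclass, so the logic could be moved into one. The baseline, spike size, and timings are made-up values for illustration.

```python
from typing import Optional, Tuple

# Assumed profile: steady baseline, sudden spike, then back to baseline.
BASELINE_USERS = 50
SPIKE_USERS = 500
SPIKE_START_S = 120
SPIKE_END_S = 240
TEST_END_S = 360


def spike_shape(run_time_s: float) -> Optional[Tuple[int, float]]:
    """Return (user_count, spawn_rate) for the elapsed time, or None to stop."""
    if run_time_s >= TEST_END_S:
        return None  # end the test
    if SPIKE_START_S <= run_time_s < SPIKE_END_S:
        # A high spawn rate makes the jump to the spike abrupt, like a real burst.
        return SPIKE_USERS, 100.0
    return BASELINE_USERS, 10.0
```

Inside a Locust `LoadTestShape`, `tick()` could simply return `spike_shape(self.get_run_time())`.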

The GKE engineering team runs extensive benchmarks across a range of scenarios to test and improve cluster and workload autoscalers. This helps the team understand how autoscaling systems adapt to changing demand while optimizing resource usage and maintaining peak application performance.
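As an illustration of what a workload autoscaler reacts to under load, the Kubernetes Horizontal Pod Autoscaler (HPA) computes the desired replica count as `ceil(currentReplicas * currentMetricValue / desiredMetricValue)` and skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). The sketch below reproduces only this core formula; it ignores stabilization windows, min/max replica bounds, and missing-metric handling, so it is a simplified model rather than GKE's actual implementation.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    # Ratio of observed metric (e.g. average CPU per pod) to the target value.
    ratio = current_metric / target_metric
    # Within tolerance: leave the replica count unchanged to avoid flapping.
    if abs(1.0 - ratio) <= tolerance:
        return current_replicas
    # Otherwise scale proportionally, rounding up.
    return math.ceil(current_replicas * ratio)
```

For example, 4 pods averaging twice their CPU target would scale to 8, while a 5% overshoot would leave the deployment at 4 pods. A benchmark that drives a realistic spike shows how quickly that proportional response actually reaches users.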

Application performance tools

Locust for performance benchmarking and realistic load testing

Locust is an advanced yet user-friendly load-testing tool that gives developers a thorough grasp of how well an application performs in real-world scenarios by simulating complex user behavior through scripting. Locust makes it possible to create different load scenarios by defining and instantiating “users” that carry out particular tasks.

In one example benchmark, Locust was used to simulate users requesting the 30th Fibonacci number from a web server. This produced a steady load of about 200 ms per request, with each connection closed and reestablished so that requests were load balanced across multiple pods.

```python
from locust import HttpUser, task


class Simple(HttpUser):
    @task
    def simple(self):
        # Close the connection after each request; otherwise users keep their
        # existing connections and won't get load balanced to new pods.
        headers = {"Connection": "close"}
        self.client.get("/calculate", headers=headers)
```

Locust makes simulating these complex user interactions comparatively simple. It can generate up to 10,000 requests per second on a single machine and can scale further by running distributed across multiple machines. Custom load shapes give you fine-grained control over the number of users and the spawn rate, so you can replicate real-world load patterns with users that exhibit a variety of load profiles. Locust natively supports HTTP/HTTPS for web and REST requests, and it can be extended to other systems, such as XML-RPC, gRPC, and various request-based libraries and SDKs.
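The server side of the Fibonacci benchmark is not included in this post. As a hypothetical stand-in for local experiments, the sketch below uses only Python's standard library to serve `/calculate`, computing the 30th Fibonacci number with deliberately naive recursion so that each request is CPU-bound. The handler, the `make_server` helper, and the port are assumptions, and per-request latency depends on your hardware, so it will not necessarily match the roughly 200 ms figure mentioned earlier.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def fib(n: int) -> int:
    # Intentionally exponential-time recursion to create CPU load per request.
    return n if n < 2 else fib(n - 1) + fib(n - 2)


class FibHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path != "/calculate":
            self.send_error(404)
            return
        body = str(fib(30)).encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int = 8080) -> ThreadingHTTPServer:
    # Call make_server().serve_forever() to start serving on all interfaces.
    return ThreadingHTTPServer(("", port), FibHandler)
```

With the server running, you could point the `Simple` Locust user at it, for example with `locust -f locustfile.py --host http://localhost:8080`.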

This blog post is accompanied by a GitHub repository that provides an end-to-end benchmark of a pre-release autoscaling cluster setup. You are encouraged to adapt it to your specific needs.

Benchmarking end-user perceived performance is more than a best practice; it is essential to delivering outstanding user experiences. By building benchmarking into the development process proactively, developers can verify that their apps remain responsive, performant, and able to meet evolving user demands.

Tools like Locust, which simulate real-world scenarios, give you deeper insight into how your application performs across a variety of conditions. Performance work is never finished, so use benchmarking as a roadmap for creating outstanding user experiences.