Google Cloud and Swift pioneer advanced AI and federated learning to help fight payment fraud

Traditional fraud detection techniques struggle to keep up with increasingly sophisticated criminal strategies. Because existing methods often rely on the limited data held by individual institutions, they have difficulty identifying complex schemes that span several banks and jurisdictions.

Swift, a global provider of secure financial messaging services, is collaborating with Google Cloud to develop anti-fraud technologies that use federated learning and advanced artificial intelligence to better fight fraud in cross-border payments.

Working with 12 international financial institutions, and with Google Cloud as a strategic partner, Swift plans to launch a sandbox in the first half of 2025 that uses synthetic data to test learning from historical fraud. The initiative builds on Swift’s existing Payment Controls Service (PCS) and follows a successful trial with financial institutions in Europe, North America, Asia, and the Middle East.

The collaboration between Swift and Google Cloud

Google Cloud, together with technology partners Rhino Health and Capgemini, is working with Swift to build a secure, privacy-preserving solution that will help financial institutions fight fraud. By combining privacy-enhancing technologies (PETs) with federated learning, this novel approach lets institutions share intelligence without jeopardising proprietary data.

Rhino Health will develop and provide the core federated learning platform, while Capgemini will oversee the solution’s integration and deployment.

The problem: Conventional fraud detection is lagging

A lack of visibility across the payment lifecycle creates vulnerabilities that criminals can exploit. In the fight against financial crime, a collaborative approach to fraud modelling has many advantages over conventional techniques. For this strategy to succeed, however, institutions must share data, and data sharing is frequently constrained by privacy concerns, legal restrictions, and intellectual property considerations.

Federated learning is the answer

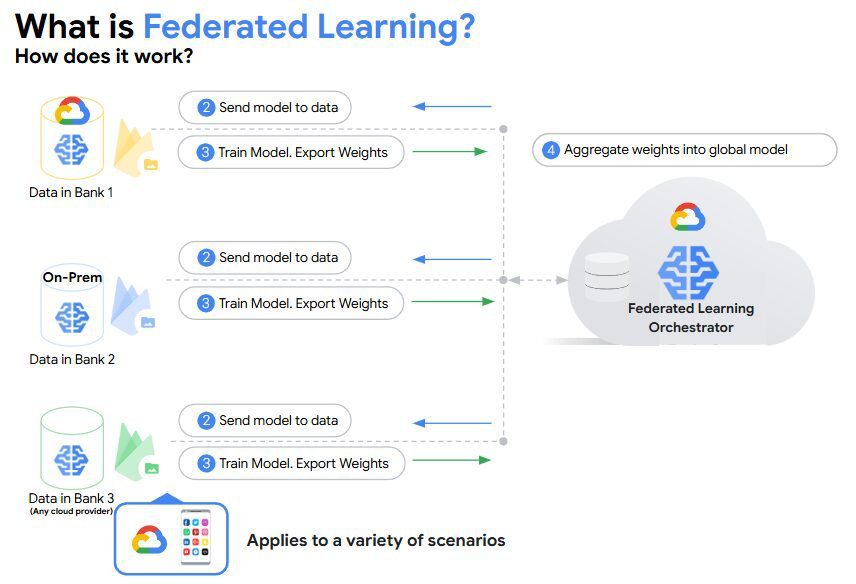

Federated learning provides an effective way to train AI models collaboratively without sacrificing confidentiality or privacy. Instead of requiring financial institutions to pool their sensitive data, the model is trained on decentralized data that stays inside each institution.

In Swift’s case, the process works as follows:

- Every participating bank receives a copy of Swift’s anomaly detection model.

- Each financial institution trains the model locally on its own data.

- Only what the model learnt from this training (the model updates), not the underlying data, is sent back to a central server run by Swift for aggregation.

- The central server combines these updates to improve Swift’s global model.

This approach ensures that sensitive data stays within each financial institution’s secure environment while greatly reducing the amount of data that has to be transferred.
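The round described in the list above can be sketched in Python. This is a minimal sketch under stated assumptions, not Swift’s implementation: the source does not name the aggregation algorithm, so the sketch uses Federated Averaging (FedAvg), a common scheme in which the server averages client updates weighted by each client’s number of training examples. A logistic-regression model stands in for Swift’s anomaly detection model, and the three banks, their synthetic data, and helper names such as `local_train` and `aggregate` are hypothetical. Each simulated bank returns only a weight change and an example count; its transaction records never leave `local_train`.

```python
import math
import random

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def make_synthetic_bank(rng: random.Random, n: int):
    """Hypothetical synthetic data for one bank: features are [bias, amount],
    and a transaction is labelled fraud (1) when its scaled amount exceeds 0.8."""
    data = []
    for _ in range(n):
        amount = rng.random()
        data.append(([1.0, amount], 1 if amount > 0.8 else 0))
    return data

def local_train(global_w, data, lr=0.1, epochs=20):
    """Runs inside one bank. Trains a logistic-regression stand-in for the
    anomaly detection model with per-example gradient descent and returns only
    the weight change and the number of examples, never the data itself."""
    w = list(global_w)
    for _ in range(epochs):
        for x, y in data:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
            # Gradient of the log-loss for one example is (p - y) * x.
            w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x)]
    delta = [wi - gi for wi, gi in zip(w, global_w)]
    return delta, len(data)

def aggregate(global_w, updates):
    """Runs on the central server. FedAvg-style: adds the example-count-weighted
    average of the clients' weight changes to the global model."""
    total = sum(n for _, n in updates)
    avg_delta = [sum(d[j] * n for d, n in updates) / total
                 for j in range(len(global_w))]
    return [g + a for g, a in zip(global_w, avg_delta)]

def federated_round(global_w, banks):
    # Each bank receives a copy of the global model and trains locally;
    # only (delta, n) pairs travel back to the server.
    updates = [local_train(global_w, data) for data in banks]
    return aggregate(global_w, updates)

rng = random.Random(0)
banks = [make_synthetic_bank(rng, n) for n in (50, 120, 80)]  # three hypothetical banks
w = [0.0, 0.0]
for _ in range(5):
    w = federated_round(w, banks)
print("global weights [bias, amount]:", w)
```

Because the per-bank weights n_k / n sum to one, adding the weighted average of the changes to the global model gives the same result as averaging the locally trained models directly; sending changes rather than full models is a design choice, not a different algorithm.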

The federated learning solution’s main advantages

Financial organizations can gain a number of advantages by implementing federated learning technologies, including:

Shared intelligence: By cooperating to exchange information on fraudulent activity, patterns, and trends, financial institutions build a decentralized data pool that is far richer and broader than any one institution could collect on its own.

Improved detection and prevention: The collaborative global model can spot intricate fraud schemes that individual institutions might miss.

Fewer false positives: Information sharing improves fraud models, leading to more accurate detection of real threats and fewer false alarms that disrupt legitimate activity and the customer experience.

Faster adaptation: The cooperative approach lets institutions adapt more quickly to new fraud trends and criminal strategies. The shared knowledge pool enables all participants to rapidly update their models and fraud prevention technologies in response to emerging threats.

Network effects: As more institutions join, the data pool becomes more complete, strengthening fraud prevention for all parties.

Seamless integration: To be widely adopted, federated learning must integrate smoothly with existing financial systems and infrastructure. This makes it simple for financial institutions to participate in and benefit from the collective intelligence without disrupting their daily operations.

Developing the global AI solution for fraud

The initial scope remains a synthetic data sandbox focused on learning from historical payments fraud cases. The technology protects the privacy of sensitive transaction data while enabling multiple financial institutions to train a robust fraud detection model. By combining federated learning with confidential computing techniques such as Trusted Execution Environments (TEEs), it enables secure multi-party machine learning without moving training data.

This solution consists of the following essential elements:

Federated server in a TEE: A secure, isolated environment in which a federated learning (FL) server coordinates collaboration among several clients, starting by sending each FL client an initial model. The clients train this model on their local datasets and send the resulting model changes back to the FL server, which aggregates them to create a global model.

Federated client: Executes tasks, performs computation and learning on local datasets (such as data from a single financial institution), and sends the results back to the FL server for secure aggregation.

Bank-specific encrypted data: Each bank separately holds its own private, encrypted transaction data, including historical fraud labels. This data stays encrypted throughout the entire process, including during computation, which ensures end-to-end data privacy.

Global fraud-based model: A pre-trained Swift anomaly detection model that serves as the starting point for federated learning.

Secure aggregation: The weighted averages that combine the clients’ updates would be computed using a Secure Aggregation protocol, so the server learns only the aggregated information derived from the participating financial institutions’ historical fraud labels, not which specific institution it came from. This protects the privacy of each participant in the federated learning process.

Pooled weights and the trained model for global anomaly detection: The enhanced anomaly detection model and its learned weights are securely shared with the participating financial institutions, which can then run the improved model locally to monitor their own transactions for fraud.
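The Secure Aggregation component can be illustrated with a simplified pairwise-masking scheme, one well-known way to build such a protocol. This is a sketch under assumptions, not the protocol Swift or its partners use: every pair of clients is assumed to share a secret seed (in practice agreed through a key exchange; here the seeds are simply generated), updates are converted to fixed-point integers, and client dropouts, which real protocols must handle, are ignored. Each bank submits its update scaled by its example count, plus the count itself, so the server can recover the weighted average from the sum alone. The masks cancel in the sum, so the server sees the aggregate but not any single bank’s contribution. All names and values are hypothetical.

```python
import random

MODULUS = 2**64   # all masked arithmetic is done modulo this value
SCALE = 10**6     # fixed-point scale used to turn floats into integers

def encode(vec):
    """Convert floats to fixed-point integers in [0, MODULUS)."""
    return [round(v * SCALE) % MODULUS for v in vec]

def decode(vec):
    """Map integers back to signed floats (values above MODULUS/2 are negative)."""
    return [((v - MODULUS) if v >= MODULUS // 2 else v) / SCALE for v in vec]

def pairwise_mask(seed, length):
    """Expand a shared seed into a mask vector; both clients in a pair derive the same mask."""
    rng = random.Random(seed)
    return [rng.randrange(MODULUS) for _ in range(length)]

def mask_update(client_id, contribution, pair_seeds):
    """Client side: for each pair (i, j), add the mask if this client is i and
    subtract it if this client is j, so the masks cancel in the server's sum."""
    masked = encode(contribution)
    for (i, j), seed in pair_seeds.items():
        if client_id not in (i, j):
            continue
        sign = 1 if client_id == i else -1
        mask = pairwise_mask(seed, len(contribution))
        masked = [(x + sign * m) % MODULUS for x, m in zip(masked, mask)]
    return masked

def server_sum(masked_updates):
    """Server side: sums the masked vectors; only the aggregate is recoverable."""
    total = [0] * len(masked_updates[0])
    for update in masked_updates:
        total = [(t + x) % MODULUS for t, x in zip(total, update)]
    return decode(total)

# Hypothetical per-bank model updates (weight changes) and example counts.
clients = {0: ([0.2, -0.1], 50), 1: ([0.4, 0.3], 120), 2: ([-0.1, 0.05], 80)}

# Hypothetical shared seeds; a real protocol would agree on these via key exchange.
seed_rng = random.Random(42)
pair_seeds = {(i, j): seed_rng.randrange(2**63)
              for i in clients for j in clients if i < j}

masked = []
for cid, (delta, n) in clients.items():
    # Scale by the example count and append the count, so the sum yields a weighted average.
    contribution = [n * d for d in delta] + [float(n)]
    masked.append(mask_update(cid, contribution, pair_seeds))

summed = server_sum(masked)
total_n = summed[-1]
weighted_avg = [s / total_n for s in summed[:-1]]
print("total examples:", total_n, "weighted average update:", weighted_avg)
```

Each individual masked vector looks like random noise to the server; only after all three are added do the pairwise masks cancel and leave the example-weighted average of the updates.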

Creating a more secure financial system collectively

This coordinated effort to fight fraud will help make the financial ecosystem safer. Combining federated learning with strong data privacy, security, confidentiality, platform interoperability, and scalability can improve fraud prevention across a fragmented, globalized banking landscape. The initiative also reflects a commitment to building a more reliable and trustworthy financial system.