What Is Analog AI?

Analog AI, also called analog in-memory computing, encodes information as a physical quantity and performs calculations using the physical properties of memory devices. It is an approach to deep learning training and inference that uses less energy than conventional digital hardware.

Features of analog AI

Non-volatile memory

Analog AI uses non-volatile memory devices, which can retain data for up to ten years without power.

In-memory computing

Analog AI stores and processes data in the same location, which removes the von Neumann bottleneck that limits computation speed and efficiency.

Analog representation

Analog AI performs matrix multiplications in an analog fashion by utilizing the physical characteristics of memory devices.

Crossbar arrays

In analog AI, synaptic weights are stored locally in the conductance values of nanoscale resistive memory devices arranged in crossbar arrays.

Low energy consumption

Analog AI can reduce energy use because data does not have to be moved back and forth between memory and the processor.
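The analog matrix multiplication described under "Analog representation" and "Crossbar arrays" can be sketched in a few lines. This is a minimal, idealized simulation, not a model of any particular chip: it assumes each device obeys Ohm's law (current = conductance × voltage) and that the currents on a shared column wire simply add up (Kirchhoff's current law). The function name `crossbar_matvec` and the example conductance and voltage values are hypothetical, chosen only for illustration.

```python
def crossbar_matvec(conductances, voltages):
    """Return the column currents of an idealized crossbar array.

    conductances[i][j] is the conductance (in siemens) of the device
    at row i, column j. voltages[i] is the voltage (in volts) applied
    to row i. Noise and wire resistance are ignored (an assumption).
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("need one input voltage per row")

    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law gives the current through one device; adding it
            # to the column total models Kirchhoff's current law.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Hypothetical 3x2 array: three input rows, two output columns.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6],
     [5e-6, 6e-6]]
V = [0.1, 0.2, 0.3]

I = crossbar_matvec(G, V)
print(I)  # column currents in amperes
```

In a physical array the double loop does not exist: every device conducts at once, so the whole matrix-vector product is produced in a single step where the weights are stored.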

Analog AI Overview

Analog AI aims to enhance the functionality and energy efficiency of deep neural network systems.

Analog in-memory computing can be used for two distinct deep learning tasks: training and inference. The first stage is training the model, usually on a labeled dataset. For example, if you want your model to recognize various images, you would supply a collection of labeled photographs for training. Once the model has been trained, it can be used for inference.

Like most computing today, training AI models is a digital process carried out on conventional computers with conventional architectures. These systems first pass data from memory onto a queue and then transfer it to the CPU for processing.

AI training can require large volumes of data, and all of it must pass through the queue on its way to the CPU. This can significantly reduce compute speed and efficiency, causing what is known as “the von Neumann bottleneck.” IBM Research is investigating solutions that can train AI models more quickly and with less energy, without the bottleneck caused by data queuing.
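To get a rough feel for the bottleneck, one can count how many values have to travel between memory and the processor for a single matrix-vector product in one neural network layer. The sketch below is a deliberately simplified counting exercise under stated assumptions: no caches, no weight reuse, and one transfer per value. The function names and the 512×512 layer size are hypothetical.

```python
def values_moved_conventional(n_inputs, n_outputs):
    """Values transferred when weights live in separate memory.

    Every weight and every input is fetched to the processor, and
    every output is written back (no caching or reuse assumed).
    """
    weights = n_inputs * n_outputs
    return weights + n_inputs + n_outputs


def values_moved_in_memory(n_inputs, n_outputs):
    """Values transferred when the weights stay in the memory array.

    Only the inputs are sent in and the outputs are read out; the
    weights never move.
    """
    return n_inputs + n_outputs


# Hypothetical fully connected layer with 512 inputs and 512 outputs.
conventional = values_moved_conventional(512, 512)
in_memory = values_moved_in_memory(512, 512)
ratio = conventional / in_memory

print(conventional, in_memory, ratio)
```

Real systems reduce the gap with caches and batching, so the ratio should be read as an illustration of why moving weights dominates, not as a measured speedup.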

These technologies are analog, meaning they represent information as a variable physical quantity, like the wiggles in vinyl record grooves. IBM Research is investigating two kinds of training devices: electrochemical random-access memory (ECRAM) and resistive random-access memory (RRAM). Both devices can store and process data. Because data no longer has to travel from memory to the CPU through a queue, jobs can be completed in a fraction of the time and with far less energy.

Inference is the process of drawing a conclusion from known information. Humans do this with ease, but inference is costly and slow when done by a machine. IBM Research is taking an analog approach to that challenge. The word “analog” may bring to mind vinyl LPs and Polaroid instant cameras.

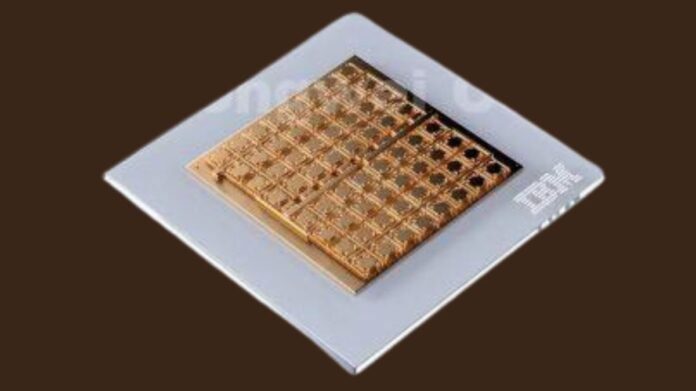

Digital data is represented by long sequences of 1s and 0s. Analog information is represented by a continuously varying physical quantity, like record grooves. The core of IBM's analog AI inference chips is phase-change memory (PCM), a highly tunable analog technology that uses electrical pulses to compute and store information. As a result, the chip is significantly more energy-efficient.

IBM Research uses PCM as a synaptic cell, an AI term for a single unit of weight or information. More than 13 million of these PCM synaptic cells are arranged in an architecture on the analog AI inference chips, forming a sizable physical neural network that is filled with pretrained data and ready to run inference on your AI workloads.
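The idea of filling an array of synaptic cells with pretrained weights and then running inference on it can be sketched as follows. This is a toy model under several assumptions that do not come from the text above: signed weights are split across a pair of conductances (a positive and a negative device), programming error is modeled as Gaussian noise, and `G_MAX`, `program_weights`, and `infer` are hypothetical names and values.

```python
import random

G_MAX = 25e-6  # hypothetical maximum device conductance, in siemens


def program_weights(weights, noise_std=0.0, seed=0):
    """Map signed weights onto pairs of non-negative conductances.

    Each weight w becomes (g_plus, g_minus), with w proportional to
    g_plus - g_minus. noise_std (siemens) models imperfect programming.
    """
    rng = random.Random(seed)
    w_max = max(abs(w) for row in weights for w in row) or 1.0
    scale = G_MAX / w_max  # siemens per unit of weight

    g_plus, g_minus = [], []
    for row in weights:
        plus_row, minus_row = [], []
        for w in row:
            # Positive weights go on the "plus" device, negative on "minus".
            target_plus = max(w, 0.0) * scale
            target_minus = max(-w, 0.0) * scale
            # A conductance cannot be negative, so clip at zero.
            plus_row.append(max(0.0, target_plus + rng.gauss(0.0, noise_std)))
            minus_row.append(max(0.0, target_minus + rng.gauss(0.0, noise_std)))
        g_plus.append(plus_row)
        g_minus.append(minus_row)
    return g_plus, g_minus, scale


def infer(g_plus, g_minus, scale, inputs):
    """Compute outputs[j] = sum_i W[i][j] * inputs[i] from conductances."""
    n_cols = len(g_plus[0])
    outputs = [0.0] * n_cols
    for i, x in enumerate(inputs):
        for j in range(n_cols):
            outputs[j] += (g_plus[i][j] - g_minus[i][j]) * x
    # Convert summed "currents" back to weight units.
    return [out / scale for out in outputs]


# Hypothetical pretrained 2x2 weight matrix and input vector.
W = [[0.5, -1.0],
     [-0.25, 0.75]]
x = [1.0, 2.0]

ideal = infer(*program_weights(W), x)
noisy = infer(*program_weights(W, noise_std=0.5e-6), x)
print(ideal, noisy)
```

With `noise_std=0.0` the result matches the exact product of the weights and the input; raising `noise_std` shows how device imperfections perturb the outputs, which is one reason analog inference is usually discussed in terms of accuracy as well as energy.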

FAQs

What is the difference between analog AI and digital AI?

Analog AI uses continuous signals and analog components, mimicking brain function, whereas conventional digital AI processes data as discrete binary values (0s and 1s).