Utilize TensorFlow and PyTorch for AI with Intel Tiber Developer Cloud

Deep learning (DL) uses neural networks, loosely modeled on how the human brain processes information, to handle complex data and tasks. PyTorch and TensorFlow, the two most popular deep learning frameworks, can both process massive volumes of data, but they differ in how they execute code.

What Is Intel Tiber Developer Cloud?

Intel Tiber Developer Cloud lets developers build and accelerate AI applications with Intel-optimized software running on the newest Intel hardware. Using oneAPI libraries, Intel has also optimized popular deep learning and machine learning frameworks for high performance across Intel platforms. These software optimizations can deliver significant performance gains over stock implementations of the same frameworks.

Intel Tiber Developer Cloud provides access to a range of hardware, including Intel Xeon Scalable processors and Intel Gaudi 2 AI accelerators, for AI applications and solutions built on ML frameworks. With free Jupyter notebooks and tutorials, developers can evaluate platform and software optimizations, and learn, prototype, test, and run workloads on their preferred CPU or GPU.


This post shows how to build and run deep learning workloads with PyTorch and TensorFlow on Intel Tiber Developer Cloud. Before following the steps in this article, we recommend reading the comprehensive tutorial on getting started with Intel Tiber Developer Cloud.

General Instructions for Using Intel Tiber Developer Cloud:

- Sign in, or click the “Get Started” button to create an account and choose a service tier.

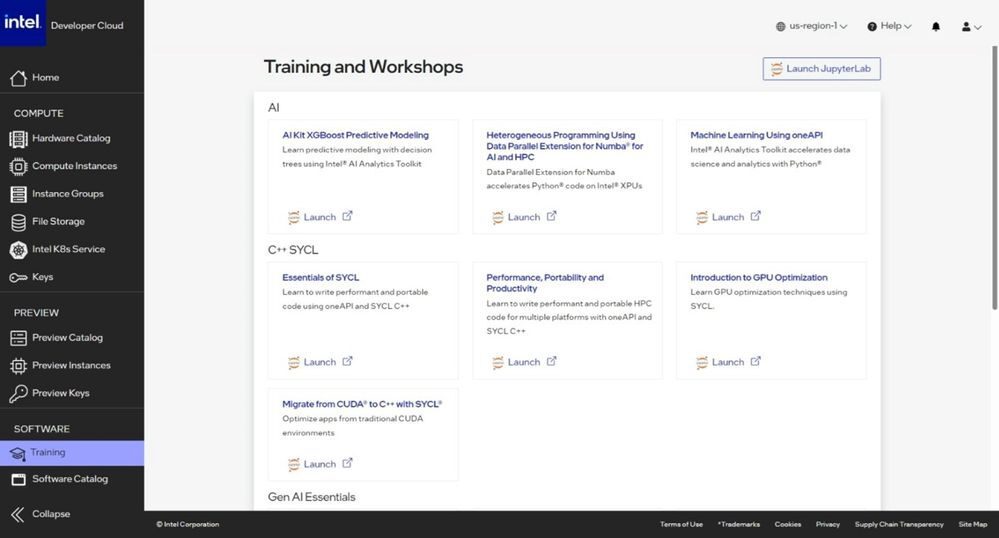

- Select SOFTWARE > Training from the left panel.

- Click the JupyterLab button in the upper right corner.

JupyterLab offers several kernels to suit different developer needs. Kernels are pre-installed Python environments: when a user launches a new notebook and selects a kernel, JupyterHub runs the notebook in that environment with its packages already installed. The Base kernel often includes the packages required to run the example programs below.
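Because each kernel is a separate Python environment, it can help to confirm which interpreter a notebook is using and which packages it can import before running a sample. The sketch below does this with the standard library only; the package list and the `check_kernel` helper are hypothetical choices for illustration, not part of the Intel samples.

```python
import importlib.util
import sys

# Hypothetical list of packages the two samples rely on; adjust for your notebook.
SAMPLE_PACKAGES = (
    "torch",
    "intel_extension_for_pytorch",
    "tensorflow",
    "intel_extension_for_tensorflow",
)


def check_kernel(packages=SAMPLE_PACKAGES):
    """Report which interpreter the kernel uses and which packages it can import."""
    print("Kernel Python:", sys.executable)
    # find_spec locates a top-level package without importing it.
    return {name: importlib.util.find_spec(name) is not None for name in packages}


if __name__ == "__main__":
    for name, available in check_kernel().items():
        print(f"{name:35s} {'available' if available else 'missing'}")
```

If a package shows as missing, switching the notebook to a different kernel is usually simpler than installing it by hand.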


Use Intel Tiber Developer Cloud to Begin Deep Learning

Intel Extension for PyTorch: PyTorch is an open-source Python library for deep learning and AI. The Intel Extension for PyTorch extends PyTorch with the latest feature optimizations to improve the efficiency of deep learning training and inference on Intel CPUs. It also includes optimizations specific to Large Language Models (LLMs) for various Generative AI (GenAI) applications.
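In practice, the extension is typically applied to an existing model through `ipex.optimize`. The sketch below assumes a notebook where `intel_extension_for_pytorch` may or may not be installed: it applies `ipex.optimize` for inference when the package is present and otherwise returns the model unchanged. The helper name `optimize_for_inference` is hypothetical.

```python
import importlib.util


def optimize_for_inference(model):
    """Return (model, used_ipex).

    Hypothetical helper: applies Intel Extension for PyTorch optimizations
    when the package is installed, otherwise returns the model unchanged.
    """
    model.eval()  # switch to inference mode first (disables dropout, etc.)
    if importlib.util.find_spec("intel_extension_for_pytorch") is None:
        # Stock PyTorch fallback: nothing to apply.
        return model, False
    import intel_extension_for_pytorch as ipex  # requires torch to be installed

    # ipex.optimize returns the optimized module for inference.
    return ipex.optimize(model), True
```

In a notebook you would pass a real `torch.nn.Module`, such as a torchvision ResNet50, and run inference under `torch.no_grad()`. The fallback keeps the same notebook usable on kernels that only have stock PyTorch.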

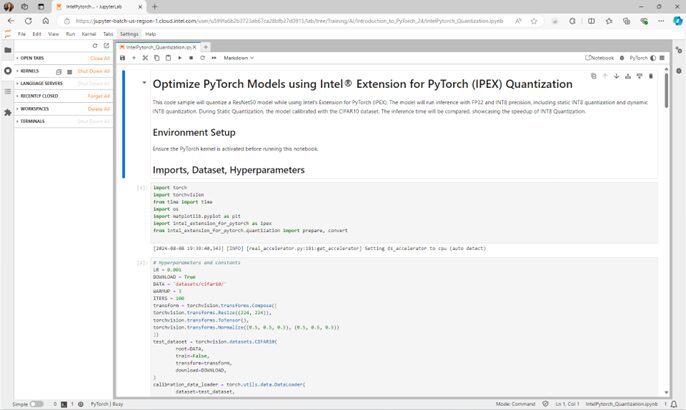

To run the Intel Extension for PyTorch Quantization Sample on Intel Tiber Developer Cloud, follow these steps:

- Start JupyterLab.

- On the Dashboard, open the raw IntelPytorch_Quantization.ipynb by pasting its URL into File > Open from URL.

- Change the kernel: go to Kernel > Change Kernel > Choose Kernel > PyTorch.

- Run every cell in the sample, then review the results.

This sample uses the Intel Extension for PyTorch (IPEX) to quantize a ResNet50 model. It runs inference in FP32 precision and in INT8 precision, using both static and dynamic INT8 quantization, and then compares the inference times to show the speedup that INT8 quantization provides.
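The sample's own code is not reproduced here. As a rough illustration of what INT8 quantization does to each tensor, the sketch below implements standard affine (asymmetric) quantization in plain Python: a scale and zero point map an FP32 range onto the integers -128 to 127. In static quantization that range is derived from calibration data before inference; in dynamic quantization activation ranges are computed on the fly at runtime. The function names and calibration values are hypothetical, and IPEX's real observers and kernels are more involved.

```python
def affine_qparams(x_min, x_max, qmin=-128, qmax=127):
    """Scale and zero point that map the float range [x_min, x_max] onto [qmin, qmax]."""
    # Extend the range to include 0.0 so that zero is exactly representable.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    if x_max == x_min:  # all-zero tensor: any scale works
        return 1.0, 0
    scale = (x_max - x_min) / (qmax - qmin)
    zero_point = round(qmin - x_min / scale)
    return scale, max(qmin, min(qmax, zero_point))


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """FP32 -> INT8: q = clamp(round(x / scale) + zero_point)."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(q_values, scale, zero_point):
    """INT8 -> FP32 approximation: x ~= (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in q_values]


# Static-style calibration: derive the range once from sample data ahead of inference.
calibration = [-0.9, -0.2, 0.0, 0.4, 1.3]
scale, zp = affine_qparams(min(calibration), max(calibration))
q = quantize(calibration, scale, zp)
restored = dequantize(q, scale, zp)
```

Each restored value lies within half a quantization step (`scale / 2`) of the original, which is the accuracy traded for smaller, faster integer arithmetic.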

Intel Extension for TensorFlow: TensorFlow is an open-source framework for developing and deploying deep learning systems in a range of environments. The Intel Extension for TensorFlow uses OpenMP to parallelize deep learning execution across multiple CPU cores. As a plugin, it lets users add an XPU device to TensorFlow whenever they need one, unlocking the compute power of Intel hardware.
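Because OpenMP reads its threading settings when the runtime starts, they are usually exported before TensorFlow is imported. The sketch below sets `OMP_NUM_THREADS` (a standard OpenMP variable) plus two Intel OpenMP runtime variables, `KMP_BLOCKTIME` and `KMP_AFFINITY`. The values shown are assumptions, illustrative starting points rather than tuned recommendations for any Tiber instance, and the `configure_openmp` helper is hypothetical.

```python
import os

# Illustrative starting values, not tuned recommendations for any specific instance.
# KMP_* variables are read only by Intel's OpenMP runtime; OMP_NUM_THREADS is standard OpenMP.
DEFAULT_THREADING = {
    "KMP_BLOCKTIME": "1",
    "KMP_AFFINITY": "granularity=fine,compact,1,0",
}


def configure_openmp(num_threads=None, env=None):
    """Set OpenMP threading variables; call this before importing TensorFlow.

    The OpenMP runtime typically reads these variables once at startup, so
    setting them after TensorFlow and its extension have loaded usually has no effect.
    """
    env = os.environ if env is None else env
    # os.cpu_count() reports logical CPUs; physical cores are often a better choice.
    threads = num_threads or os.cpu_count() or 1
    settings = {"OMP_NUM_THREADS": str(threads), **DEFAULT_THREADING}
    for key, value in settings.items():
        env.setdefault(key, value)  # keep any value the user already exported
    return {key: env[key] for key in settings}
```

After calling `configure_openmp()`, you would `import tensorflow as tf`; with the extension installed on a machine with an Intel GPU, `tf.config.list_physical_devices("XPU")` may then list the plugged-in XPU devices.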

To run the Leveraging Intel Extension for TensorFlow with LSTM for Text Generation Sample on Intel Tiber Developer Cloud, follow these steps:

- Start JupyterLab.

- On the Dashboard, open the raw TextGenerationModelTraining.ipynb by pasting its URL into File > Open from URL.

- Change the kernel: go to Kernel > Change Kernel > Choose Kernel > TensorFlow GPU.

- Run every cell in the sample, then review the results.

This sample shows how to train a text generation model with Intel Extension for TensorFlow and an LSTM on Intel hardware. The model's main objective is to predict the probability distribution of the next word in a sequence given the input text (supplying a text phrase as input gives better results). According to the sample, extending TensorFlow with Intel's plugin reduces GPU memory usage and shortens training time.
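To make "predicting the probability distribution of the next word" concrete, the sketch below turns a model's raw output scores (logits) into probabilities with a temperature-scaled softmax and samples the next word. This is not the sample's code: the vocabulary and logits are made-up stand-ins for what a trained LSTM's final layer would produce.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert raw scores into a probability distribution over the vocabulary."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() to avoid overflow
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_word(vocab, logits, temperature=1.0, rng=None):
    """Draw the next word from the softmax distribution (lower temperature = greedier)."""
    rng = rng or random.Random()
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0], probs


# Hypothetical 4-word vocabulary and model scores, for illustration only.
vocab = ["the", "cat", "sat", "mat"]
logits = [1.2, 3.5, 0.3, 2.1]
word, probs = sample_next_word(vocab, logits, temperature=0.8, rng=random.Random(42))
print(word, [round(p, 3) for p in probs])
```

Generating a longer phrase repeats this step, appending each sampled word to the input; a lower temperature makes the output more predictable, while a higher one makes it more varied.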


All of the Intel-optimized frameworks mentioned above are available as part of the AI Tools. There is also a tutorial on using Intel Tiber Developer Cloud with popular machine learning frameworks such as Modin, XGBoost, and scikit-learn.
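For scikit-learn, Intel's optimizations ship as the Intel Extension for Scikit-learn (the `sklearnex` package). A minimal sketch, assuming the package may not be installed in every kernel: `patch_sklearn()` swaps in Intel-optimized implementations of supported estimators and should be called before those estimators are imported. The wrapper name `enable_sklearnex` is hypothetical.

```python
import importlib.util


def enable_sklearnex():
    """Patch scikit-learn with Intel-optimized estimators if sklearnex is installed."""
    if importlib.util.find_spec("sklearnex") is None:
        return False  # stock scikit-learn stays in use
    from sklearnex import patch_sklearn

    patch_sklearn()  # must run before importing estimators such as sklearn.cluster.KMeans
    return True


patched = enable_sklearnex()
```

Existing scikit-learn code then runs unchanged; only the import order matters.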

Check out Intel Tiber Developer Cloud for access to the newest Intel hardware and optimized software to power your next AI applications. Intel also invites you to explore the unified, open, standards-based oneAPI programming model that underpins Intel’s AI Software Portfolio, along with its AI Tools and framework optimizations, and to learn how its partnerships with leading system integrators, OEMs, enterprise users, and independent software vendors (ISVs) have accelerated AI adoption.

Intel Tiber Developer Cloud accelerates AI development with GPU compute and Intel-optimized software on the newest Intel Xeon processors.

Start Using Intel Tiber Developer Cloud

Get hands-on experience with the newest Intel products and use them to build your AI skills.

Early Access to Technology: Evaluate prerelease Intel systems and their Intel-optimized software stacks.

Implement AI Widely: Use the latest Intel machine learning toolkits and libraries available on Intel Tiber Developer Cloud to speed up AI deployments.

Intel Developer Cloud for the Edge: Start at any stage of the edge development process using deep learning models and inference applications.

Access all the resources you need to build for the edge from anywhere. With instant, unrestricted access to Intel’s newest edge computing platforms and technology, you can build your edge solutions.