At Lowe’s, we always work to give our customers a more convenient and enjoyable shopping experience. A recurring issue we have noticed is that many customers come to our mobile app or e-commerce site empty-handed, thinking they’ll know the right item when they see it.

To solve this problem and improve the shopping experience, we developed Visual Scout, an interactive tool for browsing the product catalogue and quickly locating products of interest on lowes.com. It is one example of how AI-driven recommendations are transforming modern shopping experiences across a variety of communication channels, including text, speech, video, and images.

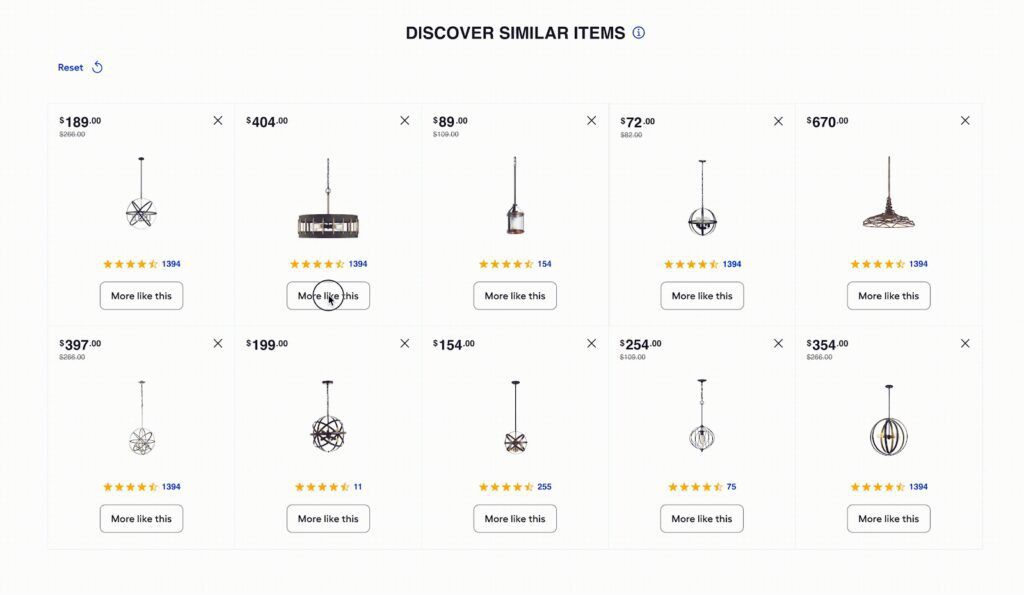

Visual Scout is designed for shoppers who weigh a product’s aesthetic qualities when making a selection. It provides an interactive experience that lets shoppers explore different styles within a product category. Visual Scout first displays ten items on a panel. Users then express their preferences by “liking” or “disliking” individual items in the display. Based on this feedback, Visual Scout dynamically refreshes the panel with items that reflect the customer’s style and design preferences.

This is an illustration of how a discovery panel refresh is influenced by user feedback from a customer who is shopping for hanging lamps.

In this post, we dive into the technical details and examine the key MLOps procedures and technologies that make this experience possible.

How Visual Scout Works

When customers visit a product detail page on lowes.com, they usually know roughly which “product group” they are looking for, although many product options may be available. By using Visual Scout to sort through visually similar items, customers can quickly identify a subset of interesting products, saving them from opening numerous browser windows or examining a predetermined comparison table.

The item on a given product page is treated as the “anchor item” for that page and serves as the seed for the first recommendation panel. Customers then iteratively refine the displayed product set by giving each item in the display a “like” or “dislike” rating:

- “Like” feedback: When a customer clicks the “more like this” button, Visual Scout replaces the two least visually similar items in the panel with products that closely resemble the one the customer just liked.

- “Dislike” feedback: Conversely, when a customer rates a product with an “X”, Visual Scout replaces that product with one that is visually similar to the anchor item.
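The two feedback rules can be sketched as follows. This is a minimal, hypothetical illustration, not Visual Scout’s actual code: it assumes cosine similarity over in-memory embeddings, a `seen` set so that no item is shown twice in a session, and illustrative function names (`on_like`, `on_dislike`). In production, the nearest-neighbour lookups would go to the vector search service rather than a Python loop.

```python
import numpy as np

def cosine_sim(a, b):
    """Cosine similarity between two embedding vectors."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def nearest(target_id, embeddings, exclude, k):
    """Up to k item IDs most similar to target_id, skipping IDs in `exclude`."""
    scored = sorted(
        ((cosine_sim(embeddings[target_id], vec), item_id)
         for item_id, vec in embeddings.items() if item_id not in exclude),
        reverse=True,
    )
    return [item_id for _, item_id in scored[:k]]

def on_like(panel, liked_id, embeddings, seen):
    """Swap the two panel items least similar to liked_id for liked_id's nearest neighbours."""
    others = sorted((p for p in panel if p != liked_id),
                    key=lambda p: cosine_sim(embeddings[liked_id], embeddings[p]))
    to_replace = others[:2]  # the two least similar items (ascending similarity)
    fresh = nearest(liked_id, embeddings, exclude=seen | set(panel), k=len(to_replace))
    new_panel = list(panel)
    for old, new in zip(to_replace, fresh):
        new_panel[new_panel.index(old)] = new
        seen.add(new)  # hypothetical rule: never show the same item twice
    return new_panel

def on_dislike(panel, disliked_id, anchor_id, embeddings, seen):
    """Swap the disliked item for the unseen item most similar to the anchor item."""
    fresh = nearest(anchor_id, embeddings, exclude=seen | set(panel) | {anchor_id}, k=1)
    new_panel = list(panel)
    if fresh:
        new_panel[new_panel.index(disliked_id)] = fresh[0]
        seen.add(fresh[0])
    return new_panel
```

Each rule keeps the rest of the panel in place, so a single click changes only one or two tiles, which matches the incremental refresh described above.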

Because the panel refreshes in real time, Visual Scout offers a fun, gamified shopping experience that encourages customer engagement and, ultimately, conversion.

Would you like to give it a try?

Go to this product page and look for the “Discover Similar Items” section to see Visual Scout in action. You don’t need an account, but make sure to select a store from the menu in the top-left corner of the website. This helps Visual Scout suggest products that are available near you.

The technology underlying Visual Scout

Many Google Cloud services support Visual Scout, including:

- Dataproc: Runs batch processing jobs that compute embeddings for new items by sending each item’s image to a computer vision model as a prediction request; the predicted values are the image’s embedding representation.

- Vertex AI Model Registry: A central location for managing the computer vision model’s lifecycle.

- Vertex AI Feature Store: Low-latency online serving and feature management for product image embeddings.

- Vertex AI Vector Search: A serving index and vector similarity search for low-latency online retrieval.

- BigQuery: Stores an immutable, enterprise-wide record of item metadata, including price, availability in the user’s chosen store, ratings, inventory, and restrictions.

- Google Kubernetes Engine: Orchestrates the deployment and operation of the Visual Scout application alongside the rest of the online shopping experience.

To better understand how these components are operationalized in production, let’s walk through some of the key activities in the reference architecture below:

- For a given item, the Visual Scout API generates a vector match request.

- To obtain the most recent image embedding vector for an item, the request first makes a call to Vertex AI Feature Store.

- Visual Scout then uses the item embedding to search a Vertex AI Vector Search index for the most similar embedding vectors, returning the corresponding item IDs.

- Each visually similar item is then filtered using product metadata, such as inventory availability, so that only products available at the user’s chosen store are shown.

- The available products, together with their metadata, are returned to the Visual Scout API so that lowes.com can serve them.

- A daily trigger launches an update job that computes image embeddings for any new items.

- Once triggered, Dataproc processes any new item images and embeds them using the registered computer vision model.

- Streaming updates refresh the Vertex AI Vector Search serving index with the new image embeddings.

- The new image embedding vectors are ingested into the Vertex AI Feature Store online serving nodes, indexed by item ID and ingestion timestamp.
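The serving path above (embedding lookup, similarity search, store-availability filter) can be sketched end to end with in-memory stand-ins. This is a hypothetical sketch only: the dict plays the role of Vertex AI Feature Store, an exact dot-product search stands in for Vertex AI Vector Search, and `ItemMetadata.stores_in_stock` and the `overfetch` factor are illustrative assumptions, not fields or parameters of any real API.

```python
from dataclasses import dataclass, field
import numpy as np

@dataclass
class ItemMetadata:
    price: float
    stores_in_stock: set = field(default_factory=set)  # hypothetical availability field

def vector_search(query_vec, index_ids, index_vectors, num_neighbors):
    """Stand-in for Vertex AI Vector Search: exact dot-product top-N."""
    scores = index_vectors @ query_vec
    return [index_ids[i] for i in np.argsort(-scores)[:num_neighbors]]

def visual_matches(item_id, online_embeddings, index_ids, index_vectors,
                   metadata, store_id, k=10, overfetch=3):
    # 1. Feature Store lookup (stubbed as a dict): latest embedding for the item.
    query_vec = np.asarray(online_embeddings[item_id], dtype=float)
    # 2. Vector search; over-fetch because filtering will drop some neighbours
    #    (+1 because the query item usually matches itself).
    neighbor_ids = vector_search(query_vec, index_ids, index_vectors, k * overfetch + 1)
    # 3. Keep only items (other than the query) in stock at the shopper's store.
    available = [n for n in neighbor_ids
                 if n != item_id and store_id in metadata[n].stores_in_stock]
    # 4. Return items plus metadata to the API layer.
    return [(n, metadata[n]) for n in available[:k]]
```

Over-fetching is one simple way to keep the panel full after filtering; whether Visual Scout does this, or pushes the availability filter into the search request itself, is not stated in the source.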

Vertex AI low latency serving

Visual Scout uses Vector Search and Feature Store, two Vertex AI services, to replace items in the recommendation panel in real time.

Vertex AI Feature Store keeps track of each item’s most recent embedding representation. This covers any newly available images for an existing item as well as any net-new additions to the product catalogue. When an existing item receives a new embedding, the most recent embedding is retained in online storage while the prior embedding representation is moved to offline storage. At serving time, the Feature Store lookup retrieves the query item’s most recent embedding from the online serving nodes and passes it to the downstream retrieval task.
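The online/offline split can be illustrated with a toy store. This is a hypothetical sketch of the behaviour described above, not the Vertex AI Feature Store API: the class name, the `(timestamp, vector)` layout, and the choice to archive late-arriving writes are all assumptions made for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class EmbeddingStore:
    """Toy online/offline split; not the Vertex AI Feature Store API."""
    online: dict = field(default_factory=dict)   # item_id -> (timestamp, vector)
    offline: list = field(default_factory=list)  # archived (item_id, timestamp, vector)

    def ingest(self, item_id, timestamp, vector):
        current = self.online.get(item_id)
        if current is not None and timestamp <= current[0]:
            # Late or duplicate write: archive it without touching the online copy.
            self.offline.append((item_id, timestamp, vector))
            return
        if current is not None:
            # A newer embedding arrived: move the previous one to offline storage.
            self.offline.append((item_id, *current))
        self.online[item_id] = (timestamp, vector)

    def latest(self, item_id):
        """Serving-time lookup: only the online copy is consulted."""
        return self.online[item_id][1]
```

Keying on the ingestion timestamp means a delayed batch can never overwrite a newer embedding, while the offline history remains available for retraining or auditing.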

Visual Scout then needs to find the products most similar to the query item among the many items in the database by comparing their embedding vectors. This kind of nearest neighbour search requires computing the similarity between the query vector and each candidate item vector; at catalogue scale, that computation can easily become a retrieval bottleneck, particularly if the search is exhaustive (i.e., brute force). Vertex AI Vector Search overcomes this barrier with approximate search, meeting Visual Scout’s low-latency serving requirements for vector retrieval.
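For reference, here is what an exhaustive (brute-force) search looks like: a minimal NumPy sketch of exact maximum-inner-product search, which scores every database vector for every query. It is shown only to make the cost concrete; it is not how Vertex AI Vector Search works internally.

```python
import numpy as np

def brute_force_top_k(query, database, k):
    """Exact maximum-inner-product search: scores every database vector."""
    scores = database @ query  # N dot products of dimension d: O(N * d) work per query
    if k >= len(scores):
        return np.argsort(-scores)
    top = np.argpartition(-scores, k)[:k]  # the k best, in arbitrary order
    return top[np.argsort(-scores[top])]   # order them by descending score
```

The matrix-vector product dominates. Assuming, purely for illustration, a billion items with 256-dimensional embeddings (the source does not state the embedding size), every query would cost about 10⁹ × 256 = 2.56 × 10¹¹ multiply-adds, which is why approximate methods that score only a fraction of the database are needed for low-latency serving.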

Together, these two services let Visual Scout handle a large volume of queries with low latency. 99th percentile response times come in at about 180 milliseconds, meeting our performance objectives and ensuring a snappy, seamless user experience.
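Percentile latency is a stricter target than average latency, because it captures the slow tail that some users actually experience. The short sketch below uses synthetic numbers (not Visual Scout measurements) to show how a small tail of slow requests barely moves the mean but dominates the p99.

```python
import numpy as np

def p99_ms(latencies_ms):
    """99th-percentile latency: roughly 99% of requests finish at or below this value."""
    return float(np.percentile(latencies_ms, 99))

# Synthetic example: 98% of requests take 20 ms, 2% take 200 ms.
latencies = [20.0] * 980 + [200.0] * 20
mean_ms = float(np.mean(latencies))  # 23.6 ms: looks healthy
tail_ms = p99_ms(latencies)          # 200.0 ms: reveals the slow tail
```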

Why is Vertex AI Vector Search so fast?

Vertex AI Vector Search is a managed service that provides efficient vector similarity search and retrieval over billion-scale vector databases. Because these capabilities are essential to numerous Google Cloud initiatives, the offering is the culmination of years of internal research and development. Notably, several of its core methods and techniques are also openly available in ScaNN, an open-source vector search library from Google Research. ScaNN’s ultimate goal is to enable reliable, reproducible benchmarking that advances research in the field, whereas Vertex AI Vector Search aims to provide a scalable vector search solution for production-ready applications.

ScaNN overview

ScaNN implements the Google Research paper “Accelerating Large-Scale Inference with Anisotropic Vector Quantization”, published at ICML 2020. The paper uses a novel compression technique to achieve state-of-the-art performance on nearest neighbour search benchmarks. At a high level, ScaNN’s vector similarity search process has four stages:

- Partitioning: ScaNN partitions the index using hierarchical clustering to reduce the search space. The index’s contents are represented as a search tree in which each partition is represented by its centroid. This is typically, but not always, a k-means tree.

- Vector quantization: This stage uses asymmetric hashing (AH) to compress each vector into a sequence of 4-bit codes, ultimately learning a codebook. It is “asymmetric” because only the database vectors, not the query vectors, are compressed.

- Approximate scoring: At query time, AH builds partial-dot-product lookup tables and then uses them to approximate dot products.

- Rescoring: Given the top-k items from approximate scoring, distances are recomputed more accurately (e.g., with lower-distortion quantization or even the raw datapoints).
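The four stages can be seen working together in a toy index. This is a simplified sketch under stated assumptions, not ScaNN itself: it uses a single-level k-means partitioning instead of a full tree, plain k-means product quantization (16 codewords per subspace, i.e. 4-bit codes stored in `uint8` for simplicity) instead of ScaNN’s anisotropic quantization, and hypothetical names such as `ToyScaNN`, `leaves_to_search`, and `rescore_k`.

```python
import numpy as np

def kmeans(x, k, iters=10, seed=0):
    """Plain Lloyd's k-means; returns (centroids, labels)."""
    rng = np.random.default_rng(seed)
    centroids = x[rng.choice(len(x), size=k, replace=False)].copy()
    for _ in range(iters):
        labels = ((x[:, None, :] - centroids[None]) ** 2).sum(-1).argmin(1)
        for j in range(k):
            members = x[labels == j]
            if len(members):  # keep the old centroid if a cluster empties
                centroids[j] = members.mean(0)
    labels = ((x[:, None, :] - centroids[None]) ** 2).sum(-1).argmin(1)
    return centroids, labels

class ToyScaNN:
    def __init__(self, data, num_partitions=8, num_subspaces=4, codes_per_subspace=16, seed=0):
        self.data = data
        n, d = data.shape
        assert d % num_subspaces == 0
        self.m, self.sub = num_subspaces, d // num_subspaces
        # 1. Partitioning: cluster the database, one centroid per partition.
        self.centroids, self.labels = kmeans(data, num_partitions, seed=seed)
        # 2. Vector quantization: a 16-entry codebook per subspace (4-bit codes).
        self.codebooks = []
        self.codes = np.empty((n, self.m), dtype=np.uint8)
        for s in range(self.m):
            chunk = data[:, s * self.sub:(s + 1) * self.sub]
            codebook, assignment = kmeans(chunk, codes_per_subspace, seed=seed + s + 1)
            self.codebooks.append(codebook)
            self.codes[:, s] = assignment

    def search(self, query, k=5, leaves_to_search=2, rescore_k=20):
        # Pick the partitions whose centroids score highest against the query.
        leaves = np.argsort(-(self.centroids @ query))[:leaves_to_search]
        cand = np.flatnonzero(np.isin(self.labels, leaves))
        # 3. Approximate scoring: lookup table of partial dot products, shape (m, 16).
        lut = np.stack([self.codebooks[s] @ query[s * self.sub:(s + 1) * self.sub]
                        for s in range(self.m)])
        approx = lut[np.arange(self.m), self.codes[cand]].sum(1)
        top = cand[np.argsort(-approx)[:rescore_k]]
        # 4. Rescoring: exact dot products on the raw vectors of the shortlist.
        exact = self.data[top] @ query
        return top[np.argsort(-exact)[:k]]
```

Only the query-side lookup table is computed per query; each candidate is then scored with `m` table lookups and additions instead of a full `d`-dimensional dot product, and only the short `rescore_k` list pays for exact scoring.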

Constructing a serving-optimized index

Vertex AI Vector Search uses ScaNN’s tree-AH technique to build an index optimized for low-latency serving. Tree-AH is a tree-X hybrid model made up of two components: (1) a partitioning “tree” and (2) a leaf searcher, in this case “AH”, or asymmetric hashing. In essence, it combines two complementary algorithms:

- Tree-X, a k-means tree, is a hierarchical clustering technique that organizes the index into a search tree whose partitions are each represented by the centroid of the data points in that partition. This reduces the search space.

- Asymmetric hashing (AH) is a highly optimized approximate distance computation procedure used to score the similarity between a query vector and the partition centroids at each level of the search tree.

With tree-AH, Vertex AI Vector Search learns an optimal indexing model, which effectively specifies the quantization codebook and partition centroids of the serving index. Using an anisotropic loss function during training optimizes it further. The rationale is that anisotropic loss emphasizes minimizing the quantization error for vector pairs with high dot products. This makes sense: if a vector pair’s dot product is low, the pair is unlikely to be in the top-k, so its quantization error matters little. If a pair has a high dot product, however, we must be much more careful about its quantization error, because we want to preserve its relative ranking.

To summarize the final point:

- There will always be some quantization error between a vector’s quantized form and its original form.

- Preserving the relative ranking of the vectors leads to higher recall during inference.

- We can be more precise in preserving the relative ranking of one subset of vectors at the cost of being less precise for another subset.
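The anisotropic idea can be made concrete by splitting the quantization residual into a component parallel to the original vector and a component orthogonal to it, and penalizing the parallel part more heavily. The sketch below follows that decomposition from the ICML 2020 paper, but the weights `h_parallel` and `h_orthogonal` are hand-picked for illustration; in the paper they are derived rather than chosen by hand, and ScaNN’s training procedure is not reproduced here.

```python
import numpy as np

def anisotropic_loss(x, x_quantized, h_parallel, h_orthogonal):
    """Weighted quantization error, split into parts parallel and orthogonal to x."""
    x = np.asarray(x, dtype=float)
    r = x - np.asarray(x_quantized, dtype=float)  # quantization residual
    r_parallel = (r @ x) / (x @ x) * x            # component along x
    r_orthogonal = r - r_parallel                 # component perpendicular to x
    return float(h_parallel * (r_parallel @ r_parallel)
                 + h_orthogonal * (r_orthogonal @ r_orthogonal))

x = np.array([1.0, 0.0])
along = np.array([0.9, 0.0])   # error of 0.1 along x
across = np.array([1.0, 0.1])  # error of 0.1 perpendicular to x
```

Both candidates have the same Euclidean error, so an isotropic loss (`h_parallel == h_orthogonal`) cannot tell them apart. With `h_parallel > h_orthogonal`, the loss prefers `across`, and for a query aligned with `x` (the high-dot-product case that matters for top-k ranking), `across` indeed preserves the dot product exactly while `along` shifts it by 0.1.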

Supporting production-ready applications

Vertex AI Vector Search is a managed service that lets users benefit from ScaNN’s performance while providing additional features that reduce operational overhead and create business value. These features include:

- Real-time updates: index and metadata updates take effect in real time, so fresh data is available to queries quickly.

- Multi-index deployments (often known as “namespacing”): several indexes are deployed to a single endpoint.

- Autoscaling: serving nodes scale automatically in response to QPS traffic, ensuring consistent performance at scale.

- Dynamic rebuilds: periodic index compaction incorporates new updates, improving query performance and reliability without interrupting service.

- Full metadata filtering and diversity: query results can be filtered on strings, numeric values, allow lists, and deny lists, and crowding tags can be used to enforce diversity.
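The semantics of allow/deny filtering, numeric filtering, and crowding can be illustrated on an already-scored neighbour list. This is a hypothetical, client-side sketch only: Vertex AI Vector Search applies these server-side through its own request fields, and the `Neighbor` class, its field names, and the `per_crowd` parameter here are illustrative assumptions, not the service’s API.

```python
from dataclasses import dataclass, field

@dataclass
class Neighbor:
    item_id: str
    score: float
    tokens: dict = field(default_factory=dict)   # namespace -> set of string tokens
    numeric: dict = field(default_factory=dict)  # attribute name -> number
    crowding_tag: str = ""

def filter_and_diversify(neighbors, allow=None, deny=None, numeric_max=None, per_crowd=1, k=10):
    """allow/deny: namespace -> set of tokens; numeric_max: attribute -> upper bound."""
    allow, deny, numeric_max = allow or {}, deny or {}, numeric_max or {}
    out, crowd_counts = [], {}
    for n in sorted(neighbors, key=lambda n: n.score, reverse=True):
        # Allow list: every constrained namespace must share at least one token.
        if any(not (n.tokens.get(ns, set()) & toks) for ns, toks in allow.items()):
            continue
        # Deny list: any matching token excludes the item.
        if any(n.tokens.get(ns, set()) & toks for ns, toks in deny.items()):
            continue
        # Numeric filter: a missing attribute counts as failing the bound.
        if any(n.numeric.get(a, float("inf")) > bound for a, bound in numeric_max.items()):
            continue
        # Crowding: at most per_crowd results per crowding tag.
        if crowd_counts.get(n.crowding_tag, 0) >= per_crowd:
            continue
        crowd_counts[n.crowding_tag] = crowd_counts.get(n.crowding_tag, 0) + 1
        out.append(n.item_id)
        if len(out) == k:
            break
    return out
```

For a visual recommender, crowding is what keeps a panel from filling up with near-identical variants (for example, several colourways of the same lamp) even when they are all top-scoring neighbours.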