WiNGPT, Winning Health’s LLM, Meets Healthcare Organisations’ Performance Requirements with Intel Technology

Summary

Large language models (LLMs) are a novel technology with great promise in medical settings, a point that is widely acknowledged in current smart hospital development. LLMs power applications such as medical report generation, AI-assisted imaging diagnosis, pathology analysis, medical literature analysis, healthcare Q&A, medical record sorting, and chronic disease monitoring and management. These applications help improve patient experience while lowering personnel and other resource costs for medical institutions.

However, the lack of high-performance, reasonably priced computing systems is a significant barrier to the wider adoption of LLMs in healthcare facilities. Take model inference as an example: the scale and complexity of LLMs far exceed those of typical AI applications, making it difficult for conventional computing platforms to meet their needs.

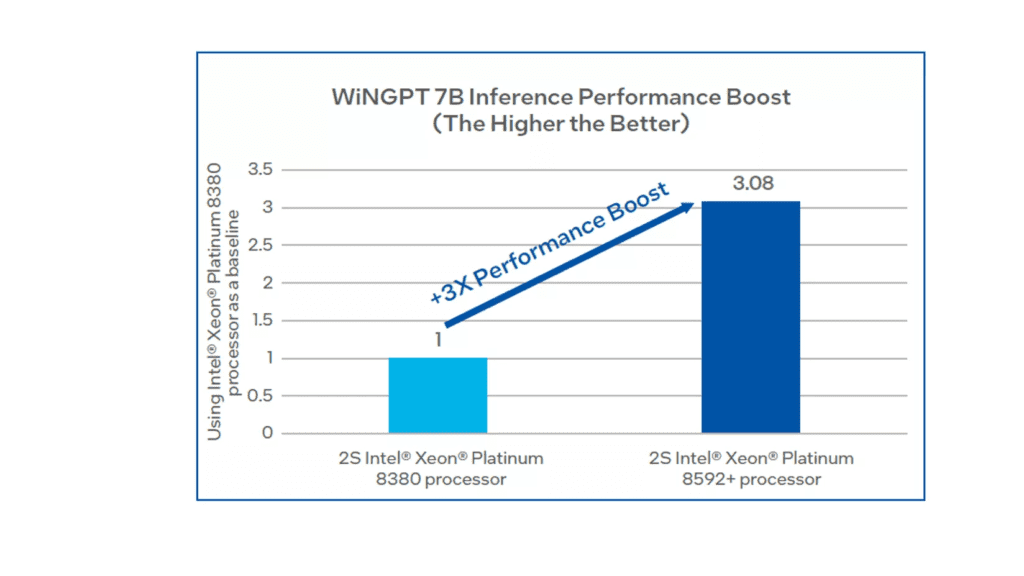

Winning Health has enhanced WiNGPT, a leading medical LLM, with the release of a WiNGPT solution based on 5th Gen Intel Xeon Scalable processors. The solution makes efficient use of the processors’ integrated accelerators, such as Intel Advanced Matrix Extensions (Intel AMX), for model inference. Through collaboration with Intel in areas such as graph optimisation and weight-only quantization, inference performance has been boosted by nearly three times compared with the platform based on 3rd Gen Intel Xeon Scalable processors. The upgrade meets the performance demands of scenarios such as automated medical report generation and accelerates the implementation of LLM applications in healthcare organisations.

Challenge: Medical LLM Inference’s Compute Dilemma

The broad implementation of LLMs across multiple industries, including healthcare, is seen as a significant milestone in the practical use of this technology. Healthcare organisations are increasing their investment and have made significant progress in applying LLMs to medical services, management, and diagnosis. According to research, the healthcare industry will adopt LLMs at a rapid pace between 2023 and 2027, by which time the market is predicted to exceed 7 billion yuan.

LLMs are typically compute-intensive applications with high computing costs, because training, fine-tuning, and inference all require significant computing resources. Of these phases, model inference is one of the most important for LLM deployment. Healthcare facilities frequently face the following difficulties when developing model inference solutions:

- LLM inference in these intricate settings must be both fast and accurate, so the computing platform needs sufficient inference capacity. Furthermore, because of the strict security requirements for medical data, healthcare organisations typically prefer to deploy the platform locally rather than in the cloud.

- LLM updates may call for GPU upgrades, but hardware is rarely upgraded that often. Updated models might therefore not be compatible with older hardware.

- The inference of Transformer-based LLMs requires significantly more hardware resources than before. Because the time and memory complexity of standard self-attention both grow quadratically with the length of the input sequence, it is difficult to make full use of existing computational resources, and hardware utilisation remains below its potential.

- From an economic standpoint, deploying servers dedicated to model inference would cost more, and their use would be limited to that one task. Because of this, many healthcare organisations choose CPU-based server systems for inference, reducing hardware costs while keeping the ability to handle a range of workloads.
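The growth in memory and time with input length mentioned in the list above can be made concrete for standard self-attention, where each head builds a score matrix with one entry per pair of tokens. The following is a minimal sketch, not a description of WiNGPT itself: the head count, head dimension, and 2-byte value width are illustrative assumptions, and the estimate covers only the unoptimised score computation for one layer when a full prompt is processed.

```python
def attention_score_bytes(seq_len: int, num_heads: int = 32, bytes_per_value: int = 2) -> int:
    """Bytes needed to hold the full attention score matrices for one layer.

    Each head stores a seq_len x seq_len matrix, so the total grows with
    the square of the sequence length. num_heads and bytes_per_value are
    assumed values, not WiNGPT's configuration.
    """
    return num_heads * seq_len * seq_len * bytes_per_value


def attention_score_flops(seq_len: int, num_heads: int = 32, head_dim: int = 128) -> int:
    """Approximate FLOPs for Q @ K^T in one layer (2 FLOPs per multiply-add)."""
    return 2 * num_heads * seq_len * seq_len * head_dim


if __name__ == "__main__":
    for n in (512, 1024, 2048, 4096):
        mib = attention_score_bytes(n) / 2**20
        gflops = attention_score_flops(n) / 1e9
        print(f"seq_len={n:5d}  scores={mib:8.1f} MiB  QK^T={gflops:8.1f} GFLOPs")
```

Doubling the sequence length quadruples both figures, which is why long inputs put disproportionate pressure on memory and compute.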

WiNGPT Based on 5th Gen Intel Xeon Scalable Processors

WiNGPT is an LLM created by Winning Health specifically for the healthcare industry. Built on a general-purpose LLM, WiNGPT incorporates high-quality medical data and is tailored and optimised for medical scenarios, enabling it to offer intelligent knowledge services across various healthcare scenarios. WiNGPT has three distinguishing characteristics:

- Refined and specialised: WiNGPT has been trained and fine-tuned on high-quality data for medical scenarios, delivering accuracy that satisfies a variety of business needs.

- Low cost: Thanks to algorithm optimisation, CPU-based deployment has achieved generation efficiency comparable to that of GPUs in testing.

- Support for customised private deployment: Private deployment keeps medical data inside healthcare facilities and prevents data leaks, while also improving system stability and reliability. It also allows tailored options for organisations with different needs and budgets.

Winning Health has teamed up with Intel and selected 5th Gen Intel Xeon Scalable processors to speed up WiNGPT’s inference. These processors deliver remarkable performance-per-watt gains across a range of workloads, are more reliable and energy-efficient, and offer a lower total cost of ownership (TCO). They also perform exceptionally well in data centre, network, AI, and HPC workloads. Within the same power envelope, 5th Gen Intel Xeon Scalable processors provide faster memory and more processing capability than their predecessors. Furthermore, because they are compatible with platforms and software from previous generations, they greatly reduce the testing and validation needed when deploying new systems.

AI performance is elevated by the integrated Intel AMX and other AI-optimised technologies in 5th Gen Intel Xeon Scalable processors. Intel AMX introduces a new instruction set and circuit design dedicated to matrix operations, resulting in a large increase in instructions per cycle (IPC) for AI applications. This translates into a significant performance boost for AI training and inference workloads.
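Before relying on Intel AMX for inference, it can be useful to confirm that a server actually exposes it. The sketch below is one way to do this on Linux, assuming the kernel reports the AMX feature flags (`amx_tile`, `amx_int8`, `amx_bf16`) in `/proc/cpuinfo`; the helper name `amx_flags_present` is hypothetical and not part of any Intel tool.

```python
AMX_FLAGS = ("amx_tile", "amx_int8", "amx_bf16")


def amx_flags_present(cpuinfo_text: str) -> dict:
    """Report which AMX feature flags appear in /proc/cpuinfo-style text."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        # Only the "flags : ..." lines list CPU feature flags.
        if line.startswith("flags"):
            _, _, value = line.partition(":")
            flags.update(value.split())
    return {name: name in flags for name in AMX_FLAGS}


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo", encoding="utf-8") as f:
            text = f.read()
    except OSError:
        text = ""  # not Linux, or /proc is unavailable
    print(amx_flags_present(text))
```

If all three flags are reported, frameworks that dispatch to oneDNN can generally use AMX for INT8 and BF16 matrix operations without application changes.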

5th Gen Intel Xeon Scalable processors deliver:

- Up to 21% higher overall performance

- 42% higher inference performance

- Up to 16% faster memory speed

- Up to 2.7x larger L3 cache

- Up to 10x higher performance per watt

Beyond adopting 5th Gen Intel Xeon Scalable processors, Winning Health and Intel are investigating ways to tackle the memory access bottleneck in LLM inference on the existing hardware platform. LLMs are typically considered memory-bound because of their large number of parameters: billions or even tens of billions of model weights often have to be loaded into memory before computation can begin. During computation, large amounts of data must also be stored temporarily in memory and read back for further processing. As a result, the main factor limiting inference speed has shifted from processing power to memory access speed.

To optimise memory access and more, Winning Health and Intel have implemented the following measures:
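A rough way to see why memory access dominates: if every weight has to be read from memory once for each generated token, the time to stream the weights sets a floor on per-token latency regardless of compute speed. The sketch below works through that arithmetic. The parameter count and bandwidth are hypothetical placeholders, not measurements of WiNGPT or of any particular Intel platform.

```python
def min_ms_per_token(num_params: float, bytes_per_weight: float, bandwidth_gb_s: float) -> float:
    """Lower bound on per-token latency if every weight is read once per token."""
    weight_bytes = num_params * bytes_per_weight
    seconds = weight_bytes / (bandwidth_gb_s * 1e9)
    return seconds * 1e3


if __name__ == "__main__":
    params = 7e9       # hypothetical 7-billion-parameter model
    bandwidth = 300.0  # hypothetical sustained memory bandwidth in GB/s
    for label, width in (("FP16", 2), ("INT8", 1)):
        floor_ms = min_ms_per_token(params, width, bandwidth)
        print(f"{label}: at least {floor_ms:.1f} ms/token")
```

Under this simple model, halving the bytes per weight halves the latency floor, which is the motivation for storing weights in a narrower data type.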

Graph optimisation: This technique fuses several operators to minimise the overhead of operator/kernel calls. Fusing multiple operators into one improves performance because intermediate results no longer have to be written to and read back from memory between separate operators. Winning Health has optimised the algorithms in these procedures using Intel Extension for PyTorch, which has effectively increased performance. Intel Extension for PyTorch is a plug-in that enhances PyTorch performance on servers based on Intel Xeon Scalable processors and Intel Iris Xe graphics by using acceleration libraries such as oneDNN and oneCCL.
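The idea behind operator fusion can be illustrated in plain Python. This is a conceptual sketch only: it is not how Intel Extension for PyTorch implements fusion (which operates on the framework's computation graph and compiled kernels), and the scale, bias, and ReLU chain is an assumed example rather than an operator sequence taken from WiNGPT.

```python
def unfused(xs, scale, bias):
    """Three separate operators; each writes a full intermediate list."""
    scaled = [x * scale for x in xs]       # operator 1: read xs, write scaled
    shifted = [s + bias for s in scaled]   # operator 2: read scaled, write shifted
    return [max(0.0, v) for v in shifted]  # operator 3: read shifted, write output


def fused(xs, scale, bias):
    """One fused operator; intermediate values are never stored in a list."""
    return [max(0.0, x * scale + bias) for x in xs]


def memory_traffic(n, num_passes):
    """Element reads plus writes when each pass reads n values and writes n values."""
    return 2 * n * num_passes


if __name__ == "__main__":
    data = [-1.5, 0.0, 0.25, 2.0]
    print(unfused(data, 2.0, -1.0) == fused(data, 2.0, -1.0))
    print("unfused traffic:", memory_traffic(len(data), 3))
    print("fused traffic:  ", memory_traffic(len(data), 1))
```

Both versions produce the same result, but the fused one makes a single pass over the data instead of three, which is where the memory saving comes from.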

Weight-only quantization: This optimisation is designed specifically for LLMs. Provided that computational accuracy is preserved, the parameter weights are converted to the INT8 data type for storage and converted back to half precision for computation. This reduces the memory used for model inference and speeds up the overall computation.
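A minimal sketch of weight-only quantization follows, assuming symmetric per-row INT8 quantization with one scale per output row. The source does not specify the exact scheme used for WiNGPT, so the function names and the scaling choice here are illustrative. Half precision is emulated with the standard library's `struct` half-float format so the example runs without third-party packages.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def quantize_row(row):
    """Symmetric per-row INT8 quantization: returns (int8 values, scale)."""
    max_abs = max(abs(w) for w in row)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in row]
    return q, scale


def dequantize_row(q, scale):
    """Convert INT8 values back to half precision just before computing."""
    return [to_fp16(v * scale) for v in q]


def linear(weights_q, scales, x):
    """y = W x, with W stored as INT8 rows and dequantised on the fly."""
    out = []
    for q, scale in zip(weights_q, scales):
        row = dequantize_row(q, scale)
        out.append(sum(w * xi for w, xi in zip(row, x)))
    return out


if __name__ == "__main__":
    weights = [[0.12, -0.50, 0.33, 0.07], [1.20, 0.04, -0.88, 0.41]]
    x = [1.0, 2.0, -1.0, 0.5]
    packed = [quantize_row(r) for r in weights]
    weights_q = [q for q, _ in packed]
    scales = [s for _, s in packed]
    exact = [sum(w * xi for w, xi in zip(r, x)) for r in weights]
    print("exact :", exact)
    print("approx:", linear(weights_q, scales, x))
```

Only the stored weights are narrowed to 8 bits; activations and arithmetic stay in higher precision, which is why the approach tends to preserve accuracy while halving weight memory relative to half-precision storage.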

By working together to improve memory utilisation, Winning Health and Intel have enhanced WiNGPT’s inference performance. The two have also worked together to optimise PyTorch’s key operator algorithms on CPU platforms, further accelerating inference in the deep learning framework.

In a test-based validation environment, inference with the LLaMA2 model reached 52 ms/token. A single automated medical report is generated in less than three seconds.
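The two figures above can be related with simple arithmetic. The sketch below assumes that generation time is dominated by per-token latency and ignores prompt processing and other overheads, so the token count is an upper bound implied by the numbers rather than a reported report length.

```python
MS_PER_TOKEN = 52  # per-token latency reported in the test
BUDGET_MS = 3_000  # "less than three seconds" per report


def tokens_per_second(ms_per_token: float) -> float:
    """Generation throughput implied by a per-token latency."""
    return 1000.0 / ms_per_token


def max_tokens_within(budget_ms: int, ms_per_token: int) -> int:
    """Largest whole number of tokens that fits in the time budget."""
    return budget_ms // ms_per_token


if __name__ == "__main__":
    print(f"throughput: {tokens_per_second(MS_PER_TOKEN):.2f} tokens/s")
    print(f"tokens within budget: {max_tokens_within(BUDGET_MS, MS_PER_TOKEN)}")
```

At 52 ms/token the model produces roughly 19 tokens per second, so a three-second budget accommodates at most 57 generated tokens under these assumptions.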

During the test, Winning Health also compared the performance of the solutions based on 3rd Gen and 5th Gen Intel Xeon Scalable processors. According to the results, the 5th Gen-based solution delivers nearly three times the performance of the 3rd Gen-based one.

Because the business scenarios in which WiNGPT is used are relatively tolerant of LLM latency, the robust performance of 5th Gen Intel Xeon Scalable processors is sufficient to meet customer expectations. Meanwhile, the CPU-based approach can readily be scaled out with additional inference instances and adapted to run inference on different platforms.

Advantages

The WiNGPT solution based on 5th Gen Intel Xeon Scalable processors has benefited healthcare facilities in the following ways:

- Enhanced application experience and optimised LLM performance: Thanks to technical optimisations by both parties, the solution takes full advantage of the AI performance of 5th Gen Intel Xeon Scalable processors. It meets the performance requirements for model inference in scenarios such as medical report generation, reducing generation time while ensuring a good user experience.

- Better economics with controlled platform construction costs: The solution can run inference on the general-purpose servers already in use in healthcare facilities, avoiding the need to add specialised inference servers. This lowers procurement, deployment, operation, maintenance, and energy costs.

- LLMs and other IT applications are well balanced: Because the solution uses CPUs for inference, healthcare organisations can divide CPU resources between LLM inference and other IT operations, making computing power allocation more agile and flexible as needs change.

Looking Ahead

Combined with WiNGPT, 5th Gen Intel Xeon Scalable processors deliver exceptional inference speed, which makes LLM deployment easier and less expensive. Both parties will keep refining their work on LLMs so that more users can access and benefit from Winning Health’s latest AI technologies.