VESSL AI

Although AI can provide competitive advantages, realising its business value remains difficult. Organisations still struggle to advance AI initiatives beyond the trial stage; estimates from the past few years suggest that over half of machine learning (ML) pilots never make it to production.

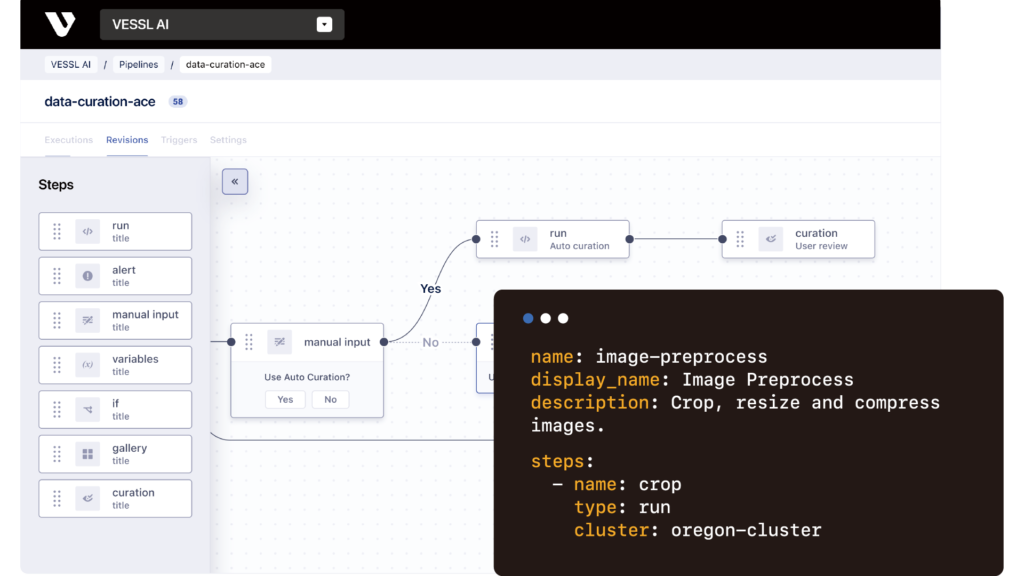

ML systems carry a great deal of hidden technical debt. ML code represents only a small portion of the processes, components, and infrastructure needed to run ML systems in the real world. To meet the growing needs of AI-driven businesses, today’s ML teams must improve their ML operations through streamlined, effective workflows.

To bridge the gap between proof-of-concept and production, VESSL AI offers comprehensive tools, fully managed infrastructure, and an end-to-end MLOps platform. VESSL AI is committed to helping organisations grow and accelerate their AI initiatives.

Google Cloud: The impetus for VESSL AI’s development

VESSL AI selected Google Cloud as its partner because it saw early on the transformative potential of cloud computing and services to improve its MLOps platform.

VESSL AI’s ability to deploy solutions smoothly is made possible by Google Cloud’s extensive resources, which ensure that its customers can keep operating and grow as needed. By using Google Cloud services, including Compute Engine and Google Kubernetes Engine (GKE), VESSL AI offers an architecture that scales with model requirements so that customers can make the best use of their resources. Google Cloud’s vast network and infrastructure also allow VESSL AI to provide its solutions to businesses all over the world.

For instance, building a reliable infrastructure that could quickly source a variety of GPUs for running large language models (LLMs) and generative AI models was a challenge for many generative AI companies, including VESSL AI itself. In response, VESSL AI developed VESSL Run, a single interface that can run different kinds of ML workloads on different GPU setups. The team built resilient computing clusters with GKE so that it can scale ML workloads on Kubernetes dynamically. With this configuration, VESSL AI can manage the whole ML lifecycle, from training to deployment. Furthermore, Spot GPUs help the platform maximise compute efficiency, cutting costs dramatically without compromising functionality.
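To make the idea of running a GPU workload on GKE Spot capacity more concrete, here is a minimal sketch in Python that builds a Kubernetes Pod manifest targeting Spot GPU nodes. It is an illustration only, not VESSL AI’s actual configuration: the function name `build_spot_gpu_pod`, the container image, and the default GPU type are hypothetical, while the node labels `cloud.google.com/gke-spot` and `cloud.google.com/gke-accelerator` and the `nvidia.com/gpu` resource name are the ones GKE uses for Spot nodes and GPU node pools.

```python
import json


def build_spot_gpu_pod(name, image, gpu_type="nvidia-tesla-t4", gpu_count=1):
    """Return a Pod manifest (as a dict) that asks GKE for Spot GPU capacity.

    All argument values are illustrative assumptions, not VESSL AI settings.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Spot nodes can be reclaimed at any time, so do not restart in place;
            # a higher-level controller (e.g. a Job) would normally handle retries.
            "restartPolicy": "Never",
            "nodeSelector": {
                # Schedule only onto GKE Spot nodes.
                "cloud.google.com/gke-spot": "true",
                # Schedule only onto nodes with the requested accelerator type.
                "cloud.google.com/gke-accelerator": gpu_type,
            },
            "tolerations": [
                {
                    # Only relevant if the Spot node pool has been tainted this way.
                    "key": "cloud.google.com/gke-spot",
                    "operator": "Equal",
                    "value": "true",
                    "effect": "NoSchedule",
                }
            ],
            "containers": [
                {
                    "name": "trainer",
                    "image": image,
                    # GPUs are requested through resource limits.
                    "resources": {"limits": {"nvidia.com/gpu": gpu_count}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical image name; JSON output is also valid input for `kubectl apply -f`.
    pod = build_spot_gpu_pod("llm-finetune", "example.com/train:latest", gpu_count=2)
    print(json.dumps(pod, indent=2))
```

Because Spot capacity can be preempted, a workload launched this way should checkpoint regularly so that it can resume on a new node.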

Since data is the primary commercial asset of AI teams and organisations, data security is equally crucial. Thanks to Google Cloud’s stringent security and compliance measures, VESSL AI can assure its users that it adheres to the strictest standards. For example, VESSL AI Artefacts uses fine-grained access control on Google Cloud to store models, datasets, and other important artefacts securely. To ensure that teams can always access their data and workloads, VESSL AI uses Filestore for NFS and Cloud Storage for all of its ML datasets and models. As a result, VESSL AI can ensure that data is accurate, consistent, and secure, as well as available throughout the whole ML lifecycle.

Using (ever more) powerful AI

To greatly extend its capabilities, VESSL AI has recently integrated with Vertex AI. It can now enhance its existing MLOps components with Vertex AI, enabling customers to use robust models and AutoML solutions from Google, and to label datasets and train models with less manual effort and expertise, leading to even more efficient ML workflows. With this integration, VESSL AI can provide its clients with a more complete and effective solution that better meets the changing demands of AI model development.

By using the VESSL AI platform, numerous organisations are already reaping transformative benefits that save them money and time. With VESSL AI on Google Cloud, teams can now build AI models up to four times faster, saving hundreds of hours from ideation to deployment, and achieve savings of up to 80% on cloud expenses.

Furthermore, collaborating closely with Google Cloud’s team of professionals has allowed VESSL AI to fully grasp everything that Google Cloud has to offer and to build a mutually beneficial partnership.

Considering the future

Even though VESSL AI has already had a major impact on the MLOps scene, it is still early in its journey. Building on strong enterprise success stories, which culminated in the release of VESSL AI’s public beta in 2023, VESSL AI is now preparing for a future where ML teams can easily scale their ML workflows from training to model deployment by integrating several third-party cloud platforms.

Furthermore, being part of the Google for Startups Accelerator programme offers VESSL AI a unique chance to broaden its horizons. The programme gives entrepreneurs access to a wealth of resources, including mentorship and potential investment. It acts as a catalyst that helps them grow quickly, enter international markets, and improve their products. It also creates opportunities for collaboration with other leading players in the sector and provides a strong environment in which to flourish.

Industries are being revolutionised by the combination of AI and operations. VESSL AI believes that working with Google Cloud will help it stay at the forefront of this change and, ideally, serve as a model for other businesses hoping to use the cloud to accelerate growth in the emerging field of generative AI. Google Cloud’s innovation partners have access to state-of-the-art technology as well as a network that can greatly expand the scope and potential of their business.