What is pgvector

pgvector is an open-source extension for PostgreSQL that lets you work with vectors inside the database. This means you can use PostgreSQL to store, search, and analyse vector data alongside structured data.

Key points to know about pgvector:

Vector Similarity Search

Enabling vector similarity search is the primary purpose of pgvector. This is useful for tasks such as finding related items or recommending products based on user behaviour or content. pgvector supports both exact and approximate nearest-neighbour search.
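To make the idea of an exact search concrete, here is a minimal Python sketch of a brute-force nearest-neighbour lookup. It is not pgvector's implementation; the `catalogue` data and the function names are hypothetical. It only illustrates what an exact search computes: the distance from the query to every stored vector, followed by a top-k selection. Approximate search in pgvector relies on indexes (HNSW or IVFFlat) that skip most of these comparisons in exchange for some loss of recall.

```python
import heapq
import math


def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def exact_nearest(query, items, k=2):
    """Return the ids of the k items closest to `query`, nearest first.

    `items` maps an id to its vector. Every stored vector is compared
    with the query, which makes the result exact but costs O(n) per query.
    """
    return heapq.nsmallest(
        k, items, key=lambda item_id: l2_distance(query, items[item_id])
    )


# Hypothetical catalogue with 3-dimensional embeddings.
catalogue = {
    "shoes": [1.0, 0.0, 0.0],
    "boots": [0.9, 0.1, 0.0],
    "kettle": [0.0, 0.0, 1.0],
}

nearest = exact_nearest([1.0, 0.05, 0.0], catalogue, k=2)
```

Here `nearest` holds the two ids whose vectors lie closest to the query, which is the same ordering a SQL query sorted by distance with a `LIMIT` would return.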

Storing Embeddings

pgvector can also store vector embeddings, which are numerical representations of data points. Many machine learning tasks can make use of these embeddings.

Support for Various Vector Data Types

pgvector supports single-precision, half-precision, binary, and sparse vector data types.
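As a rough illustration of these types, the sketch below formats Python lists into the text forms that pgvector accepts for dense and sparse vectors, and shows a table definition using the `vector`, `halfvec`, `bit`, and `sparsevec` column types. The table and column names and the helper functions are assumptions made for this example only.

```python
def to_vector_literal(values):
    """Format a dense vector in pgvector's text form, e.g. '[1.0,2.0,3.0]'."""
    return "[" + ",".join(str(float(v)) for v in values) + "]"


def to_sparsevec_literal(values):
    """Format a mostly-zero vector as '{index:value,...}/dimensions'.

    Indices start at 1 and zero entries are omitted.
    """
    nonzero = [
        f"{index}:{float(value)}"
        for index, value in enumerate(values, start=1)
        if value != 0
    ]
    return "{" + ",".join(nonzero) + "}/" + str(len(values))


# Hypothetical table with one column per vector type.
CREATE_TABLE_SQL = """
CREATE TABLE items (
    id bigserial PRIMARY KEY,
    dense vector(3),      -- single-precision
    compact halfvec(3),   -- half-precision
    flags bit(3),         -- binary
    sparse sparsevec(5)   -- sparse
);
"""

dense_literal = to_vector_literal([1, 2, 3])
sparse_literal = to_sparsevec_literal([1, 0, 2, 0, 3])
```

The literals can be passed as ordinary string parameters and cast in SQL, for example with `::vector` or `::sparsevec`.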

Rich Functionality

pgvector offers a range of vector operations, such as element-wise addition and subtraction, distance functions (such as cosine distance), and indexes for faster searches.
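The distance functions can be written out in a few lines of plain Python. The sketch below mirrors four of pgvector's distance operators (`<->`, `<#>`, `<=>`, and `<+>`); the Python function names are hypothetical, and the code is meant to show the arithmetic rather than how the extension computes it internally.

```python
import math


def l2_distance(a, b):
    """Euclidean distance, the quantity behind the `<->` operator."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def negative_inner_product(a, b):
    """Negated dot product, the quantity behind `<#>`.

    The sign is flipped so that smaller values mean more similar vectors.
    """
    return -sum(x * y for x, y in zip(a, b))


def cosine_distance(a, b):
    """One minus cosine similarity, the quantity behind `<=>`."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for a zero vector")
    return 1.0 - sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)


def l1_distance(a, b):
    """Sum of absolute differences, the quantity behind `<+>`."""
    return sum(abs(x - y) for x, y in zip(a, b))


def add(a, b):
    """Element-wise addition, like `+` on two vectors."""
    return [x + y for x, y in zip(a, b)]
```

Note that cosine distance and cosine similarity are not the same number: a distance of 0 corresponds to a similarity of 1, so ordering by distance in ascending order returns the most similar rows first.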

PostgreSQL integration

Because pgvector is a PostgreSQL extension, it integrates seamlessly with PostgreSQL. This lets you use PostgreSQL’s existing infrastructure and features for your AI applications.

All things considered, pgvector is an effective tool for adding vector similarity search to your PostgreSQL database, which can benefit many applications in artificial intelligence and machine learning.

RAG Applications

In order to speed up your transition to production, Google Cloud is pleased to announce the release of a quickstart solution and reference architecture for Retrieval Augmented Generation (RAG) applications. This article will show you how to use Ray, LangChain, and Hugging Face to quickly deploy a full RAG application on Google Kubernetes Engine (GKE), along with Cloud SQL for PostgreSQL and pgvector.

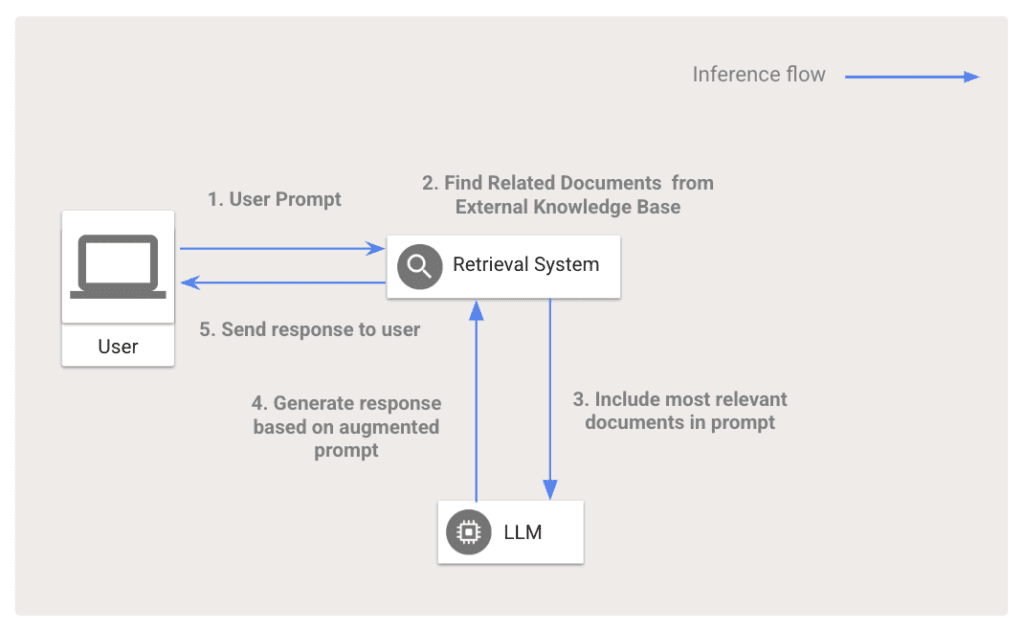

What is RAG

For a particular application, RAG can enhance the outputs of foundation models, such as large language models (LLMs). Instead of depending solely on knowledge acquired during training, AI apps with RAG support can retrieve the most pertinent information from an external knowledge source, add it to the user’s prompt, and then send it to the generative model. The knowledge base can include vector databases and relational databases. For example, digital shopping assistants can access product catalogues and customer reviews, customer service chatbots can look up help centre articles, and AI-powered travel agents can retrieve the most recent flight and hotel information.

LLMs rely on their training data, which can rapidly become outdated and may not contain information pertinent to the application’s domain. Retraining or fine-tuning an LLM to deliver new, domain-specific data can be a costly and difficult procedure. RAG not only provides the LLM with access to this data without the need for training or fine-tuning, but can also direct an LLM towards factual answers, minimising hallucinations and allowing applications to offer material that can be verified by a person.
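The retrieve, augment, and generate steps described above can be sketched in a few lines of Python. Everything here is a simplified assumption: `retrieve` ranks documents by shared words as a toy stand-in for vector search, `generate` is a placeholder where a real application would call an LLM, and the knowledge base entries are invented for illustration.

```python
def retrieve(question, knowledge_base, k=1):
    """Return the k documents that share the most words with the question.

    Word overlap is a toy stand-in for a vector similarity search.
    """
    question_words = set(question.lower().split())

    def overlap(document):
        return len(question_words & set(document.lower().split()))

    return sorted(knowledge_base, key=overlap, reverse=True)[:k]


def augment(question, context_documents):
    """Build a prompt that places the retrieved context before the question."""
    context = "\n".join(f"- {document}" for document in context_documents)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


def generate(prompt):
    """Placeholder for an LLM call; a real application would query a model here."""
    return f"[model response to a {len(prompt)}-character prompt]"


def answer(question, knowledge_base):
    """Run the full retrieve -> augment -> generate flow."""
    return generate(augment(question, retrieve(question, knowledge_base)))


# Hypothetical knowledge base for a travel assistant.
knowledge_base = [
    "Flight GC123 departs at 09:40 from gate B7.",
    "The hotel pool opens at 07:00.",
]
prompt = augment(
    "When does flight GC123 depart?",
    retrieve("When does flight GC123 depart?", knowledge_base),
)
```

Because the model only sees the retrieved context and the question, updating the knowledge base changes the answers without retraining the model.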

AI Framework for RAG

Before generative AI gained popularity, a typical application architecture consisted of a database, a collection of microservices, and a frontend. Even the most basic RAG applications introduce new requirements for serving LLMs and for processing and retrieving data. To meet these requirements, customers need infrastructure that is specifically optimised for AI workloads.

Many customers choose a fully managed platform, such as Vertex AI, to access AI infrastructure like TPUs and GPUs. Others prefer to run their own infrastructure on top of GKE using open-source frameworks and open models. This blog post is intended for the latter.

Building an AI platform from scratch involves many important decisions: which frameworks to use for model serving, which machine types to use for inference, how to secure sensitive data, how to meet performance and cost requirements, and how to scale as traffic increases. Each of these decisions has to be made against a vast and rapidly changing landscape of generative AI tools.

LangChain pgvector

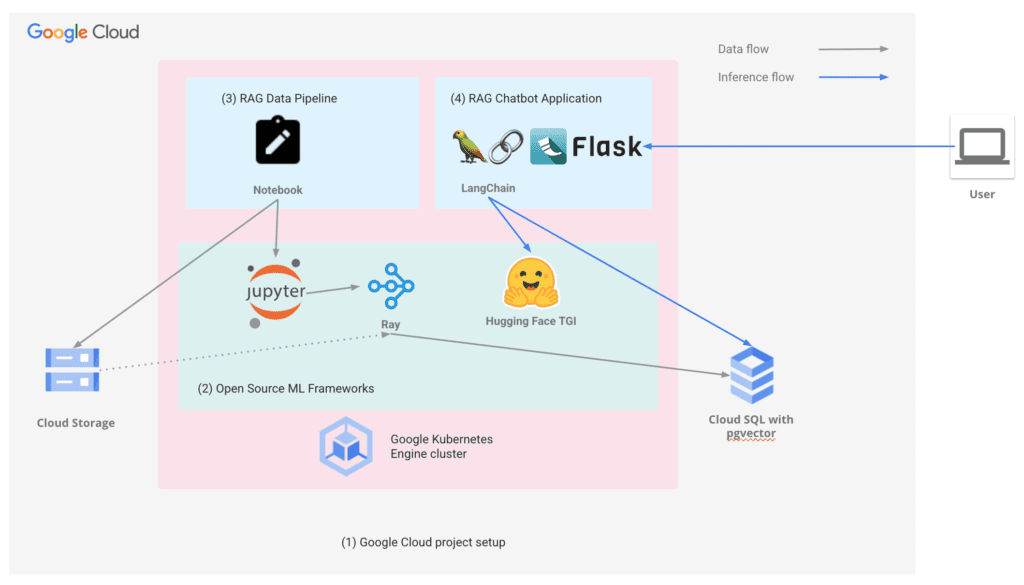

For RAG applications, Google Cloud has created a quickstart solution and reference architecture based on GKE, Cloud SQL, and the open-source frameworks Hugging Face, Ray, and LangChain. With RAG best practices integrated right from the start, the Google Cloud solution is made to help you get started quickly and accelerate your journey to production.

Advantages of GKE and Cloud SQL for RAG

GKE and Cloud SQL speed up your deployment in several ways:

Load Data Quickly

Use Ray Data to access data in parallel from your Ray cluster through GKE’s GCSFuse driver. Load your embeddings efficiently into Cloud SQL for PostgreSQL and pgvector for low-latency vector search at scale.

Fast deployment

Install Hugging Face Text Generation Inference (TGI), JupyterHub, and Ray on your GKE cluster quickly.

Simplified security

GKE provides ready-to-use Kubernetes security. Use Sensitive Data Protection (SDP) to filter out sensitive or toxic content. Use Identity-Aware Proxy to take advantage of Google’s standard authentication so that users can easily log in to your LLM frontend and Jupyter notebooks.

Cost effectiveness and lower management overhead

GKE lowers cluster maintenance and simplifies the use of cost-cutting strategies, such as spot nodes, through YAML configuration.

Scalability

As traffic increases, GKE automatically provisions nodes, removing the need for manual configuration to scale.

Pgvector Performance

The Google Cloud end-to-end RAG application and reference architecture provide the following:

Google Cloud project

The Google Cloud project setup provisions the prerequisites the RAG application needs to run, including a GKE cluster and a Cloud SQL for PostgreSQL and pgvector instance.

AI frameworks

Ray, JupyterHub, and Hugging Face TGI are deployed to GKE.

RAG Embedding Pipeline

The RAG Embedding Pipeline generates embeddings and loads the data into the Cloud SQL for PostgreSQL and pgvector instance.
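An embedding pipeline of this kind typically splits documents into chunks, embeds each chunk, and writes the chunk text and vector to the database. The sketch below shows that shape in plain Python under loud assumptions: `toy_embed` is a hash-based stand-in for a real embedding model, the `documents` table and its columns are hypothetical, and the `%s` placeholders assume a psycopg-style driver. It does not reproduce the pipeline shipped with the Google Cloud solution.

```python
import hashlib
import math


def chunk_text(text, max_words=5):
    """Split text into consecutive chunks of at most `max_words` words."""
    words = text.split()
    return [
        " ".join(words[start:start + max_words])
        for start in range(0, len(words), max_words)
    ]


def toy_embed(text, dimensions=8):
    """Hypothetical stand-in for an embedding model.

    Each word is hashed into one of `dimensions` buckets, then the
    vector is L2-normalised. A real pipeline would call a model instead.
    """
    vector = [0.0] * dimensions
    for word in text.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        vector[digest[0] % dimensions] += 1.0
    norm = math.sqrt(sum(value * value for value in vector))
    return [value / norm for value in vector] if norm else vector


def build_rows(text, max_words=5, dimensions=8):
    """Return (chunk, vector_literal) pairs ready to be inserted."""
    rows = []
    for chunk in chunk_text(text, max_words):
        values = toy_embed(chunk, dimensions)
        literal = "[" + ",".join(f"{value:.6f}" for value in values) + "]"
        rows.append((chunk, literal))
    return rows


# Assumed table: documents(content text, embedding vector(8)).
INSERT_SQL = "INSERT INTO documents (content, embedding) VALUES (%s, %s::vector)"

rows = build_rows("one two three four five six seven")
```

Each tuple in `rows` could then be passed together with `INSERT_SQL` to a database cursor, one execution per chunk or in a batch.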

Example RAG Chatbot Application

The example RAG chatbot application deploys a web-based RAG chatbot to GKE.

Pgvector Postgres

The example chatbot application provides a web interface through which users can interact with an open-source LLM. By drawing on the data that the RAG data pipeline loads into Cloud SQL for PostgreSQL with pgvector, the chatbot can give users more thorough and insightful answers to their queries.

The Google Cloud end-to-end RAG solution shows how this technology can be used for a variety of applications and provides a foundation for future development. By combining the strength of RAG with the scalability, flexibility, and security of GKE, Cloud SQL, and Google Cloud, developers can create robust and adaptable apps that handle complex processes and deliver useful insights.
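On the retrieval side, a chatbot like this needs a query that returns the stored chunks closest to the embedded user question. The following sketch builds such a query as a parameterised SQL string using pgvector's cosine distance operator `<=>`. The `documents` table, the `content` and `embedding` columns, and the psycopg-style `%s` placeholders are assumptions for illustration, not details taken from the example application.

```python
def build_retrieval_query(query_embedding, top_k=3, table="documents"):
    """Return (sql, params) selecting the `top_k` chunks nearest to the query.

    The table name is interpolated into the SQL text, so it is restricted
    to a plain identifier; the embedding and limit are passed as parameters.
    """
    if not table.isidentifier():
        raise ValueError("table must be a plain identifier")
    if top_k < 1:
        raise ValueError("top_k must be at least 1")
    sql = (
        f"SELECT content FROM {table} "
        "ORDER BY embedding <=> %s::vector "
        "LIMIT %s"
    )
    literal = "[" + ",".join(str(float(value)) for value in query_embedding) + "]"
    return sql, (literal, top_k)


sql, params = build_retrieval_query([0.1, 0.2], top_k=2)
```

With a driver that uses `%s` placeholders, the pair could be passed as `cursor.execute(sql, params)`, and the returned rows would supply the context that is added to the LLM prompt.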