What is reinforcement learning?

Reinforcement learning (RL) is a machine learning (ML) technique that teaches software to make decisions that produce the best results. It mimics the trial-and-error learning process that people use to achieve their goals. Software actions that advance your goal are reinforced, while actions that detract from it are ignored.

RL algorithms use a reward-and-punishment paradigm as they process data. They learn from the feedback on each action and discover for themselves the most efficient processing paths to reach the desired outcomes. The algorithms are also capable of delayed gratification. The best overall strategy may require short-term sacrifices, so the best approach they discover can include some penalties or backtracking along the way. RL is a powerful method for helping artificial intelligence (AI) systems achieve optimal outcomes in unseen environments.
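Delayed gratification can be made concrete with the discounted return, which is the sum of rewards with each one weighted by a discount factor gamma raised to the number of steps in the future. The minimal sketch below compares two hypothetical plans. The first accepts penalties before a large payoff, and the second takes a small reward right away. The reward values and both gamma settings are illustrative assumptions and do not come from any particular system.

```python
def discounted_return(rewards, gamma):
    """Sum of rewards, where the reward at step t is weighted by gamma**t."""
    total = 0.0
    for t, r in enumerate(rewards):
        total += (gamma ** t) * r
    return total

# Hypothetical plan A: accept small penalties now to reach a large reward later.
plan_a = [-1.0, -1.0, 10.0]
# Hypothetical plan B: take a small reward immediately, then nothing.
plan_b = [2.0, 0.0, 0.0]

for gamma in (0.9, 0.1):  # assumed discount factors: far-sighted vs. myopic
    a = round(discounted_return(plan_a, gamma), 2)
    b = round(discounted_return(plan_b, gamma), 2)
    print(f"gamma={gamma}: plan A={a}, plan B={b}")
# gamma=0.9: plan A=6.2, plan B=2.0
# gamma=0.1: plan A=-1.0, plan B=2.0
```

With a gamma close to 1, the agent values the later payoff enough to accept the temporary sacrifice. With a small gamma, it prefers the immediate reward.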

What are the benefits of reinforcement learning?

Reinforcement learning (RL) has many benefits, but three of them often stand out.

Excels in complex environments

RL algorithms can be used in complex environments with many rules and dependencies. In the same environment, a human may not be able to determine the best path to take, even with superior knowledge of the environment. Model-free RL algorithms, by contrast, adapt quickly to continuously changing environments and find new strategies to optimize results.

Requires less human interaction

In traditional supervised ML algorithms, humans must label data pairs to guide the algorithm. An RL algorithm removes this need because it learns by itself. At the same time, RL offers mechanisms to integrate human feedback, allowing systems to adapt to human preferences, expertise, and corrections.

Focuses on long-term objectives

Because RL focuses primarily on maximizing long-term rewards, it is well suited to scenarios where actions have prolonged consequences. Because it can learn from delayed rewards, it is particularly well suited to real-world situations where feedback is not available at every step.

For example, decisions about energy storage or consumption can have long-term consequences, and RL can be used to optimize long-term energy efficiency and cost. With appropriate architectures, RL agents can also apply their learned strategies to similar but not identical tasks.

What are the use cases of reinforcement learning?

Reinforcement learning (RL) has many real-world applications. Some examples follow.

Personalization in marketing

In applications such as recommendation systems, RL can tailor suggestions to individual users based on their interactions, which leads to more personalized experiences. For example, an application might display ads to a user based on some demographic information. With each ad interaction, the application learns which ads to show that user in order to maximize product sales.
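The ad example can be sketched as a multi-armed bandit. This is a simplified, single-state form of RL in which each ad is an action and a click is a reward of 1. The minimal sketch below assumes three hypothetical ads with made-up click probabilities that the agent never sees directly. The function name `run_ad_bandit` and its parameters are illustrative. An epsilon-greedy rule balances trying different ads (exploration) against showing the best ad found so far (exploitation). A real recommender would also use user context, which this sketch leaves out.

```python
import random

def run_ad_bandit(click_probs, steps=5_000, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit that learns which ad earns the most clicks.

    click_probs are hypothetical true click rates. The agent never reads them
    directly; they are used only to simulate whether a user clicks.
    """
    rng = random.Random(seed)
    n_ads = len(click_probs)
    counts = [0] * n_ads        # times each ad has been shown
    estimates = [0.0] * n_ads   # running average reward (observed click rate) per ad

    for _ in range(steps):
        if rng.random() < epsilon:
            ad = rng.randrange(n_ads)                           # explore: random ad
        else:
            ad = max(range(n_ads), key=lambda i: estimates[i])  # exploit: best so far
        reward = 1.0 if rng.random() < click_probs[ad] else 0.0  # simulated click
        counts[ad] += 1
        # Incremental mean: new_avg = old_avg + (reward - old_avg) / n
        estimates[ad] += (reward - estimates[ad]) / counts[ad]
    return estimates, counts

# Made-up click rates, exaggerated so the difference is easy to see.
estimates, counts = run_ad_bandit([0.1, 0.5, 0.2])
print("estimated click rates:", [round(e, 3) for e in estimates])
print("most-shown ad:", counts.index(max(counts)))
```

The incremental-mean update avoids storing every past reward. The fixed `epsilon` keeps a small share of impressions for exploration, so the agent can still notice if a different ad starts performing better.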

Optimization problems

Traditional optimization methods solve problems by evaluating and comparing possible solutions against predefined criteria. In contrast, RL learns from interactions to find the best or near-best solutions over time.

For example, a cloud spend optimization system can use RL to adapt to fluctuating resource needs and choose the best instance types, quantities, and configurations. It bases its decisions on factors such as spending, utilization, and the current state of the cloud infrastructure.

Financial predictions

Financial markets have complex dynamics, and their statistical properties change over time. RL algorithms can optimize long-term returns by taking transaction costs into account and adapting to market shifts.

For example, an algorithm could observe the rules and patterns of the stock market before it tests actions and records the associated rewards. It dynamically builds a value function and develops a strategy to maximize profits.

How does reinforcement learning work?

The learning process of reinforcement learning (RL) algorithms is similar to human and animal reinforcement learning as studied in behavioral psychology. For example, a child may discover that they receive praise from their parents when they help a sibling or clean up. The same child may also discover that they receive negative reactions when they throw toys or yell. Soon, the child learns which combination of actions leads to the end reward.

An RL algorithm mimics a similar learning process. To reach the end reward, it tries different actions and learns their associated positive and negative values.

Key concepts

In reinforcement learning, there are a few key concepts to become familiar with:

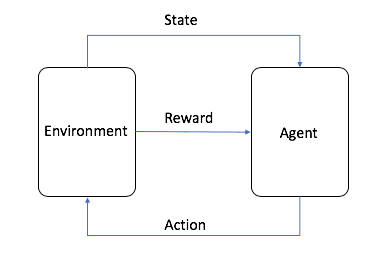

- The agent is the ML algorithm, also called the autonomous system.

- The environment is the adaptive problem space, with attributes such as variables, boundary values, rules, and valid actions.

- The action is a step that the RL agent takes to navigate the environment.

- The state is the environment at a given point in time.

- The reward is the value that results from taking an action; it can be positive, negative, or zero.

- The cumulative reward is the sum of all rewards, or the end value.
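These concepts fit together in a simple loop. The agent observes the state and chooses an action, and the environment then returns a reward and a new state. The minimal sketch below uses a hypothetical five-cell corridor environment. The `Corridor` class, its reward values, and the two example policies are illustrative assumptions. The sketch adds up the rewards from one episode to produce the cumulative reward. The `reset`/`step` shape is a common convention for environment interfaces; it is not a specific library's API.

```python
import random

class Corridor:
    """Hypothetical environment: cells 0..4, the agent starts at 0, the goal is cell 4.

    Valid actions are -1 (left) and +1 (right). Every step that does not reach
    the goal gives a reward of -1; reaching the goal gives +10 and ends the episode.
    """

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # Boundary values: the agent cannot move past either end of the corridor.
        self.state = min(max(self.state + action, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 10.0 if done else -1.0
        return self.state, reward, done

def run_episode(env, policy, max_steps=100):
    """Run one episode and return the cumulative reward."""
    state = env.reset()
    cumulative_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # the agent chooses an action
        state, reward, done = env.step(action)  # the environment returns reward and new state
        cumulative_reward += reward
        if done:
            break
    return cumulative_reward

rng = random.Random(42)

def random_policy(state):
    return rng.choice([-1, 1])

def always_right(state):
    return 1

env = Corridor()
print(run_episode(env, always_right))   # 3 steps at -1, then +10 at the goal: 7.0
print(run_episode(env, random_policy))  # varies with the seed; never more than 7.0
```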

Fundamentals of algorithms

Reinforcement learning is based on the Markov decision process (MDP), a mathematical model of decision-making that uses discrete time steps. At every step, the agent takes a new action that results in a new environment state. Similarly, the current state is the result of the sequence of previous actions. Under the Markov assumption, the current state captures everything about that history that matters for predicting what happens next.
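In standard notation, an MDP and the quantity the agent tries to maximize can be written as follows. The symbols are conventional textbook notation and do not come from this article. The discount factor γ weights later rewards less than earlier ones; it ranges from 0 to 1 and must be strictly below 1 if episodes never end.

```latex
\text{MDP: } (\mathcal{S}, \mathcal{A}, P, R, \gamma), \qquad
P(s' \mid s, a) = \Pr(S_{t+1} = s' \mid S_t = s,\ A_t = a)

\text{Markov property: } \Pr(S_{t+1} \mid S_t, A_t, S_{t-1}, A_{t-1}, \ldots, S_0, A_0) = \Pr(S_{t+1} \mid S_t, A_t)

\text{Cumulative (discounted) reward: } G_t = R_{t+1} + \gamma R_{t+2} + \gamma^2 R_{t+3} + \cdots = \sum_{k=0}^{\infty} \gamma^k R_{t+k+1}

\text{Value of state } s \text{ under policy } \pi: \quad V^{\pi}(s) = \mathbb{E}_{\pi}\left[ G_t \mid S_t = s \right]
```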

Through trial and error while moving through the environment, the agent builds a set of if-then rules, or policies. The policies help it decide which action to take next to obtain the best cumulative reward. The agent must also choose between two options: exploring the environment further to learn new state-action rewards, or selecting known high-reward actions from a given state. This is called the exploration-exploitation trade-off.

What are the types of reinforcement learning algorithms?

Reinforcement learning (RL) methods include Monte Carlo methods, Q-learning, temporal difference (TD) learning, and policy gradient methods. Using deep neural networks for reinforcement learning is known as "deep RL." Trust Region Policy Optimization (TRPO) is one example of a deep RL method.
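Two of the methods named above, Monte Carlo and temporal difference (TD) learning, differ mainly in when they update a value estimate. Monte Carlo waits until the episode ends and moves each visited state's value toward the full observed return. TD(0) updates after every step, using the immediate reward plus the current estimate for the next state. The minimal sketch below applies both to one hypothetical recorded episode. The state names, rewards, step size `alpha`, and discount `gamma` are illustrative assumptions.

```python
def monte_carlo_update(values, episode, alpha=0.5, gamma=1.0):
    """Every-visit Monte Carlo: move each visited state toward its full return.

    episode is a list of (state, reward) pairs, where reward is the one
    received after leaving that state.
    """
    g = 0.0
    for state, reward in reversed(episode):
        g = reward + gamma * g  # return from this state to the end of the episode
        values[state] += alpha * (g - values[state])
    return values

def td0_update(values, episode, terminal, alpha=0.5, gamma=1.0):
    """TD(0): after each step, move toward reward + gamma * V(next state)."""
    for i, (state, reward) in enumerate(episode):
        next_state = episode[i + 1][0] if i + 1 < len(episode) else terminal
        target = reward + gamma * values[next_state]
        values[state] += alpha * (target - values[state])
    return values

# Hypothetical episode: A -> B -> C -> end, with a reward only on the last step.
episode = [("A", 0.0), ("B", 0.0), ("C", 1.0)]
start = {"A": 0.0, "B": 0.0, "C": 0.0, "end": 0.0}

print(monte_carlo_update(dict(start), episode))
# {'A': 0.5, 'B': 0.5, 'C': 0.5, 'end': 0.0}
print(td0_update(dict(start), episode, terminal="end"))
# {'A': 0.0, 'B': 0.0, 'C': 0.5, 'end': 0.0}
```

After a single episode, Monte Carlo has credited every visited state with the final reward. TD(0) has so far updated only the state just before the reward; over later episodes, the value propagates backward one step at a time.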


All of these algorithms can be grouped into two broad categories.

Model-based RL

Model-based RL is typically used when environments are well defined and unchanging, and when testing in real-world environments is difficult.

The agent first builds an internal representation, or model, of the environment. It uses the following process to build this model:

- It takes actions within the environment and notes the new state and the reward value.

- It associates the action-state transition with the reward value.

Once the model is complete, the agent simulates action sequences based on the probability of optimal cumulative rewards. It then assigns values to the action sequences themselves. In this way, the agent develops different strategies within the environment to achieve the desired end goal.
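The two phases above can be sketched for a small, deterministic environment. In this minimal example, the `step` function, the corridor layout, and the rewards are hypothetical. The agent first acts in the environment and records each observed transition and reward in a table, which serves as its internal model. It then plans only against that model, using value iteration. Value iteration is a standard dynamic-programming method, chosen here as one concrete way to evaluate action sequences; the article does not prescribe a specific planning algorithm.

```python
import random

GOAL = 4
ACTIONS = (-1, 1)  # move left, move right

def step(state, action):
    """Hypothetical real environment: a corridor of cells 0..4, goal at 4."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 10.0 if next_state == GOAL else -1.0
    return next_state, reward

def learn_model(n_samples=500, seed=0):
    """Phase 1: act in the environment and record (state, action) -> (next, reward)."""
    rng = random.Random(seed)
    model = {}
    for _ in range(n_samples):
        s = rng.randrange(GOAL)     # non-goal states 0..3
        a = rng.choice(ACTIONS)
        model[(s, a)] = step(s, a)  # deterministic, so one observation per pair suffices
    return model

def plan(model, gamma=0.9, sweeps=50):
    """Phase 2: value iteration that consults only the learned model."""
    values = {s: 0.0 for s in range(GOAL + 1)}  # goal value stays 0 (terminal)
    for _ in range(sweeps):
        for s in range(GOAL):
            values[s] = max(r + gamma * values[s2]
                            for (st, _a), (s2, r) in model.items() if st == s)
    # Greedy policy: in each state, pick the action with the best one-step lookahead.
    policy = {s: max(ACTIONS, key=lambda a: model[(s, a)][1]
                     + gamma * values[model[(s, a)][0]])
              for s in range(GOAL)}
    return values, policy

model = learn_model()
values, policy = plan(model)
print(policy)  # {0: 1, 1: 1, 2: 1, 3: 1}, i.e. move right from every non-goal cell
```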

Example

Consider a robot learning to navigate a new building to reach a specific room. Initially, the robot explores freely and builds an internal model, or map, of the building. For example, it might learn that it reaches an elevator after moving 10 meters forward from the main entrance. Once it has built the map, it can work out the shortest paths between the locations it visits most often in the building.

Model-free RL

Model-free RL works best when the environment is large, complex, and not easily described. It is also ideal when the environment is unknown and changing, and when testing in the environment itself has no significant downsides.

The agent doesn't build an internal model of the environment and its dynamics. Instead, it uses a trial-and-error approach within the environment. It scores and records state-action pairs, and sequences of state-action pairs, to develop a policy.
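Q-learning is one common model-free method that fits this description. It keeps a score for every state-action pair in a table and updates those scores directly from experienced transitions, without ever building a model of the environment. The minimal sketch below uses a hypothetical corridor environment with cells 0 to 4, a goal at cell 4, a reward of -1 per step, and +10 at the goal. The helper `env_step` and the hyperparameters `alpha`, `gamma`, and `epsilon` are illustrative choices, not tuned values.

```python
import random

GOAL = 4
ACTIONS = (-1, 1)  # move left, move right

def env_step(state, action):
    """Hypothetical environment; the agent only sees its outputs, never this code."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 10.0 if next_state == GOAL else -1.0
    return next_state, reward, next_state == GOAL

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(GOAL + 1) for a in ACTIONS}  # state-action scores
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually take the best-scored action, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward, done = env_step(state, action)
            # Target: reward plus the discounted best score in the next state
            # (no future value once the goal is reached).
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q

q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}
print(policy)  # {0: 1, 1: 1, 2: 1, 3: 1}
```

The policy is read directly from the table by picking the highest-scored action in each state. This is the "rates and records state-action pairs" idea in its simplest form. Deep RL methods replace the table with a neural network when there are too many states to list.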

Example

Consider a self-driving car that needs to navigate city traffic. Roads, traffic patterns, pedestrian behavior, and countless other factors can make the environment highly dynamic and complex. AI teams train the vehicle in a simulated environment during the early stages. The vehicle takes actions based on its current state and receives rewards or penalties.

Over time, by driving millions of miles in different virtual scenarios, the vehicle learns which actions are best for each state without explicitly modeling all of the traffic dynamics. When it is first deployed in the real world, the vehicle uses the learned policy but continues to refine it with new data.

What is the difference between reinforcement, supervised, and unsupervised machine learning?

Supervised learning, unsupervised learning, and reinforcement learning (RL) are all ML methods in the field of AI, but they differ in important ways.

Comparing supervised and reinforcement learning

In supervised learning, you define both the input and the expected associated output. For example, if you provide a set of images labeled “dogs” or “cats,” the algorithm is then expected to identify a new animal image as either a dog or a cat.

Supervised learning algorithms learn patterns and relationships between input and output pairs. They then predict outcomes for new input data. This requires a supervisor, typically a human, to label each data record in the training data set with an output.

In contrast, RL has a well-defined end goal in the form of a desired result, but it has no supervisor to label associated data in advance. During training, instead of trying to map inputs to known outputs, it maps inputs to possible outcomes. By rewarding desired behaviors, you give more weight to the best outcomes.

Reinforcement vs. unsupervised learning

Unsupervised learning algorithms receive inputs with no specified outputs during training. They use statistical methods to find hidden patterns and relationships in the data. For example, if you provide a set of documents, the algorithm might group them into categories based on the words it identifies in the text. You don't get specific outcomes; the results fall within a range.

Conversely, RL has a predetermined end goal. Although it takes an exploratory approach, its explorations are continuously validated and improved to increase the probability of reaching that goal. It can teach itself to reach very specific outcomes.

What are the challenges with reinforcement learning?

Although reinforcement learning (RL) applications have the potential to change the world, deploying these algorithms may not be easy.

Practicality

Experimenting with real-world reward and punishment systems may not be practical. For example, testing a drone in the real world without first testing it in a simulator would likely result in many broken aircraft. Real-world environments also change often, significantly, and with little warning, which can make the algorithm less effective in practice.

Interpretability

Like any scientific field, data science relies on conclusive research and findings to establish standards and procedures. For provability and replication, data scientists prefer to know how a specific result was reached.

With complex RL algorithms, it can be difficult to determine why a particular sequence of steps was taken. Which actions in the sequence led to the optimal end result? This can be hard to deduce, which makes implementation more challenging.