How to Use oneTBB in C++ Applications for Effective Memory Allocation

Introduction

Dynamic memory allocation must be taken into account when developing and optimizing any software application. Parallel applications make this harder because of their demanding requirements, which include:

- Moderate memory consumption

- Fast memory allocation and deallocation across threads

- Keeping allocated memory hot in the CPU cache

- Staying within cache-associativity limits

- Avoiding thread contention and false sharing

The efficiency of various memory managers varies depending on the application.

This blog post covers one such memory allocator, provided by the Intel oneAPI Threading Building Blocks (oneTBB) library.

The oneTBB approach preserves and extends the API of the Standard Template Library (STL) template class std::allocator. It introduces two similar templates, scalable_allocator and cache_aligned_allocator, to address the parallel-programming challenges listed above.

Overview of oneTBB

oneTBB is a flexible and efficient threading library that simplifies parallel C++ programming on accelerated hardware. It can handle several tedious tasks, including memory allocation, task scheduling, and adding parallelism to complex loop structures. It supports Windows, Linux, macOS, and Android applications.

General oneTBB memory allocator architecture

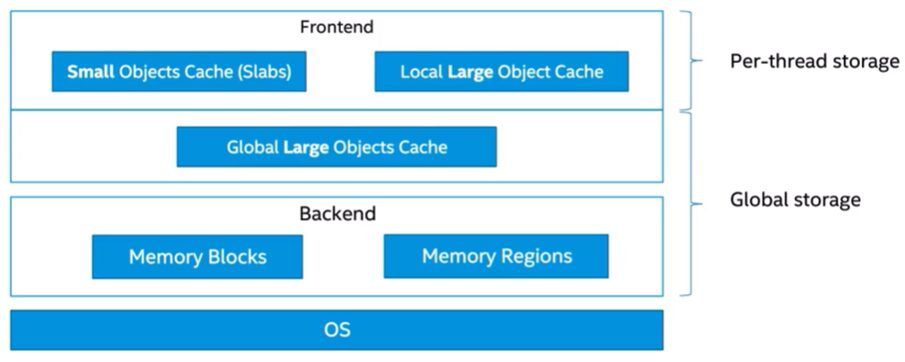

The general design of the oneTBB memory allocator consists of two distinct layers, the frontend and the backend (Figure 1).

The frontend serves as a caching layer with two storage types: a per-thread heap and a global heap. It requests memory from the backend. The backend minimizes the number of costly calls to the OS while meeting the demands of the frontend and preventing significant fragmentation.

The oneTBB memory allocator distinguishes small objects (<= 8 KB) from large objects (> 8 KB); each kind has its own structure and allocation strategy. Let’s examine how the frontend and backend handle memory allocation for these objects.

Frontend: Handling small objects

The oneTBB allocator uses a thread-private heap to improve scalability, reduce false sharing, and reduce the amount of code that requires synchronization. Each thread allocates its own copy of the heap structure:

- Thread-local storage (TLS) is used mainly for small objects, but it can also hold a reasonably sized pool of large objects for the same reason, that is, to reduce synchronization.

- The segregated small-object heap uses distinct storage bins to allocate objects of different sizes. A memory request is rounded up to the nearest object size. This improves locality for objects of similar size that are often used together.
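
The rounding step can be illustrated with a minimal sketch. The size classes below (multiples of 8 bytes up to 64, then powers of two) and the names kSmallObjectLimit and round_up_to_size_class are assumptions made for illustration; the actual oneTBB bin sizes are different.

```cpp
#include <cstddef>

// Hypothetical size classes, for illustration only; oneTBB uses its own table.
constexpr std::size_t kSmallObjectLimit = 8 * 1024;  // "small" means <= 8 KB

// Round a request up to the nearest size class:
// multiples of 8 bytes up to 64, then powers of two up to the limit.
// Returns 0 when the request is not a small object.
constexpr std::size_t round_up_to_size_class(std::size_t bytes) {
    if (bytes == 0) bytes = 1;
    if (bytes > kSmallObjectLimit) return 0;
    if (bytes <= 64) return (bytes + 7) / 8 * 8;
    std::size_t size_class = 128;
    while (size_class < bytes) size_class *= 2;
    return size_class;
}
```

With these assumed classes, a 13-byte request and a 16-byte request land in the same 16-byte bin, which is what keeps similarly sized objects close together.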

Bin storage is a widely used technique, and the oneTBB allocator uses it too. A bin holds objects of one specific size only and is organized as a doubly linked list of so-called slabs. For a given object size, at most one active slab serves allocation requests at any time. When the active slab has no more free objects, the bin switches to the next partially empty slab (if available) or to a fully empty slab. If none is available, a new slab is acquired. A slab is returned to the backend when all of its objects have been freed.

Slab data structure

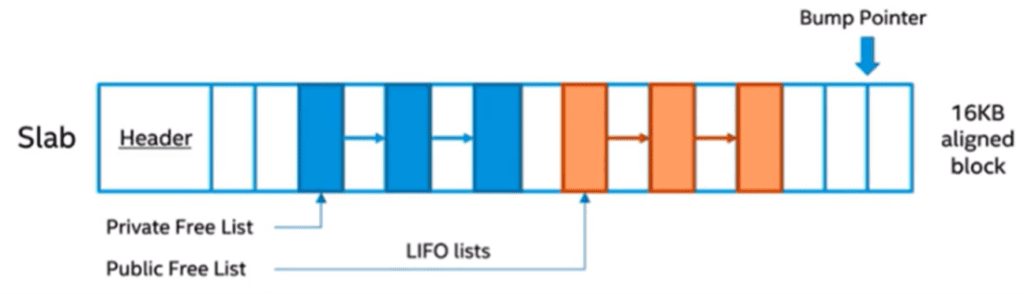

Let’s look at the slab data structure in more detail, including how it stores small objects (Figure 2).

Each slab block is a 16 KB aligned structure that holds objects of one size. It is a free-list allocator: it links unallocated memory regions into a Last-In First-Out (LIFO) linked list, which makes malloc and free operations very simple.

- To free an object, the library only needs to link it to the top of the list.

- To allocate an object, it only needs to remove one from the top of the list and use it.
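
The two operations above can be sketched as an intrusive LIFO free list. This is a simplified illustration, not the oneTBB implementation; the class name FreeList is hypothetical, and the sketch assumes every object is at least pointer-sized and suitably aligned.

```cpp
#include <new>

// Intrusive LIFO free list: each free object stores the link to the next one
// in its own unused memory, so the list needs no extra storage.
class FreeList {
    struct Node { Node* next; };
    Node* top_ = nullptr;
public:
    // Release: link the object onto the top of the list.
    void push(void* object) {
        top_ = new (object) Node{top_};
    }
    // Allocate: take the object from the top of the list (nullptr if empty).
    void* pop() {
        Node* node = top_;
        if (node) top_ = node->next;
        return node;
    }
};
```

Because the most recently freed object is handed out first, the memory an allocation receives is likely to still be in the CPU cache.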

A foreign thread may interfere with the owner thread’s operations through cross-thread deallocation and slow it down. To prevent that, objects returned by the owner and by foreign threads go to two separate free lists: private and public, respectively.

When the private free list cannot satisfy a memory request, the public free list is merged into the private one. This is a slow operation because the merge must acquire a lock, so it is best to avoid such situations.
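
A minimal sketch of the two-list scheme follows. The names (SlabFreeLists, free_by_owner, free_by_foreign_thread) are hypothetical and a std::mutex stands in for whatever lock the library uses internally; the point is only that the owner's fast path takes no lock.

```cpp
#include <mutex>
#include <new>

struct FreeNode { FreeNode* next; };

// Sketch of one slab's two free lists; not the oneTBB data structure.
class SlabFreeLists {
    FreeNode* private_top_ = nullptr;  // touched only by the owner thread
    FreeNode* public_top_ = nullptr;   // filled by foreign threads
    std::mutex public_lock_;           // guards public_top_ only
public:
    // Called by the owner thread: no lock needed.
    void free_by_owner(void* object) {
        private_top_ = new (object) FreeNode{private_top_};
    }
    // Called by any other thread: goes to the public list under the lock.
    void free_by_foreign_thread(void* object) {
        std::lock_guard<std::mutex> guard(public_lock_);
        public_top_ = new (object) FreeNode{public_top_};
    }
    // Called by the owner thread. The lock is taken only on the slow path,
    // when the private list is empty and the public list must be merged in.
    void* allocate() {
        if (!private_top_) {
            std::lock_guard<std::mutex> guard(public_lock_);
            private_top_ = public_top_;
            public_top_ = nullptr;
        }
        FreeNode* node = private_top_;
        if (node) private_top_ = node->next;
        return node;
    }
};
```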

Frontend: Handling large objects

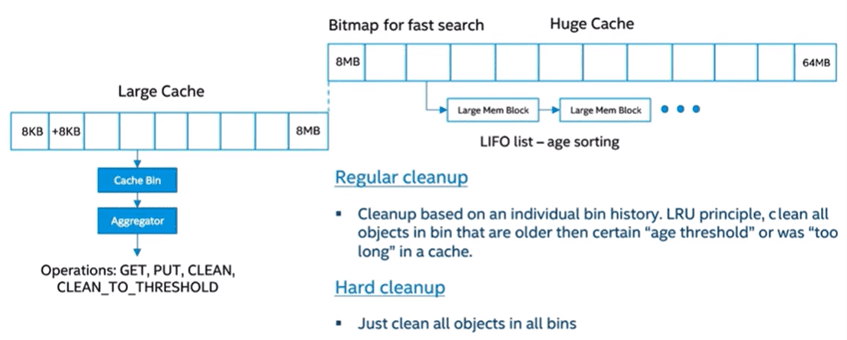

Large objects are stored mostly in the global heap, which uses distinct storage bins to allocate objects of different sizes. It is segregated in the same way as the small-object storage. This makes it possible to work with many bins in parallel. Parallel access to the same bin, however, is protected by an internal mutual exclusion primitive.

There are two kinds of cache, large and huge, which differ only in the number of bins they use to control internal fragmentation and the size of the allocated data structure. Each bin holds a LIFO list of cached objects so that the object that is hot in cache stays at the top. The cache is bounded by default, and the library cleans it periodically to keep memory use reasonable.
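
As a rough illustration, one bin of such a cache can be modeled as a bounded LIFO stack guarded by a mutex. The class name LargeObjectBin and the fixed max_cached limit are assumptions for this sketch; the real cache uses its own limits and cleanup policy.

```cpp
#include <cstddef>
#include <mutex>
#include <vector>

// One bin of a large-object cache: a bounded LIFO stack of freed blocks of
// one size class. Hypothetical structure, for illustration only.
class LargeObjectBin {
    std::vector<void*> cached_;  // back() is the most recently freed block
    std::mutex lock_;            // protects parallel access to this bin
    std::size_t max_cached_;
public:
    explicit LargeObjectBin(std::size_t max_cached) : max_cached_(max_cached) {}

    // Try to cache a freed block. Returns false when the bin is full, in
    // which case the caller would hand the block back to the backend.
    bool put(void* block) {
        std::lock_guard<std::mutex> guard(lock_);
        if (cached_.size() >= max_cached_) return false;
        cached_.push_back(block);
        return true;
    }
    // Reuse the most recently freed block, or return nullptr if none is cached.
    void* get() {
        std::lock_guard<std::mutex> guard(lock_);
        if (cached_.empty()) return nullptr;
        void* block = cached_.back();
        cached_.pop_back();
        return block;
    }
};
```

Each bin has its own lock, so threads working on different bins do not contend with one another.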

Backend: General framework

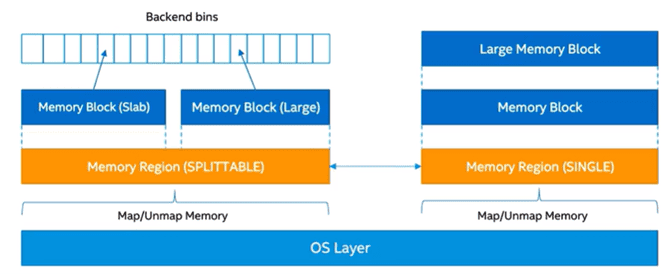

The backend aims to minimize the number of costly calls to the operating system while meeting the demands of the frontend and preventing severe fragmentation.

How is this accomplished? For every memory request, the backend maps a memory region larger than requested, with a granularity of at least one memory page. A block of the requested size, which can be a slab or a large object, is then split off from the region, as shown in Figure 4. The remaining block can be split again for a subsequent frontend request, and so on. Fragmentation is controlled by coalescing three blocks: the freed block itself, its left neighbor, and its right neighbor. Each memory block stores its size inside a lock.

This improves scalability, since a region and a block share no state and are not aware of one another. As a result, memory segments can be split and coalesced without using a cache. Especially large objects are mapped and unmapped directly from the operating system so that they are not split up. As shown in Figure 4, memory regions come in two kinds: splittable and single.
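
The split and coalesce steps can be sketched with a small bookkeeping class. This is only a model: it tracks free blocks of a single region in a std::map keyed by offset, whereas the real backend works on mapped memory and keeps block sizes in per-block locks. The names Region, split, and coalesce are hypothetical.

```cpp
#include <cstddef>
#include <iterator>
#include <map>

// Free blocks of one mapped region: key is the block offset, value its size.
class Region {
    std::map<std::size_t, std::size_t> free_blocks_;
public:
    static constexpr std::size_t npos = static_cast<std::size_t>(-1);

    explicit Region(std::size_t size) { free_blocks_[0] = size; }

    // Carve `size` bytes off the first free block that fits; the remainder
    // stays free for later requests. Returns the offset, or npos on failure.
    std::size_t split(std::size_t size) {
        for (auto it = free_blocks_.begin(); it != free_blocks_.end(); ++it) {
            if (it->second < size) continue;
            std::size_t offset = it->first;
            std::size_t remainder = it->second - size;
            free_blocks_.erase(it);
            if (remainder) free_blocks_[offset + size] = remainder;
            return offset;
        }
        return npos;
    }

    // Return a block and merge it with its free right and left neighbors.
    void coalesce(std::size_t offset, std::size_t size) {
        auto right = free_blocks_.lower_bound(offset);
        if (right != free_blocks_.end() && offset + size == right->first) {
            size += right->second;              // absorb the right neighbor
            right = free_blocks_.erase(right);
        }
        if (right != free_blocks_.begin()) {
            auto left = std::prev(right);
            if (left->first + left->second == offset) {
                left->second += size;           // grow the left neighbor
                return;
            }
        }
        free_blocks_[offset] = size;
    }

    std::size_t free_block_count() const { return free_blocks_.size(); }
};
```

Freeing the middle of three adjacent blocks after its neighbors have been freed collapses all three into one free block, which is how coalescing keeps fragmentation in check.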

Templates for oneTBB memory allocators

The oneTBB memory allocator templates scalable_allocator and cache_aligned_allocator are similar to the STL class std::allocator. In a concurrent environment, they address two critical problems: scalability and false sharing (two threads accessing different words that share the same cache line), respectively.

The scalable_allocator can be used on its own, without any other oneTBB components; it requires only the oneTBB scalable memory allocator library.

The tbb_allocator and cache_aligned_allocator templates use the scalable memory allocator by default; if it is not available, they fall back to the standard C memory allocation routines malloc and free.

Configuration of a oneTBB memory allocator

Although the oneTBB memory allocator is a general-purpose allocator, its behavior can be adjusted with the following API functions:

- scalable_allocation_mode(): This function sets memory allocator parameters such as a heap size limit. The specified parameters remain in effect until the function is called again.

- scalable_allocation_command(): This function cleans the per-thread memory buffers and the global memory buffers.

The allocator can also be configured with the environment variables listed below. However, scalable_allocation_mode() always takes precedence over the environment variables.

- TBB_MALLOC_USE_HUGE_PAGES tells the allocator to use huge pages if the OS supports them.

- TBB_MALLOC_USE_HUGE_OBJECT_THRESHOLD defines the lower bound (size in bytes) for allocations that are not returned to the OS until a cleanup is explicitly requested.

Scalable memory-pool feature

The oneTBB allocator’s memory-pool feature lets users supply their own memory for thread-safe management by the allocator and group different memory objects under specific pool instances. This enables fast deallocation of all the memory at once and reduces both memory fragmentation and synchronization between different sets of memory objects.

For thread-safe memory management, the memory pool feature consists mainly of two classes: tbb::fixed_pool and tbb::memory_pool. Both use a memory provider specified by the user. The former allocates memory blocks for small objects, whereas the latter can grow and relinquish memory on demand. A class named tbb::memory_pool_allocator makes it possible to use a memory pool inside an STL container. See the oneTBB documentation for full details on scalable memory pools.

Memory API replacement library

By linking your application with the memory-replacement API library known as tbbmalloc_proxy, you can use the oneTBB allocator without ever calling its C/C++ API explicitly. All calls to the standard dynamic memory allocation routines are then automatically replaced with their oneTBB scalable equivalents. The oneTBB documentation explains in detail how to replace dynamic memory allocation on Linux and Windows.
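
The key point is that the application code itself does not change. The function below is ordinary C++ with no oneTBB headers; the name duplicate_with_malloc is made up for this example. According to the oneTBB documentation, the replacement is typically enabled on Linux by linking with -ltbbmalloc_proxy or by preloading the proxy library through LD_PRELOAD, and on Windows by including the tbbmalloc_proxy.h header in one source file.

```cpp
#include <cstdlib>
#include <cstring>
#include <string>

// Ordinary allocation code with no oneTBB headers or calls.
// When the program is linked or preloaded with tbbmalloc_proxy, these
// malloc/free calls (and the allocations made by std::string) are served
// by the oneTBB allocator instead of the default one.
std::string duplicate_with_malloc(const char* text) {
    std::size_t length = std::strlen(text) + 1;
    char* copy = static_cast<char*>(std::malloc(length));
    if (!copy) return {};
    std::memcpy(copy, text, length);
    std::string result(copy);
    std::free(copy);
    return result;
}
```

Built without the proxy, the same code simply uses the default allocator, which makes it easy to compare the two configurations.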

Conclusion

With the oneTBB memory allocator, you can manage memory allocation efficiently in your C++ programs in both concurrent and non-concurrent environments. Start using oneTBB to take advantage of its memory allocator and its many other features, such as concurrent containers, for a fast threading experience.