Introduction to Using TorchDynamo for Faster PyTorch Programs

In their webinar, Introduction to Getting Faster PyTorch Programs with TorchDynamo, presenters Yuning Qiu and Zaili Wang discuss the new computational graph capture capabilities in PyTorch 2.0.

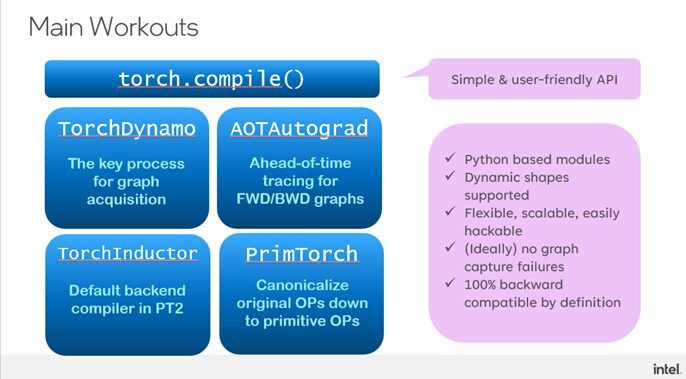

TorchDynamo is designed to speed up PyTorch scripts with little to no code modification while preserving flexibility and usability. Note that although “TorchDynamo” originally referred to the whole feature, the most recent PyTorch documentation refers to it by its API name, “torch.compile”. The same naming is used here.
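As a minimal sketch of what "little to no code modification" can look like, the snippet below wraps an ordinary function with torch.compile. The function name `gelu_like` is made up for illustration. The default backend is TorchInductor; this sketch passes `backend="eager"` only so that it runs without a C++ compiler or Triton installed, which means it illustrates the API rather than a speedup.

```python
import torch

def gelu_like(x):
    # Ordinary eager-mode PyTorch code; nothing here is specific to torch.compile.
    return 0.5 * x * (1.0 + torch.tanh(x))

# Wrap the function. Assumption: backend="eager" is used here so the sketch
# needs no compiler toolchain; omit it to use the default TorchInductor backend.
compiled_fn = torch.compile(gelu_like, backend="eager")

x = torch.randn(8)
eager_out = gelu_like(x)       # regular eager execution
compiled_out = compiled_fn(x)  # first call triggers graph capture

# Both paths should agree numerically.
print(torch.allclose(eager_out, compiled_out))
```

The call site is unchanged apart from the wrapper, which is the property the paragraph above describes.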

Design Principles and Motivation

PyTorch runs primarily in an “imperative mode” (also called eager mode). Its Pythonic philosophy and ease of use are why data scientists and academics have embraced it so enthusiastically. Because this mode executes user code step by step, debugging is simple and flexible. For large-scale model deployment, however, imperative execution may not be the best option.

In these cases, performance improvements are often obtained by compiling the model into an efficient computational graph. Earlier PyTorch techniques such as FX and TorchScript (JIT) do provide graph compilation, but they have a number of drawbacks, especially in handling control flow and optimizing the backward graph. TorchDynamo was created to address these issues by offering a smoother graph capture procedure while maintaining PyTorch’s natural flexibility.
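The control-flow limitation can be illustrated with a small sketch. The function `branchy` is hypothetical; it branches on tensor values, which FX symbolic tracing rejects, while torch.compile still runs it correctly. `backend="eager"` is an assumption made so the sketch runs without a compiler toolchain.

```python
import torch
from torch.fx import symbolic_trace
from torch.fx.proxy import TraceError

def branchy(x):
    # Data-dependent branch: which path runs depends on the tensor's values.
    if x.sum() > 0:
        return x + 1
    return x - 1

# FX symbolic tracing cannot evaluate a branch on a symbolic value.
try:
    symbolic_trace(branchy)
    fx_traced = True
except TraceError:
    fx_traced = False

# torch.compile handles the same function; the branch is resolved at run time.
compiled = torch.compile(branchy, backend="eager")
pos = torch.ones(3)
neg = -torch.ones(3)
out_pos = compiled(pos)  # takes the x + 1 path
out_neg = compiled(neg)  # takes the x - 1 path

print(fx_traced, out_pos, out_neg)
```

Here TorchDynamo falls back to Python for the branch and compiles the tensor code around it, rather than failing the whole trace.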

TorchDynamo: Overview and Key Components

TorchDynamo works by hooking into Python’s frame evaluation process, which PEP 523 makes possible, and examining Python bytecode as it runs. This lets it capture computational graphs dynamically while the program continues to behave like ordinary eager-mode code. TorchDynamo converts the captured PyTorch code into an intermediate representation (IR) that a backend compiler such as TorchInductor can optimize. It works together with several key technologies:
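To make the capture step more concrete, the sketch below registers a custom backend with torch.compile. A backend is a callable that receives the captured graph as a `torch.fx.GraphModule` along with example inputs and returns something callable. The names `inspect_backend` and `captured_graphs` are made up for illustration, and this backend performs no optimization; it only records the graph and runs it unchanged.

```python
import torch
import torch.fx

captured_graphs = []

def inspect_backend(gm: torch.fx.GraphModule, example_inputs):
    # Called by TorchDynamo with the graph it captured from the bytecode.
    captured_graphs.append(gm)
    # Return a callable; here the captured graph is simply run as-is.
    return gm.forward

@torch.compile(backend=inspect_backend)
def f(x, y):
    return torch.relu(x) + y * 2

out = f(torch.randn(4), torch.randn(4))

# Print the intermediate representation that a real backend would optimize.
for gm in captured_graphs:
    print(gm.graph)
```

A production backend such as TorchInductor would take the same graph and generate optimized code from it instead of returning `gm.forward`.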

AOTAutograd: Improves training and inference performance by tracing the forward and backward computational graphs together, ahead of time. AOTAutograd partitions these graphs into manageable pieces so that they can be compiled into efficient machine code.

PrimTorch: Lowers the original PyTorch operations to a set of around 250 primitive operators, which simplifies and reduces the number of operators that backend compilers must implement. PrimTorch thereby improves the extensibility of compiled PyTorch models and their portability across hardware platforms.

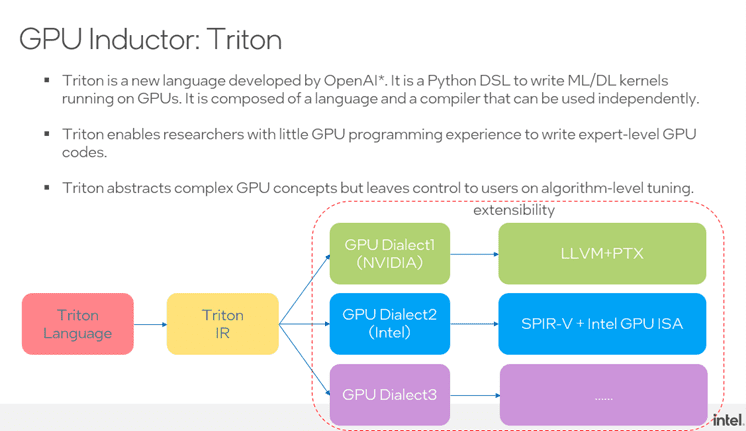

TorchInductor: The backend compiler that converts the captured computational graphs into optimized machine code. TorchInductor supports both CPU and GPU optimizations, including Intel’s contributions to the CPU Inductor and Triton-based GPU backend optimizations.
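As a rough sketch of how these components fit together during training, the snippet below compiles a small module with the `aot_eager` debugging backend, which traces the forward and backward graphs through AOTAutograd and then runs them without TorchInductor code generation. That backend choice is an assumption made so the example needs no compiler toolchain; the model and tensor sizes are arbitrary.

```python
import torch

torch.manual_seed(0)
model = torch.nn.Linear(4, 2)
x = torch.randn(3, 4)

# Reference: forward and backward in plain eager mode.
model(x).sum().backward()
eager_grad = model.weight.grad.clone()
model.zero_grad()

# Same computation through TorchDynamo + AOTAutograd.
# Assumption: "aot_eager" traces both graphs ahead of time but skips
# TorchInductor; the default backend would also generate optimized kernels.
compiled_model = torch.compile(model, backend="aot_eager")
compiled_model(x).sum().backward()
compiled_grad = model.weight.grad.clone()

# The ahead-of-time traced backward should match the eager gradients.
print(torch.allclose(eager_grad, compiled_grad))
```

The compiled module shares its parameters with the original `model`, so the gradients land on the same tensors in both runs.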

Intel’s Contributions to TorchInductor

Intel has played an important role in improving PyTorch model performance on both CPUs and GPUs:

CPU Optimizations: Intel has contributed vectorization using the AVX2 and AVX512 instruction sets for more than 94% of the inference and training kernels in PyTorch models. This has yielded significant performance gains, with speedups ranging from 1.21x to 3.25x depending on the precision used (FP32, BF16, or INT8).

GPU Support via Triton: OpenAI’s Triton is a Python-based domain-specific language (DSL) for writing GPU-accelerated machine learning kernels. Intel has extended Triton to support its GPU architectures by using SPIR-V IR to bridge the gap between Triton’s GPU dialect and Intel’s SYCL implementation. Thanks to this extensibility, Triton can be used to optimize PyTorch models on Intel GPUs.

Guard Mechanism and Caching

To handle dynamic control flow and reduce the need for recompilation, TorchDynamo uses a guard mechanism. Guards track the objects referenced in each frame and ensure that a cached graph is reused only when the conditions it was compiled under still hold. If a guard detects a change, TorchDynamo recompiles the graph, splitting it into subgraphs if needed. This keeps performance overhead low while ensuring that the compiled graph remains correct.
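The effect of guards can be observed with a small experiment. The sketch below uses a hypothetical counting backend (`counting_backend` and `compile_log` are made-up names) that records every compilation. Repeating a call with the same input properties should reuse the cached graph, while changing the input dtype should fail a guard and trigger a recompile.

```python
import torch

compile_log = []

def counting_backend(gm, example_inputs):
    # Record each compilation request, then run the graph unchanged.
    compile_log.append(len(example_inputs))
    return gm.forward

@torch.compile(backend=counting_backend)
def scale(x):
    return x * 2 + 1

scale(torch.randn(4))
scale(torch.randn(4))  # same dtype and shape: guards pass, cached graph reused
compiles_after_reuse = len(compile_log)

scale(torch.randn(4, dtype=torch.float64))  # dtype differs: guard fails
compiles_after_dtype_change = len(compile_log)

print(compiles_after_reuse, compiles_after_dtype_change)
```

Comparing the two counters shows the cache at work: the second call adds no compilation, and the dtype change adds one.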

Dynamic Shapes and Scalability

Support for dynamic shapes is one of TorchDynamo’s primary features. Earlier graph-compilation techniques often had trouble with input-dependent control flow or varying shapes, whereas TorchDynamo can handle dynamic input shapes without recompiling for each new shape. This makes PyTorch models considerably more scalable and adaptable, so they can better adjust to changing workloads.
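A minimal sketch of this behavior, assuming the `dynamic=True` option of torch.compile and a hypothetical counting backend (`counting_backend` and `compile_log` are made-up names): the function is called with three different input lengths and the number of compilations is recorded.

```python
import torch

compile_log = []

def counting_backend(gm, example_inputs):
    # Record each compilation request, then run the graph unchanged.
    compile_log.append(1)
    return gm.forward

def total(x):
    return (x * 2).sum()

# dynamic=True asks TorchDynamo to trace with symbolic sizes up front
# rather than specializing the graph to the first shape it sees.
compiled_total = torch.compile(total, backend=counting_backend, dynamic=True)

results = []
for n in (4, 8, 16):
    x = torch.ones(n)
    results.append(compiled_total(x).item())

print(len(compile_log), results)
```

Sizes 0 and 1 are typically specialized even in dynamic mode, which is why the sketch uses larger lengths.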

Examples and Use Cases

The webinar presented a number of real-world use cases to demonstrate how useful TorchDynamo and TorchInductor are. For example, ResNet50 models trained on Intel CPUs with the Intel Extension for PyTorch (IPEX) showed significant performance gains when optimized with TorchDynamo and TorchInductor. In addition, Intel’s ongoing work to extend Triton to Intel GPUs is expected to bring comparable performance benefits to models deployed on Intel GPU architectures.

Summary

TorchDynamo and its related technologies represent a major step forward in PyTorch’s ability to compile and optimize machine learning models efficiently. Compared to older methods such as TorchScript and FX, TorchDynamo offers a more adaptable and scalable solution by integrating smoothly with Python’s runtime and supporting dynamic shapes.

Intel’s contributions, especially in optimizing performance for both CPUs and GPUs, greatly expand what this new framework can do. As TorchDynamo and TorchInductor continue to be developed, researchers and engineers who want to deploy high-performance PyTorch models in real-world settings will find them indispensable.