Real-world workloads enable huge SSD capacities and efficient data granularity

Large SSDs (30TB+) present new challenges. Two important ones are:

High-density NAND, like QLC (quad-level cell NAND that stores 4 bits of data per cell), enables large SSDs but presents more challenges than TLC.

SSD capacity growth requires local DRAM memory growth for maps that have traditional 1:1000 DRAM to Storage Capacity ratios.

We cannot sustain the 1:1000 ratio. Do we need it? Why not 1:4000? Or 1:8000? They would cut DRAM demand by a factor of 4 or 8. What’s stopping us?

This blog examines this approach and proposes a solution for large-capacity SSDs.

First, why must the DRAM-to-NAND capacity ratio be 1:1000? The SSD must map system logical block addresses (LBAs) to NAND pages and keep a live copy of that map to know where to write or read data. Since LBAs are 4KB and map addresses are 32 bits (4 bytes), we need one 4-byte entry per 4KB LBA, hence the roughly 1:1000 ratio. We’ll stick to this ratio because it simplifies the reasoning and won’t change the outcome. Very large capacities would need more.
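The arithmetic behind the ratio can be sketched in a few lines. This is a minimal illustration, assuming a flat map with one fixed-size entry per map unit; the function name `map_dram_bytes` and the 30TB example capacity are ours, not a description of any particular drive's firmware.

```python
def map_dram_bytes(capacity_bytes: int, iu_bytes: int = 4096, entry_bytes: int = 4) -> int:
    """DRAM needed by a flat map holding one entry per map unit (assumed layout)."""
    entries = -(-capacity_bytes // iu_bytes)  # ceiling division
    return entries * entry_bytes


TB = 10**12
GB = 10**9
capacity = 30 * TB  # hypothetical large SSD, matching the "30TB+" in the text

for unit_kb in (4, 16, 32):
    dram = map_dram_bytes(capacity, unit_kb * 1024)
    ratio = capacity / dram
    print(f"map unit={unit_kb:>2}KB  map DRAM ~ {dram / GB:5.1f} GB  (about 1:{ratio:.0f})")
```

With 4-byte entries and 4KB units the exact ratio is 1:1024, which the text rounds to 1:1000. Quadrupling the unit size gives roughly 1:4096.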

One map entry per LBA is the best granularity because it allows the system to write at the lowest level. 4KB random writes are used to benchmark SSD write performance and endurance.

Long-term, this may not work. What about one map entry every four LBAs? 8, 16, 32+ LBAs? One map entry every 4 LBAs (i.e., 16KB) may save DRAM space, but what happens when the system wants to write 4KB? Since there is one entry per 16KB, the SSD must read the 16KB unit, modify the 4KB to be written, and write back the entire 16KB unit. This would affect performance (“read 16KB, modify 4KB, write back 16KB”, rather than just “write 4KB”) and endurance (the system writes 4KB but the SSD writes 16KB to NAND), reducing SSD life by a factor of 4.
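The read-modify-write cycle described above can be modelled with a toy in-memory drive. This is only a sketch under simplifying assumptions: the class `CoarseMapSSD` is hypothetical, it keeps each 16KB unit as a Python `bytes` object, and it ignores caching, garbage collection, and everything else real firmware does.

```python
LBA_SIZE = 4096  # bytes per logical block, as in the text


class CoarseMapSSD:
    """Toy model: one map entry per unit, so every update rewrites a whole unit."""

    def __init__(self, unit_bytes: int = 16384):
        self.unit_bytes = unit_bytes
        self.units = {}      # unit index -> bytes currently stored
        self.host_bytes = 0  # bytes the host asked to write
        self.nand_bytes = 0  # bytes actually written to (simulated) NAND

    def write_lba(self, lba: int, data: bytes) -> None:
        assert len(data) == LBA_SIZE
        index, offset = divmod(lba * LBA_SIZE, self.unit_bytes)
        # Read: fetch the whole unit (zeros if it was never written).
        unit = bytearray(self.units.get(index, bytes(self.unit_bytes)))
        # Modify: patch only the 4KB the host sent.
        unit[offset:offset + LBA_SIZE] = data
        # Write back: the entire unit goes to NAND.
        self.units[index] = bytes(unit)
        self.host_bytes += LBA_SIZE
        self.nand_bytes += self.unit_bytes


ssd = CoarseMapSSD()
ssd.write_lba(5, b"\xab" * LBA_SIZE)
print(ssd.nand_bytes / ssd.host_bytes)  # 4.0
```

Constructing the model with `unit_bytes=4096` instead gives a ratio of 1.0, which is the one-entry-per-LBA case.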

It’s even more concerning when this happens on QLC technology, which has a tougher endurance profile. For QLC, endurance is essential!

Common wisdom holds that enlarging the map granularity (or Indirection Unit, or “IU”) would severely reduce SSD life (endurance).

All of the above is true, but do systems write 4KB data? And how often? One can buy a system to run FIO with a 4KB random-write (RW) profile, but most people don’t. They buy them for databases, file systems, object stores, and applications. Does anyone use 4KB writes?

We measured it. We measured how many 4KB writes are issued and how they contribute to Write Amplification, i.e., extra writes that reduce device life, on a set of application benchmarks from TPC-H (data analytics) to YCSB (cloud operations), running on various databases (Microsoft SQL Server, RocksDB, Apache Cassandra), file systems (EXT4, XFS), and, in some cases, entire software-defined storage solutions like Red Hat Ceph Storage.

Before discussing the analysis, we must discuss why write size matters when endurance is at stake.

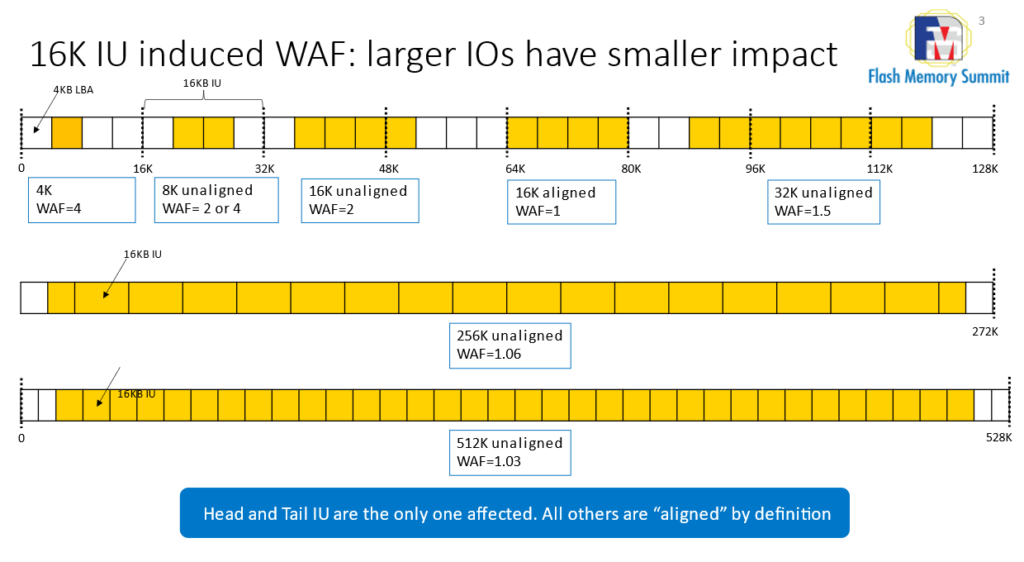

Writing 16KB to modify 4KB creates a 4x Write Amplification Factor (WAF) for a 4KB write. What about an 8KB write? If it falls within a single IU, “write 16KB to modify 8KB” gives WAF=2. A bit better. And a 16KB write? If aligned, it does not contribute to WAF at all, because one “writes 16KB to modify 16KB”. Only small writes contribute to WAF.

Writes are not always aligned to IU boundaries, though. A misaligned write spills into a neighbouring IU, and that also contributes to WAF, but the contribution decreases rapidly as write size grows.

The chart below shows this trend:

Large writes barely affect WAF. 256KB may have no effect (WAF=1x) if aligned or low (WAF=1.06x) if misaligned. Much better than 4KB writes’ 4x!

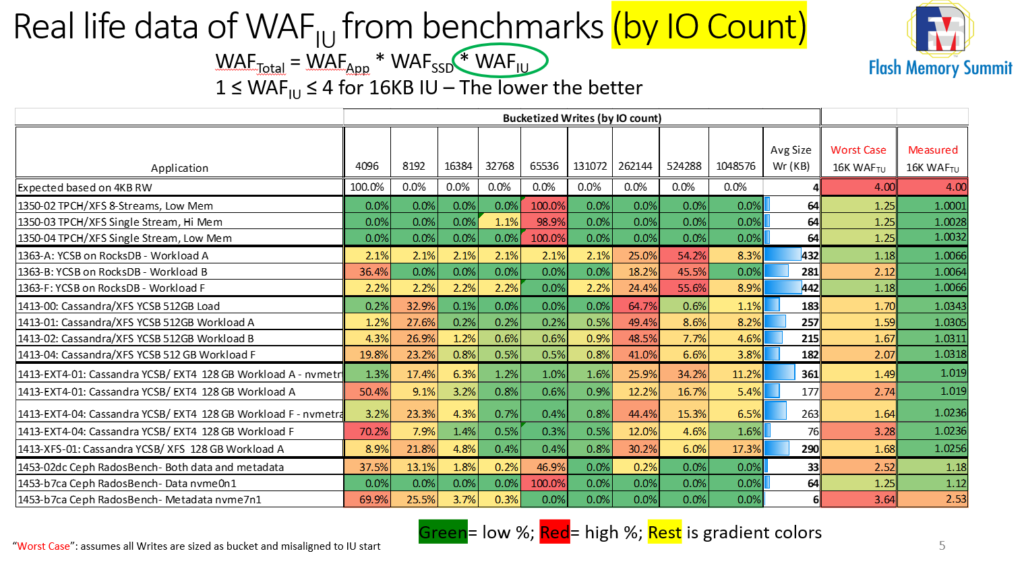
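The per-write numbers quoted above follow from counting how many IUs a write touches. Here is a minimal sketch, assuming each write is handled on its own and every touched IU is rewritten in full; the helper names `iu_bytes_written` and `write_waf` are ours.

```python
KB = 1024


def iu_bytes_written(offset: int, size: int, iu: int = 16 * KB) -> int:
    """Bytes written to NAND if every IU touched by the write is rewritten whole."""
    first = offset // iu
    last = (offset + size - 1) // iu
    return (last - first + 1) * iu


def write_waf(offset: int, size: int, iu: int = 16 * KB) -> float:
    """Write amplification of a single host write under the assumption above."""
    return iu_bytes_written(offset, size, iu) / size


print(write_waf(0, 4 * KB))         # 4.0
print(write_waf(0, 8 * KB))         # 2.0
print(write_waf(0, 16 * KB))        # 1.0
print(write_waf(0, 256 * KB))       # 1.0
print(write_waf(4 * KB, 256 * KB))  # 1.0625
```

The last line is the misaligned 256KB case: the write touches 17 IUs instead of 16, which is where the roughly 1.06x figure comes from.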

To calculate each write’s WAF contribution, we must profile all SSD writes and check how they align within an IU. Bigger writes are better. We instrumented the system to trace IOs for several benchmarks. After 20 minutes, we post-processed the samples (100–300 million per benchmark) to check size and IU alignment and to add up every IO’s contribution to WAF.
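A post-processing pass of this kind could look roughly like the sketch below. It is not the tooling used for the measurements: the trace format (a list of `(offset, size)` pairs in bytes), the function name `trace_waf`, and the three sample writes are all hypothetical, and the model assumes each write is handled independently with no coalescing inside the drive.

```python
KB = 1024


def trace_waf(writes, iu: int = 16 * KB) -> float:
    """Aggregate WAF of a write trace: total NAND bytes over total host bytes."""
    host = 0
    nand = 0
    for offset, size in writes:
        first = offset // iu
        last = (offset + size - 1) // iu
        host += size
        nand += (last - first + 1) * iu  # every touched IU is rewritten whole
    return nand / host


# Hypothetical three-write trace: one small write and two large aligned writes.
sample = [
    (0, 4 * KB),
    (16 * KB, 256 * KB),
    (288 * KB, 128 * KB),
]
print(f"{trace_waf(sample):.3f}")
```

Even in this tiny made-up trace, the single 4KB write costs 4x on its own but barely moves the aggregate, because the large writes carry almost all of the data.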

The table below shows how many IOs each size bucket holds:

As shown, most writes fit in the 4-8KB (bad) or 256KB+ (good) buckets.

If we apply the above WAF chart to all misaligned IOs, the “Worst case” column shows most WAF is 1.x, a few 2.x, and very rarely 3.x. Better than 4x but not enough to make it viable.

IOs are not always misaligned. Why would they be? Why would modern file systems misalign structures at such small scales? They don’t.

We measured and post-processed each benchmark’s 100+ million IOs against a 16KB IU. The last column, “Measured” WAF, shows the result: WAF <=1.05x. So one can grow the IU size fourfold and build large SSDs using QLC NAND and existing, smaller DRAM technologies at a life cost of <5%, not 400%! These results are amazing.

One may object that the many 4KB and 8KB writes each contribute 400% or 200% to WAF. Given these small but numerous IO contributions, shouldn’t the aggregated WAF be much higher? Though many, they are small and carry a small payload, minimising their volume impact. A 4KB write and a 256KB write each count as a single write in the above table, but the latter carries 64x the data.

Adjusting the above table for IO Volume (i.e., each IO size and data moved) rather than IO count yields the following representation:

The colour grading for more intense IOs is now skewed to the right, indicating that large IOs move a lot of data and have a small WAF contribution.
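The difference between counting IOs and counting bytes can be made concrete with a small sketch. The mix below is invented for illustration (half the IOs are 4KB, half are 256KB, all aligned) and is not taken from the measured benchmarks; the function name `count_vs_volume_waf` is ours.

```python
KB = 1024


def count_vs_volume_waf(writes, iu: int = 16 * KB):
    """Return (mean of per-IO WAF, volume-weighted WAF) for a list of (offset, size)."""
    per_io = []
    host = 0
    nand = 0
    for offset, size in writes:
        touched = (offset + size - 1) // iu - offset // iu + 1
        written = touched * iu
        per_io.append(written / size)
        host += size
        nand += written
    return sum(per_io) / len(per_io), nand / host


# Hypothetical mix, not measured data.
small = [(i * 16 * KB, 4 * KB) for i in range(100)]
large = [(i * 256 * KB, 256 * KB) for i in range(100)]
by_count, by_volume = count_vs_volume_waf(small + large)
print(f"mean per-IO WAF:     {by_count:.2f}")   # 2.50
print(f"volume-weighted WAF: {by_volume:.3f}")  # 1.046
```

Averaging per-IO WAF makes this mix look like 2.5x, but what the NAND actually sees is the volume-weighted figure, which is under 1.05x because the 256KB writes move 64 times more data each.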

Finally, not all SSD workloads are suitable for this approach. For instance, the metadata portion of a Ceph storage node does very small IOs, causing a high WAF of 2.35x. Metadata on its own is unsuitable for large-IU drives. If we mix data and metadata in Ceph (a common approach with NVMe SSDs), the combined WAF is minimally affected, as the data volume is much larger than the metadata volume.

According to our testing, moving to a 16KB IU works for real applications and most benchmarks. The industry must be convinced to stop benchmarking SSDs with 4KB RW on FIO, which is unrealistic and harmful to evolution.