Part 3 of a 4-part series: Intel’s Cloud Optimization Modules for Terraform Unlock Cloud Automation.

The Terraform Databricks modules apply Intel's optimizations for Databricks, including optimized AI/ML runtime libraries and Apache Spark tuning parameters. They also let teams deploy across multiple clouds at scale, creating large clusters on 3rd Gen Intel Xeon Scalable processors in minutes rather than the hours or days a manual deployment can take. Combined with the scalability and flexibility of the public cloud, this makes substantial compute capacity readily available while preserving centralized management through preferred tools such as Terraform Cloud and Sentinel.
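Sentinel policies are written in HashiCorp's own policy language, so the following is not Sentinel. It is a minimal Python sketch of the kind of guardrail such a policy can enforce: it reads the JSON produced by `terraform show -json` and flags `databricks_cluster` resources whose `node_type_id` falls outside an allow-list. The `ICE_LAKE_PREFIXES` allow-list (the AWS `m6i`, `c6i`, and `r6i` instance families, which run on 3rd Gen Intel Xeon Scalable processors) and the `find_violations` helper are illustrative assumptions, not part of Intel's modules.

```python
from collections.abc import Iterable

# Illustrative allow-list (an assumption, not taken from Intel's modules):
# AWS instance families built on 3rd Gen Intel Xeon Scalable (Ice Lake) processors.
# Extend it with Azure node types you have verified yourself.
ICE_LAKE_PREFIXES = ("m6i.", "c6i.", "r6i.")


def find_violations(plan: dict, allowed_prefixes: Iterable[str] = ICE_LAKE_PREFIXES) -> list[str]:
    """Return 'address: node_type' for each databricks_cluster not on the allow-list."""
    prefixes = tuple(allowed_prefixes)
    violations = []
    for rc in plan.get("resource_changes", []):
        if rc.get("type") != "databricks_cluster":
            continue
        after = rc.get("change", {}).get("after")
        if after is None:  # the resource is being destroyed, nothing to check
            continue
        # An unset node_type_id is treated as a violation in this sketch.
        node_type = after.get("node_type_id") or ""
        if not node_type.startswith(prefixes):
            violations.append(f"{rc['address']}: {node_type or '<unset>'}")
    return violations


if __name__ == "__main__":
    # Hand-written stand-in for the output of `terraform show -json tfplan`.
    plan = {
        "resource_changes": [
            {"address": "module.cluster.databricks_cluster.this", "type": "databricks_cluster",
             "change": {"actions": ["create"], "after": {"node_type_id": "m6i.xlarge"}}},
            {"address": "databricks_cluster.legacy", "type": "databricks_cluster",
             "change": {"actions": ["create"], "after": {"node_type_id": "i3.xlarge"}}},
        ]
    }
    print(find_violations(plan))  # ['databricks_cluster.legacy: i3.xlarge']
```

In a real pipeline the plan dictionary would come from `json.load` on the saved `terraform show -json` output, and a non-empty result would fail the run.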

To help customers optimize and manage their Databricks workspaces, Intel has created Cloud Optimization Modules for Databricks. Available through the Terraform Registry, these modules provide pre-configured resources and optimizations for Databricks clusters on AWS and Azure. Three modules are currently available: an AWS workspace module, an Azure workspace module, and a cluster module. A Databricks deployment requires two of them:

1. Databricks Workspace Module

2. Databricks Cluster Module

For the workspace deployment, there are two options: the AWS Databricks Workspace module and the Azure Databricks Workspace module. For the cluster deployment, the Intel Optimized Databricks Cluster module deploys Intel-optimized clusters, running on Intel Xeon processors, into your AWS or Azure Databricks workspace. Let's take a closer look at these modules.
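To make the two-module structure concrete, here is a hypothetical Python sketch that emits a Terraform JSON root module (Terraform also reads `*.tf.json` files) pairing a workspace module with the cluster module. The registry addresses are assumptions based on Terraform's `<namespace>/<name>/<provider>` naming convention, and the `dbx_host` and `dbx_cloud` input and output names are hypothetical placeholders; check each module's documented inputs and outputs on the Terraform Registry before relying on this.

```python
import json

# ASSUMED registry addresses, following Terraform's <namespace>/<name>/<provider>
# convention; confirm them on the Terraform Registry.
WORKSPACE_SOURCES = {
    "aws": "intel/aws-databricks-workspace/intel",
    "azure": "intel/azure-databricks-workspace/intel",
}
CLUSTER_SOURCE = "intel/databricks-cluster/intel"


def build_root_module(cloud: str) -> dict:
    """Return a Terraform JSON root module wiring a workspace module to a cluster module."""
    if cloud not in WORKSPACE_SOURCES:
        raise ValueError(f"unsupported cloud: {cloud!r}")
    return {
        "module": {
            "databricks_workspace": {"source": WORKSPACE_SOURCES[cloud]},
            "databricks_cluster": {
                "source": CLUSTER_SOURCE,
                # HYPOTHETICAL input/output names: the cluster module consumes a value
                # exported by the workspace module, so Terraform creates the workspace first.
                "dbx_host": "${module.databricks_workspace.dbx_host}",
                "dbx_cloud": cloud,
            },
        }
    }


if __name__ == "__main__":
    # Print instead of writing main.tf.json so the sketch has no side effects.
    print(json.dumps(build_root_module("aws"), indent=2))
```

Referencing the workspace module's output from the cluster module is what gives Terraform the dependency ordering: the workspace exists before any cluster is created in it.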

The Intel Databricks Cluster module, available through the Terraform Registry, makes it simple to provision and manage Databricks clusters on AWS and Azure. It brings Intel's expertise to your Databricks workloads and includes optimizations for performance, security, and cost. For instance, by default the cluster runs on 3rd Gen Intel Xeon Scalable processors.

The Intel Azure Databricks Workspace module, available through the Terraform Registry, simplifies deploying and managing Azure Databricks workspaces. It offers a comprehensive set of options and enhancements to ensure your Azure Databricks environment is set up effectively.

The following is a list of Databricks optimizations included in these modules:

Apache Spark tuning parameters: Based on Intel's Xeon tuning guide, the module sets Apache Spark tuning parameters that can significantly improve performance.

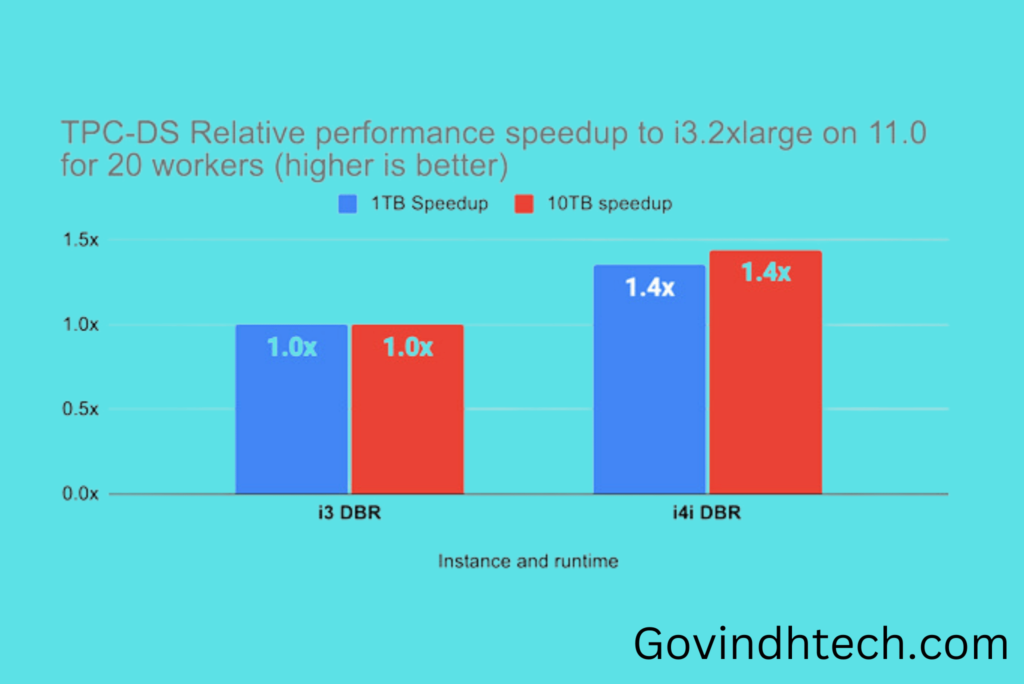
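Databricks clusters accept Spark settings as a `spark_conf` map of string keys to string values. The hypothetical helper below sketches one way to layer tuned defaults under workload-specific overrides before they reach that map. The property names are real Spark settings, but the values are placeholders, not the figures from Intel's Xeon tuning guide, and this is not a description of how the module works internally.

```python
def to_spark_conf(*layers: dict) -> dict[str, str]:
    """Merge config layers left to right (later layers win) and stringify the values,
    because a Databricks cluster's spark_conf maps string keys to string values."""
    merged: dict[str, str] = {}
    for layer in layers:
        for key, value in layer.items():
            if not key.startswith("spark."):
                raise ValueError(f"not a Spark property: {key!r}")
            # Spark expects lowercase booleans ("true"/"false").
            merged[key] = str(value).lower() if isinstance(value, bool) else str(value)
    return merged


# PLACEHOLDER values for illustration only, NOT Intel's tuning-guide recommendations.
TUNING_DEFAULTS = {"spark.sql.shuffle.partitions": 400, "spark.sql.adaptive.enabled": True}
USER_OVERRIDES = {"spark.sql.shuffle.partitions": 200}

if __name__ == "__main__":
    print(to_spark_conf(TUNING_DEFAULTS, USER_OVERRIDES))
    # {'spark.sql.shuffle.partitions': '200', 'spark.sql.adaptive.enabled': 'true'}
```

Keeping tuned defaults in a separate layer means a team can override a single property for one workload without copying or forking the rest of the tuning set.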

3rd Gen Xeon Photon engine enablement: Running the Databricks Photon engine on Ice Lake (3rd Gen Intel Xeon Scalable) processors can increase speed by 5.3 times and improve price/performance by up to 2.5 times.
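How a speedup turns into a price/performance gain depends on what the faster configuration costs per hour. The sketch below works through that arithmetic with hypothetical hourly rates: a 5.3x speedup yields a 2.5x price/performance gain only if the faster configuration costs about 2.12 times as much per hour. The function names and rates are illustrative assumptions, not Databricks or Photon pricing.

```python
def job_cost(runtime_hours: float, hourly_rate: float) -> float:
    """Total cost of one run of a job."""
    return runtime_hours * hourly_rate


def price_performance_gain(speedup: float, rate_ratio: float) -> float:
    """Baseline job cost divided by accelerated job cost.

    speedup    = baseline_runtime / accelerated_runtime
    rate_ratio = accelerated_hourly_rate / baseline_hourly_rate
    """
    return speedup / rate_ratio


if __name__ == "__main__":
    # HYPOTHETICAL numbers: baseline takes 5.3 h at 1.00/h; accelerated takes 1 h at 2.12/h.
    baseline = job_cost(5.3, 1.00)
    accelerated = job_cost(1.0, 2.12)
    print(round(baseline / accelerated, 2))            # 2.5
    print(round(price_performance_gain(5.3, 2.12), 2))  # 2.5
```

If the faster configuration cost the same per hour, the price/performance gain would equal the speedup; any hourly premium divides it down.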

Accelerating Databricks Runtime

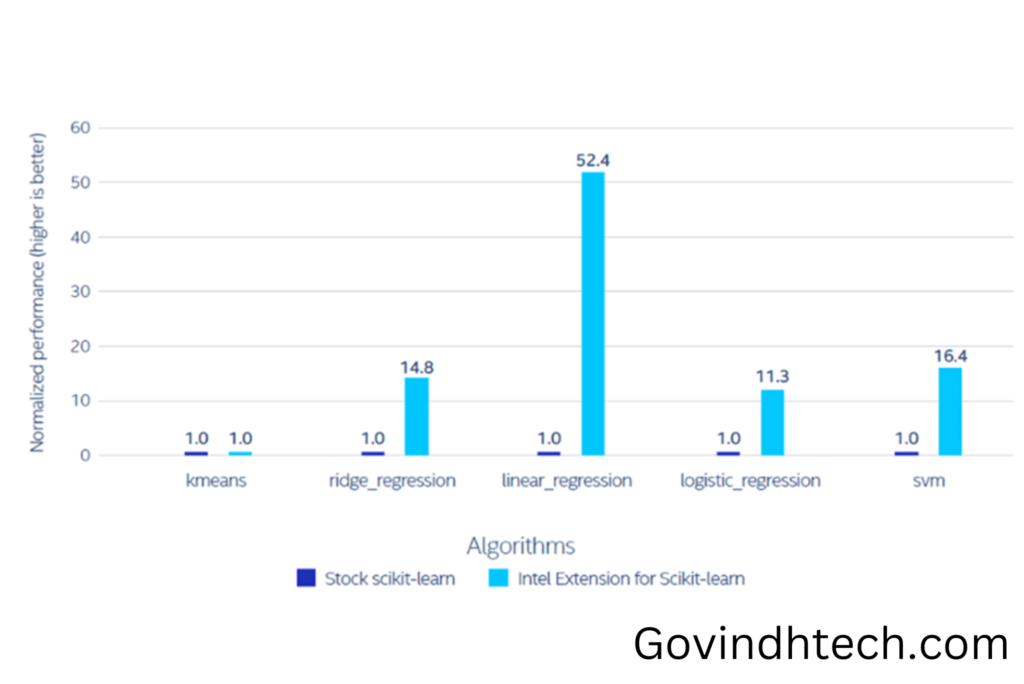

The module also includes Intel-optimized machine learning runtime libraries, which accelerate workloads on the Databricks Runtime. The Intel oneAPI AI Analytics Toolkit gives data scientists, AI developers, and researchers familiar Python tools and frameworks for speeding up end-to-end data science and analytics pipelines on Intel architecture. Its components are built on oneAPI libraries for low-level compute optimizations. The toolkit provides interoperability for efficient model development and improves performance across the pipeline, from preprocessing through machine learning. It covers popular ML and DL frameworks such as scikit-learn and TensorFlow.
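As one example of these optimized libraries, the Intel Extension for Scikit-learn (the `sklearnex` package, part of the AI Analytics Toolkit) can patch scikit-learn so that supported estimators use oneAPI-accelerated implementations. The minimal sketch below enables it only when the package is installed. The `enable_intel_sklearn` wrapper is our own, and it does not assume that the Intel module installs the package on your cluster.

```python
import importlib.util


def enable_intel_sklearn() -> bool:
    """Patch scikit-learn with Intel Extension for Scikit-learn if it is installed.

    Returns True if the patch was applied, False if sklearnex is unavailable.
    """
    if importlib.util.find_spec("sklearnex") is None:
        return False
    from sklearnex import patch_sklearn

    # Must run before scikit-learn estimators are imported so the
    # accelerated implementations are picked up.
    patch_sklearn()
    return True


if __name__ == "__main__":
    print("Intel Extension for Scikit-learn active:", enable_intel_sklearn())
    # Import estimators (e.g. from sklearn.cluster import KMeans) only after this point.
```

Because the patch is applied at import time, calling it in the first cell of a notebook (or in a cluster init step) keeps the rest of the scikit-learn code unchanged.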

The final article in this series will walk through deploying an Intel-optimized Databricks workspace and cluster using the Intel Cloud Optimization Modules for Databricks.