Impactful Innovation: How NVIDIA Research Drives Revolutionary Work in AI, Graphics, and Other Fields

For almost 20 years, the company’s research team has been responsible for developing renowned products including NVIDIA DLSS, NVLink, and Cosmos.

Many of NVIDIA’s ground-breaking innovations, the technology that underpins AI, accelerated computing, real-time ray tracing, and seamlessly connected data centers, have their roots in the company’s research organisation, which consists of about 400 experts worldwide in areas like computer architecture, generative AI, graphics, and robotics.

NVIDIA Research was founded in 2006 and has been led since 2009 by Bill Dally, a former chair of Stanford University’s computer science department. It stands out among corporate research organisations because it was set up to pursue difficult technological problems that would have a significant impact on both the business and the wider community.

Dally is one of the NVIDIA Research leaders showcasing the group’s innovations at NVIDIA GTC, the premier developer conference at the centre of AI, which is being held this week in San Jose, California.

Whereas many research organisations might describe their mission as pursuing projects with a longer time horizon than those of a product team, NVIDIA researchers look for projects with a broader “risk horizon” and a huge potential payoff if they succeed.

The objective is to act in the best interests of the business. It’s not about creating a museum of renowned graphics researchers and NVIDIA’s first researcher, or a trophy case of best paper prizes. “As a small team, everyone has the opportunity to work on concepts that could fail. It is therefore a duty to take advantage of that chance and give their all to initiatives that, if successful, will have a significant impact.”

Innovating as One Team

One of the fundamental principles of NVIDIA is “one team,” which refers to a strong dedication to cooperation that enables researchers to work closely with product teams and industry stakeholders to turn their concepts into practical applications.

Because the accelerated computing work that NVIDIA undertakes requires full-stack optimization of applied deep learning research, everyone at NVIDIA is motivated to learn how to collaborate. “You can’t accomplish it if everyone remains in their silos and every piece of technology operates independently. Acceleration requires teamwork.”

NVIDIA researchers consider whether the project is better for a product or research team, whether it should be published at a famous conference, and whether NVIDIA will benefit from it. They consult key stakeholders before proceeding with the project.

They collaborate with others to bring ideas to life and, in doing so, often find that brilliant lab concepts don’t translate well to the real world. “The research team must be humble enough to learn from the rest of the company what they need to do to make their ideas work because it’s a close collaboration.”

A large portion of the team’s work is shared via technical conferences, articles, and open-source websites like Hugging Face and GitHub. The impact on the industry is still its main focus, though.

Publishing is not the main goal of this work, although it is a very significant byproduct.

Ray tracing was the subject of NVIDIA Research’s initial endeavour, which, after ten years of diligent labour, led directly to the release of NVIDIA RTX and revolutionized real-time computer graphics. The organisation now includes teams that focus on chip design, networking, programming systems, large language models, physics-based simulation, climate science, humanoid robotics, and self-driving cars, and it is still growing to take on new research topics and draw on global expertise.

Transforming NVIDIA and the Industry

In addition to laying the foundation for some of the company’s best-known products, NVIDIA Research’s inventions have advanced computing and ushered in the current era of artificial intelligence.

It started with CUDA, a programming model and software platform for parallel computing that lets researchers use GPU acceleration for a wide range of applications. Introduced in 2006, CUDA made it simple for programmers to use GPUs’ parallel processing capabilities to accelerate AI model development, gaming applications, and scientific simulations.

For NVIDIA, creating CUDA was the most revolutionary development. “It happened even before the company had a formal research group, and it happened because the company hired top researchers and had them collaborate with top architects.”

Making Ray Tracing a Reality

Following the establishment of NVIDIA Research, its members spent years creating the hardware and algorithms necessary to enable GPU-accelerated ray tracing. The late Steven Parker, a real-time ray tracing pioneer and NVIDIA vice president of professional graphics, led the 2009 initiative that produced the NVIDIA OptiX application framework, outlined in a 2010 SIGGRAPH paper.

Researchers collaborated with NVIDIA’s architecture department to create NVIDIA RTX ray-tracing technology, including RT Cores, for professional creators and gamers.

The 2018 launch of NVIDIA RTX also introduced Deep Learning Super Sampling (DLSS), another NVIDIA Research innovation. With DLSS, the graphics pipeline no longer has to draw every pixel in a video. Rather, it only draws a portion of the pixels, providing an AI pipeline with the data it needs to produce a high-resolution, sharp image.
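As a rough illustration of what drawing only a portion of the pixels means, the sketch below computes the share of output pixels that would be rendered conventionally when a frame is drawn at a lower internal resolution and then upscaled. The resolutions and the function name `rendered_fraction` are assumptions chosen for the example; they are not taken from DLSS itself.

```python
def rendered_fraction(render_res, output_res):
    """Return the share of output pixels that are actually rendered.

    Both arguments are (width, height) tuples in pixels.
    """
    render_w, render_h = render_res
    output_w, output_h = output_res
    return (render_w * render_h) / (output_w * output_h)


# Hypothetical case: render internally at 1920x1080, display at 3840x2160.
fraction = rendered_fraction((1920, 1080), (3840, 2160))
print(f"{fraction:.0%} of the output pixels are rendered directly")
```

In this hypothetical case only a quarter of the final pixels come from the graphics pipeline, and the rest would have to be produced by the AI stage.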

Accelerating AI for Virtually Any Application

The NVIDIA cuDNN library for GPU-accelerated neural networks, which was created as a research project when the deep learning field was still in its infancy and later launched as a product in 2014, marked the beginning of NVIDIA’s research efforts in AI software.

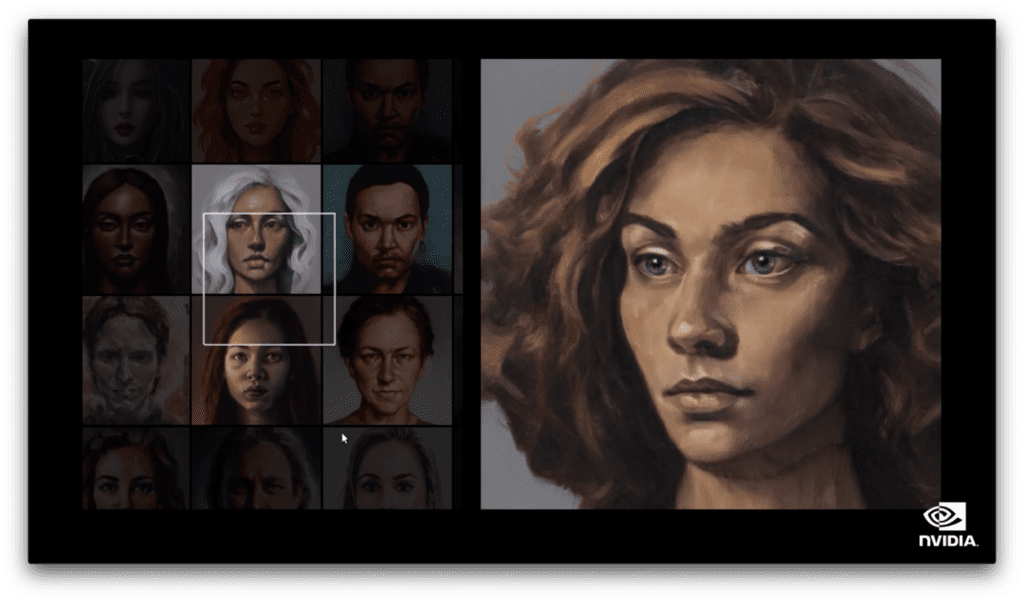

NVIDIA Research was at the vanguard as deep learning gained popularity and developed into generative AI. One example is NVIDIA StyleGAN, a ground-breaking visual generative AI model that showed how neural networks could quickly produce lifelike imagery.

Although generative adversarial networks, or GANs, were first introduced in 2014, StyleGAN was the first model to produce images that could fully pass muster as a photograph. “It was a turning point.”

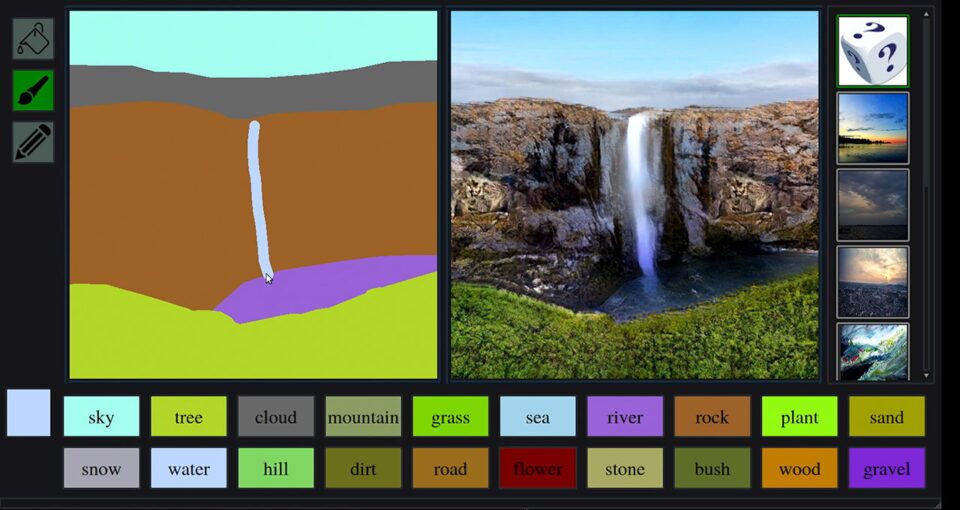

NVIDIA researchers presented numerous well-known GAN models, including GauGAN, an AI painting tool that evolved into the NVIDIA Canvas application. With the development of diffusion models, neural radiance fields, and Gaussian splatting, they are continuing to advance visual generative AI, notably in 3D, with new models like Edify 3D and 3DGUT.

In the field of large language models, Megatron-LM was an applied research project that made it possible to train and run inference on large language models efficiently for language-based tasks including conversational AI, translation, and content creation. It is incorporated into the NVIDIA NeMo platform for creating custom generative AI, which also includes speech recognition and speech synthesis models developed by NVIDIA Research.

Achieving Breakthroughs in Chip Design, Networking, Quantum and More

AI and graphics are only some of the areas in which NVIDIA Research works. Several teams are making strides in programming languages, quantum computing, chip architecture, electronic design automation, and more.

In 2012, Dally submitted a research proposal to the U.S. Department of Energy for a project that would eventually become NVIDIA NVLink and NVSwitch, the high-speed interconnect that enables fast communication between GPUs and CPUs in accelerated computing systems.

The circuit research team’s 2013 work on chip-to-chip communications introduced a signalling system co-designed with the interconnect, allowing for a high-speed, low-area, and low-power link between dies. The project ultimately became the link between the NVIDIA Grace CPU and the NVIDIA Hopper GPU.

In 2021, the ASIC and VLSI Research group created VS-Quant, a software-hardware codesign technique for AI accelerators that allowed several machine learning models to run with high accuracy using 4-bit weights and 4-bit activations. Their work influenced the development of FP4 precision support in the NVIDIA Blackwell architecture.
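To give a feel for how 4-bit values can still represent a tensor accurately, here is a minimal sketch of per-vector scaled quantization, the general idea the VS-Quant name refers to: each short vector of values gets its own scale factor, so one large value does not crush the precision of everything else. This is an illustrative simplification in plain Python, not NVIDIA's implementation; the function names, the `vector_size` default, and the sample data are all assumptions.

```python
def quantize_per_vector(values, vector_size=4, bits=4):
    """Quantize floats to signed integers, one scale factor per short vector."""
    qmax = 2 ** (bits - 1) - 1  # 7 for signed 4-bit values
    quantized, scales = [], []
    for start in range(0, len(values), vector_size):
        vec = values[start:start + vector_size]
        max_abs = max(abs(v) for v in vec)
        # Map the largest magnitude in this vector to qmax.
        scale = max_abs / qmax if max_abs > 0 else 1.0
        scales.append(scale)
        for v in vec:
            q = round(v / scale)
            quantized.append(max(-qmax, min(qmax, q)))  # clamp to the 4-bit range
    return quantized, scales


def dequantize_per_vector(quantized, scales, vector_size=4):
    """Reconstruct approximate floats from integers and per-vector scales."""
    return [q * scales[i // vector_size] for i, q in enumerate(quantized)]


# Hypothetical data: a small-valued vector followed by a large-valued one.
weights = [0.1, -0.2, 0.05, 0.7, 10.0, -3.0, 2.5, 0.0]
q, s = quantize_per_vector(weights)
restored = dequantize_per_vector(q, s)
```

With a single scale for the whole list, the value 10.0 would set the step size and the small entries in the first vector would round to zero. With one scale per vector, each reconstructed value stays within half a quantization step of the original for its own vector.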

Also introduced this year at the CES trade show was NVIDIA Cosmos, a platform developed by NVIDIA Research to speed up the development of physical AI for next-generation robotics and autonomous vehicles.