NVIDIA AI factories are driving a new industrial revolution powered by AI.

NVIDIA AI factories, in contrast to conventional data centers, produce intelligence at scale by turning raw data into insights in real time. This translates into a significantly shorter time to value for businesses and nations worldwide, transforming AI from a long-term investment into an immediate source of competitive advantage. Businesses that invest in purpose-built AI factories now will lead in efficiency, innovation, and market differentiation in the future.

NVIDIA AI factories are designed to maximise the value of artificial intelligence, whereas traditional data centers are usually designed for general-purpose computing and handle a variety of workloads. From data ingestion to training, fine-tuning, and above all high-volume inference, AI factories coordinate every step of the AI lifecycle.

Intelligence is the main output of an NVIDIA AI factory rather than a byproduct. This intelligence is measured by AI token throughput: the real-time predictions that drive decisions, automation, and entirely new services.

Traditional data centers aren’t going away anytime soon, but whether they connect to or evolve into NVIDIA AI factories will depend on each enterprise’s business strategy.

Regardless of how businesses decide to adapt, NVIDIA-powered AI factories are already producing intelligence at scale, revolutionising how artificial intelligence is developed, refined, and deployed.

The Scaling Laws Driving Compute Demand

Large-scale model training has been the focus of AI in recent years. However, with the recent proliferation of AI reasoning models, inference is now the primary driver of AI economics. Three important scaling laws explain why:

Pretraining scaling

Larger datasets and higher model parameter counts yield predictable gains in intelligence, but reaching this stage requires a large investment in skilled experts, data curation, and compute resources. Pretraining scaling has resulted in a 50 million-fold rise in computing demand over the past five years. On the other hand, once a model is trained, it significantly lowers the barrier for others to build on top of it.

Post-training scaling

Fine-tuning AI models for specific real-world applications requires 30 times as much computing power during AI inference as pretraining does. The demand for AI infrastructure rises as businesses adapt existing models to suit their particular requirements.

Test-time scaling (long thinking)

Iterative reasoning is necessary for advanced AI applications like agentic AI and physical AI, where models consider several potential answers before choosing the optimal one. Compared to conventional inference, this uses up to 100 times as much computing power.
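As a rough illustration of what this multiplier means for capacity planning, the sketch below estimates how aggregate inference compute changes as a share of requests moves to long-thinking reasoning. The up-to-100x multiplier comes from the text above; the workload mixes and the function name `relative_inference_compute` are hypothetical and chosen only for the example.

```python
# Upper-bound multiplier quoted above, relative to conventional inference.
REASONING_MULTIPLIER = 100


def relative_inference_compute(reasoning_share: float,
                               multiplier: float = REASONING_MULTIPLIER) -> float:
    """Compute demand relative to an all-conventional workload (1.0 = baseline).

    reasoning_share is the fraction of requests served with long-thinking
    reasoning; the remainder is assumed to use conventional inference.
    """
    if not 0.0 <= reasoning_share <= 1.0:
        raise ValueError("reasoning_share must be between 0 and 1")
    return (1.0 - reasoning_share) + reasoning_share * multiplier


if __name__ == "__main__":
    # Hypothetical workload mixes, for illustration only.
    for share in (0.0, 0.1, 0.5, 1.0):
        print(f"{share:.0%} reasoning requests -> "
              f"{relative_inference_compute(share):.1f}x baseline compute")
```

Under these assumptions, even a workload in which one request in ten uses long thinking needs roughly eleven times the baseline inference compute.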

Traditional data centers are not designed for this new era of AI. NVIDIA AI factories offer the best way forward for AI inference and deployment since they are purpose-built to optimise and sustain this enormous demand for computing.

Reshaping Industries and Economies With Tokens

Governments and businesses worldwide are rushing to build NVIDIA AI factories in an effort to promote efficiency, innovation, and economic growth.

The European High Performance Computing Joint Undertaking recently announced plans to build seven AI factories in partnership with 17 EU member states.

This comes after a global wave of investments in NVIDIA AI factories, as businesses and nations accelerate AI-driven economic growth across all sectors and regions:

India

By launching the Shakti Cloud Platform in collaboration with NVIDIA, Yotta Data Services is helping to democratise access to cutting-edge GPU resources. Yotta offers a seamless environment for AI development and deployment by combining open-source tools with NVIDIA AI Enterprise software.

Japan

Prominent cloud service providers, such as GMO Internet, Highreso, KDDI, Rutilea, and SAKURA Internet, are constructing AI infrastructure driven by NVIDIA to revolutionise sectors like telecom, healthcare, automotive, and robotics.

Norway

With an emphasis on sustainability and workforce upskilling, Telenor has opened an AI factory powered by NVIDIA to accelerate the adoption of AI throughout the Nordic region.

These projects highlight a global reality: NVIDIA AI factories are rapidly emerging as vital national infrastructure, comparable to energy and telecommunications.

Inside an AI Factory: Where Intelligence Is Manufactured

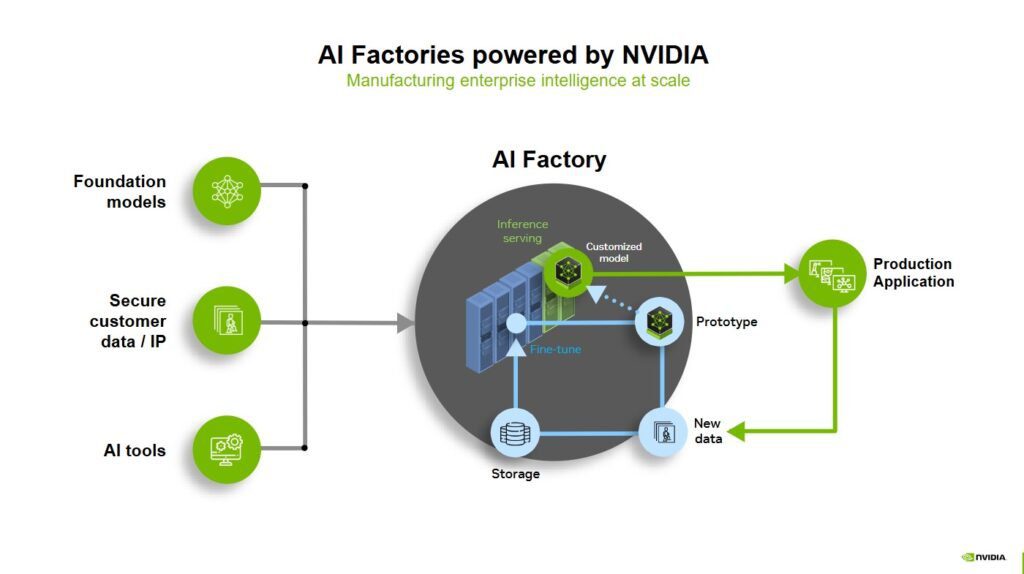

Foundation models, secure customer data, and AI tools provide the raw materials for NVIDIA AI factories, where inference serving, prototyping, and fine-tuning shape powerful, customised models ready for production.

These models continuously learn from fresh data as they are deployed in real-world applications. This data is then stored, refined, and fed back into the system through a data flywheel. This optimisation cycle ensures that AI stays adaptable and efficient and keeps improving, propelling enterprise intelligence to unprecedented levels.

An AI Factory Advantage With Full-Stack NVIDIA AI

Every component of NVIDIA’s comprehensive, integrated AI factory stack, from the silicon to the software, is tuned for large-scale training, fine-tuning, and inference. With this full-stack strategy, businesses can deploy NVIDIA AI factories that are high-performing, cost-effective, and future-proofed for the exponential growth of AI.

Together with its ecosystem partners, NVIDIA has developed the building blocks for the full-stack AI factory, providing:

- Powerful compute performance

- Advanced networking

- Infrastructure management and workload orchestration

- The largest AI inference ecosystem

- Storage and data platforms

- Blueprints for design and optimization

- Reference architectures

- Flexible deployment for every enterprise

Powerful Compute Performance

Compute power is at the heart of any AI factory. For this new industrial revolution, NVIDIA offers the most powerful accelerated computing available, from NVIDIA Hopper to NVIDIA Blackwell. With the NVIDIA Blackwell Ultra-based GB300 NVL72 rack-scale system, NVIDIA AI factories can achieve up to 50X the output for AI reasoning, establishing a new benchmark for efficiency and scale.

By combining the best of NVIDIA accelerated computing, the NVIDIA DGX SuperPOD is the exemplar of a turnkey AI factory for businesses. NVIDIA DGX Cloud provides an AI factory that delivers high-performance NVIDIA accelerated computing in the cloud.

Global systems partners are building full-stack NVIDIA AI factories for their customers on NVIDIA accelerated computing, which now includes the NVIDIA GB200 NVL72 and GB300 NVL72 rack-scale solutions.

Advanced Networking

Moving intelligence at scale requires seamless, high-performance connectivity across the entire AI factory stack. NVIDIA NVLink and NVLink Switch enable high-speed, multi-GPU communication, which speeds up data transfer both within and between nodes.

AI factories also need a strong network backbone. By removing bottlenecks, the NVIDIA Quantum InfiniBand, NVIDIA Spectrum-X Ethernet, and NVIDIA BlueField networking platforms ensure efficient, high-throughput data transfer across enormous GPU clusters. This end-to-end integration is crucial for scaling AI workloads to million-GPU levels and achieving breakthrough performance in both training and inference.

Infrastructure Management and Workload Orchestration

Businesses need a way to harness the power of AI infrastructure with the agility, efficiency, and scale of a hyperscaler, but without burdening IT with the associated cost, complexity, and expertise requirements.

With NVIDIA Run:ai, organisations get seamless AI workload orchestration and GPU management, allowing them to optimise resource utilisation, accelerate AI experimentation, and scale workloads. NVIDIA Mission Control software, which incorporates NVIDIA Run:ai technology, simplifies AI factory operations from workloads to infrastructure and provides full-stack intelligence that delivers world-class infrastructure resilience.

The Largest AI Inference Ecosystem

To transform data into intelligence, AI factories need the right tools. The NVIDIA AI inference platform offers the most extensive portfolio of AI acceleration libraries and optimised software in the industry. It spans the NVIDIA TensorRT ecosystem, NVIDIA Dynamo, and NVIDIA NIM microservices, all of which are part of, or will soon be part of, the NVIDIA AI Enterprise software platform. Together they deliver optimal inference performance with high throughput and ultra-low latency.

Storage and Data Platforms

AI systems are powered by data, yet due to its continuously expanding quantity and complexity, enterprise data is frequently too expensive and time-consuming to use efficiently. Businesses must fully utilise their data if they want to prosper in the AI era.

The NVIDIA AI Data Platform is a customisable reference architecture for building a new class of AI infrastructure for demanding AI inference workloads. NVIDIA is working with its NVIDIA-Certified Storage partners to develop tailored AI data platforms that can use enterprise data to reason and respond to complex queries.

Blueprints for Design and Optimization

Teams can use the NVIDIA Omniverse Blueprint for AI factory design and operations to design and optimise NVIDIA AI factories. Using digital twins, the blueprint allows engineers to design, test, and optimise AI factory infrastructure prior to deployment. By lowering risk and uncertainty, the blueprint helps prevent expensive downtime, which is crucial for operators of AI factories.

For a one-gigawatt AI factory, every day of downtime can cost more than $100 million. The blueprint speeds deployment and ensures operational resilience by resolving complexity up front and allowing siloed teams in network, mechanical, electrical, power, and IT engineering to work in parallel.
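To put the downtime figure in perspective, the short sketch below converts a daily cost into the cost of shorter outages. It treats the $100 million per day quoted above as a lower bound and assumes, purely for illustration, that the cost accrues evenly over a 24-hour day; the function name `downtime_cost` is hypothetical.

```python
# Lower-bound figure quoted above for a one-gigawatt AI factory.
DAILY_COST_USD = 100_000_000


def downtime_cost(minutes: float, daily_cost: float = DAILY_COST_USD) -> float:
    """Return the cost of an outage, assuming cost accrues evenly over a day."""
    minutes_per_day = 24 * 60
    return daily_cost * minutes / minutes_per_day


if __name__ == "__main__":
    for label, minutes in [("1 minute", 1), ("1 hour", 60), ("1 day", 24 * 60)]:
        print(f"{label:>8}: ${downtime_cost(minutes):,.0f}")
```

Under that even-accrual assumption, an hour of downtime comes to roughly $4.2 million and a single minute to roughly $69,000.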

Reference Architectures

For partners designing and deploying NVIDIA AI factories, NVIDIA Enterprise Reference Architectures and NVIDIA Cloud Partner Reference Architectures offer a road map. Using the NVIDIA AI software stack and partner ecosystem, they help businesses and cloud providers build scalable, high-performance, and secure AI infrastructure on NVIDIA-Certified Systems.

To satisfy the increasing demands of AI, every layer of the AI factory stack depends on efficient computing. The foundation of the stack is NVIDIA accelerated computing, which offers the best performance per watt so that AI factories run as energy-efficiently as possible. Businesses can scale AI while controlling energy costs by using liquid cooling and energy-efficient architecture.

Flexible Deployment for Every Enterprise

NVIDIA’s full-stack technologies make it simple for businesses to build and deploy AI factories that match their operational requirements and preferred IT consumption models.

Some businesses choose on-premises NVIDIA AI factories to retain complete control over data and performance, while others choose cloud-based solutions for scalability and flexibility. Many also look to their trusted global systems partners for pre-integrated solutions that speed up deployment.

On Premises

NVIDIA DGX SuperPOD is a turnkey AI factory infrastructure solution that offers accelerated infrastructure with scalable performance for the most demanding AI training and inference workloads. With its design-optimised combination of AI compute, network fabric, storage, and NVIDIA Mission Control software, businesses can launch NVIDIA AI factories in weeks rather than months. It also offers best-in-class uptime, resilience, and utilisation.

AI factory solutions are also available through the NVIDIA global ecosystem of enterprise technology partners with NVIDIA-Certified Systems. These partners provide cutting-edge hardware and software, data center systems expertise, and liquid-cooling innovations to help businesses reduce the risk of their AI initiatives and boost the ROI of their AI factory deployments.

To help customers deploy AI factories effectively and produce intelligence at scale, these global systems partners offer full-stack solutions built on NVIDIA reference architectures and integrated with NVIDIA accelerated computing, high-performance networking, and AI software.

In the Cloud

For businesses that want a cloud-based solution for their AI factory, NVIDIA DGX Cloud offers a unified platform on leading clouds for building, customising, and deploying AI applications. With enterprise-grade software and large, contiguous clusters on leading cloud providers, DGX Cloud offers the best of NVIDIA AI in the cloud across every layer. Its scalable compute resources suit even the most demanding AI training workloads.

DGX Cloud also includes a dynamic and scalable serverless inference platform that delivers high throughput for AI tokens across hybrid and multi-cloud environments while drastically lowering operational overhead and infrastructure complexity.

By offering a full-stack platform that combines hardware, software, ecosystem partners, and reference architectures, NVIDIA is helping businesses build high-performing, scalable, and cost-effective AI factories that will prepare them for the next industrial revolution.