Micron 6500 ION SSD

Micron recently released MLPerf Storage v0.5 results for the Micron 9400 NVMe SSD. Those results show how well the high-performance Micron 9400 NVMe SSD serves as a local cache in an AI server. Most AI training data, however, lives on shared storage rather than in a local cache. For SC23, we ran the same MLPerf Storage AI workload on a WEKA storage cluster built with 30TB Micron 6500 ION SSDs.

We wanted to see how the MLPerf Storage AI workload scales on a high-performance software-defined storage (SDS) solution. WEKA is a distributed, parallel filesystem designed for AI workloads. The results are insightful: they point to the need for massive throughput in future AI storage systems and help us make sizing recommendations for current-generation AI systems.

Let’s quickly review MLPerf Storage first

To help drive the development of future state-of-the-art models, MLCommons creates and maintains six distinct benchmark suites along with accessible datasets. MLPerf Storage is the newest addition to the MLCommons benchmark collection.

MLPerf Storage aims to address several challenges in characterizing the storage workload of AI training systems, including the limited size of available datasets and the high cost of AI accelerators.

See these earlier blog entries for a detailed analysis of the benchmark and the workload produced by MLPerf Storage:

- Micron 9400 NVMe SSD: the best PCIe Gen4 SSD for AI storage

- MLPerf Storage on the Micron 9400 NVMe SSD: storage for AI training

Let’s now discuss the test WEKA cluster

Earlier this year, our colleague Sujit wrote a post outlining the cluster’s performance on synthetic workloads.

Six storage nodes comprise the cluster, and the configuration of each node is as follows:

- Supermicro AS-1115CS-TNR

- Single-socket AMD EPYC 9554P processor

- 64 cores, 3.1 GHz base, 3.75 GHz boost

- 384 GB Micron DDR5 DRAM

- 10x 30TB Micron 6500 ION SSDs

- 400 GbE networking

The cluster provides 838TB of total capacity and reaches 200 GB/s on high-queue-depth workloads.
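To put the 200 GB/s figure in perspective, here is a rough back-of-envelope sketch in Python. It assumes, beyond what the post states, that load spreads evenly across all nodes and SSDs and that each node's "400 GbE networking" is a single 400 Gb/s link with protocol overhead ignored; the helper names are hypothetical.

```python
# Back-of-envelope breakdown of the cluster's 200 GB/s high-queue-depth figure.
# Assumptions (not from the source): even load across nodes and SSDs, and one
# 400 Gb/s link per node with protocol overhead ignored.

NODES = 6
SSDS_PER_NODE = 10
CLUSTER_GBPS = 200.0        # GB/s, as reported
NIC_GBITS_PER_NODE = 400.0  # Gb/s per node, assumed single link


def per_node_gbps(cluster_gbps: float, nodes: int) -> float:
    """Average GB/s each storage node must deliver."""
    return cluster_gbps / nodes


def per_ssd_gbps(cluster_gbps: float, nodes: int, ssds_per_node: int) -> float:
    """Average GB/s each SSD must deliver."""
    return cluster_gbps / (nodes * ssds_per_node)


def network_ceiling_gbps(nodes: int, nic_gbits: float) -> float:
    """Aggregate network line rate in GB/s (bits divided by 8)."""
    return nodes * nic_gbits / 8


if __name__ == "__main__":
    print(f"per node: {per_node_gbps(CLUSTER_GBPS, NODES):.1f} GB/s")  # 33.3
    print(f"per SSD:  {per_ssd_gbps(CLUSTER_GBPS, NODES, SSDS_PER_NODE):.2f} GB/s")  # 3.33
    print(f"network ceiling: {network_ceiling_gbps(NODES, NIC_GBITS_PER_NODE):.0f} GB/s")  # 300
```

Under these assumptions, 200 GB/s sits below the roughly 300 GB/s aggregate line rate, so each node averages about 33 GB/s and each SSD about 3.3 GB/s.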

Let’s now take a closer look at this cluster’s MLPerf Storage performance

A brief note: these results are unverified because they have not been submitted to MLPerf Storage for review. The MLPerf Storage benchmark is also changing between v0.5 and the next version, planned as its first 2024 release. The numbers shown here follow the same methodology as the v0.5 release: the accelerators within a client share a barrier, and each client uses its own independent dataset.

Version 0.5 of the MLPerf Storage benchmark emulates NVIDIA V100 accelerators. The NVIDIA DGX-2 server contains 16 V100 accelerators. For this testing, we report the number of clients the WEKA cluster supports, with each client emulating 16 V100 accelerators, like an NVIDIA DGX-2.

MLPerf Storage v0.5 also implements two distinct models, Unet3D and BERT. Our testing shows that BERT does not generate a substantial amount of storage traffic, so the testing here focuses on Unet3D, a model for 3D medical imaging.


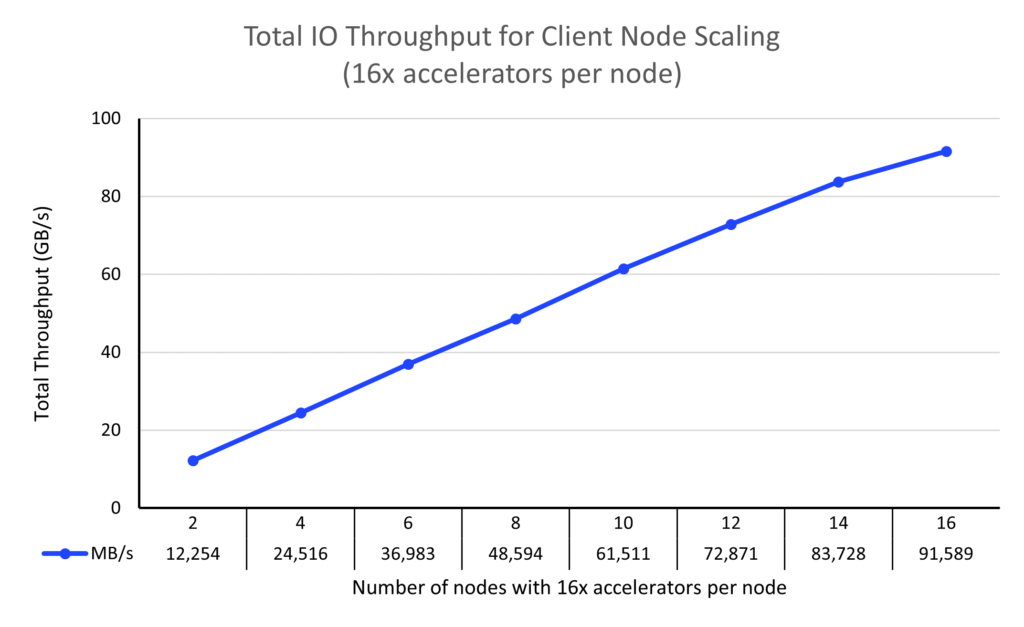

This graph shows the total throughput to the storage system for a given number of client nodes. Recall that each node has 16 emulated accelerators. For a given number of nodes and accelerators to count as a “success,” the accelerators must sustain an accelerator utilization (AU) greater than 90%. An AU below 90% means the accelerators spend too much time idle, waiting for data.
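The success rule can be expressed as a small Python sketch. It assumes accelerator utilization (AU) is the fraction of wall-clock time an emulated accelerator spends computing rather than waiting for data; the `Run` type and helper functions are hypothetical illustrations, not part of the MLPerf Storage tooling.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

# Pass criterion described in the text: AU must stay above 90%.
AU_THRESHOLD = 0.90


@dataclass
class Run:
    """One benchmark run: a client count and its measured accelerator utilization."""
    clients: int
    accelerators_per_client: int
    au: float  # accelerator utilization as a fraction, 0.0 to 1.0


def au_from_times(compute_s: float, total_s: float) -> float:
    """Assumed AU definition: share of wall time spent computing, not waiting on I/O."""
    return compute_s / total_s


def passes(run: Run, threshold: float = AU_THRESHOLD) -> bool:
    """A run counts as a 'success' only if AU is strictly greater than the threshold."""
    return run.au > threshold


def max_passing_clients(runs: Iterable[Run]) -> Optional[Run]:
    """Return the passing run with the most clients, or None if nothing passes."""
    passing = [r for r in runs if passes(r)]
    return max(passing, key=lambda r: r.clients, default=None)
```

Feeding the results of a client-count sweep into `max_passing_clients` picks out the largest configuration that still counts as a success; the strict `>` comparison mirrors the "greater than 90%" wording.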

The six-node WEKA storage cluster supports 16 clients, each emulating 16 accelerators, for a total of 256 emulated accelerators at a throughput of 91 GB/s.
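Dividing the reported 91 GB/s across clients and accelerators gives a feel for the per-device demand. This Python sketch uses only the figures in the text, assumes the load is spread evenly, and uses decimal units (1 GB = 1000 MB); the function names are hypothetical.

```python
# Figures from the text; helper names are hypothetical.
CLIENTS = 16
ACCELERATORS_PER_CLIENT = 16  # each client emulates a DGX-2's 16 V100s
TOTAL_GBPS = 91.0             # aggregate throughput at the largest passing point


def emulated_accelerators(clients: int, per_client: int) -> int:
    """Total emulated accelerators across all clients."""
    return clients * per_client


def per_client_gbps(total_gbps: float, clients: int) -> float:
    """Average GB/s each client node pulls from storage, assuming an even split."""
    return total_gbps / clients


def per_accelerator_mbps(total_gbps: float, accelerators: int) -> float:
    """Average MB/s per emulated accelerator (decimal units: 1 GB = 1000 MB)."""
    return total_gbps * 1000 / accelerators


if __name__ == "__main__":
    accels = emulated_accelerators(CLIENTS, ACCELERATORS_PER_CLIENT)
    print(accels)  # 256
    print(f"{per_client_gbps(TOTAL_GBPS, CLIENTS):.2f} GB/s per client")  # 5.69
    print(f"{per_accelerator_mbps(TOTAL_GBPS, accels):.0f} MB/s per accelerator")  # 355
```

Roughly 355 MB/s per emulated V100 sounds modest, but multiplied across 256 accelerators it adds up to the 91 GB/s the cluster must sustain.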

With 16 V100 GPUs per system, this is equivalent to 16 NVIDIA DGX-2 systems, an impressively large number of AI systems for a six-node WEKA cluster to support.

The V100 is a PCIe Gen3 GPU, and NVIDIA GPU generations are advancing far faster than PCIe and platform generations. In a single-node system, we found that an emulated NVIDIA A100 GPU runs this workload four times faster than an emulated V100.

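Because each emulated A100 consumes data about four times faster than a V100, the 256 emulated V100s correspond to roughly 64 A100 GPUs. We can therefore estimate that this WEKA deployment would support eight DGX A100 systems (eight A100 GPUs each) at the same maximum throughput of 91 GB/s.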
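The V100-to-A100 conversion can be checked with a short Python sketch. It carries over the post's implicit assumption that per-GPU data demand scales linearly with the 4x single-node speedup; the constants come from the text and the helper names are hypothetical.

```python
import math

# Figures from the text; helper names are hypothetical.
V100_EQUIVALENTS = 256  # emulated V100s the cluster fed at 91 GB/s
A100_SPEEDUP = 4.0      # single-node observation: emulated A100 runs the workload 4x faster
GPUS_PER_DGX_A100 = 8
TOTAL_GBPS = 91.0


def a100_equivalents(v100s: int, speedup: float) -> float:
    """A100s that would draw the same aggregate bandwidth as `v100s` V100s.

    Assumes per-GPU data demand scales linearly with the speedup.
    """
    return v100s / speedup


def dgx_a100_systems(a100s: float, gpus_per_system: int) -> int:
    """Whole DGX A100 systems supportable; partial systems are rounded down."""
    return math.floor(a100s / gpus_per_system)


if __name__ == "__main__":
    a100s = a100_equivalents(V100_EQUIVALENTS, A100_SPEEDUP)
    print(a100s)                                       # 64.0
    print(dgx_a100_systems(a100s, GPUS_PER_DGX_A100))  # 8
    print(f"{TOTAL_GBPS / a100s:.2f} GB/s per A100")   # 1.42
```

Rounding down keeps the estimate conservative: a fraction of a DGX A100 is not a deployable unit, so any remainder bandwidth is treated as headroom.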

Future AI training servers, such as those built on the H100/H200 (PCIe Gen5) and X100 (PCIe Gen6), are expected to push even higher throughput.

Today, the Micron 6500 ION SSD and WEKA storage offer an ideal balance of scalability, performance, and capacity for your AI workloads.
