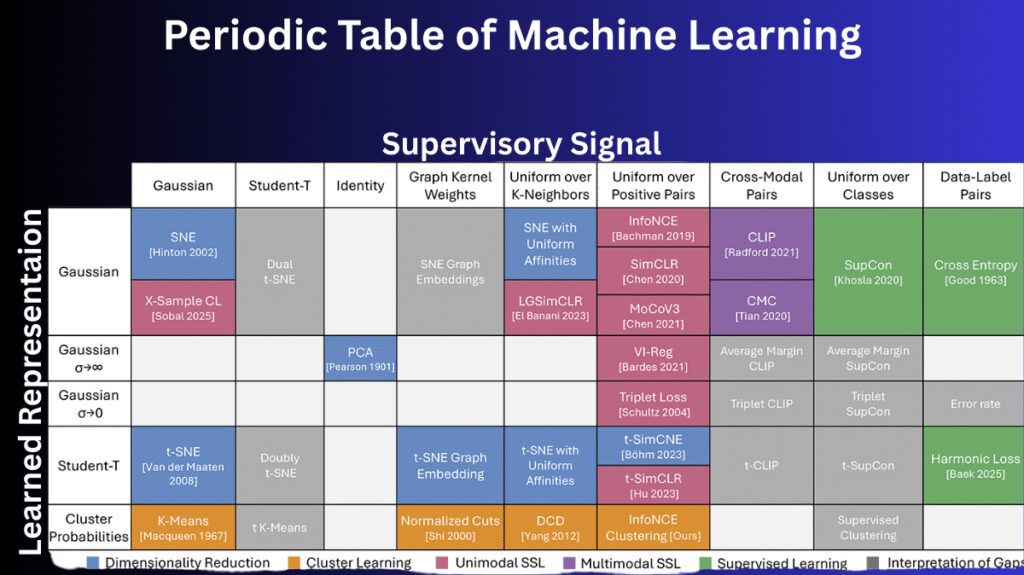

The “machine learning periodic table” is built on a framework called Information Contrastive Learning (I-Con), developed by researchers from MIT, Microsoft, and Google, which aims to unify the varied landscape of machine learning techniques. It works like the periodic table in chemistry, but instead of organizing elements, it organizes machine learning algorithms around a single principle: learning relationships between data points.

I-Con provides a single unifying framework that shows how seemingly different machine learning approaches, including classification, regression, large language modeling, clustering, dimensionality reduction, and spectral graph theory, can all be viewed as variations of one shared mathematical idea.

Importance of the machine learning periodic table

Machine learning moves quickly, with new algorithms and techniques appearing regularly. That pace makes it hard to see the connections between different approaches and to understand the fundamental principles at play. The machine learning periodic table offers several benefits:

- Unification: It provides a combined viewpoint, showing how many popular machine learning techniques are related through a common mathematical framework. The commonalities across algorithms may be better understood, which is useful for both researchers and practitioners.

- Clarification: Just as the chemical periodic table clarifies the relationships between elements, the I-Con framework clarifies the relationships between existing machine learning methods. It helps to organize the vast array of algorithms into a structured and understandable format.

- Discovery: The most thrilling feature is its potential to fuel new discoveries. The chemical periodic table had gaps that predicted the existence of undiscovered elements. Similarly, the Periodic Table of Machine Learning has “empty spaces” that suggest potential new algorithms that have not yet been developed.

- Innovation: I-Con makes it easier for researchers to experiment, redefine how data points are considered “neighbors,” adjust the level of certainty in connections, and combine strategies from different existing algorithms to create entirely new methods. It inspires creative thinking and the combination of approaches that would not have been associated with one another in the past.

- Efficiency: This framework can help researchers develop new machine learning methods without having to “reinvent past ideas”. By understanding the principles and the existing algorithms within the table, they can more strategically explore potential new techniques.

How was the periodic table of machine learning developed?

The periodic table wasn’t something that was planned; it was an unexpected result of research. Shaden Alshammari, a researcher at MIT in the Freeman Lab, was studying clustering, a way to group similar data points together. She noticed links between clustering and contrastive learning, a type of machine learning that learns by comparing positive pairs of data points (which belong together) with negative pairs (which do not).

By investigating the mathematics underlying both approaches, Alshammari found that they could be described using the same fundamental equation. This turning point led to the creation of the Information Contrastive Learning (I-Con) framework, which shows that different machine learning algorithms all try to approximate the real-world connections between data points while minimizing the error of that approximation.

The researchers then structured these results into a periodic table. The table classifies algorithms depending on two fundamental factors:

- How points are related in real datasets: This refers to the underlying relationships in the data, such as visual resemblance, shared class labels, or cluster membership. These “connections” can carry different degrees of confidence and need not be absolute.

- The principal methods by which algorithms can approximate those connections: This refers to how different algorithms learn these connections and represent them internally.

By categorising many established machine learning approaches inside this framework according to these two criteria, the researchers discovered that numerous prominent algorithms fit neatly into specific “squares”. They also found some “gaps”: squares where, according to the framework, an algorithm should logically exist but has not yet been developed.
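The two-axis idea can be sketched as a small lookup grid. This is only an illustration under loud assumptions: the row and column labels, the four placements, and the helper `find_gaps` are hypothetical simplifications, not the layout of the researchers' actual table.

```python
# Hypothetical, heavily simplified grid. Rows are ways points are connected in
# the data; columns are ways an algorithm approximates those connections.
# The labels and placements are illustrative, not the published table.
CONNECTIONS = ["spatial proximity", "class label", "same generating process"]
APPROXIMATIONS = ["cluster membership", "low-dimensional proximity", "embedding similarity"]

table = {
    ("spatial proximity", "cluster membership"): "K-Means",
    ("spatial proximity", "low-dimensional proximity"): "PCA",
    ("class label", "embedding similarity"): "Cross-entropy classification",
    ("same generating process", "embedding similarity"): "Contrastive learning",
}


def find_gaps(connections, approximations, filled):
    """Return every (connection, approximation) cell with no known algorithm."""
    return [
        (c, a)
        for c in connections
        for a in approximations
        if (c, a) not in filled
    ]


gaps = find_gaps(CONNECTIONS, APPROXIMATIONS, table)
for connection, approximation in gaps:
    print(f"gap: {connection} + {approximation}")
```

Each empty cell pairs a kind of connection with a kind of approximation that no listed algorithm covers, which is the sense in which a gap suggests a candidate method.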

How to fill gaps

One illustration of the strength of this approach is how the researchers used it to create a new state-of-the-art algorithm for classifying images without any human-labeled data. They “filled a gap” in their periodic table by combining the notion of connection used in debiased contrastive representation learning with the approximate connections used in clustering. Compared to earlier techniques, the resulting method increased image classification accuracy on the ImageNet-1K dataset by 8%. They also showed that a data debiasing technique designed for contrastive learning can improve clustering accuracy.

Information Contrastive Learning (I-Con)

I-Con changes how we think about machine learning, treating it as a way to get a handle on the relationships in data. Imagine a lively party where guests, or data points, mingle and connect over common interests or hometowns, finding their way to different tables, which represent clusters. Think of different machine learning algorithms as various ways these guests figure out how to find their friends and get settled in.

The I-Con framework is all about creating a simpler version of how data points connect in the real world, making it easier to work with in the algorithm. The idea of “connection” is pretty flexible and can mean different things, like looking similar, having common labels, or being part of the same group. The main point here is that every algorithm aims to reduce the gap between the connections it learns to mimic and the real connections found in the training data.
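As a rough illustration of "reducing the gap" between learned and real connections, the sketch below measures that gap as the average KL divergence between a target neighbor distribution and a learned one. Treat it as one reading of the idea rather than the paper's exact formulation: the function names, the softmax-over-distances form of the learned distribution, and the toy data are all assumptions made for this example.

```python
import math


def learned_neighbors(embeddings, i, temperature=1.0):
    """Learned distribution q(j | i): closer embeddings get higher probability."""
    scores = []
    for j, point in enumerate(embeddings):
        if j == i:
            scores.append(float("-inf"))  # a point is not its own neighbor
        else:
            sq_dist = sum((a - b) ** 2 for a, b in zip(embeddings[i], point))
            scores.append(-sq_dist / temperature)
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as lists."""
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)


def average_kl_loss(targets, embeddings):
    """Mean gap between target connections p(. | i) and learned ones q(. | i)."""
    n = len(embeddings)
    return sum(
        kl_divergence(targets[i], learned_neighbors(embeddings, i)) for i in range(n)
    ) / n


# Toy "real" connections: points 0 and 1 belong together, as do points 2 and 3.
targets = [
    [0.0, 1.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
    [0.0, 0.0, 1.0, 0.0],
]
good = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0)]  # keeps pairs together
bad = [(0.0, 0.0), (5.0, 5.0), (0.1, 0.0), (5.1, 5.0)]   # splits the pairs

good_loss = average_kl_loss(targets, good)
bad_loss = average_kl_loss(targets, bad)
print(good_loss < bad_loss)
```

The embedding that keeps the true neighbors close together gets the lower loss, which is the behaviour the paragraph above describes: an algorithm improves as its learned connections come to mimic the real ones.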

The Periodic Table as a Resource for Research

Beyond its role as an organizational tool, the machine learning periodic table, which is built on the I-Con framework, also gives researchers a toolkit for developing new algorithms. Once many machine learning techniques are described in the shared conceptual language that I-Con offers, it becomes much simpler to experiment with variations:

- Redefining neighbourhoods: exploring different ways of deciding which data points count as “neighbours” or belong together as a group.

- Adjusting uncertainty: building in varying degrees of confidence in the relationships that have been learnt.

- Combining strategies: bringing together methods from different existing algorithms in new ways.

Each of these variations can correspond to a new entry in the periodic table. The table is also flexible enough to accommodate new rows and columns, which can represent a wider variety of connections between data points.
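The three kinds of variation can be sketched as small functions that each produce a target neighbor distribution for one data point. This is a toy illustration, not the researchers' code: the helper names, the uniform-blend form of "adjusting uncertainty", and the weighted average used for "combining strategies" are all assumptions chosen to keep the example short.

```python
def knn_neighbors(points, i, k):
    """Neighbourhood definition 1: uniform over the k nearest other points."""
    others = sorted(
        (j for j in range(len(points)) if j != i),
        key=lambda j: sum((a - b) ** 2 for a, b in zip(points[i], points[j])),
    )
    nearest = set(others[:k])
    return [1 / len(nearest) if j in nearest else 0.0 for j in range(len(points))]


def label_neighbors(labels, i):
    """Neighbourhood definition 2: uniform over other points with the same label."""
    same = {j for j, lab in enumerate(labels) if j != i and lab == labels[i]}
    if not same:  # no labelled partner: fall back to all other points
        same = {j for j in range(len(labels)) if j != i}
    return [1 / len(same) if j in same else 0.0 for j in range(len(labels))]


def soften(p, i, alpha):
    """Adjust uncertainty: blend p with a uniform distribution over other points."""
    uniform = 1 / (len(p) - 1)
    return [
        0.0 if j == i else (1 - alpha) * pj + alpha * uniform
        for j, pj in enumerate(p)
    ]


def combine(p1, p2, weight=0.5):
    """Combine strategies: weighted average of two neighbour distributions."""
    return [weight * a + (1 - weight) * b for a, b in zip(p1, p2)]


points = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0)]
labels = ["a", "b", "a", "b"]

by_distance = knn_neighbors(points, 0, k=1)   # point 0's nearest point is 1
by_label = label_neighbors(labels, 0)         # point 0 shares a label with 2
softened = soften(by_distance, 0, alpha=0.3)  # less certain about that link
mixed = combine(by_distance, by_label)        # half distance, half label
```

Swapping any of these targets into a training objective changes which connections the algorithm tries to reproduce, which is how a small change of this kind could map to a different square in the table.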

Looking Forward to the Future

With artificial intelligence making strides and its uses growing, frameworks like I-Con play an important role in helping us make sense of everything happening in the field. They assist researchers in uncovering the hidden patterns that lie beneath the surface and offer the tools needed for more intentional innovation. For anyone not deep in the AI scene, it’s a good reminder that even in really complicated areas, there are still basic patterns and structures out there just waiting to be found.

So, the main idea here is to sort algorithms according to how they capture and approximate the connections between data points. Putting together a detailed table that covers all the algorithms along with their specific connection and approximation methods would need a lot more information than is available here. A simple table to show the idea might look like this:

| Algorithm Category | Real Data Connection Example | Approximation Method Example | Place in the Table (Conceptual) | Potential New Algorithms? |
| --- | --- | --- | --- | --- |
| Clustering (e.g., K-Means) | Physical proximity of data points | Sharing a common cluster | One Section | Yes |
| Dimensionality Reduction (e.g., PCA) | High-dimensional physical proximity | Low-dimensional physical proximity | Another Section | Yes |
| Classification (e.g., Cross Entropy) | Association with a particular class | Learning to predict class based on features | A Different Section | Yes |
| Contrastive Learning | Did two data points arise from the same process? | Learning embeddings that bring similar points closer | Another Section | Yes |