IBM Granite 3.0: open, cutting-edge business AI models

IBM Granite 3.0, the third generation of the Granite series of large language models (LLMs) and related technologies, is being released by IBM. The new IBM Granite 3.0 models maximize safety, speed, and cost-efficiency for enterprise use cases while delivering state-of-the-art performance in relation to model size, reflecting its focus on striking a balance between power and usefulness.

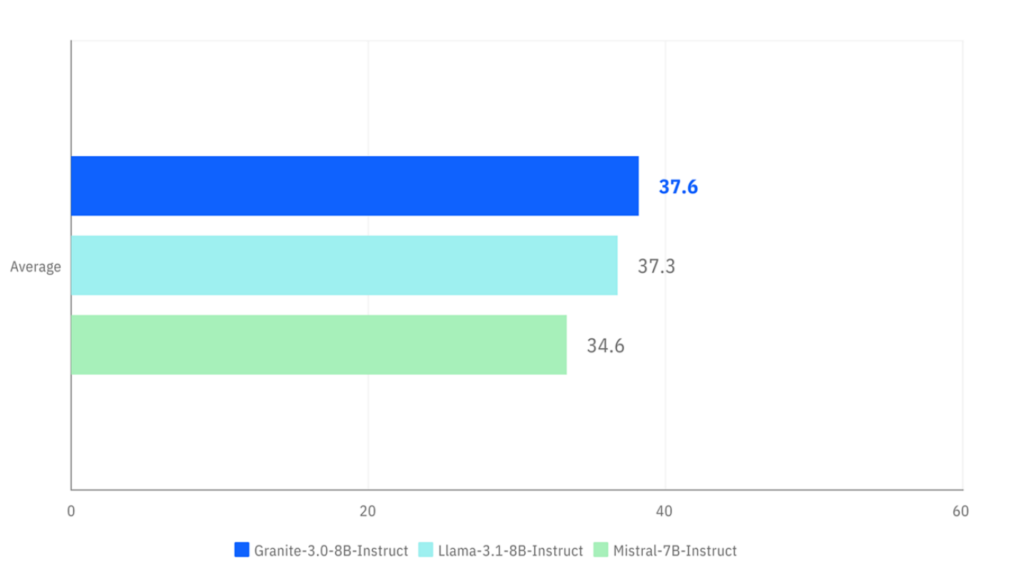

Granite 3.0 8B Instruct, a new, instruction-tuned, dense decoder-only LLM, is the centerpiece of the Granite 3.0 collection. Granite 3.0 8B Instruct is a developer-friendly enterprise model designed to be the main building block for complex workflows and tool-based use cases. It was trained using a novel two-phase method on over 12 trillion tokens of carefully vetted data across 12 natural languages and 116 programming languages. Granite 3.0 8B Instruct outperforms rivals on enterprise tasks and safety metrics while matching top-ranked, similarly-sized open models on academic benchmarks.

Businesses may get frontier model performance at a fraction of the expense by fine-tuning smaller, more functional models like Granite. Using Instruct Lab, a collaborative, open-source method for enhancing model knowledge and skills with methodically created synthetic data and phased-training protocols, to customize Granite models to your organization’s specific requirements can further cut costs and timeframes.

Contrary to the recent trend of closed or open-weight models published by peculiar proprietary licensing agreements, all Granite models are released under the permissive Apache 2.0 license, in keeping with IBM’s strong historical commitment to open source. IBM is reinforcing its commitment to fostering transparency, safety, and confidence in AI products by disclosing training data sets and procedures in detail in the Granite 3.0 technical paper, which is another departure from industry trends for open models.

The complete IBM Granite 3.0 release includes:

General Purpose/Language: Granite 3.0 8B Instruct, Granite 3.0 2B Instruct, Granite 3.0 8B Base, Granite 3.0 2B Base

Guardrails & Safety: Granite Guardian 3.0 8B, Granite Guardian 3.0 2B

Mixture-of-Experts: Granite 3.0 3B-A800M Instruct, Granite 3.0 1B-A400M Instruct, Granite 3.0 3B-A800M Base, Granite 3.0 1B-A400M Base

Speculative decoder for faster and more effective inference: Granite-3.0-8B-Accelerator-Instruct

The enlargement of all model context windows to 128K tokens, more enhancements to multilingual support for 12 natural languages, and the addition of multimodal image-in, text-out capabilities are among the upcoming developments scheduled for the rest of 2024.

On the IBM Watsonx platform, Granite 3.0 8B Instruct and Granite 3.0 2B Instruct, along with both Guardian 3.0 safety models, are now commercially available. Additionally, Granite 3.0 models are offered by platform partners like as Hugging Face, NVIDIA (as NIM microservices), Replicate, Ollama, and Google Vertex AI (via Hugging Face’s connections with Google Cloud’s Vertex AI Model Garden).

IBM Granite 3.0 language models are trained on Blue Vela, which is fueled entirely by renewable energy, further demonstrating IBM’s dedication to sustainability.

Strong performance, security, and safety

Prioritizing specific use cases, earlier Granite model generations performed exceptionally well in domain-specific activities across a wide range of industries, including academia, legal, finance, and code. Apart from providing even more effectiveness in those areas, IBM Granite 3.0 models perform on par with, and sometimes better than, the industry-leading open-weight LLMs in terms of overall performance across academic and business benchmarks.

Regarding academic standards featured in Granite 3.0 8B, Hugging Face’s OpenLLM Leaderboard v2, Teach competitors with comparable-sized Meta and Mistral AI models. The code for IBM’s model evaluation methodology is available on the Granite GitHub repository and in the technical paper that goes with it.

It’s also easy to see that IBM is working to improve Granite 3.0 8B Instruct for enterprise use scenarios. For example, Granite 3.0 8B Instruct was in charge of the RAGBench evaluations, which included 100,000 retrieval augmented generation (RAG) assignments taken from user manuals and other industry corpora. Models were compared across the 11 RAGBench datasets, assessing attributes such as correctness (the degree to which the model’s output matches the factual content and semantic meaning of the ground truth for a given input) and faithfulness (the degree to which an output is supported by the retrieved documents).

Additionally, the Granite 3.0 models were trained to perform exceptionally well in important enterprise domains including cybersecurity: Granite 3.0 8B Instruct performs exceptionally well on both well-known public security benchmarks and IBM’s private cybersecurity benchmarks.

Developers can use the new Granite 3.0 8B Instruct model for programming language use cases like code generation, code explanation, and code editing, as well as for agentic use cases that call for tool calling. Classic natural language use cases include text generation, classification, summarization, entity extraction, and customer service chatbots. Granite 3.0 8B Instruct defeated top open models in its weight class when tested against six distinct tool calling benchmarks, including Berkeley’s Function Calling Leaderboard evaluation set.

Developers may quickly test the new Granite 3.0 8B Instruct model on the IBM Granite Playground, as well as browse the improved Granite recipes and how-to documentation on Github.

Transparency, safety, trust, and creative training methods

Responsible AI, according to IBM, is a competitive advantage, particularly in the business world. The development of the Granite series of generative AI models adheres to IBM’s values of openness and trust.

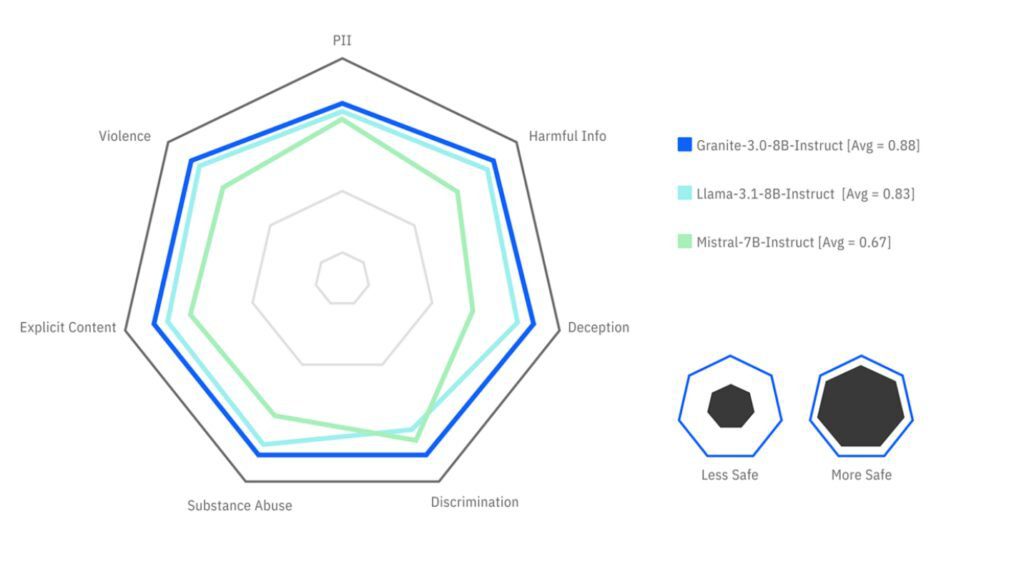

As a result, model safety is given equal weight with IBM Granite 3.0’s superior performance. On the AttaQ benchmark, which gauges an LLM’s susceptibility to adversarial prompts intended to induce models to provide harmful, inappropriate, or otherwise unwanted prompts, Granite 3.0 8B Instruct exhibits industry-leading resilience.

The team used IBM’s Data Prep Kit, a framework and toolkit for creating data processing pipelines for end-to-end processing of unstructured data, to train the Granite 3.0 language models. In particular, the Data Prep Kit was utilized to scale data processing modules from a single laptop to a sizable cluster, offering checkpoint functionality for failure recovery, lineage tracking, and metadata logging.

Granite Guardian: the best safety guardrails in the business

Along with introducing a new family of LLM-based guardrail models, the third version of IBM Granite offers the broadest range of risk and harm detection features currently on the market. Any LLM, whether proprietary or open, can have its inputs and outputs monitored and managed using Granite Guardian 3.0 8B and Granite Guardian 3.0 2B.

In order to assess and categorize model inputs and outputs into different risk and harm dimensions, such as jailbreaking, bias, violence, profanity, sexual content, and unethical behavior, the new Granite Guardian models are variations of their correspondingly sized base pre-trained Granite models.

A variety of RAG-specific issues are also addressed by the Granite Guardian 3.0 models.

Efficiency and speed: a combination of speculative decoding and experts (MoE) models

A speculative decoder for rapid inference and mixture of experts (MoE) models are two more inference-efficient products included in the Granite 3.0 version.

The initial MoE models from IBM Granite

Granite 3.0 3B-A800M and Granite 3.0 1B-A400M, offer excellent inference efficiency with little performance compromise. The new Granite MoE models, which have been trained on more than 10 trillion tokens of data, are perfect for use in CPU servers, on-device apps, and scenarios that call for incredibly low latency.

Both their active parameter counts the 3B MoE employ 800M parameters at inference, while the smaller 1B uses 400M parameters at inference and their overall parameter counts 3B and 1B, respectively are mentioned in their model titles. There are 40 expert networks in Granite 3.0 3B-A800M and 32 expert networks in Granite 3.0 1B-A400M. Top-8 routing is used in both models.

Both base pre-trained and instruction-tuned versions of the Granite 3.0 MoE models are available. You may now obtain Granite 3.0 3B-A800M Instructions from Hugging Face, Ollama, and NVIDIA. Hugging Face and Ollama provide the smaller Granite 3.0 1B-A400M. Only Hugging Face now offers the base pretrained Granite MoE models.

Speculative decoding for Granite 3.0 8B

An optimization method called “speculative decoding” helps LLMs produce text more quickly while consuming the same amount of computing power, enabling more users to use a model simultaneously. The recently published Granite-3.0-8B-Instruct-Accelerator model uses speculative decoding to speed up tokens per step by 220%.

LLMs generate one token at a time after processing each of the previous tokens they have generated so far in normal inferencing. LLMs also assess a number of potential tokens that could follow the one they are about to generate in speculative decoding; if these “speculated” tokens are confirmed to be correct enough, a single pass can yield two or more tokens for the computational “price” of one. The method was initially presented in two 2023 articles from Google and DeepMind, which used a small, independent “draft model” to perform the speculative job. An open source technique called Medusa, which only adds a layer to the base model, was released earlier this year by a group of university researchers.

Conditioning the hypothesized tokens on one another was the main advance made to the Medusa approach by IBM Research. The model will speculatively forecast what follows “I am” instead of continuing to predict what follows “happy,” for instance, if “happy” is the first token that is guessed following “I am.” Additionally, they presented a two-phase training approach that trains the base model and speculation simultaneously by utilizing a type of information distillation. Granite Code 20B’s latency was halved and its throughput quadrupled because to this IBM innovation.

Hugging Face offers the Granite 3.0 8B Instruct-Accelerator model, which is licensed under the Apache 2.0 framework.