Hyperdisk ML can accelerate the loading of AI/ML data. This tutorial explains how to use it to speed up loading AI/ML model weights on Google Kubernetes Engine (GKE). GKE clusters access Hyperdisk ML storage mainly through the Compute Engine Persistent Disk CSI driver.

What is Hyperdisk ML?

Hyperdisk ML is a high-performance storage solution that you can use to scale up your applications. Because it provides high aggregate throughput to many virtual machines concurrently, it is well suited to AI/ML workloads that need access to large amounts of data.

Overview

When enabled in read-only-many mode, Hyperdisk ML can speed up model weight loading by up to 11.9X compared with loading directly from a model registry. This acceleration comes from the Google Cloud Hyperdisk architecture, which scales to 2,500 concurrent nodes at 1.2 TB/s. As a result, you can improve load times and reduce Pod over-provisioning for your AI/ML inference workloads.
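
The throughput figures above support a quick back-of-envelope estimate. The Python sketch below assumes the 1.2 TB/s aggregate is shared evenly by all reading nodes and caps each node at a per-VM throughput limit that you must supply yourself (no value is assumed here, because limits depend on the machine type). The 16 GB model size is hypothetical. The results are idealized lower bounds on load time, not measured or guaranteed numbers.

```python
# Back-of-envelope estimates; the three constants are the figures quoted in the text.
AGGREGATE_BYTES_PER_S = 1.2e12  # 1.2 TB/s, decimal units
MAX_NODES = 2500                # concurrent nodes
MAX_SPEEDUP = 11.9              # "up to" speedup vs. loading from a model registry


def per_node_bytes_per_s(nodes: int, per_vm_cap: float) -> float:
    """Even share of the aggregate throughput, never above the VM's own limit."""
    if not 1 <= nodes <= MAX_NODES:
        raise ValueError(f"nodes must be in 1..{MAX_NODES}, got {nodes}")
    return min(AGGREGATE_BYTES_PER_S / nodes, per_vm_cap)


def ideal_load_seconds(model_bytes: float, nodes: int, per_vm_cap: float) -> float:
    """Lower bound on load time: ignores latency, contention, and deserialization."""
    return model_bytes / per_node_bytes_per_s(nodes, per_vm_cap)


def best_case_from_registry(registry_seconds: float) -> float:
    """Load time if the full 'up to 11.9X' speedup were realized."""
    return registry_seconds / MAX_SPEEDUP


if __name__ == "__main__":
    no_cap = float("inf")  # illustration only; real VMs have a per-VM limit
    share = per_node_bytes_per_s(MAX_NODES, no_cap)  # 1.2e12 / 2500 = 4.8e8 bytes/s
    seconds = ideal_load_seconds(16e9, MAX_NODES, no_cap)  # hypothetical 16 GB of weights
    print(f"{share / 1e6:.0f} MB/s per node, {seconds:.1f} s to read 16 GB")
```

At full scale, an even split works out to about 480 MB/s per node; with fewer nodes the per-VM limit, not the aggregate, usually becomes the bound.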

At a high level, creating and using Hyperdisk ML involves the following steps:

Pre-cache or hydrate data in a persistent disk image: Load Hyperdisk ML volumes with serving-ready data from an external data source (for example, Gemma weights fetched from Cloud Storage). The persistent disk used for the disk image must be compatible with Google Cloud Hyperdisk.

Create a Hyperdisk ML volume from an existing Google Cloud Hyperdisk: Create a Kubernetes volume that references the Hyperdisk ML volume loaded with data. Optionally, create multi-zone storage classes so that your data is available in every zone where your Pods will run.

Create a Kubernetes Deployment to consume the Hyperdisk ML volume: Reference the Hyperdisk ML volume from your applications so that they benefit from accelerated data loading.
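
The second and third steps above can be sketched in Python as a generator of Kubernetes manifests, printed as a JSON `List` that `kubectl apply -f` accepts. This is a minimal sketch under stated assumptions, not the official manifests: the disk is assumed to be already hydrated (the first step is not shown) and formatted as ext4, and the project, zone, disk, object names, image, mount path, size, and `read_ahead_kb` value are hypothetical placeholders. The driver name `pd.csi.storage.gke.io`, the `projects/PROJECT/zones/ZONE/disks/DISK` volume handle, and the `read_ahead_kb` mount option follow the Compute Engine Persistent Disk CSI driver conventions; confirm them against the GKE documentation for your version.

```python
import json

CSI_DRIVER = "pd.csi.storage.gke.io"  # Compute Engine Persistent Disk CSI driver


def weights_pv(name, project, zone, disk, size, read_ahead_kb=None):
    """PersistentVolume for an existing, already-hydrated Hyperdisk ML disk."""
    spec = {
        "capacity": {"storage": size},
        "accessModes": ["ReadOnlyMany"],  # many Pods read the same weights
        "persistentVolumeReclaimPolicy": "Retain",  # keep the disk if the PV is deleted
        "storageClassName": "",  # static binding, no dynamic provisioning
        "csi": {
            "driver": CSI_DRIVER,
            "volumeHandle": f"projects/{project}/zones/{zone}/disks/{disk}",
            "fsType": "ext4",  # assumption: the hydrated disk uses ext4
            "readOnly": True,
        },
        # A zonal disk can only attach to nodes in its own zone.
        "nodeAffinity": {"required": {"nodeSelectorTerms": [{"matchExpressions": [
            {"key": "topology.gke.io/zone", "operator": "In", "values": [zone]}]}]}},
    }
    if read_ahead_kb is not None:
        # Tuning readahead requires GKE 1.29.2-gke.1217000 or later.
        spec["mountOptions"] = [f"read_ahead_kb={read_ahead_kb}"]
    return {"apiVersion": "v1", "kind": "PersistentVolume",
            "metadata": {"name": name}, "spec": spec}


def weights_pvc(name, pv_name, size):
    """Claim bound explicitly to the PersistentVolume above via volumeName."""
    return {"apiVersion": "v1", "kind": "PersistentVolumeClaim",
            "metadata": {"name": name},
            "spec": {"accessModes": ["ReadOnlyMany"], "storageClassName": "",
                     "volumeName": pv_name,
                     "resources": {"requests": {"storage": size}}}}


def serving_deployment(name, image, claim_name, replicas, mount_path="/models"):
    """Deployment whose Pods all mount the same claim read-only."""
    labels = {"app": name}
    pod_spec = {
        "containers": [{
            "name": name,
            "image": image,
            "volumeMounts": [{"name": "weights", "mountPath": mount_path,
                              "readOnly": True}],
        }],
        "volumes": [{"name": "weights",
                     "persistentVolumeClaim": {"claimName": claim_name,
                                               "readOnly": True}}],
    }
    return {"apiVersion": "apps/v1", "kind": "Deployment",
            "metadata": {"name": name},
            "spec": {"replicas": replicas,
                     "selector": {"matchLabels": labels},
                     "template": {"metadata": {"labels": labels}, "spec": pod_spec}}}


if __name__ == "__main__":
    # Hypothetical values; replace with your own project, zone, disk, and image.
    pv = weights_pv("weights-pv", "my-project", "us-central1-a", "gemma-weights",
                    "300Gi", read_ahead_kb=4096)
    pvc = weights_pvc("weights-pvc", "weights-pv", "300Gi")
    dep = serving_deployment("model-server", "IMAGE", "weights-pvc", replicas=3)
    print(json.dumps({"apiVersion": "v1", "kind": "List",
                      "items": [pv, pvc, dep]}, indent=2))
```

Binding the claim to a named PersistentVolume with `volumeName` keeps the Pods on the pre-hydrated disk instead of letting a default StorageClass provision an empty one.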

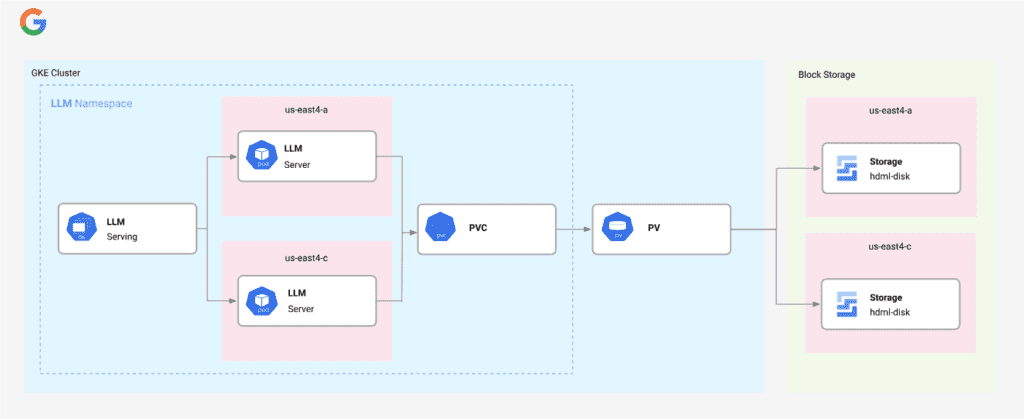

Multi-zone Hyperdisk ML volumes

Hyperdisk ML disks are zonal: each disk is accessible in only one zone. Alternatively, you can use the Hyperdisk ML multi-zone capability to dynamically join multiple zonal disks with identical content under a single logical PersistentVolumeClaim and PersistentVolume. The zonal disks referenced by the multi-zone feature must be in the same region. For example, if your regional cluster is created in us-central1, the multi-zone disks (such as those in us-central1-a and us-central1-b) must be located in that same region.
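
One way to enforce the same-region rule is to check the zone list before generating a multi-zone StorageClass. The Python sketch below assumes standard `<region>-<zone>` zone names (for example `us-central1-a`) and a dynamically provisioned multi-zone class. The `enable-multi-zone-provisioning` parameter and the `volumeBindingMode` choice are assumptions about the GKE multi-zone setup and should be checked against the current GKE documentation; the class and zone names are illustrative.

```python
import json


def region_of(zone: str) -> str:
    """Strip the zone suffix, e.g. 'us-central1-a' -> 'us-central1'."""
    region, sep, _suffix = zone.rpartition("-")
    if not sep or not region:
        raise ValueError(f"not a zone name: {zone!r}")
    return region


def multi_zone_storage_class(name: str, cluster_region: str, zones: list) -> dict:
    """Multi-zone Hyperdisk ML StorageClass; rejects zones outside the cluster's region."""
    if not zones:
        raise ValueError("at least one zone is required")
    outside = [z for z in zones if region_of(z) != cluster_region]
    if outside:
        raise ValueError(f"zones not in {cluster_region}: {outside}")
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "pd.csi.storage.gke.io",
        "parameters": {
            "type": "hyperdisk-ml",
            # Assumed parameter name for multi-zone provisioning; verify for your version.
            "enable-multi-zone-provisioning": "true",
        },
        "allowVolumeExpansion": False,  # multi-zone volumes cannot be resized
        "reclaimPolicy": "Delete",
        "volumeBindingMode": "Immediate",  # assumption: provision in all zones up front
        "allowedTopologies": [{"matchLabelExpressions": [
            {"key": "topology.gke.io/zone", "values": list(zones)}]}],
    }


if __name__ == "__main__":
    sc = multi_zone_storage_class("hyperdisk-ml-multi-zone", "us-central1",
                                  ["us-central1-a", "us-central1-b"])
    print(json.dumps(sc, indent=2))
```

A claim that uses this class would request `ReadOnlyMany` access, because multi-zone volumes are read-only.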

A popular use case for AI/ML inference is running Pods across zones for better accelerator availability and cost efficiency with Spot VMs. Because Hyperdisk ML is zonal, if your inference server runs many Pods across zones, GKE automatically clones the disks across those zones so that your data follows your application.

Multi-zone Hyperdisk ML volumes have the following limitations:

- There is no support for volume resizing or volume snapshots.

- Only read-only mode is available for multi-zone Hyperdisk ML volumes.

- When you use pre-existing disks with a multi-zone Hyperdisk ML volume, GKE does not verify that the disk content is consistent across zones. If any of the disks might contain divergent content, make sure your application accounts for possible inconsistencies between zones.
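
Because GKE leaves cross-zone consistency to you, one option is for each Pod to fingerprint the mounted model directory at startup and compare it with a value recorded when the disk image was hydrated. The Python sketch below is one such application-level check, not a GKE feature; the directory layout and the source of the expected fingerprint are assumptions. It hashes each file with SHA-256 and combines the per-file digests with their relative paths in sorted order, so identical trees yield identical fingerprints.

```python
import hashlib
import os


def file_sha256(path: str) -> bytes:
    """SHA-256 of one file, read in 1 MiB chunks to bound memory use."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.digest()


def tree_fingerprint(root: str) -> str:
    """Digest over every file's relative path and content hash under root."""
    combined = hashlib.sha256()
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # deterministic traversal order
        for name in sorted(filenames):
            path = os.path.join(dirpath, name)
            rel = os.path.relpath(path, root).replace(os.sep, "/")
            combined.update(rel.encode("utf-8") + b"\0")  # NUL cannot occur in a path
            combined.update(file_sha256(path))  # fixed-length 32-byte entry
    return combined.hexdigest()


def check_weights(root: str, expected: str) -> None:
    """Fail fast if this zone's copy of the data differs from the expected content."""
    actual = tree_fingerprint(root)
    if actual != expected:
        raise RuntimeError(f"{root}: fingerprint {actual} does not match {expected}")
```

For example, a Pod could call `check_weights("/models", os.environ["EXPECTED_WEIGHTS_DIGEST"])` before serving; the mount path and environment variable name are hypothetical. Hashing reads every byte, so for very large weight sets a cheaper variant is to compare a small version file written during hydration.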

Requirements

To use Hyperdisk ML volumes in GKE, your clusters must meet the following requirements:

- Use Linux clusters running GKE version 1.30.2-gke.1394000 or later. If you use a release channel, make sure that the channel includes the minimum GKE version required for this driver or later.

- The Compute Engine Persistent Disk CSI driver must be enabled. The driver is enabled by default on new Autopilot and Standard clusters, and it cannot be disabled or edited when you use Autopilot. If you need to enable the Compute Engine Persistent Disk CSI driver on an existing cluster, see Enabling the Compute Engine Persistent Disk CSI Driver on an Existing Cluster.

- To adjust the readahead value, use GKE version 1.29.2-gke.1217000 or later.

- To use the multi-zone dynamic provisioning capability, use GKE version 1.30.2-gke.1394000 or later.

- Hyperdisk ML is supported only on specific node types and in specific zones.
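
The version requirements above can be checked mechanically. The Python sketch below assumes version strings of the form `MAJOR.MINOR.PATCH-gke.BUILD` that order by those four numbers in turn; the feature labels are names invented for this example. It does not check the node-type and zone restriction, which depends on the published Hyperdisk ML availability list.

```python
import re

# Minimum GKE versions quoted in the requirements; the labels are illustrative.
MIN_VERSIONS = {
    "hyperdisk-ml": "1.30.2-gke.1394000",
    "readahead-tuning": "1.29.2-gke.1217000",
    "multi-zone-dynamic-provisioning": "1.30.2-gke.1394000",
}

_GKE_VERSION = re.compile(r"(\d+)\.(\d+)\.(\d+)-gke\.(\d+)")


def parse_gke_version(version: str) -> tuple:
    """'1.30.2-gke.1394000' -> (1, 30, 2, 1394000) for ordered comparison."""
    match = _GKE_VERSION.fullmatch(version.strip())
    if match is None:
        raise ValueError(f"unrecognized GKE version: {version!r}")
    return tuple(int(part) for part in match.groups())


def available_features(cluster_version: str) -> set:
    """Features whose minimum version the cluster meets (tuples compare lexicographically)."""
    current = parse_gke_version(cluster_version)
    return {feature for feature, minimum in MIN_VERSIONS.items()
            if current >= parse_gke_version(minimum)}


if __name__ == "__main__":
    for version in ("1.29.5-gke.1000", "1.30.2-gke.1394000"):
        print(version, sorted(available_features(version)))
```

The cluster version to pass in can be taken from the `currentMasterVersion` field reported by `gcloud container clusters describe`; node pools may lag behind the control plane, so check their versions as well.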

Conclusion

This tutorial explains how to use Hyperdisk ML to speed up AI/ML data loading on Google Kubernetes Engine (GKE). It covers how to pre-cache data in a disk image, create a Hyperdisk ML volume that your GKE workload can read, and create a Deployment that consumes this volume. The article also discusses how to troubleshoot problems such as a low Hyperdisk ML throughput quota and offers advice on tuning readahead values for the best performance.