429 Errors

Avoid leaving your visitors waiting when resources run out: How to deal with 429 errors

429 error meaning

When a client sends too many requests to a server in a specified period of time, an HTTP error known as “Too Many Requests” (Error 429) occurs. This error can occur for many reasons:

Rate-limiting

The server limits client requests per time period.

Security

A DDoS attack or brute-force login attempt was detected by the server. In this instance, the server may block the suspect requestor’s IP.

Bandwidth limits

Server bandwidth is maxed out.

Per-user restrictions

A single user has hit the server’s maximum number of requests per time period.

The error may go away on its own, but you should address its cause to avoid losing traffic and rankings. Flushing your DNS cache forces your computer to fetch the latest DNS information, which can help in the cases where stale DNS records contribute to the problem.

Although large language models (LLMs) offer developers a great deal of capability and scalability, a seamless user experience depends on resource management. Because LLMs require a lot of processing power, it’s critical to anticipate and manage possible resource exhaustion. Otherwise, 429 “resource exhaustion” errors can occur and interfere with users’ ability to interact with your AI application.

Google examines the reasons behind the 429 errors that LLM applications run into and provides three practical techniques for dealing with them. By understanding the underlying causes and implementing the appropriate solutions, you can help ensure a seamless, uninterrupted experience even during periods of high demand.

Backoff!

Retry logic and exponential backoff have been used for many years. LLM applications can also benefit from these fundamental strategies for handling resource exhaustion or API unavailability. Backoff and retry logic in your code is useful when a model’s API is overloaded with calls from generative AI applications or when a system is overloaded with queries. The waiting time grows exponentially with each retry until the overloaded system recovers.

Backoff logic can be implemented in your application code using decorators in Python. For instance, Tenacity is a general-purpose retrying library for Python that makes it easier to add retry behavior to your code. Asynchronous programs and multimodal models with large context windows, like Gemini, are more prone to 429 errors.
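The decorator approach can be sketched with the standard library alone. This is a minimal illustration under stated assumptions, not Tenacity’s API and not the code Google used in its test: `ResourceExhaustedError`, `retry_with_backoff`, and its parameters are hypothetical names, and you would catch whatever exception your own client raises on a 429.

```python
import functools
import random
import time


class ResourceExhaustedError(Exception):
    """Hypothetical stand-in for the exception your client raises on HTTP 429."""


def retry_with_backoff(max_attempts=5, base_delay=1.0, max_delay=60.0, sleep=time.sleep):
    """Retry the wrapped function on ResourceExhaustedError with exponential backoff."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(max_attempts):
                try:
                    return func(*args, **kwargs)
                except ResourceExhaustedError:
                    if attempt == max_attempts - 1:
                        raise  # out of attempts: surface the error to the caller
                    # The delay doubles on each retry (1s, 2s, 4s, ...), capped at max_delay.
                    delay = min(max_delay, base_delay * 2 ** attempt)
                    # A little random jitter keeps many clients from retrying in lockstep.
                    sleep(delay + random.uniform(0, delay * 0.1))
        return wrapper
    return decorator
```

To use it, decorate the function that calls the model, for example `@retry_with_backoff(max_attempts=5)`. The `sleep` parameter exists only so the waiting can be replaced in tests; in production the default `time.sleep` is what you want.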

To show how backoff and retry are essential to the success of your gen AI application, Google tested sending a lot of input to Gemini 1.5 Pro. The test strains the Gemini system by using photos and videos kept in Google Cloud Storage.

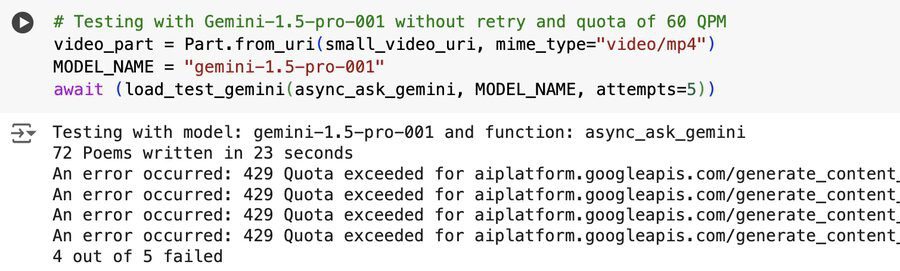

The results without backoff and retry enabled are shown below: four of five attempts failed.

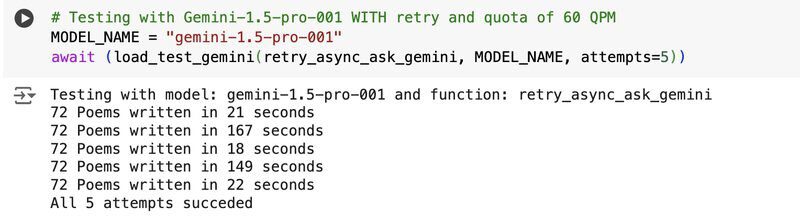

The outcomes with backoff and retry set up are shown below. With backoff and retry, all five attempts succeeded. There is a trade-off, however: even when the API call eventually succeeds, backoff and retry increase the response’s latency. Performance might be improved by changing the model, adding more code, or moving to a different cloud region. Even so, backoff and retry is generally the better choice in times of heavy traffic and congestion.

Additionally, when working with LLMs you will often run into problems with the underlying APIs, including rate limiting and outages. Protecting against these becomes increasingly important as you put your LLM applications into production. For this reason, LangChain introduced the idea of a fallback, a backup plan that can be used in an emergency. One fallback option is to switch to a different model or even to a different LLM provider. To make your LLM applications more resilient, you can incorporate fallbacks into your code in addition to backoff and retry techniques.
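The fallback idea can be illustrated in plain Python. This sketch does not use LangChain’s API; `call_with_fallbacks`, `primary_model`, `backup_model`, and `ResourceExhaustedError` are hypothetical names, and the two model functions are stubs standing in for real client calls.

```python
class ResourceExhaustedError(Exception):
    """Hypothetical stand-in for the exception your client raises on HTTP 429."""


def call_with_fallbacks(prompt, models):
    """Try each callable in `models` in order and return the first successful response."""
    last_error = None
    for model in models:
        try:
            return model(prompt)
        except ResourceExhaustedError as err:
            last_error = err  # remember the failure and move on to the next model
    if last_error is None:
        raise ValueError("models must not be empty")
    raise last_error  # every model failed: surface the most recent error


def primary_model(prompt):
    # Stub that simulates an overloaded primary model.
    raise ResourceExhaustedError("429: primary model is out of capacity")


def backup_model(prompt):
    # Stub that simulates a healthy backup model or provider.
    return f"backup answer to: {prompt}"
```

Calling `call_with_fallbacks("hello", [primary_model, backup_model])` returns the backup model’s response. In practice each entry in the list could itself be wrapped in backoff and retry, so a fallback is only used once retries on the preferred model are exhausted.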

Circuit breaking with Apigee is another strong option for LLM resilience. By placing Apigee between a RAG application and LLM endpoints, you can manage traffic distribution and handle failures gracefully. Every model will behave differently, so it is important to thoroughly test your circuit breaking design and fallbacks to make sure they meet your users’ expectations.
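To make the circuit breaking pattern concrete, here is a small in-process sketch. It is not Apigee configuration, which runs as a gateway in front of the endpoints; the class name, thresholds, and `clock` parameter are assumptions chosen for illustration.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is rejected without being sent."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; request not sent")
            # Timeout elapsed: half-open state, let one trial request through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # A success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

While the circuit is open, callers fail fast instead of adding load to an endpoint that is already returning 429s, which is the point at which a fallback model can take over.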

Dynamic shared quota

For some models, Google Cloud uses dynamic shared quota to control resource allocation in an effort to offer a more adaptable and effective user experience. This is how it operates:

Dynamic shared quota versus traditional quota

Traditional quota: In a traditional quota system, you are given a set number of API requests, for example per day, per minute, or per region. If you need more capacity, you often have to file a request for a quota increase and wait for approval, which can be inconvenient and slow. Capacity is still on-demand rather than dedicated, so a quota allocation alone does not guarantee capacity.

Dynamic shared quota: With dynamic shared quota, Google Cloud offers a pool of available capacity for a service. All of the users submitting requests share this capacity in real time. Rather than having a set individual limit, you draw from this shared pool according to your needs at any given time.
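The difference between the two approaches can be shown with a toy model. This is only an illustration of the idea, not how Google Cloud actually allocates capacity; the function names and the numbers are made up.

```python
def admit_fixed(demands, per_user_limit):
    """Traditional quota: each user is capped at the same fixed limit."""
    return {user: min(demand, per_user_limit) for user, demand in demands.items()}


def admit_shared(demands, pool_capacity):
    """Shared pool: requests are admitted in arrival order until the pool is empty."""
    admitted = {}
    remaining = pool_capacity
    for user, demand in demands.items():
        granted = min(demand, remaining)
        admitted[user] = granted
        remaining -= granted
    return admitted


# Three users; one of them is having a busy minute.
demands = {"a": 90, "b": 5, "c": 5}
fixed = admit_fixed(demands, per_user_limit=40)    # "a" is cut off at 40 requests
shared = admit_shared(demands, pool_capacity=120)  # "a" gets all 90 from the pool
```

With the fixed limit, user "a" has 50 requests rejected even though the other users leave most of their allowance unused. With the shared pool, all 100 requests are admitted. When total demand exceeds the pool, later requests are still rejected, which is why a shared quota does not remove the need for backoff and retry.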

Dynamic shared quota advantages

- Removes quota increase requests: For services that employ dynamic shared quota, quota increase requests are no longer required. The system adapts to your usage habits on its own.

- Increased efficiency: Because the system can distribute capacity where it is most needed at any given time, resources are used more effectively.

- Decreased latency: Google Cloud can reduce latency and respond to your requests more quickly by dynamically allocating resources.

- Management made easier: Since you don’t have to worry about reaching set limits, capacity planning is made easier.

Using a dynamic shared quota

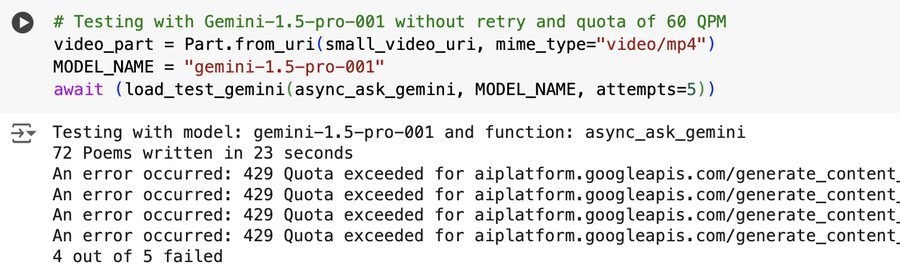

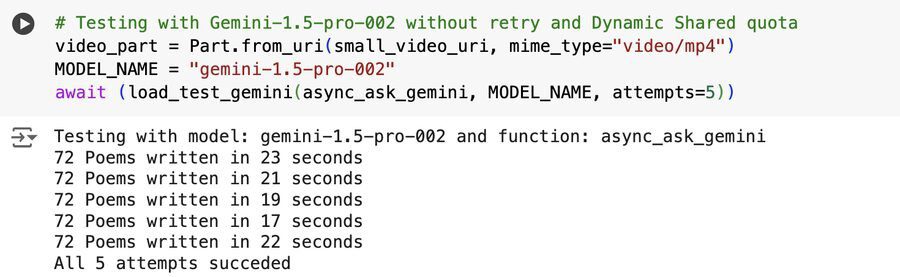

Requests to Gemini with large multimodal input, such as large video files, are more likely to result in 429 resource exhaustion errors. The model performance of Gemini-1.5-pro-001 with a traditional quota and Gemini-1.5-pro-002 with a dynamic shared quota is contrasted below. Thanks to dynamic shared quota, the newer -002 model performs better than the -001 model even without retrying (which is not advised).

Dynamic shared quota should be used together with backoff and retry, particularly as request volume and token size grow. When the -002 model was tested with larger video input, all of its initial attempts ran into 429 errors. The test results below, however, show that all five subsequent attempts succeeded once backoff and retry logic was used. This demonstrates how important this tactic is to the consistent performance of the more recent -002 Gemini model.

Dynamic shared quota represents a move toward a more adaptable and effective method of resource management in Google Cloud. Through dynamic capacity allocation, it seeks to maximize resource utilization while offering users a seamless experience. Dynamic shared quota is not user-configurable. Google has enabled it only on certain models, such as Gemini-1.5-pro-002 and Gemini-1.5-flash-002.

Alternatively, you may occasionally want to set a hard stop that keeps you from making too many API requests to Gemini. In Vertex AI, you might deliberately create a customer-defined quota for a number of reasons, including abuse prevention, financial constraints, or security considerations. The customer quota override capability is useful in this situation and can be a helpful tool for safeguarding your AI systems and apps. Terraform’s google_service_usage_consumer_quota_override resource can be used to manage consumer quota overrides.
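A quota override is enforced on the Google Cloud side. As a purely client-side complement, and a different technique from the override itself, an application can also refuse to send requests once it has used up a self-imposed budget. The sketch below assumes a sliding one-minute window; `RequestBudget` and `QuotaExceededError` are hypothetical names.

```python
import collections
import time


class QuotaExceededError(Exception):
    """Raised locally when the self-imposed request budget is used up."""


class RequestBudget:
    """Allow at most `max_requests` calls per `window_seconds` (sliding window)."""

    def __init__(self, max_requests, window_seconds=60.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock
        self.timestamps = collections.deque()

    def acquire(self):
        now = self.clock()
        # Forget requests that have aged out of the window.
        while self.timestamps and now - self.timestamps[0] >= self.window_seconds:
            self.timestamps.popleft()
        if len(self.timestamps) >= self.max_requests:
            raise QuotaExceededError("self-imposed request budget exhausted")
        self.timestamps.append(now)
```

Calling `budget.acquire()` before each model request turns a runaway loop into an immediate local error rather than a stream of billable calls. Unlike a server-side override, this only protects the process that uses it.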

Provisioned Throughput

With Google Cloud’s Provisioned Throughput feature, you can reserve dedicated capacity for generative AI models on the Vertex AI platform. This means that even during periods of high demand, you can rely on consistent and dependable performance for your AI workloads.

Below is a summary of its features and benefits:

Benefits

- Predictable performance: Your AI apps will run more smoothly because performance fluctuations are eliminated and response times are predictable.

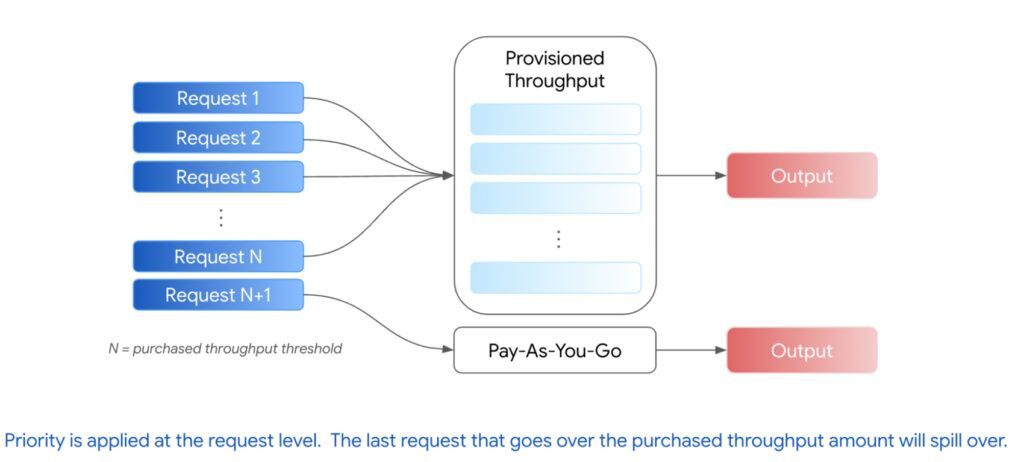

- Reserved capacity: Queuing and resource contention are no longer concerns because you have dedicated capacity for your AI models. When Provisioned Throughput capacity is exceeded, the extra traffic is automatically billed at the pay-as-you-go rate.

- Cost-effective: If you have regular, high-volume AI workloads, it can be less expensive than pay-as-you-go pricing. Use steps one through ten in the order process to determine whether Provisioned Throughput can save you money.

- Scalable: As your demands change, you may simply increase or decrease the capacity you have reserved.

This is especially helpful if your application has a large user base and you need to deliver quick response times. It is particularly suited to applications like chatbots and interactive content creation that need real-time AI processing. Computationally demanding AI operations, such as processing large datasets or producing intricate outputs, can also benefit from Provisioned Throughput.

Stay away from 429 errors

Reliable performance is essential when generative AI is used in production. Consider putting these three tactics into practice to achieve it. They are designed to work together, and it is good practice to build backoff and retry into all of your gen AI applications.