Google Memorystore

Character.AI's mission is to provide an unmatched user experience, and the team believes that application responsiveness is key to maintaining user engagement. Recognising the value of low latency and cost efficiency, they set out to optimise their cache layer, a vital part of the infrastructure behind their service. Their need for efficiency and scalability led them to choose Google Cloud's Memorystore for Redis Cluster.

Initial integration

Thanks to Django's flexible cache settings, Character.AI's first integration of Memorystore as a cache for their Django application was straightforward. They chose Memorystore for Redis (non-cluster) because it was fully compatible with Redis and met their needs across multiple application pods. Look-aside, lazily loaded caching greatly reduced their response times, and Memorystore now frequently returns results within single-digit milliseconds. Combined with a high expected cache hit rate, this approach kept the application performant and responsive as they grew.
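One way to point Django's cache framework at a Memorystore for Redis instance is the built-in Redis backend that ships with Django 4.0 and later. The fragment below assumes that backend and a hypothetical instance address. The source does not say which backend or settings Character.AI actually used.

```python
# settings.py (fragment): a sketch assuming Django 4.0+, whose built-in
# RedisCache backend works with any Redis-compatible endpoint.
# 10.0.0.3:6379 is a hypothetical Memorystore for Redis address.
CACHES = {
    "default": {
        "BACKEND": "django.core.cache.backends.redis.RedisCache",
        "LOCATION": "redis://10.0.0.3:6379",
        # Default time-to-live, in seconds, for entries set without an explicit timeout.
        "TIMEOUT": 300,
    }
}
```

Application code can then use `django.core.cache.cache`. For example, `cache.get_or_set(key, compute_value, timeout=60)` returns the cached value on a hit. On a miss it calls the callable and stores its result. Here `compute_value` is a hypothetical function.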

Look-aside caching: when an application uses look-aside caching, it first checks the cache for the requested data. If the data is not in the cache, the application queries a separate datastore instead. This trade-off pays off because of Memorystore's high expected cache hit rate and its typical single-digit-millisecond response times once the cache is warm.
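The look-aside read path can be sketched in a few lines of Python. In this sketch a plain dictionary stands in for Memorystore, and a caller-supplied function stands in for the backing datastore. Both are illustrative stand-ins, and the class name is hypothetical.

```python
from typing import Callable, Dict, Optional


class LookAsideCache:
    """Hypothetical look-aside (cache-aside) reader with hit/miss accounting."""

    def __init__(self, load_from_datastore: Callable[[str], str]) -> None:
        self._cache: Dict[str, str] = {}  # stand-in for Memorystore
        self._load = load_from_datastore  # stand-in for the primary datastore
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> str:
        value: Optional[str] = self._cache.get(key)
        if value is not None:
            self.hits += 1  # found in the cache: no datastore round trip
            return value
        self.misses += 1  # not cached: fall back to the datastore
        value = self._load(key)
        self._cache[key] = value  # populate so the next read is a hit
        return value

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

With repeated reads of the same keys, only the first read of each key reaches the datastore. This is why the approach depends on a high hit rate.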

Rather than preloading all possible data in advance, lazy loading populates the cache on a cache miss. The application logic assigns each record a configurable time-to-live (TTL), so data expires from the cache after a set period. Once an entry expires, the application lazily loads it into the cache again. As a result, subsequent sessions see fresh data, while active user sessions enjoy high cache hit rates.
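The lazy-loading and TTL behaviour can be sketched as follows, using an in-process dictionary in place of Memorystore. In Redis itself the same effect comes from writing each key with an expiry, for example `SET key value EX 300`, and letting the key expire. The class and its parameters here are hypothetical.

```python
import time
from typing import Callable, Dict, Tuple


class LazyTTLCache:
    """Hypothetical lazy-loading cache whose entries expire `ttl_seconds` after loading.

    `clock` is injectable so expiry can be exercised without sleeping;
    it defaults to time.monotonic.
    """

    def __init__(
        self,
        loader: Callable[[str], str],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._loader = loader
        self._ttl = ttl_seconds
        self._clock = clock
        # key -> (expiry timestamp, cached value)
        self._entries: Dict[str, Tuple[float, str]] = {}

    def get(self, key: str) -> str:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # still fresh: serve from the cache
        # Missing or expired: load lazily and restart the TTL.
        value = self._loader(key)
        self._entries[key] = (now + self._ttl, value)
        return value
```

Nothing is loaded until it is first requested. An expired entry is refreshed only when someone asks for it again.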

Using a proxy to scale

As Character.AI's user base grew, the team ran into the limits of a single Memorystore instance. By using Twemproxy to build a consistent hash ring, they were able to spread their workload across multiple Memorystore instances.
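A consistent hash ring of the kind Twemproxy builds can be sketched as follows. This is a generic ketama-style ring with virtual nodes, written for illustration; it is not Twemproxy's code. The server names and the `vnodes=100` default are hypothetical.

```python
import bisect
import hashlib
from typing import Dict, List


class HashRing:
    """Consistent hash ring with virtual nodes (ketama-style sketch).

    Each server is hashed onto the ring at `vnodes` points. A key belongs to the
    first server point clockwise from the key's own hash. MD5 is used only as a
    well-distributed hash, not for security.
    """

    def __init__(self, servers: List[str], vnodes: int = 100) -> None:
        self._points: List[int] = []
        self._owner: Dict[int, str] = {}
        for server in servers:
            for i in range(vnodes):
                point = self._hash(f"{server}#{i}")
                self._points.append(point)
                self._owner[point] = server
        self._points.sort()

    @staticmethod
    def _hash(value: str) -> int:
        # First 8 bytes of MD5 as an unsigned 64-bit ring position.
        return int.from_bytes(hashlib.md5(value.encode()).digest()[:8], "big")

    def node_for(self, key: str) -> str:
        # First ring point strictly after the key's hash, wrapping around to 0.
        idx = bisect.bisect(self._points, self._hash(key)) % len(self._points)
        return self._owner[self._points[idx]]
```

Removing a server from this ring remaps only the keys that server owned. However, each of those keys now lands on a server that has never cached it, so every one of them misses until it is reloaded.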

This worked at first, but it brought several major problems. Managing, tuning, and monitoring Twemproxy added to the team's operational load, and the proxy caused multiple outages because it could not keep up with their demand. On top of the ongoing need to tune the proxy for performance, the bigger problem was that the hash ring was not self-healing.

If any one of the many target Memorystore instances was overloaded or undergoing maintenance, the ring would break and they would lose cache hit rate, placing excessive load on the database. As the team scaled both the application and Twemproxy pods in their Kubernetes cluster, they also ran into severe TCP resource contention.

Scaling in the application layer

They concluded that, despite its advantages, Twemproxy was not keeping up with them in terms of reliability, so they moved ring hashing directly into the application layer. By this point they had built three services and were scaling up their backend systems. Two Python services shared one implementation, while their Go service had its own, which meant maintaining ring logic across three services. Again, this worked better than their previous approaches, but problems surfaced as they scaled. The per-service ring implementations began hitting the 65K maximum connection limit per instance, and maintaining long lists of hosts became laborious.
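The connection limit is easier to see with some arithmetic. With client-side ring hashing, any key can map to any instance. Every worker process therefore keeps connections open to every instance, and per-instance connections grow with the size of the application fleet, not with the number of instances. The sketch below assumes one connection pool per worker per instance. The pod, worker, and pool numbers are hypothetical.

```python
# Per-instance client connection limit cited in the text (65K).
MAX_CLIENTS_PER_INSTANCE = 65_000


def connections_per_instance(pods: int, workers_per_pod: int, pool_size: int) -> int:
    """Connections each cache instance must accept under client-side ring hashing.

    Assumes every worker holds a pool of `pool_size` connections to every
    instance in the ring. Adding instances spreads the data, but does not
    reduce this number.
    """
    return pods * workers_per_pod * pool_size


def max_pods(workers_per_pod: int, pool_size: int) -> int:
    """Largest pod count that stays within the per-instance connection limit."""
    return MAX_CLIENTS_PER_INSTANCE // (workers_per_pod * pool_size)


if __name__ == "__main__":
    # Hypothetical deployment numbers, chosen only to illustrate the arithmetic.
    print(connections_per_instance(pods=500, workers_per_pod=16, pool_size=10))  # 80000
    print(max_pods(workers_per_pod=16, pool_size=10))  # 406
```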

Moving to Memorystore for Redis Cluster

A major milestone in their scaling journey came in 2023, when Google Cloud released Memorystore for Redis Cluster. Because the service's sharding logic is tightly integrated with the Redis storage layer, it let them simplify their design and eliminate external sharding solutions. Switching to Memorystore for Redis Cluster improved their application's scalability. As they grew, it also kept cache hit rates high and the load on their primary database layer steady.
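Memorystore for Redis Cluster is built on open-source Redis Cluster, which assigns every key to one of 16,384 hash slots. The slot is computed with CRC16 (the XMODEM variant) modulo 16384, as described in the Redis Cluster specification. Each slot is owned by one shard, so this is the sharding logic that replaces an external hash ring. A minimal sketch, including the specification's hash-tag rule:

```python
REDIS_CLUSTER_SLOTS = 16_384


def crc16_xmodem(data: bytes) -> int:
    """CRC-16/XMODEM (polynomial 0x1021, initial value 0), as used by Redis Cluster."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def hash_slot(key: str) -> int:
    """Map a key to its Redis Cluster hash slot, honouring {hash tags}."""
    raw = key.encode("utf-8")
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        # Only a non-empty tag counts; "{}" leaves the whole key hashed.
        if end > start + 1:
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % REDIS_CLUSTER_SLOTS
```

Keys that share a hash tag, such as `{user1000}.following` and `{user1000}.followers`, land in the same slot and therefore on the same shard. Redis Cluster requires this for multi-key commands on those keys.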

Character.AI's experience with Memorystore for Redis Cluster shows their commitment to using state-of-the-art technology to improve user experience. With Memorystore for Redis Cluster, they no longer have to manage proxies or hash rings by hand. Instead, they can rely on a fully managed system that provides scalability with minimal downtime and predictably low latency. Offloading this administrative burden to Google Cloud lets them concentrate on their core competencies and user experience, so they can deliver revolutionary AI experiences to their global user base. Character.AI remains committed to staying at the forefront of the AI market by continually exploring new technologies and approaches that improve the performance and reliability of their application.

Overview of Memorystore for Redis Cluster

Memorystore for Redis Cluster is a fully managed Redis service for Google Cloud.

By using this highly scalable, highly available, and secure Redis service, applications running on Google Cloud can achieve extreme performance without the burden of managing complex Redis deployments.

Key concepts and terminology

Resource hierarchy

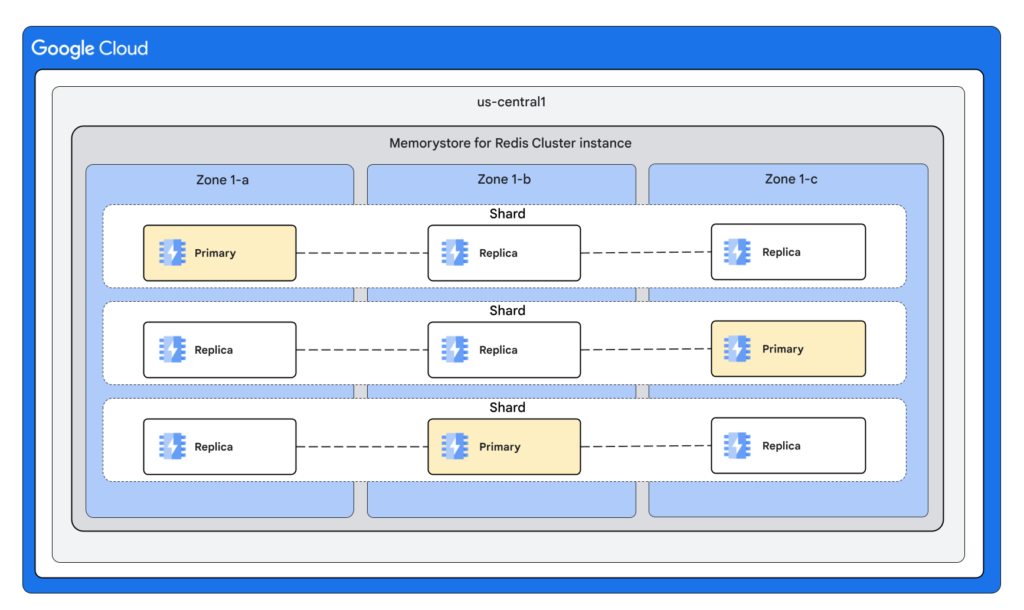

Memorystore for Redis Cluster simplifies administration by organising the resources used in a Redis deployment into a hierarchy. The diagram above illustrates this structure.

A Memorystore for Redis Cluster instance is made up of a collection of shards, each holding a portion of your keyspace. Each shard consists of one primary node and, optionally, one or more replica nodes. When you add replica nodes, Memorystore automatically distributes a shard's nodes across zones to increase throughput and availability.
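In open-source Redis Cluster, on which Memorystore for Redis Cluster is based, the keyspace is divided into 16,384 hash slots, and each shard owns a set of those slots. The sketch below splits the slots into contiguous, near-equal ranges and looks up which shard owns a given slot. The even split is an assumption for illustration, and the boundaries Memorystore actually assigns may differ.

```python
from typing import List, Tuple

TOTAL_SLOTS = 16_384  # number of hash slots in Redis Cluster


def slot_ranges(num_shards: int) -> List[Tuple[int, int]]:
    """Split slots 0..16383 into contiguous, inclusive ranges of near-equal size."""
    if not 1 <= num_shards <= TOTAL_SLOTS:
        raise ValueError("num_shards must be between 1 and 16384")
    base, extra = divmod(TOTAL_SLOTS, num_shards)
    ranges: List[Tuple[int, int]] = []
    start = 0
    for i in range(num_shards):
        size = base + (1 if i < extra else 0)  # the first `extra` shards take one spare slot
        ranges.append((start, start + size - 1))
        start += size
    return ranges


def shard_for_slot(slot: int, ranges: List[Tuple[int, int]]) -> int:
    """Return the index of the shard whose range contains `slot`."""
    for index, (first, last) in enumerate(ranges):
        if first <= slot <= last:
            return index
    raise ValueError(f"slot {slot} is outside 0..{TOTAL_SLOTS - 1}")
```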

Instances

A Memorystore for Redis Cluster instance contains your data. When referring to a single Memorystore for Redis Cluster unit of deployment, the terms instance and cluster are synonymous. You need to provision enough shards for your instance to serve the keyspace of your whole application.
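Provisioning enough shards is essentially a capacity calculation. A rough sketch follows. It assumes you know your dataset size and the usable memory per shard for the node type you pick, and that you want some spare headroom. All three inputs are planning assumptions, not Memorystore defaults, and the function is hypothetical.

```python
import math


def shards_needed(dataset_gb: float, usable_gb_per_shard: float, headroom: float = 0.2) -> int:
    """Estimate how many equally sized shards are needed to hold the keyspace.

    `headroom` is the fraction of each shard's capacity kept free for growth
    and overhead (0.2 means plan to fill shards to at most 80%).
    """
    if dataset_gb <= 0 or usable_gb_per_shard <= 0:
        raise ValueError("sizes must be positive")
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    return math.ceil(dataset_gb / (usable_gb_per_shard * (1 - headroom)))
```

For example, a hypothetical 100 GB dataset on shards with 13 GB usable each, with 20% headroom, needs 10 shards.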

Shards

Your cluster contains multiple shards, all of the same size.

Primary and replica nodes

Each shard has one primary node and zero, one, or two replica nodes. Replicas are distributed evenly across zones and provide increased read throughput and high availability.
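The per-shard layout can be modelled in a few lines. The sketch enforces the 0 to 2 replica rule and counts nodes. It also places each shard's nodes in distinct zones, rotating the starting zone so that primaries are spread as well. This round-robin placement is a hypothetical illustration of "distributed evenly across zones", not Memorystore's actual placement algorithm. The zone names in the usage are assumptions.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Shard:
    primary_zone: str
    replica_zones: List[str] = field(default_factory=list)

    @property
    def node_count(self) -> int:
        return 1 + len(self.replica_zones)  # one primary plus its replicas


def plan_shards(num_shards: int, replicas_per_shard: int, zones: List[str]) -> List[Shard]:
    """Hypothetical round-robin placement keeping each shard's nodes in distinct zones."""
    if replicas_per_shard not in (0, 1, 2):
        raise ValueError("each shard supports zero, one, or two replicas")
    if replicas_per_shard + 1 > len(zones):
        raise ValueError("not enough zones to keep a shard's nodes apart")
    shards = []
    for i in range(num_shards):
        # Rotate the starting zone per shard so primaries are spread too.
        picks = [zones[(i + j) % len(zones)] for j in range(replicas_per_shard + 1)]
        shards.append(Shard(primary_zone=picks[0], replica_zones=picks[1:]))
    return shards
```

A cluster's total node count is the number of shards multiplied by (1 + replicas per shard). For example, three shards with two replicas each is nine nodes.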

Redis version

Memorystore for Redis Cluster is based on open-source Redis version 7.x and supports a subset of the full Redis command library.

Cluster endpoints

Each instance has a discovery endpoint that your client connects to. Your client also uses the discovery endpoint to find the cluster's nodes. For additional details, see Cluster endpoints.
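A cluster-aware client uses the discovery endpoint to fetch the cluster topology, typically with a command such as `CLUSTER SLOTS`. It then routes each command directly to the node that owns the key's slot. The sketch below shows only the routing-table half of that process, parsing a hard-coded `CLUSTER SLOTS`-style reply with made-up addresses. In practice a library such as redis-py's `redis.cluster.RedisCluster` performs discovery and routing for you.

```python
import bisect
from typing import List, Sequence, Tuple

Address = Tuple[str, int]


def build_slot_map(cluster_slots_reply: Sequence[Sequence]) -> List[Tuple[int, int, Address]]:
    """Turn a CLUSTER SLOTS-style reply into sorted (start, end, primary) entries.

    Each reply entry is [start_slot, end_slot, [host, port, ...], replicas...];
    the first node listed is the primary, which is where writes must go.
    """
    slot_map = []
    for entry in cluster_slots_reply:
        start, end, primary = entry[0], entry[1], entry[2]
        slot_map.append((int(start), int(end), (str(primary[0]), int(primary[1]))))
    slot_map.sort()
    return slot_map


def primary_for_slot(slot_map: List[Tuple[int, int, Address]], slot: int) -> Address:
    """Find the primary whose slot range covers `slot`."""
    starts = [start for start, _, _ in slot_map]
    i = bisect.bisect_right(starts, slot) - 1
    if i >= 0 and slot <= slot_map[i][1]:
        return slot_map[i][2]
    raise KeyError(f"slot {slot} is not covered by the topology")


# Hypothetical reply for a three-shard cluster (addresses and IDs are made up).
SAMPLE_REPLY = [
    [0, 5460, ["10.0.0.11", 6379, "id-a"], ["10.0.0.21", 6379, "id-a2"]],
    [5461, 10922, ["10.0.0.12", 6379, "id-b"]],
    [10923, 16383, ["10.0.0.13", 6379, "id-c"]],
]
```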

Networking prerequisites

You must configure networking for your project before you can create a Memorystore for Redis Cluster instance.

Memorystore for Redis Cluster pricing

For pricing details in the available regions, see the Pricing page.