Intel Labs Collaboration with Mila

By working together, Intel Labs and the Bang Liu group at Mila have developed HoneyBee, a cutting-edge large language model (LLM) specialized in materials science that is now available on Hugging Face. HoneyBee builds on ongoing research by Intel and Mila – Quebec AI Institute to develop novel AI tools for materials discovery, with the aim of addressing challenges such as climate change and sustainable semiconductor manufacturing. HoneyBee was recently accepted as a spotlight at the AI for Accelerated Materials Discovery (AI4Mat) Workshop at the Conference on Neural Information Processing Systems (NeurIPS 2023) and as a Findings poster at the Conference on Empirical Methods in Natural Language Processing (EMNLP 2023).

As described in the MatSci-NLP paper and blog post from the partnership between Intel Labs and Mila, materials science is an intricate, multidisciplinary field that seeks to understand how matter interacts in order to efficiently design, create, and evaluate novel materials systems. The abundance of research literature and other textual information makes it possible to develop specialized scientific LLMs that understand both domain-specific content, such as chemical and mathematical formulas, and domain-specific scientific language. To this end, the team created HoneyBee, the first open-source, billion-parameter-scale LLM focused on materials science. HoneyBee has achieved state-of-the-art results on the MatSci-NLP benchmark, which is also open source.

Generate Reliable Training Data with MatSci-Instruct

A specific obstacle to building LLMs for materials science is the scarcity of high-quality annotations for scientific text. The problem is compounded by the fact that much scientific knowledge is expressed in field-specific language whose terms carry precise meanings in context. Because data quality matters so much, scientific LLMs need a reliable process for assembling training and evaluation data.

Although expert annotation is the preferred method, it is impractical at scale. To address the difficulty of producing high-quality textual data, the team introduces MatSci-Instruct, a reliable instruction-data generation procedure that can produce fine-tuning data for LLMs in scientific domains, particularly materials science. MatSci-Instruct builds on two key insights:

Evaluating generated fine-tuning data with multiple independent LLMs reduces bias and adds robustness, increasing the trustworthiness of both the generated data and the final LLM.

Large language models (LLMs) have demonstrated emergent abilities in domains they were not originally trained on, and instruction-based fine-tuning can make them even more proficient in specific domains.
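The first insight, scoring generated data with several independent judges, can be illustrated with a simple consensus filter. This is a minimal sketch under stated assumptions: the three verifier functions are hypothetical keyword heuristics standing in for independent LLM judges, and the mean-score rule and the 0.7 threshold are illustrative choices, not the criteria MatSci-Instruct actually applies.

```python
from statistics import mean
from typing import Callable, Dict, List

# Each verifier maps a text sample to a quality score in [0, 1].
# (Hypothetical: real verifiers would be independent LLMs.)
Verifier = Callable[[str], float]


def filter_by_consensus(
    samples: List[str],
    verifiers: Dict[str, Verifier],
    threshold: float = 0.7,
) -> List[str]:
    """Keep samples whose mean score across all verifiers meets the threshold."""
    kept = []
    for sample in samples:
        scores = [score(sample) for score in verifiers.values()]
        if mean(scores) >= threshold:
            kept.append(sample)
    return kept


# Hypothetical heuristic verifiers, each with a different "bias".
def mentions_material(sample: str) -> float:
    return 1.0 if any(w in sample.lower() for w in ("alloy", "oxide", "polymer")) else 0.0


def long_enough(sample: str) -> float:
    return min(len(sample.split()) / 8, 1.0)


def no_placeholder(sample: str) -> float:
    return 0.0 if "TODO" in sample else 1.0


verifiers = {
    "material_check": mentions_material,
    "length_check": long_enough,
    "placeholder_check": no_placeholder,
}
samples = [
    "Titanium alloy strength increases with controlled oxygen content.",
    "TODO",
    "Zinc oxide is a wide band gap semiconductor used in sensors.",
    "The sample is short.",
]
kept = filter_by_consensus(samples, verifiers)
print(kept)  # keeps the first and third samples
```

Note that `placeholder_check` alone would accept "The sample is short."; requiring agreement across judges is what filters it out, which mirrors the bias-reduction argument above.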

Iterative Improvement of Materials Science Language Models

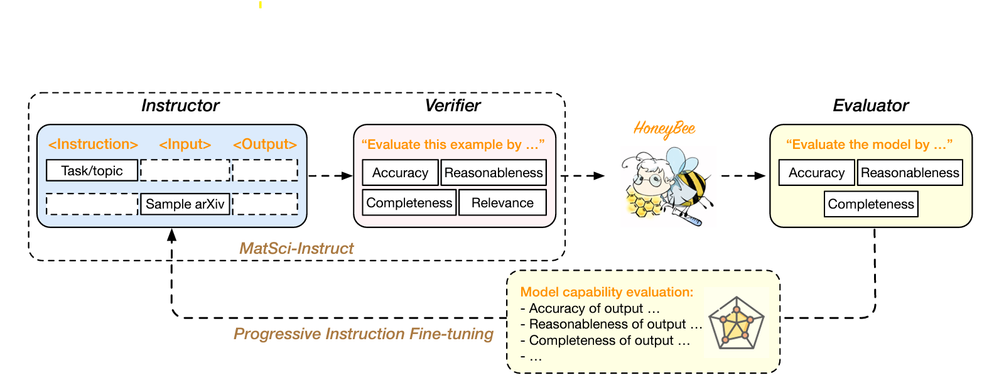

Generation: The Instructor (ChatGPT) generates materials science text data, which forms the basis of the LLM fine-tuning data.

Verification: An independent Verifier LLM (Claude) checks the Instructor's output against predefined criteria and filters out low-quality data.

Fine-tuning and evaluation: HoneyBee language models are fine-tuned on the verified data and then assessed by the Evaluator (GPT-4), another independent LLM.

These three steps are repeated over successive cycles to progressively improve the HoneyBee language models. With each iteration, both the HoneyBee LLMs and the generated materials science text data improve in quality. To train LLMs properly on challenging scientific domains, the data produced by MatSci-Instruct covers a wide range of relevant materials science topics, as illustrated below.
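The generate, verify, fine-tune, and evaluate cycle can be sketched as a simple loop. This is a minimal sketch assuming each role is supplied as a plain Python callable; `Model`, `run_cycles`, and the `toy_*` stubs are hypothetical names, and the stubs perform no real text generation, training, or LLM calls.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Model:
    """Hypothetical stand-in for a checkpoint: a name plus its accumulated training data."""
    name: str
    training_data: Tuple[str, ...] = ()


def run_cycles(
    model: Model,
    generate: Callable[[int], List[str]],            # Instructor: cycle index -> candidates
    verify: Callable[[str], bool],                   # Verifier: keep or drop one sample
    fine_tune: Callable[[Model, List[str]], Model],  # returns an updated model
    evaluate: Callable[[Model], float],              # Evaluator: model -> score
    num_cycles: int = 3,
) -> Tuple[Model, List[float]]:
    """Repeat generate -> verify -> fine-tune -> evaluate, recording one score per cycle."""
    scores: List[float] = []
    for cycle in range(num_cycles):
        candidates = generate(cycle)
        verified = [s for s in candidates if verify(s)]
        model = fine_tune(model, verified)
        scores.append(evaluate(model))
    return model, scores


# Toy stubs so the loop runs offline.
def toy_generate(cycle: int) -> List[str]:
    return [f"cycle{cycle}-sample{i}" for i in range(4)] + ["low-quality"]


def toy_verify(sample: str) -> bool:
    return sample != "low-quality"


def toy_fine_tune(model: Model, data: List[str]) -> Model:
    return Model(model.name, model.training_data + tuple(data))


def toy_evaluate(model: Model) -> float:
    # Toy metric: fraction of a 12-sample target reached, capped at 1.0.
    return min(len(model.training_data) / 12, 1.0)


final_model, scores = run_cycles(
    Model("honeybee-toy"), toy_generate, toy_verify, toy_fine_tune, toy_evaluate
)
print(len(final_model.training_data), scores)
```

Passing each role in as a callable keeps the Instructor, Verifier, and Evaluator swappable, which matches the article's emphasis on using separate, independent LLMs for each role.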

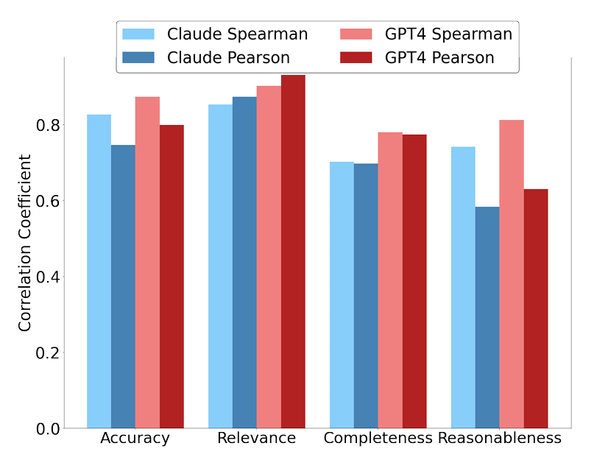

HoneyBee Language Models

Their research describes several studies that shed light on the performance of HoneyBee and the usefulness of MatSci-Instruct. The researchers first examine how the verification outcomes from the Verifier and Evaluator models relate to evaluations by human experts. Figure 3 shows good agreement between the two, with a fairly high correlation between the human-expert and LLM evaluations. This suggests that the LLMs used in the MatSci-Instruct procedure can produce reliable fine-tuning data.
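To make the human-versus-LLM agreement check concrete, the sketch below computes Pearson's correlation coefficient between two lists of ratings for the same samples. The score values are hypothetical, and the choice of Pearson's r is an assumption: the statistic behind Figure 3 may differ.

```python
from math import sqrt
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least two items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / sqrt(var_x * var_y)


# Hypothetical ratings of the same six generated samples.
human_scores = [4, 3, 5, 2, 4, 1]
llm_scores = [4.5, 3.2, 4.8, 2.1, 3.9, 1.5]
print(f"Pearson r = {pearson_r(human_scores, llm_scores):.3f}")
```

On Python 3.10 and later, `statistics.correlation` computes the same coefficient; the explicit version above just makes the formula visible.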

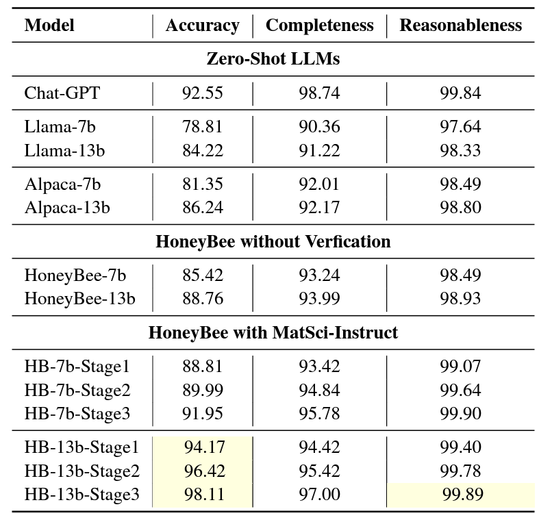

HoneyBee-7b and HoneyBee-13b, whose suffixes denote the number of parameters (7 billion and 13 billion), improve steadily with each iteration of fine-tuning. This provides evidence of the iterative approach's effectiveness.

In certain cases, highlighted in light yellow, HoneyBee-13b outperforms the original Instructor (ChatGPT). Similar behavior has been observed in other studies of instruction-fine-tuned LLMs, which further supports the usefulness of MatSci-Instruct.

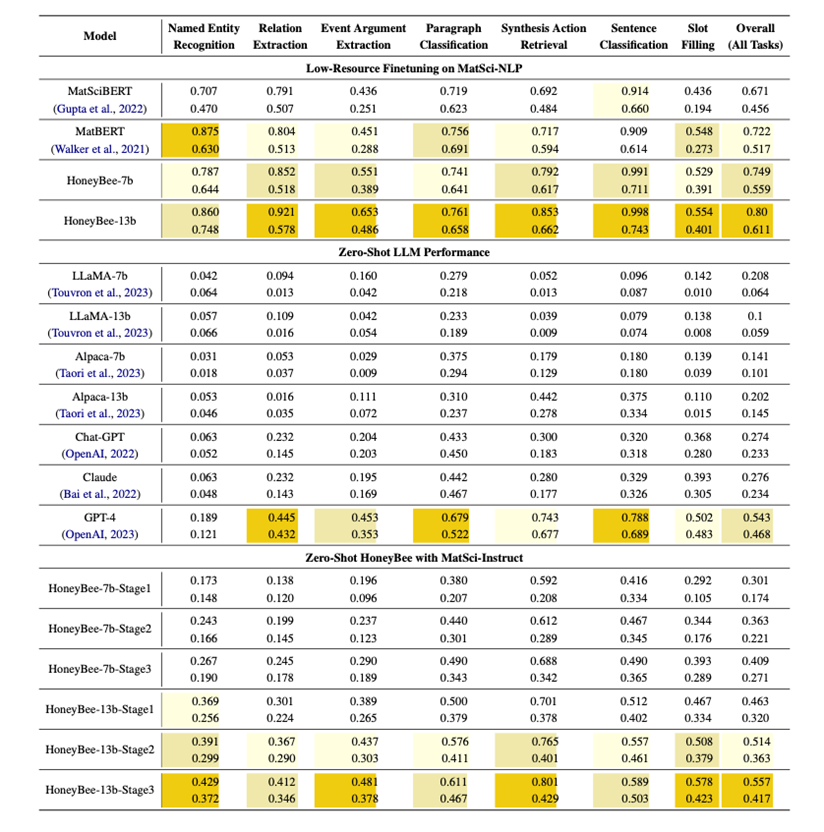

Finally, the researchers evaluate HoneyBee language models on the MatSci-NLP benchmark (refer to Figure 5). Following the same methodology as the MatSci-NLP publication, they find that HoneyBee outperforms all LLMs in the original MatSci-NLP analysis. In the zero-shot setting, where LLMs are evaluated on the benchmark data without further training, HoneyBee outperforms all LLMs except GPT-4, which served as the Evaluator in MatSci-Instruct. Even so, HoneyBee-13b achieves performance competitive with GPT-4 while using up to 10 times fewer parameters than other models. This highlights how specialized HoneyBee is and makes it a cutting-edge language model for materials science.
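In a zero-shot evaluation, a model's predictions on benchmark examples are compared with gold labels without any task-specific training. The sketch below scores single-label classification predictions with micro- and macro-averaged F1, a common choice for this kind of task. It assumes model outputs have already been mapped to label strings, and the labels are hypothetical; the metrics and aggregation MatSci-NLP actually uses should be taken from that publication.

```python
from collections import Counter
from typing import List, Tuple


def f1_scores(gold: List[str], pred: List[str]) -> Tuple[float, float]:
    """Return (micro_f1, macro_f1) for single-label classification."""
    if len(gold) != len(pred) or not gold:
        raise ValueError("gold and pred must be non-empty and the same length")
    labels = sorted(set(gold) | set(pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted label p was wrong
            fn[g] += 1  # gold label g was missed

    def f1(t: int, f_pos: int, f_neg: int) -> float:
        denom = 2 * t + f_pos + f_neg
        return 2 * t / denom if denom else 0.0

    # Micro: pool counts over all labels. Macro: average per-label F1.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    macro = sum(f1(tp[l], fp[l], fn[l]) for l in labels) / len(labels)
    return micro, macro


gold = ["metal", "oxide", "polymer", "oxide"]
pred = ["metal", "oxide", "oxide", "oxide"]
micro, macro = f1_scores(gold, pred)
print(f"micro-F1 = {micro:.2f}, macro-F1 = {macro:.2f}")  # micro-F1 = 0.75, macro-F1 = 0.60
```

Micro-F1 weights every example equally (for single-label tasks it equals accuracy), while macro-F1 weights every label equally, so a missed rare label such as `polymer` here lowers macro-F1 much more than micro-F1.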